Chenhong Zhou

Dual-Balancing for Physics-Informed Neural Networks

May 19, 2025

Abstract: Physics-informed neural networks (PINNs) have emerged as a new learning paradigm for solving partial differential equations (PDEs) by embedding the constraints of physical equations, boundary conditions (BCs), and initial conditions (ICs) in the loss function. Despite their successes, vanilla PINNs still suffer from poor accuracy and slow convergence due to the intractable multi-objective optimization issue. In this paper, we propose a novel Dual-Balanced PINN (DB-PINN), which dynamically adjusts loss weights by integrating inter-balancing and intra-balancing to alleviate two imbalance issues in PINNs. Inter-balancing aims to mitigate the gradient imbalance between the PDE residual loss and the condition-fitting losses by determining an aggregated weight that offsets their gradient distribution discrepancies. Intra-balancing acts on the condition-fitting losses to tackle the imbalance in fitting difficulty across diverse conditions. By evaluating the fitting difficulty based on the loss records, intra-balancing allocates the aggregated weight proportionally to each condition loss according to its fitting difficulty level. We further introduce a robust weight update strategy to prevent abrupt spikes and arithmetic overflow in instantaneous weight values caused by large loss variances, enabling smooth weight updating and stable training. Extensive experiments demonstrate that DB-PINN significantly outperforms popular gradient-based weighting methods in terms of convergence speed and prediction accuracy. Our code and supplementary material are available at https://github.com/chenhong-zhou/DualBalanced-PINNs.
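The two balancing steps described in the abstract can be illustrated with a minimal sketch. The abstract does not give the formulas, so everything below is an assumption made for illustration: the aggregated weight is taken as the ratio of the largest PDE gradient magnitude to the summed mean gradient magnitudes of the condition losses, the fitting difficulty is supplied as a plain positive number per condition, and the smoothing step is a simple running mean. All function names are hypothetical and are not taken from the DualBalanced-PINNs repository.

```python
def aggregated_weight(pde_grads, cond_grads_list):
    """Inter-balancing (assumed form): PDE gradient scale over condition gradient scale."""
    pde_scale = max(abs(g) for g in pde_grads)
    # Sum of the mean absolute gradient of each condition loss.
    cond_scale = sum(sum(abs(g) for g in grads) / len(grads)
                     for grads in cond_grads_list)
    return pde_scale / cond_scale


def allocate(total_weight, difficulties):
    """Intra-balancing (assumed form): split the aggregated weight
    in proportion to each condition's fitting difficulty."""
    total_difficulty = sum(difficulties)
    return [total_weight * d / total_difficulty for d in difficulties]


def smooth_update(previous, instantaneous, step):
    """Running mean over training steps (assumed form), so that a single
    large instantaneous weight cannot cause an abrupt spike."""
    return previous + (instantaneous - previous) / step


# Toy gradients for one PDE loss and two condition losses (BC and IC).
total = aggregated_weight([0.5, -2.0], [[0.1, 0.3], [0.2, 0.2]])
bc_weight, ic_weight = allocate(total, [3.0, 1.0])
smoothed_bc = smooth_update(previous=1.0, instantaneous=bc_weight, step=2)
```

In this toy setup the harder condition (difficulty 3.0) receives three quarters of the aggregated weight, and the running mean moves the stored weight only halfway toward the new value at step 2.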

Long-lead forecasts of wintertime air stagnation index in southern China using oceanic memory effects

May 16, 2023

Abstract: Stagnant weather conditions are one of the major contributors to air pollution, as they favor the formation and accumulation of pollutants. To measure the atmosphere's ability to dilute air pollutants, the Air Stagnation Index (ASI) has been introduced as an important meteorological index. Long-lead ASI forecasts are therefore vital for planning air quality management in advance. In this study, we found that autumn Niño indices derived from sea surface temperature (SST) anomalies show a negative correlation with wintertime ASI in southern China, offering prospects for a prewinter forecast. We developed an LSTM-based model to predict the future wintertime ASI. Results demonstrated that multivariate inputs (past ASI and Niño indices) achieve better forecast performance than univariate input (only past ASI). The model achieves a correlation coefficient of 0.778 between the actual and predicted ASI, exhibiting a high degree of consistency.
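For readers unfamiliar with the evaluation metric, the following sketch shows how a correlation coefficient between actual and predicted values can be computed. It assumes the Pearson coefficient is meant, which the abstract does not state explicitly; the function name and the toy series are hypothetical and are not data from the study.

```python
import math


def pearson_correlation(actual, predicted):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    # Covariance and variances, all without the common 1/n factor.
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)


# Toy series: a perfectly linear relationship and a perfectly inverse one.
r_same = pearson_correlation([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
r_opposite = pearson_correlation([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

A value near 1 means the predictions rise and fall with the observations, while a negative value, as reported between the autumn Niño indices and wintertime ASI, means the two series move in opposite directions.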

KRADA: Known-region-aware Domain Alignment for Open World Semantic Segmentation

Jun 11, 2021

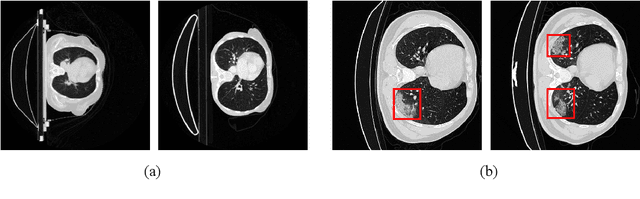

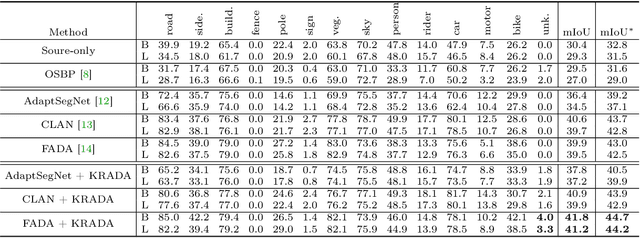

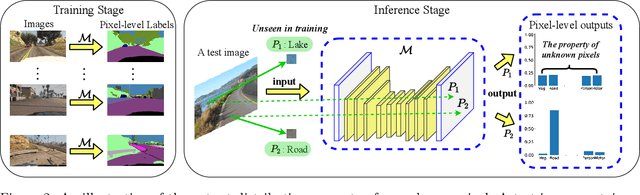

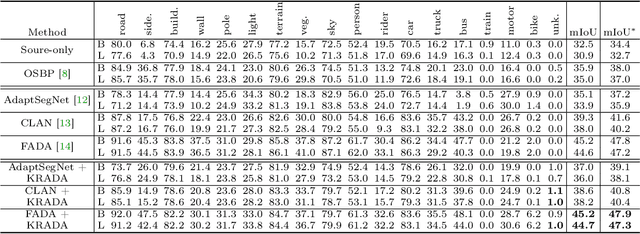

Abstract: In semantic segmentation, we aim to train a pixel-level classifier to assign category labels to all pixels in an image, where labeled training images and unlabeled test images are from the same distribution and share the same label set. However, in an open world, the unlabeled test images may contain unknown categories and have different distributions from the labeled images. Hence, in this paper, we consider a new, more realistic, and more challenging problem setting where the pixel-level classifier has to be trained with labeled images and unlabeled open-world images -- we name it open world semantic segmentation (OSS). In OSS, the trained classifier is expected to identify unknown-class pixels and classify known-class pixels well. To solve OSS, we first investigate which distribution unknown-class pixels obey. Then, motivated by the goodness-of-fit test, we use statistical measurements to show how well a pixel fits the distribution of an unknown class and select highly-fitted pixels to form the unknown region in each image. Finally, we propose an end-to-end learning framework, known-region-aware domain alignment (KRADA), to distinguish unknown classes while aligning distributions of known classes in labeled and unlabeled open-world images. The effectiveness of KRADA has been verified on two synthetic tasks and one COVID-19 segmentation task.

One-pass Multi-task Networks with Cross-task Guided Attention for Brain Tumor Segmentation

Jun 05, 2019

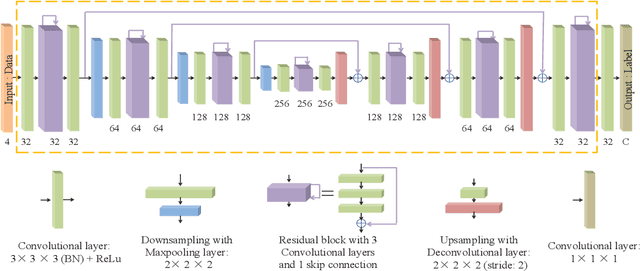

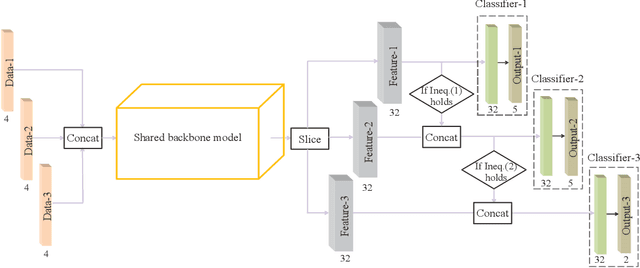

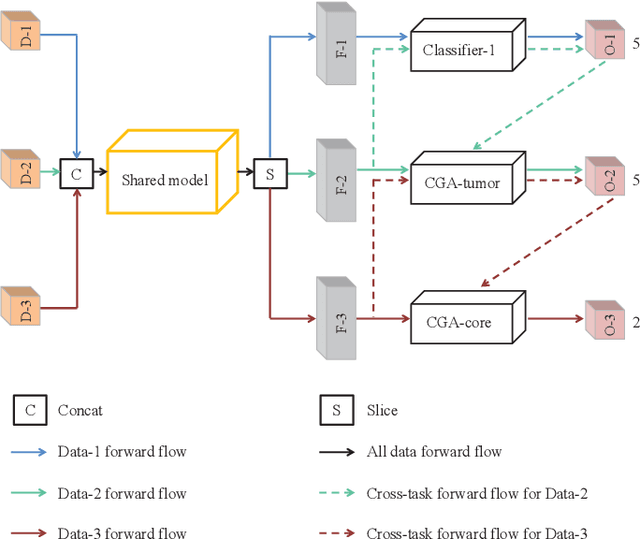

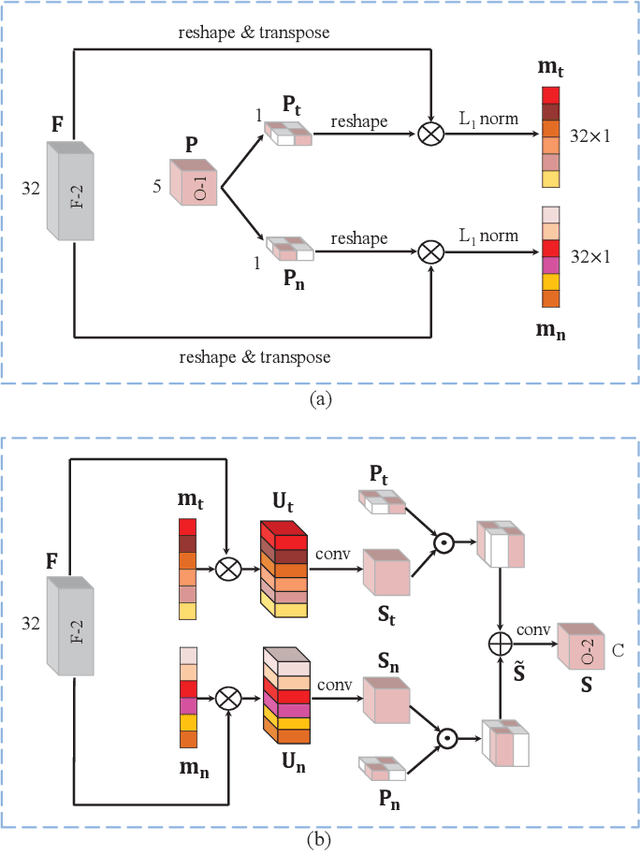

Abstract: Class imbalance has been one of the major challenges for medical image segmentation. The model cascade (MC) strategy significantly alleviates the class imbalance issue. In spite of its outstanding performance, this method leads to undesired system complexity and ignores the correlation among the models. To handle these flaws of MC, we propose in this paper a light-weight deep model, i.e., the One-pass Multi-task Network (OM-Net), which handles class imbalance better than MC and requires only one-pass computation for brain tumor segmentation. First, OM-Net integrates the separate segmentation tasks into one deep model. Second, to optimize OM-Net more effectively, we take advantage of the correlation among tasks to design an online training data transfer strategy and a curriculum learning-based training strategy. Third, we further propose to share prediction results between tasks, which enables us to design a cross-task guided attention (CGA) module. With the guidance of prediction results provided by the previous task, CGA can adaptively recalibrate channel-wise feature responses based on the category-specific statistics. Finally, a simple yet effective post-processing method is introduced to refine the segmentation results of the proposed attention network. Extensive experiments are performed to justify the effectiveness of the proposed techniques. Most impressively, we achieve state-of-the-art performance on the BraTS 2015 and BraTS 2017 datasets. With the proposed approaches, we also won joint third place in the BraTS 2018 challenge among 64 participating teams. We will make the code publicly available at https://github.com/chenhong-zhou/OM-Net.
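The idea of recalibrating channel-wise feature responses from category-specific statistics can be sketched roughly as follows. This is only an illustration under strong assumptions: the previous task's prediction is reduced to a binary mask, the statistic is a masked average per channel, and the gate is a plain sigmoid of that average, whereas a real attention module would typically learn the mapping from statistics to gates. The function names are hypothetical and are not taken from the OM-Net code.

```python
import math


def masked_channel_means(features, mask):
    """Average each channel over the positions the previous task marked as foreground.

    features: list of channels, each a flat list of values.
    mask: flat list of 0/1 predictions from the previous task (assumed binary).
    """
    count = sum(mask)
    if count == 0:
        # No foreground predicted: fall back to a global average per channel.
        return [sum(channel) / len(channel) for channel in features]
    return [sum(v * m for v, m in zip(channel, mask)) / count
            for channel in features]


def recalibrate(features, mask):
    """Scale every channel by a sigmoid gate of its masked average (assumed gate)."""
    stats = masked_channel_means(features, mask)
    gates = [1.0 / (1.0 + math.exp(-s)) for s in stats]
    return [[v * g for v in channel] for channel, g in zip(features, gates)]


# Two channels over three positions; the last two positions are foreground.
features = [[1.0, 3.0, 5.0], [0.0, 0.0, 0.0]]
mask = [0, 1, 1]
recalibrated = recalibrate(features, mask)
```

Here the first channel responds strongly inside the predicted region and is therefore kept almost unchanged, while a channel with a zero masked average is scaled by one half.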
