Changsheng Xu

Understanding and Mitigating Overfitting in Prompt Tuning for Vision-Language Models

Nov 14, 2022

Abstract:Pre-trained Vision-Language Models (VLMs) such as CLIP have shown impressive generalization capability in downstream vision tasks with appropriate text prompts. Instead of designing prompts manually, Context Optimization (CoOp) has recently been proposed to learn continuous prompts using task-specific training data. Despite the performance improvements on downstream tasks, several studies have reported that CoOp suffers from overfitting in two respects: (i) the test accuracy on base classes first improves and then degrades during training; (ii) the test accuracy on novel classes keeps decreasing. However, none of the existing studies can understand and mitigate this overfitting problem effectively. In this paper, we first explore the cause of overfitting by analyzing the gradient flow. Comparative experiments reveal that CoOp favors generalizable features in the early training stage and spurious features in the later stage, leading to the non-overfitting and overfitting phenomena, respectively. Given these observations, we propose Subspace Prompt Tuning (SubPT), which projects the gradients in back-propagation onto the low-rank subspace spanned by the eigenvectors of the early-stage gradient flow throughout the entire training process, and successfully eliminates the overfitting problem. Besides, we equip CoOp with a Novel Feature Learner (NFL) to enhance the generalization ability of the learned prompts to novel categories beyond the training set, without requiring image training data. Extensive experiments on 11 classification datasets demonstrate that SubPT+NFL consistently boosts the performance of CoOp and outperforms the state-of-the-art approach CoCoOp. Experiments on more challenging vision downstream tasks, including open-vocabulary object detection and zero-shot semantic segmentation, also verify the effectiveness of the proposed method. Code can be found at https://tinyurl.com/mpe64f89.
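The gradient projection described in this abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation and rests on assumptions: the early-stage gradient flow is summarized as a matrix of flattened prompt gradients, the subspace basis is taken from the top-`k` eigenvectors of their uncentered second-moment matrix, and the helper names and `k` are hypothetical.

```python
import numpy as np


def early_stage_basis(early_grads: np.ndarray, k: int) -> np.ndarray:
    """Return a (d, k) orthonormal basis for the dominant early-stage gradient directions.

    early_grads: (n_steps, d) array holding one flattened prompt gradient per row,
    collected during the early (non-overfitting) stage of training.
    """
    # Uncentered second-moment matrix of the early gradients, shape (d, d).
    second_moment = early_grads.T @ early_grads / early_grads.shape[0]
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(second_moment)
    # Keep the eigenvectors belonging to the k largest eigenvalues.
    top = np.argsort(eigvals)[::-1][:k]
    return eigvecs[:, top]


def project_gradient(grad: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Project a flattened gradient onto span(basis), i.e. compute U U^T g."""
    return basis @ (basis.T @ grad)


# Toy usage: 50 early-stage gradients for an 8-dimensional prompt.
rng = np.random.default_rng(0)
early = rng.normal(size=(50, 8))
U = early_stage_basis(early, k=2)

# A later gradient is replaced by its projection before the optimizer step.
g = rng.normal(size=8)
g_proj = project_gradient(g, U)
```

Because the basis is fixed after the early stage, every later update is confined to directions the early gradients already explored, which is the intuition the abstract gives for suppressing later spurious features.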

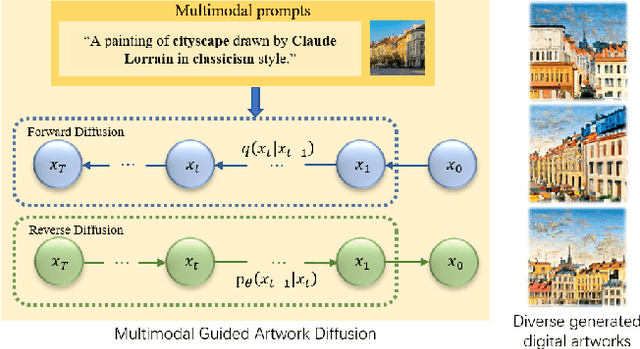

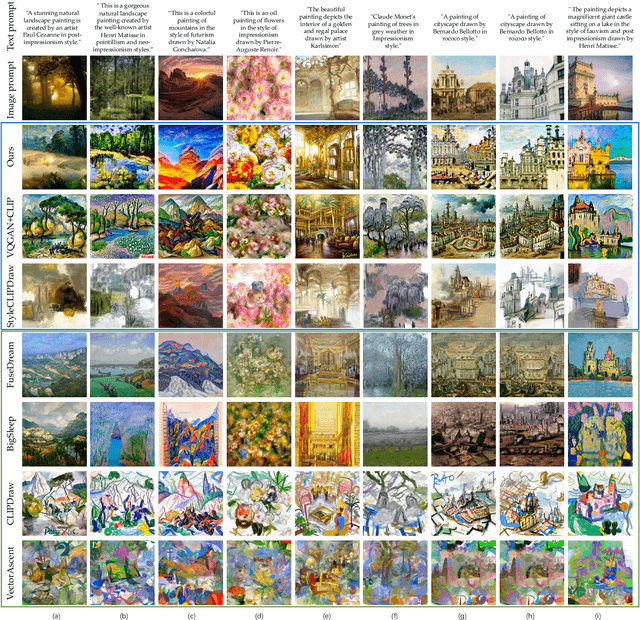

Draw Your Art Dream: Diverse Digital Art Synthesis with Multimodal Guided Diffusion

Sep 28, 2022

Abstract:Digital art synthesis is receiving increasing attention in the multimedia community because it engages the public with art effectively. Current digital art synthesis methods usually use single-modality inputs as guidance, thereby limiting the expressiveness of the model and the diversity of generated results. To solve this problem, we propose the multimodal guided artwork diffusion (MGAD) model, a diffusion-based digital artwork generation approach that utilizes multimodal prompts as guidance to control the classifier-free diffusion model. Additionally, the contrastive language-image pretraining (CLIP) model is used to unify text and image modalities. Extensive qualitative and quantitative experimental results on the generated digital art paintings confirm the effectiveness of combining the diffusion model with multimodal guidance. Code is available at https://github.com/haha-lisa/MGAD-multimodal-guided-artwork-diffusion.
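For readers unfamiliar with classifier-free guidance, the generic combination rule is sketched below with stand-in arrays. This shows the standard technique only, not how MGAD wires its prompts in; the `fuse_prompts` helper, which mixes normalized CLIP text and image embeddings into one condition, is a hypothetical illustration of a multimodal prompt.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale a vector to unit L2 norm, as is common for CLIP embeddings."""
    return x / np.linalg.norm(x)


def fuse_prompts(text_emb: np.ndarray, image_emb: np.ndarray, alpha: float) -> np.ndarray:
    """Hypothetical fusion: a normalized convex mix of normalized text and image embeddings."""
    return l2_normalize(alpha * l2_normalize(text_emb) + (1.0 - alpha) * l2_normalize(image_emb))


def guided_noise(eps_uncond: np.ndarray, eps_cond: np.ndarray, scale: float) -> np.ndarray:
    """Classifier-free guidance: move the unconditional noise estimate towards the conditional one."""
    return eps_uncond + scale * (eps_cond - eps_uncond)


# Toy usage with stand-in noise predictions (a real diffusion model would produce these).
eps_u = np.array([0.1, -0.2, 0.3])
eps_c = np.array([0.4, 0.0, 0.1])
eps_hat = guided_noise(eps_u, eps_c, scale=3.0)
cond = fuse_prompts(np.array([1.0, 0.0]), np.array([0.0, 2.0]), alpha=0.5)
```

A scale of 0 recovers the unconditional prediction and 1 the conditional one; values above 1 extrapolate towards the condition, trading diversity for prompt fidelity.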

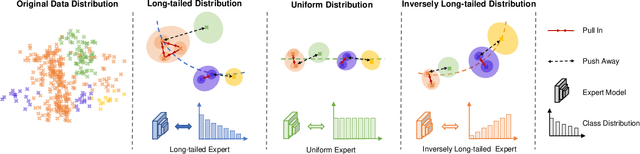

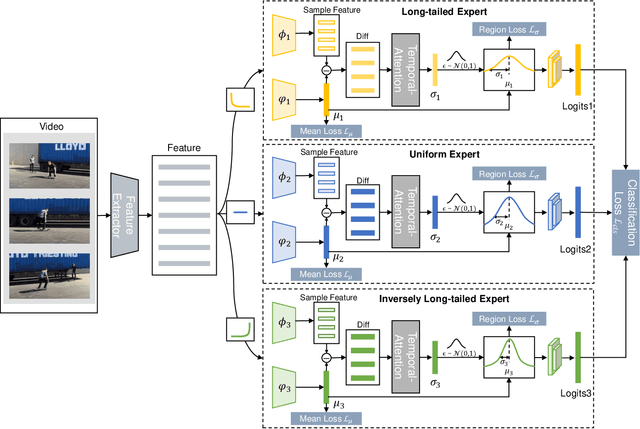

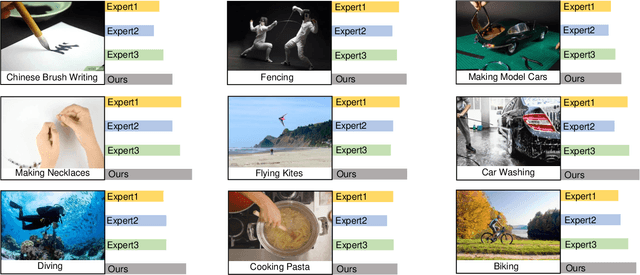

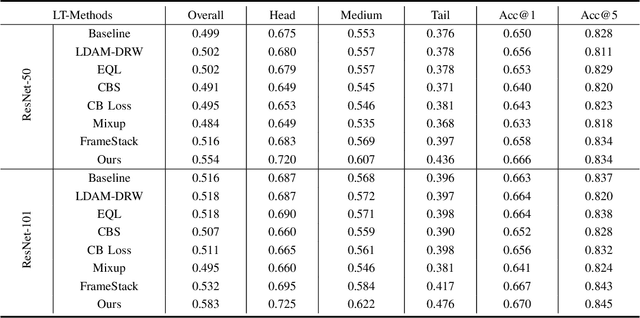

Learning Multi-expert Distribution Calibration for Long-tailed Video Classification

May 22, 2022

Abstract:Most existing state-of-the-art video classification methods assume that the training data obey a uniform distribution. However, real-world video data typically exhibit a long-tailed, imbalanced class distribution, which biases the model towards head classes and leads to relatively low performance on tail classes. While current long-tail classification methods usually focus on image classification, adapting them to video data is not a trivial extension. To address these challenges, we propose an end-to-end multi-expert distribution calibration method based on two-level distribution information. The method jointly considers the distribution of samples in each class (intra-class distribution) and the diverse distributions of the overall data (inter-class distribution) to solve the problem of imbalanced data under a long-tailed distribution. By modeling this two-level distribution information, the model can account for both head and tail classes and transfer knowledge from the head classes to significantly improve the performance of the tail classes. Extensive experiments verify that our method achieves state-of-the-art performance on the long-tailed video classification task.

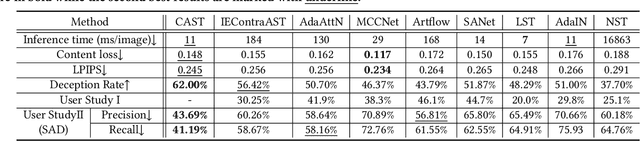

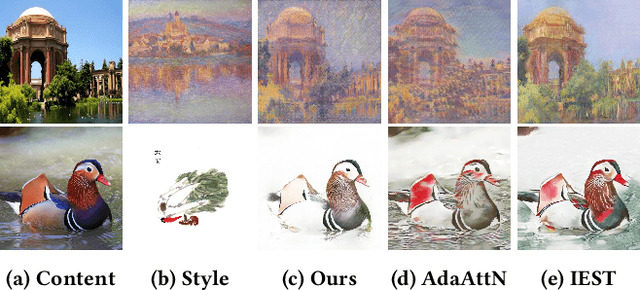

Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning

May 20, 2022

Abstract:In this work, we tackle the challenging problem of arbitrary image style transfer using a novel style feature representation learning method. A suitable style representation, as a key component in image stylization tasks, is essential to achieve satisfactory results. Existing deep neural network based approaches achieve reasonable results with guidance from second-order statistics such as the Gram matrix of content features. However, they do not leverage sufficient style information, which results in artifacts such as local distortions and style inconsistency. To address these issues, we propose to learn style representation directly from image features instead of their second-order statistics, by analyzing the similarities and differences between multiple styles and considering the style distribution. Specifically, we present Contrastive Arbitrary Style Transfer (CAST), a new style representation learning and style transfer method based on contrastive learning. Our framework consists of three key components: a multi-layer style projector for style code encoding, a domain enhancement module for effective learning of style distribution, and a generative network for image style transfer. We conduct comprehensive qualitative and quantitative evaluations to demonstrate that our approach achieves significantly better results than state-of-the-art methods. Code and models are available at https://github.com/zyxElsa/CAST_pytorch.
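To make concrete what "second-order statistics such as the Gram matrix" means, the sketch below computes a channel-by-channel Gram matrix of a feature map and checks that shuffling spatial positions leaves it unchanged. This is the baseline statistic the abstract argues against, not CAST's representation; the normalization by `H * W` is one common convention and an assumption here.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel Gram matrix of a (C, H, W) feature map.

    Normalizing by H * W is one common convention; others divide by C * H * W.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # each row is one channel's spatial response
    return flat @ flat.T / (h * w)


rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 5, 6))
G = gram_matrix(feats)

# Shuffling spatial positions (the same permutation for every channel) leaves G unchanged:
# the statistic keeps channel co-activations but discards where they occur.
perm = rng.permutation(5 * 6)
shuffled = feats.reshape(4, -1)[:, perm].reshape(4, 5, 6)
G_shuffled = gram_matrix(shuffled)
```

This invariance is one way to see why a Gram matrix alone can miss style information tied to spatial structure.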

Learning Commonsense-aware Moment-Text Alignment for Fast Video Temporal Grounding

Apr 12, 2022

Abstract:Grounding temporal video segments described in natural language queries effectively and efficiently is a crucial capability in vision-and-language fields. In this paper, we deal with the fast video temporal grounding (FVTG) task, which aims to localize the target segment with high speed and favorable accuracy. Most existing approaches adopt elaborately designed cross-modal interaction modules to improve grounding performance, but they suffer from a test-time bottleneck. Although several common space-based methods enjoy high speed during inference, they can hardly capture the comprehensive and explicit relations between the visual and textual modalities. To tackle this speed-accuracy dilemma, we propose a commonsense-aware cross-modal alignment (CCA) framework, which incorporates commonsense-guided visual and text representations into a complementary common space for fast video temporal grounding. Specifically, commonsense concepts are explored and exploited by extracting structural semantic information from a language corpus. Then, a commonsense-aware interaction module is designed to obtain bridged visual and text features by utilizing the learned commonsense concepts. Finally, to maintain the original semantic information of textual queries, a cross-modal complementary common space is optimized to obtain matching scores for performing FVTG. Extensive results on two challenging benchmarks show that our CCA method performs favorably against state-of-the-art methods while running at high speed. Our code is available at https://github.com/ZiyueWu59/CCA.
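The speed advantage of common space-based methods mentioned above comes from encoding candidate moments once and scoring each query with a single similarity computation. The sketch below shows that generic retrieval step with cosine similarity; it is not CCA's matching module, and the function name and toy embeddings are hypothetical.

```python
import numpy as np


def rank_moments(moment_embs: np.ndarray, query_emb: np.ndarray):
    """Rank candidate moments by cosine similarity to a query in a shared embedding space.

    moment_embs: (n_moments, d) embeddings, computed once per video and cached.
    query_emb: (d,) embedding of the natural-language query.
    Returns (indices sorted from best to worst match, raw cosine scores).
    """
    moments = moment_embs / np.linalg.norm(moment_embs, axis=1, keepdims=True)
    query = query_emb / np.linalg.norm(query_emb)
    scores = moments @ query  # one matrix-vector product per query
    return np.argsort(-scores), scores


cached_moments = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
order, scores = rank_moments(cached_moments, np.array([0.1, 1.0]))
```

No cross-modal layers run at query time, which is exactly why such methods are fast and also why, as the abstract notes, they struggle to capture explicit visual-textual relations.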

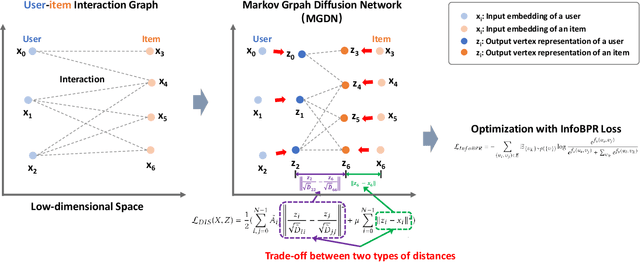

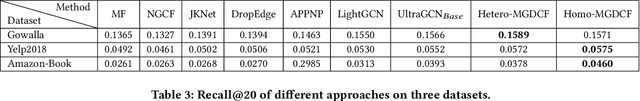

MGDCF: Distance Learning via Markov Graph Diffusion for Neural Collaborative Filtering

Apr 05, 2022

Abstract:Collaborative filtering (CF), which aims to predict users' preferences from historical user-item interactions, is widely used by personalized recommendation systems. In recent years, Graph Neural Networks (GNNs) have been utilized to build CF models and have shown promising performance. Recent state-of-the-art GNN-based CF approaches simply attribute their performance improvement to the high-order neighbor aggregation ability of GNNs. However, we observe that some powerful deep GNNs, such as JKNet and DropEdge, can effectively exploit high-order neighbor information on other graph tasks but perform poorly on CF tasks, which conflicts with the explanation given by these GNN-based CF studies. Unlike these studies, we investigate GNN-based CF from the perspective of Markov processes for distance learning, with a unified framework named Markov Graph Diffusion Collaborative Filtering (MGDCF). We design a Markov Graph Diffusion Network (MGDN) as MGDCF's GNN encoder, which learns vertex representations by trading off two types of distances via a Markov process. We show the theoretical equivalence between MGDN's output and the optimal solution of a distance loss function, which can boost the optimization of CF models. MGDN can generalize state-of-the-art models such as LightGCN and APPNP, which are heterogeneous GNNs. In addition, MGDN can be extended to homogeneous GNNs with our sparsification technique. For optimizing MGDCF, we propose the InfoBPR loss function, which extends the widely used BPR loss to exploit multiple negative samples for better performance. We conduct experiments to analyze MGDCF in detail. The source code is publicly available at https://github.com/hujunxianligong/MGDCF.
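One natural way to extend BPR to multiple negatives is a softmax over one positive score and `K` negative scores, sketched below. Treat this as an assumed form for illustration rather than the paper's exact definition; the scores stand in for user-item dot products.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def bpr_loss(pos_score: float, neg_score: float) -> float:
    """Classic BPR loss for one (user, positive, negative) triple: -log sigmoid(pos - neg)."""
    return softplus(neg_score - pos_score)


def info_bpr_loss(pos_score: float, neg_scores: Sequence[float]) -> float:
    """Softmax-style loss over one positive and K negatives:
    -log(exp(pos) / (exp(pos) + sum_k exp(neg_k))), computed via log-sum-exp.
    """
    logits = [pos_score, *neg_scores]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(s - m) for s in logits))
    return log_sum - pos_score


loss = info_bpr_loss(2.0, [0.5, -1.0, 0.3])
```

With a single negative, `exp(p) / (exp(p) + exp(n))` equals `sigmoid(p - n)`, so this form reduces exactly to BPR; each extra negative adds a term to the denominator and sharpens the ranking signal.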

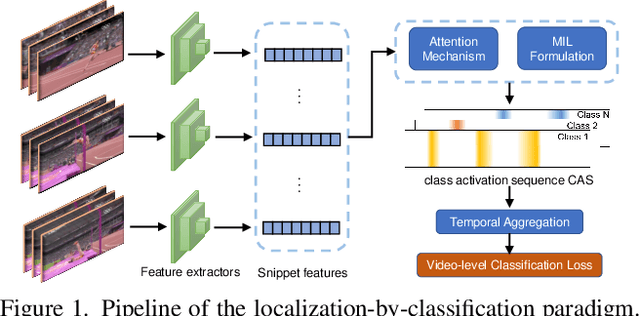

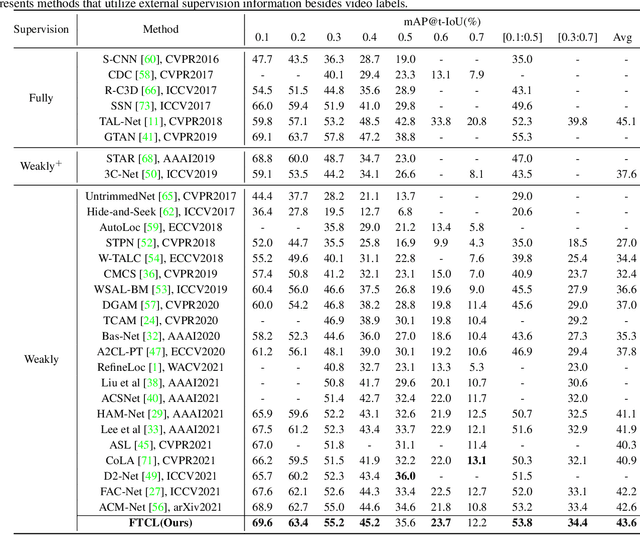

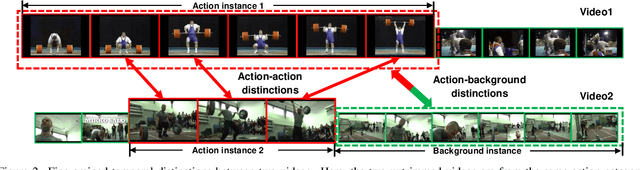

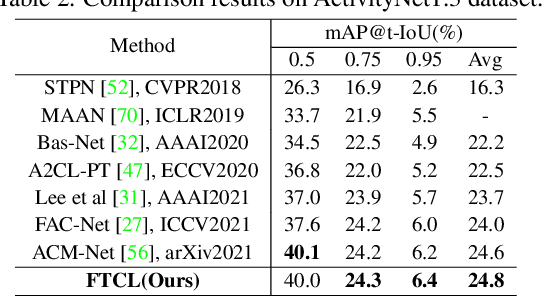

Fine-grained Temporal Contrastive Learning for Weakly-supervised Temporal Action Localization

Mar 31, 2022

Abstract:We target the task of weakly-supervised action localization (WSAL), where only video-level action labels are available during model training. Despite recent progress, existing methods mainly embrace a localization-by-classification paradigm and overlook the fruitful fine-grained temporal distinctions between video sequences, thus suffering from severe ambiguity in classification learning and in classification-to-localization adaptation. This paper argues that learning by contextually comparing sequence-to-sequence distinctions offers an essential inductive bias in WSAL and helps identify coherent action instances. Specifically, under a differentiable dynamic programming formulation, two complementary contrastive objectives are designed: Fine-grained Sequence Distance (FSD) contrasting and Longest Common Subsequence (LCS) contrasting. The first considers the relations of various action/background proposals by using match, insert, and delete operators, and the second mines the longest common subsequences between two videos. The two contrasting modules can enhance each other and jointly enjoy the merits of discriminative action-background separation and a reduced task gap between classification and localization. Extensive experiments show that our method achieves state-of-the-art performance on two popular benchmarks. Our code is available at https://github.com/MengyuanChen21/CVPR2022-FTCL.
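The LCS objective rests on a dynamic program that can be made differentiable by replacing `max` with a smooth maximum. The sketch below is a generic smoothed LCS recurrence over a similarity matrix, in the spirit of the differentiable formulation the abstract mentions but not FTCL's exact objective; the temperature `gamma` and the toy strings are illustrative assumptions.

```python
import math
from typing import List, Sequence


def smooth_max(values: Sequence[float], gamma: float) -> float:
    """gamma * log(sum(exp(v / gamma))): a differentiable upper bound on max(values)."""
    m = max(values)
    return m + gamma * math.log(sum(math.exp((v - m) / gamma) for v in values))


def lcs_score(sim: List[List[float]], gamma: float = 0.0) -> float:
    """LCS-style alignment score over an (n x m) similarity matrix.

    R[i][j] = max(R[i-1][j-1] + sim[i-1][j-1], R[i-1][j], R[i][j-1]).
    With gamma == 0 the hard max is used; with 0/1 similarities this is the classic LCS length.
    With gamma > 0 the max is replaced by smooth_max, making the score differentiable in sim.
    """
    n, m = len(sim), len(sim[0])
    R = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            candidates = (R[i - 1][j - 1] + sim[i - 1][j - 1], R[i - 1][j], R[i][j - 1])
            R[i][j] = max(candidates) if gamma == 0.0 else smooth_max(candidates, gamma)
    return R[n][m]


a, b = "ABCBDAB", "BDCABA"
match = [[1.0 if x == y else 0.0 for y in b] for x in a]
hard = lcs_score(match)  # classic LCS length of the two strings
soft = lcs_score(match, gamma=1e-3)
```

In a video setting, `sim` would hold snippet-to-snippet feature similarities between two videos, so gradients of the soft score flow back into the features.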

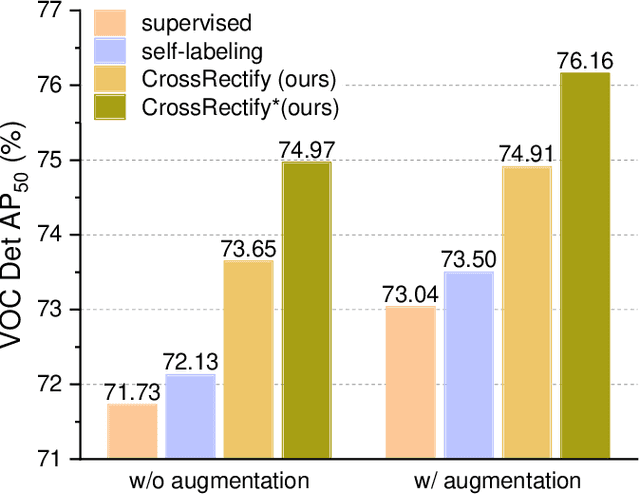

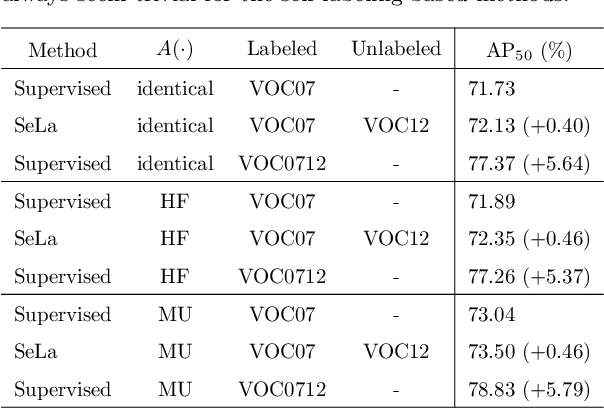

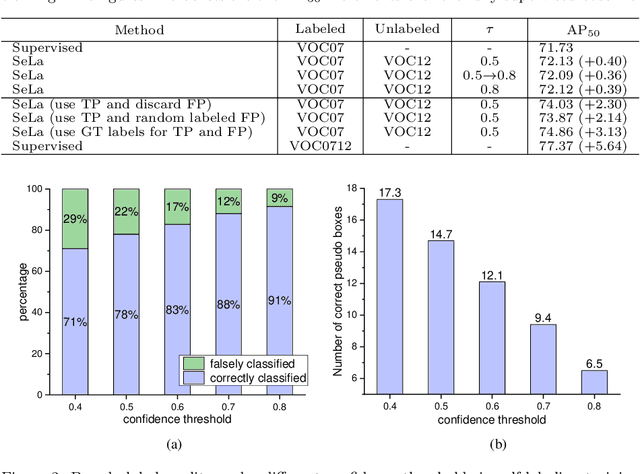

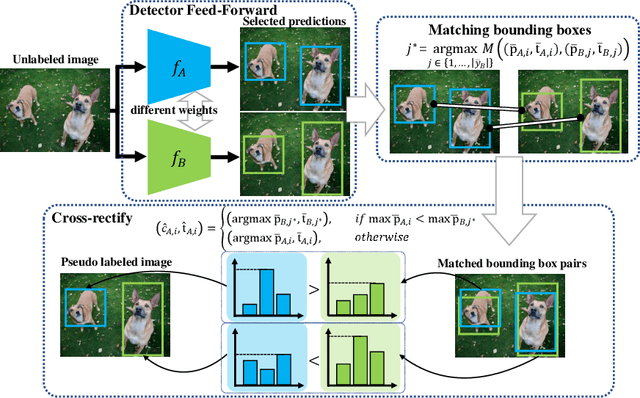

Mitigating the Mutual Error Amplification for Semi-Supervised Object Detection

Jan 26, 2022

Abstract:Semi-supervised object detection (SSOD) has achieved substantial progress in recent years. However, we observe that the performance of self-labeling SSOD methods remains limited. Based on our experimental analysis, we reveal that the reason behind this phenomenon lies in the mutual error amplification between the pseudo labels and the trained detector. In this study, we propose a Cross Teaching (CT) method, which aims to mitigate the mutual error amplification by introducing a rectification mechanism for pseudo labels. CT simultaneously trains multiple detectors with an identical structure but different parameter initializations. In contrast to existing mutual teaching methods that directly treat predictions from other detectors as pseudo labels, we propose the Label Rectification Module (LRM), where the bounding boxes predicted by one detector are rectified by using the corresponding boxes predicted by all other detectors with higher confidence scores. In this way, CT can enhance pseudo label quality compared with self-labeling and existing mutual teaching methods, and reasonably mitigate the mutual error amplification. On two popular detector structures, i.e., SSD300 and Faster-RCNN-FPN, the proposed CT method obtains consistent improvements and outperforms state-of-the-art SSOD methods by 2.2% absolute mAP on the Pascal VOC and MS-COCO benchmarks. The code is available at github.com/machengcheng2016/CrossTeaching-SSOD.
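The abstract does not spell out how LRM combines corresponding boxes, so the sketch below uses a hypothetical rule purely for illustration: a box is fused with the boxes from other detectors that overlap it (IoU above a threshold) and carry a higher confidence, via a confidence-weighted coordinate average. The `rectify_box` name, the IoU matching, and the averaging rule are all assumptions, not the paper's method.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def rectify_box(box: Box, score: float, others: List[Tuple[Box, float]], iou_thr: float = 0.5) -> Box:
    """Hypothetical rectification rule: fuse a box with the corresponding boxes from
    the other detectors that overlap it (IoU >= iou_thr) AND have a higher confidence,
    using a confidence-weighted average of coordinates.
    """
    support = [(b, s) for b, s in others if s > score and iou(box, b) >= iou_thr]
    if not support:
        return box  # no more confident corresponding box: keep the prediction as-is
    pool = [(box, score), *support]
    total = sum(s for _, s in pool)
    return tuple(sum(b[k] * s for b, s in pool) / total for k in range(4))


fused = rectify_box(
    (0.0, 0.0, 10.0, 10.0), 0.25,
    [((1.0, 1.0, 11.0, 11.0), 0.75), ((50.0, 50.0, 60.0, 60.0), 0.9)],
)
```

The distant high-confidence box is ignored because it does not correspond to the same object (IoU of 0), so only the overlapping, more confident prediction pulls the pseudo label.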

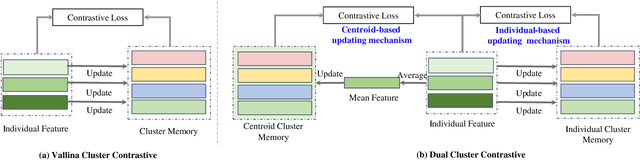

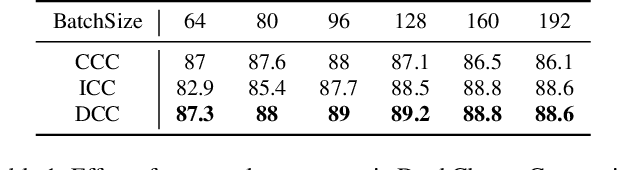

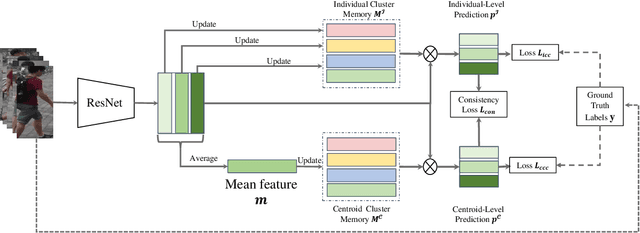

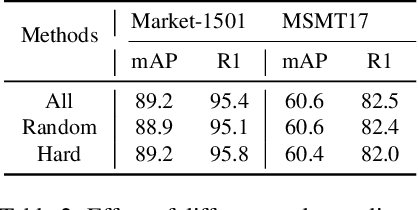

Dual Cluster Contrastive learning for Person Re-Identification

Dec 20, 2021

Abstract:Recently, cluster contrastive learning has been proven effective for person ReID by computing the contrastive loss between the individual feature and the cluster memory. However, existing methods that use the individual feature to momentum-update the cluster memory are not robust to noisy samples, such as samples with wrongly annotated labels or noisy pseudo-labels. Unlike the individual-based updating mechanism, the centroid-based updating mechanism, which applies the mean feature of each cluster to update the cluster memory, is robust against a minority of noisy samples. Therefore, we formulate the individual-based and centroid-based updating mechanisms in a unified cluster contrastive framework, named Dual Cluster Contrastive learning (DCC), which maintains two types of memory banks: individual and centroid cluster memory banks. Specifically, the individual cluster memory is momentum-updated based on the individual feature, while the centroid cluster memory applies the mean feature of each cluster to update the corresponding cluster memory. Besides the vanilla contrastive loss for each memory, a consistency constraint is applied to guarantee the consistency of the outputs of the two memories. Note that DCC can be easily applied to supervised or unsupervised person ReID by using ground-truth labels or pseudo-labels generated with a clustering method, respectively. Extensive experiments on two benchmarks under supervised and unsupervised person ReID demonstrate the superiority of the proposed DCC. Code is available at: https://github.com/htyao89/Dual-Cluster-Contrastive/
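The difference between the two updating mechanisms can be sketched in a few lines of NumPy. The momentum value and the re-normalization after each update follow common cluster-contrast practice and are assumptions here, as are the helper names; the toy batch contains one cluster with nine clean samples and one noisy sample seen last.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Normalize along the last axis to unit L2 norm."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def individual_update(memory: np.ndarray, feat: np.ndarray, label: int, momentum: float) -> None:
    """Individual-based update: move one cluster entry towards a single sample's feature."""
    memory[label] = l2_normalize(momentum * memory[label] + (1.0 - momentum) * feat)


def centroid_update(memory: np.ndarray, feats: np.ndarray, labels: np.ndarray, momentum: float) -> None:
    """Centroid-based update: move each cluster entry towards the mean feature of its batch samples."""
    for c in np.unique(labels):
        centroid = feats[labels == c].mean(axis=0)
        memory[c] = l2_normalize(momentum * memory[c] + (1.0 - momentum) * centroid)


# One cluster whose batch holds nine clean samples followed by one noisy sample.
feats = np.array([[1.0, 0.0]] * 9 + [[0.0, 1.0]])
labels = np.zeros(10, dtype=int)

indiv_mem = np.array([[1.0, 0.0]])
for f, y in zip(feats, labels):
    individual_update(indiv_mem, f, int(y), momentum=0.5)

centroid_mem = np.array([[1.0, 0.0]])
centroid_update(centroid_mem, feats, labels, momentum=0.5)
```

The individual memory ends up rotated 45 degrees towards the single noisy sample, whereas the centroid memory barely moves because that sample contributes only a tenth of the mean, which mirrors the robustness argument in the abstract.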

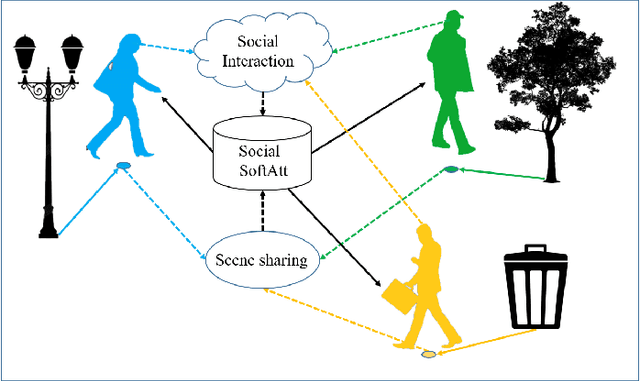

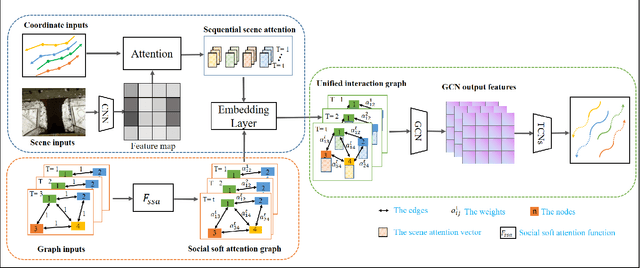

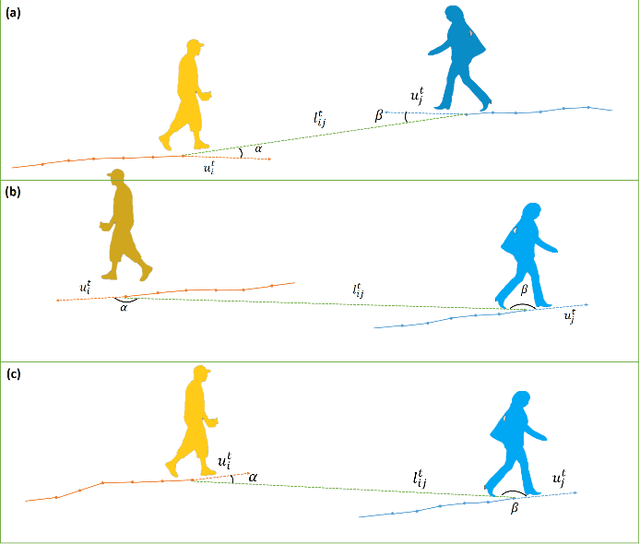

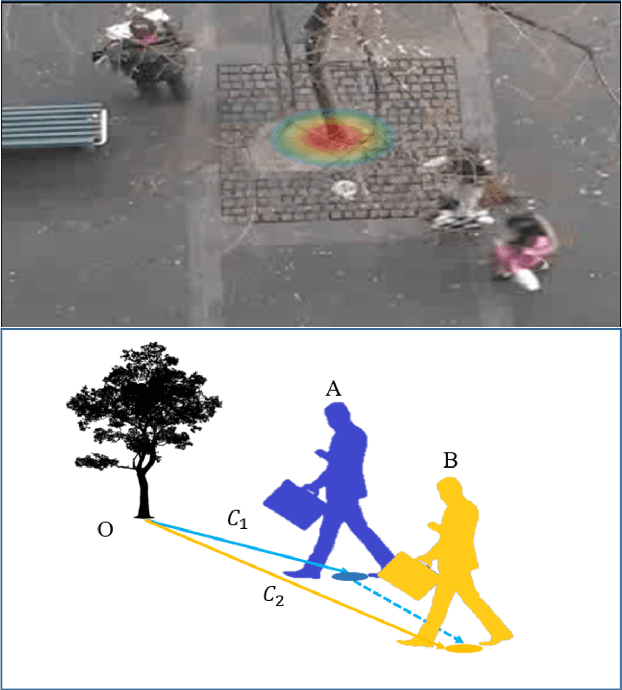

SSAGCN: Social Soft Attention Graph Convolution Network for Pedestrian Trajectory Prediction

Dec 05, 2021

Abstract:Pedestrian trajectory prediction is an important technique for autonomous driving and has become a research hot-spot in recent years. Previous methods mainly rely on the positional relationships of pedestrians to model social interaction, which is clearly insufficient to represent the complex cases that arise in real situations. In addition, most existing works introduce the scene interaction module as an independent branch and embed the social interaction features in the process of trajectory generation, rather than carrying out social interaction and scene interaction simultaneously, which may undermine the rationality of trajectory prediction. In this paper, we propose a new prediction model named Social Soft Attention Graph Convolution Network (SSAGCN), which aims to simultaneously handle social interactions among pedestrians and scene interactions between pedestrians and environments. In detail, when modeling social interaction, we propose a new social soft attention function, which fully considers various interaction factors among pedestrians and can distinguish the influence of the pedestrians around the agent based on different factors under various situations. For the physical interaction, we propose a new sequential scene sharing mechanism. The influence of the scene on one agent at each moment can be shared with other neighbors through social soft attention, so the influence of the scene is expanded in both the spatial and temporal dimensions. With these improvements, we successfully obtain socially and physically acceptable predicted trajectories. Experiments on publicly available datasets demonstrate the effectiveness of SSAGCN, which achieves state-of-the-art results.
