Bram Adams

Understanding Prompt Management in GitHub Repositories: A Call for Best Practices

Sep 15, 2025

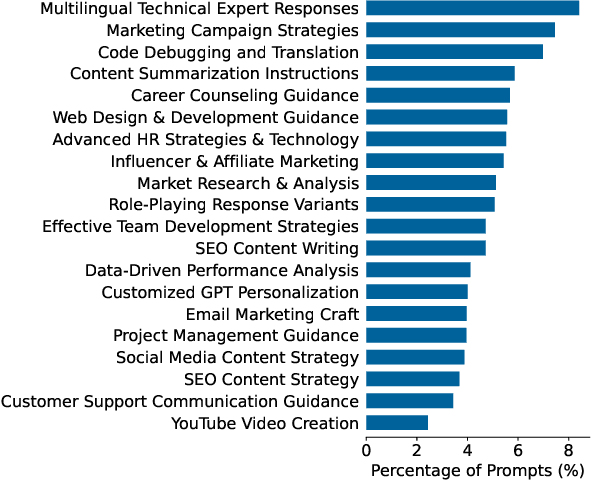

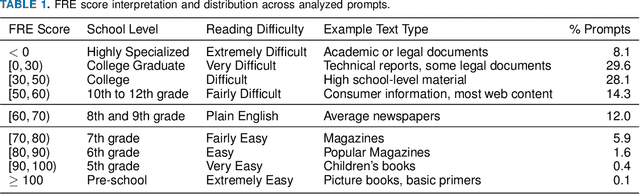

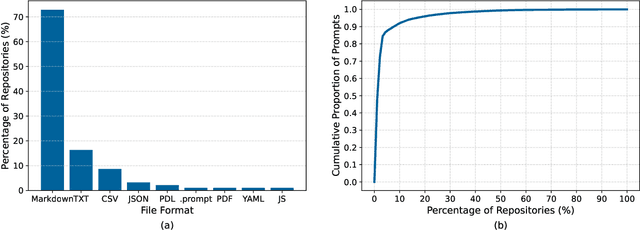

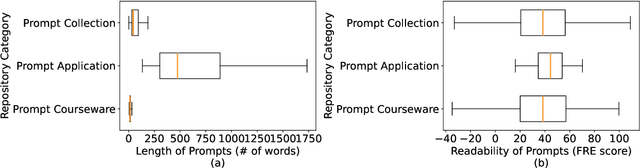

Abstract:The rapid adoption of foundation models (e.g., large language models) has given rise to promptware, i.e., software built using natural language prompts. Effective management of prompts, such as organization and quality assurance, is essential yet challenging. In this study, we perform an empirical analysis of 24,800 open-source prompts from 92 GitHub repositories to investigate prompt management practices and quality attributes. Our findings reveal critical challenges such as considerable inconsistencies in prompt formatting, substantial internal and external prompt duplication, and frequent readability and spelling issues. Based on these findings, we provide actionable recommendations for developers to enhance the usability and maintainability of open-source prompts within the rapidly evolving promptware ecosystem.

OmniLLP: Enhancing LLM-based Log Level Prediction with Context-Aware Retrieval

Aug 12, 2025

Abstract:Developers insert logging statements in source code to capture relevant runtime information essential for maintenance and debugging activities. Log level choice is an integral, yet tricky part of the logging activity as it controls log verbosity and therefore influences systems' observability and performance. Recent advances in ML-based log level prediction have leveraged large language models (LLMs) to propose log level predictors (LLPs) that demonstrated promising performance improvements (AUC between 0.64 and 0.8). Nevertheless, current LLM-based LLPs rely on randomly selected in-context examples, overlooking the structure and the diverse logging practices within modern software projects. In this paper, we propose OmniLLP, a novel LLP enhancement framework that clusters source files based on (1) semantic similarity reflecting the code's functional purpose, and (2) developer ownership cohesion. By retrieving in-context learning examples exclusively from these semantic and ownership aware clusters, we aim to provide more coherent prompts to LLPs leveraging LLMs, thereby improving their predictive accuracy. Our results show that both semantic and ownership-aware clusterings statistically significantly improve the accuracy (by up to 8\% AUC) of the evaluated LLM-based LLPs compared to random predictors (i.e., leveraging randomly selected in-context examples from the whole project). Additionally, our approach that combines the semantic and ownership signal for in-context prediction achieves an impressive 0.88 to 0.96 AUC across our evaluated projects. Our findings highlight the value of integrating software engineering-specific context, such as code semantic and developer ownership signals into LLM-LLPs, offering developers a more accurate, contextually-aware approach to logging and therefore, enhancing system maintainability and observability.

InterTrans: Leveraging Transitive Intermediate Translations to Enhance LLM-based Code Translation

Nov 05, 2024

Abstract:Code translation aims to convert a program from one programming language (PL) to another. This long-standing software engineering task is crucial for modernizing legacy systems, ensuring cross-platform compatibility, enhancing performance, and more. However, automating this process remains challenging due to many syntactic and semantic differences between PLs. Recent studies show that even advanced techniques such as large language models (LLMs), especially open-source LLMs, still struggle with the task. Currently, code LLMs are trained with source code from multiple programming languages, thus presenting multilingual capabilities. In this paper, we investigate whether such multilingual capabilities can be harnessed to enhance code translation. To achieve this goal, we introduce InterTrans, an LLM-based automated code translation approach that, in contrast to existing approaches, leverages intermediate translations across PLs to bridge the syntactic and semantic gaps between source and target PLs. InterTrans contains two stages. It first utilizes a novel Tree of Code Translation (ToCT) algorithm to plan transitive intermediate translation sequences between a given source and target PL, then validates them in a specific order. We evaluate InterTrans with three open LLMs on three benchmarks (i.e., CodeNet, HumanEval-X, and TransCoder) involving six PLs. Results show an absolute improvement between 18.3% to 43.3% in Computation Accuracy (CA) for InterTrans over Direct Translation with 10 attempts. The best-performing variant of InterTrans (with Magicoder LLM) achieved an average CA of 87.3%-95.4% on three benchmarks.

On the Impact of White-box Deployment Strategies for Edge AI on Latency and Model Performance

Nov 01, 2024

Abstract:To help MLOps engineers decide which operator to use in which deployment scenario, this study aims to empirically assess the accuracy vs latency trade-off of white-box (training-based) and black-box operators (non-training-based) and their combinations in an Edge AI setup. We perform inference experiments including 3 white-box (i.e., QAT, Pruning, Knowledge Distillation), 2 black-box (i.e., Partition, SPTQ), and their combined operators (i.e., Distilled SPTQ, SPTQ Partition) across 3 tiers (i.e., Mobile, Edge, Cloud) on 4 commonly-used Computer Vision and Natural Language Processing models to identify the effective strategies, considering the perspective of MLOps Engineers. Our Results indicate that the combination of Distillation and SPTQ operators (i.e., DSPTQ) should be preferred over non-hybrid operators when lower latency is required in the edge at small to medium accuracy drop. Among the non-hybrid operators, the Distilled operator is a better alternative in both mobile and edge tiers for lower latency performance at the cost of small to medium accuracy loss. Moreover, the operators involving distillation show lower latency in resource-constrained tiers (Mobile, Edge) compared to the operators involving Partitioning across Mobile and Edge tiers. For textual subject models, which have low input data size requirements, the Cloud tier is a better alternative for the deployment of operators than the Mobile, Edge, or Mobile-Edge tier (the latter being used for operators involving partitioning). In contrast, for image-based subject models, which have high input data size requirements, the Edge tier is a better alternative for operators than Mobile, Edge, or their combination.

Data Quality Antipatterns for Software Analytics

Aug 22, 2024

Abstract:Background: Data quality is vital in software analytics, particularly for machine learning (ML) applications like software defect prediction (SDP). Despite the widespread use of ML in software engineering, the effect of data quality antipatterns on these models remains underexplored. Objective: This study develops a taxonomy of ML-specific data quality antipatterns and assesses their impact on software analytics models' performance and interpretation. Methods: We identified eight types and 14 sub-types of ML-specific data quality antipatterns through a literature review. We conducted experiments to determine the prevalence of these antipatterns in SDP data (RQ1), assess how cleaning order affects model performance (RQ2), evaluate the impact of antipattern removal on performance (RQ3), and examine the consistency of interpretation from models built with different antipatterns (RQ4). Results: In our SDP case study, we identified nine antipatterns. Over 90% of these overlapped at both row and column levels, complicating cleaning prioritization and risking excessive data removal. The order of cleaning significantly impacts ML model performance, with neural networks being more resilient to cleaning order changes than simpler models like logistic regression. Antipatterns such as Tailed Distributions and Class Overlap show a statistically significant correlation with performance metrics when other antipatterns are cleaned. Models built with different antipatterns showed moderate consistency in interpretation results. Conclusion: The cleaning order of different antipatterns impacts ML model performance. Five antipatterns have a statistically significant correlation with model performance when others are cleaned. Additionally, model interpretation is moderately affected by different data quality antipatterns.

On the Workflows and Smells of Leaderboard Operations (LBOps): An Exploratory Study of Foundation Model Leaderboards

Jul 04, 2024

Abstract:Foundation models (FM), such as large language models (LLMs), which are large-scale machine learning (ML) models, have demonstrated remarkable adaptability in various downstream software engineering (SE) tasks, such as code completion, code understanding, and software development. As a result, FM leaderboards, especially those hosted on cloud platforms, have become essential tools for SE teams to compare and select the best third-party FMs for their specific products and purposes. However, the lack of standardized guidelines for FM evaluation and comparison threatens the transparency of FM leaderboards and limits stakeholders' ability to perform effective FM selection. As a first step towards addressing this challenge, our research focuses on understanding how these FM leaderboards operate in real-world scenarios ("leaderboard operations") and identifying potential leaderboard pitfalls and areas for improvement ("leaderboard smells"). In this regard, we perform a multivocal literature review to collect up to 721 FM leaderboards, after which we examine their documentation and engage in direct communication with leaderboard operators to understand their workflow patterns. Using card sorting and negotiated agreement, we identify 5 unique workflow patterns and develop a domain model that outlines the essential components and their interaction within FM leaderboards. We then identify 8 unique types of leaderboard smells in LBOps. By mitigating these smells, SE teams can improve transparency, accountability, and collaboration in current LBOps practices, fostering a more robust and responsible ecosystem for FM comparison and selection.

A State-of-the-practice Release-readiness Checklist for Generative AI-based Software Products

Mar 27, 2024Abstract:This paper investigates the complexities of integrating Large Language Models (LLMs) into software products, with a focus on the challenges encountered for determining their readiness for release. Our systematic review of grey literature identifies common challenges in deploying LLMs, ranging from pre-training and fine-tuning to user experience considerations. The study introduces a comprehensive checklist designed to guide practitioners in evaluating key release readiness aspects such as performance, monitoring, and deployment strategies, aiming to enhance the reliability and effectiveness of LLM-based applications in real-world settings.

Exploring the Impact of the Output Format on the Evaluation of Large Language Models for Code Translation

Mar 25, 2024

Abstract:Code translation between programming languages is a long-existing and critical task in software engineering, facilitating the modernization of legacy systems, ensuring cross-platform compatibility, and enhancing software performance. With the recent advances in large language models (LLMs) and their applications to code translation, there is an increasing need for comprehensive evaluation of these models. In this study, we empirically analyze the generated outputs of eleven popular instruct-tuned LLMs with parameters ranging from 1B up to 46.7B on 3,820 translation pairs across five languages, including C, C++, Go, Java, and Python. Our analysis found that between 26.4% and 73.7% of code translations produced by our evaluated LLMs necessitate post-processing, as these translations often include a mix of code, quotes, and text rather than being purely source code. Overlooking the output format of these models can inadvertently lead to underestimation of their actual performance. This is particularly evident when evaluating them with execution-based metrics such as Computational Accuracy (CA). Our results demonstrate that a strategic combination of prompt engineering and regular expression can effectively extract the source code from the model generation output. In particular, our method can help eleven selected models achieve an average Code Extraction Success Rate (CSR) of 92.73%. Our findings shed light on and motivate future research to conduct more reliable benchmarks of LLMs for code translation.

An Empirical Study of Challenges in Machine Learning Asset Management

Feb 28, 2024Abstract:In machine learning (ML), efficient asset management, including ML models, datasets, algorithms, and tools, is vital for resource optimization, consistent performance, and a streamlined development lifecycle. This enables quicker iterations, adaptability, reduced development-to-deployment time, and reliable outputs. Despite existing research, a significant knowledge gap remains in operational challenges like model versioning, data traceability, and collaboration, which are crucial for the success of ML projects. Our study aims to address this gap by analyzing 15,065 posts from developer forums and platforms, employing a mixed-method approach to classify inquiries, extract challenges using BERTopic, and identify solutions through open card sorting and BERTopic clustering. We uncover 133 topics related to asset management challenges, grouped into 16 macro-topics, with software dependency, model deployment, and model training being the most discussed. We also find 79 solution topics, categorized under 18 macro-topics, highlighting software dependency, feature development, and file management as key solutions. This research underscores the need for further exploration of identified pain points and the importance of collaborative efforts across academia, industry, and the research community.

The State of Documentation Practices of Third-party Machine Learning Models and Datasets

Dec 22, 2023Abstract:Model stores offer third-party ML models and datasets for easy project integration, minimizing coding efforts. One might hope to find detailed specifications of these models and datasets in the documentation, leveraging documentation standards such as model and dataset cards. In this study, we use statistical analysis and hybrid card sorting to assess the state of the practice of documenting model cards and dataset cards in one of the largest model stores in use today--Hugging Face (HF). Our findings show that only 21,902 models (39.62\%) and 1,925 datasets (28.48\%) have documentation. Furthermore, we observe inconsistency in ethics and transparency-related documentation for ML models and datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge