Ayan Biswas

Efficient Level-Crossing Probability Calculation for Gaussian Process Modeled Data

Dec 13, 2025

Abstract: Almost all scientific data have uncertainties originating from different sources. Gaussian process regression (GPR) models are a natural way to model data with Gaussian-distributed uncertainties. GPR also has the benefit of reducing I/O bandwidth and storage requirements for large scientific simulations. However, reconstruction from GPR models suffers from high computational complexity. To make the situation worse, classic approaches for visualizing data uncertainties, like probabilistic marching cubes, are also computationally very expensive, especially for high-resolution data. In this paper, we accelerate the level-crossing probability calculation on GPR models by subdividing the data spatially into a hierarchical data structure and only reconstructing values adaptively in the regions that have a non-zero probability. For each region, leveraging the known GPR kernel and the saved data observations, we propose a novel approach to efficiently calculate an upper bound for the level-crossing probability inside the region and use this upper bound to make the subdivision and reconstruction decisions. Through experiments that calculate the level-crossing probability fields on different datasets, we demonstrate that our value occurrence probability estimation is accurate and has a low computation cost.
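
As a rough illustration of what a level-crossing probability is, the sketch below computes the probability that an isovalue falls between the values at the corners of a single cell. It assumes the corner values are independent Gaussians, which is a simplification (a GPR posterior is generally correlated), and it is not the upper-bound method proposed in the paper. The names `normal_cdf` and `cell_crossing_probability` are hypothetical.

```python
import math


def normal_cdf(x, mean, std):
    """CDF of the normal distribution N(mean, std^2) evaluated at x."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


def cell_crossing_probability(level, means, stds):
    """Probability that `level` is crossed inside a cell.

    Assumption (not from the source): corner values are independent
    Gaussians. A crossing occurs unless every corner is below the level
    or every corner is above it.
    """
    p_all_below = 1.0
    p_all_above = 1.0
    for mean, std in zip(means, stds):
        p_below = normal_cdf(level, mean, std)
        p_all_below *= p_below
        p_all_above *= 1.0 - p_below
    return 1.0 - p_all_below - p_all_above


# Two corners centred on the level: each is below it with probability 0.5.
p_edge = cell_crossing_probability(0.0, [0.0, 0.0], [1.0, 1.0])

# Two corners far above the level: a crossing is very unlikely.
p_far = cell_crossing_probability(0.0, [10.0, 10.0], [1.0, 1.0])
```

Cells such as the second one, where the crossing probability is effectively zero, are the kind of region that an adaptive scheme could skip without reconstructing values.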

SPUS: A Lightweight and Parameter-Efficient Foundation Model for PDEs

Oct 01, 2025

Abstract: We introduce Small PDE U-Net Solver (SPUS), a compact and efficient foundation model (FM) designed as a unified neural operator for solving a wide range of partial differential equations (PDEs). Unlike existing state-of-the-art PDE FMs, which are primarily based on large, complex transformer architectures with high computational and parameter overhead, SPUS leverages a lightweight residual U-Net-based architecture that has been largely underexplored as a foundation model architecture in this domain. To enable effective learning in this minimalist framework, we utilize a simple yet powerful auto-regressive pretraining strategy that closely replicates the behavior of numerical solvers to learn the underlying physics. SPUS is pretrained on a diverse set of fluid dynamics PDEs and evaluated across 6 challenging unseen downstream PDEs spanning various physical systems. Experimental results demonstrate that SPUS, with its residual U-Net-based architecture, achieves state-of-the-art generalization on these downstream tasks while requiring significantly fewer parameters and minimal fine-tuning data, highlighting its potential as a highly parameter-efficient FM for solving diverse PDE systems.

A Partitioned Sparse Variational Gaussian Process for Fast, Distributed Spatial Modeling

Jul 22, 2025

Abstract: The next generation of Department of Energy supercomputers will be capable of exascale computation. For these machines, far more computation will be possible than can be saved to disk. As a result, users will be unable to rely on post-hoc access to data for uncertainty quantification and other statistical analyses, and there will be an urgent need for sophisticated machine learning algorithms that can be trained in situ. Algorithms deployed in this setting must be highly scalable, memory efficient, and capable of handling data that is distributed across nodes as spatially contiguous partitions. One suitable approach involves fitting a sparse variational Gaussian process (SVGP) model independently and in parallel to each spatial partition. The resulting model is scalable, efficient, and generally accurate, but it produces discontinuous response surfaces because neighboring models disagree at their shared boundary. In this paper, we extend this idea by allowing a small amount of communication between neighboring spatial partitions, which encourages better alignment of the local models, leading to smoother spatial predictions and a better fit in general. Due to our decentralized communication scheme, the proposed extension remains highly scalable and adds very little overhead in terms of computation (and none in terms of memory). We demonstrate this Partitioned SVGP (PSVGP) approach for the Energy Exascale Earth System Model (E3SM) and compare the results to the independent SVGP case.

Lost in OCR Translation? Vision-Based Approaches to Robust Document Retrieval

May 08, 2025

Abstract: Retrieval-Augmented Generation (RAG) has become a popular technique for enhancing the reliability and utility of Large Language Models (LLMs) by grounding responses in external documents. Traditional RAG systems rely on Optical Character Recognition (OCR) to first process scanned documents into text. However, even state-of-the-art OCR systems can introduce errors, especially in degraded or complex documents. Recent vision-language approaches, such as ColPali, propose direct visual embedding of documents, eliminating the need for OCR. This study presents a systematic comparison between a vision-based RAG system (ColPali) and more traditional OCR-based pipelines utilizing Llama 3.2 (90B) and Nougat OCR across varying document qualities. Beyond conventional retrieval accuracy metrics, we introduce a semantic answer evaluation benchmark to assess end-to-end question-answering performance. Our findings indicate that while vision-based RAG performs well on documents it has been fine-tuned on, OCR-based RAG is better able to generalize to unseen documents of varying quality. We highlight the key trade-offs between computational efficiency and semantic accuracy, offering practical guidance for RAG practitioners in selecting between OCR-dependent and vision-based document retrieval systems in production environments.

LLM-Assisted Translation of Legacy FORTRAN Codes to C++: A Cross-Platform Study

Apr 21, 2025

Abstract: Large Language Models (LLMs) are increasingly being leveraged for generating and translating scientific computer codes by both domain experts and non-experts. Fortran has served as one of the go-to programming languages in legacy high-performance computing (HPC) for scientific discoveries. Despite growing adoption, LLM-based code translation of legacy codebases has not been thoroughly assessed or quantified for its usability. Here, we studied the applicability of LLM-based translation of Fortran to C++ as a step towards building an agentic workflow using open-weight LLMs on two different computational platforms. We statistically quantified the compilation accuracy of the translated C++ codes, measured the similarity of the LLM-translated code to the human-translated C++ code, and statistically quantified the output similarity of the Fortran to C++ translation.

Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation

Jul 29, 2024

Abstract: The advent of large language models (LLMs) has significantly advanced the field of code translation, enabling automated translation between programming languages. However, these models often struggle with complex translation tasks due to inadequate contextual understanding. This paper introduces a novel approach that enhances code translation through Few-Shot Learning, augmented with retrieval-based techniques. By leveraging a repository of existing code translations, we dynamically retrieve the most relevant examples to guide the model in translating new code segments. Our method, based on Retrieval-Augmented Generation (RAG), substantially improves translation quality by providing contextual examples from which the model can learn in real time. We selected RAG over traditional fine-tuning methods due to its ability to utilize existing codebases or a locally stored corpus of code, which allows for dynamic adaptation to diverse translation tasks without extensive retraining. Extensive experiments on diverse datasets with open LLMs such as Starcoder, Llama3-70B Instruct, CodeLlama-34B Instruct, Granite-34B Code Instruct, and Mixtral-8x22B, as well as commercial LLMs like GPT-3.5 Turbo and GPT-4o, demonstrate our approach's superiority over traditional zero-shot methods, especially in translating between Fortran and C++. We also explored varying numbers of shots (i.e., examples provided during inference), specifically 1, 2, and 3, and different embedding models for RAG, including Nomic-Embed, Starencoder, and CodeBERT, to assess the robustness and effectiveness of our approach.
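
The retrieval step described in this abstract can be sketched roughly as follows: embed the query code, rank stored translation pairs by similarity, and place the top k pairs in the prompt as few-shot examples. This is a minimal sketch, not the authors' implementation. The token-count `embed` function is a toy stand-in for a real embedding model, and `CORPUS`, `retrieve_shots`, and `build_prompt` are hypothetical names introduced for illustration.

```python
import math
from collections import Counter


def embed(code):
    """Toy embedding: whitespace-token counts (stand-in for a real model)."""
    return Counter(code.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_shots(query, corpus, k):
    """Return the k (source, translation) pairs most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda pair: cosine(q, embed(pair[0])),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, shots):
    """Assemble a few-shot prompt: retrieved pairs first, then the query."""
    parts = ["Translate Fortran to C++."]
    for src, tgt in shots:
        parts.append(f"Fortran:\n{src}\nC++:\n{tgt}")
    parts.append(f"Fortran:\n{query}\nC++:")
    return "\n\n".join(parts)


# Hypothetical repository of existing translations.
CORPUS = [
    ("print *, x", "std::cout << x << std::endl;"),
    ("do i = 1, n\n  s = s + i\nend do",
     "for (int i = 1; i <= n; ++i) { s += i; }"),
    ("if (x > 0) then\n  y = 1\nend if", "if (x > 0) { y = 1; }"),
]

query = "do j = 1, m\n  t = t + j\nend do"
shots = retrieve_shots(query, CORPUS, k=1)
prompt = build_prompt(query, shots)
```

Changing `k` corresponds to varying the number of shots, and swapping `embed` for a learned code-embedding model changes only the ranking, not the prompt assembly.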

Explainable AI Integrated Feature Engineering for Wildfire Prediction

Apr 01, 2024

Abstract: Wildfires present intricate challenges for prediction, necessitating the use of sophisticated machine learning techniques for effective modeling \cite{jain2020review}. In our research, we conducted a thorough assessment of various machine learning algorithms for both classification and regression tasks relevant to predicting wildfires. We found that for classifying different types or stages of wildfires, the XGBoost model outperformed others in terms of accuracy and robustness. Meanwhile, the Random Forest regression model showed superior results in predicting the extent of wildfire-affected areas, excelling in both prediction error and explained variance. Additionally, we developed a hybrid neural network model that integrates numerical data and image information for simultaneous classification and regression. To gain deeper insights into the decision-making processes of these models and identify key contributing features, we utilized eXplainable Artificial Intelligence (XAI) techniques, including TreeSHAP, LIME, Partial Dependence Plots (PDP), and Gradient-weighted Class Activation Mapping (Grad-CAM). These interpretability tools shed light on the significance and interplay of various features, highlighting the complex factors influencing wildfire predictions. Our study not only demonstrates the effectiveness of specific machine learning models in wildfire-related tasks but also underscores the critical role of model transparency and interpretability in environmental science applications.

Exploring Music Genre Classification: Algorithm Analysis and Deployment Architecture

Sep 14, 2023

Abstract: Music genre classification has become increasingly critical with the advent of various streaming applications. Nowadays, we find it impossible to imagine using the artist's name and song title to search for music in a sophisticated music app. Classifying music correctly is always difficult because the information linked to music, such as region, artist, album, or non-album, is so variable. This paper presents a study on music genre classification using a combination of Digital Signal Processing (DSP) and Deep Learning (DL) techniques. A novel algorithm is proposed that utilizes both DSP and DL methods to extract relevant features from audio signals and classify them into various genres. The algorithm was tested on the GTZAN dataset and achieved high accuracy. An end-to-end deployment architecture is also proposed for integration into music-related applications. The performance of the algorithm is analyzed, and future directions for improvement are discussed. The proposed DSP- and DL-based algorithm and deployment architecture demonstrate a promising approach for music genre classification.

Dynamic Data Assimilation of MPAS-O and the Global Drifter Dataset

Jan 11, 2023

Abstract: In this study, we propose a new method for combining in situ buoy measurements with Earth system models (ESMs) to improve the accuracy of temperature predictions in the ocean. The technique utilizes the dynamics and modes identified in ESMs to improve the accuracy of buoy measurements while still preserving features such as seasonality. Using this technique, errors in localized temperature predictions made by the MPAS-O model can be corrected. We demonstrate that our approach improves accuracy compared to other interpolation and data assimilation methods. We apply our method to assimilate the Model for Prediction Across Scales Ocean component (MPAS-O) with the Global Drifter Program's in situ ocean buoy dataset.

IDLat: An Importance-Driven Latent Generation Method for Scientific Data

Aug 05, 2022

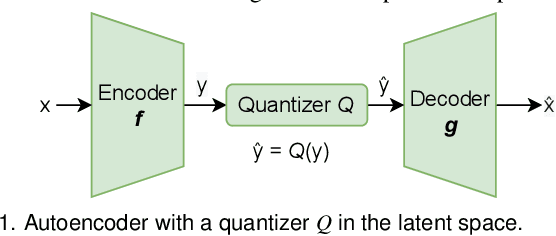

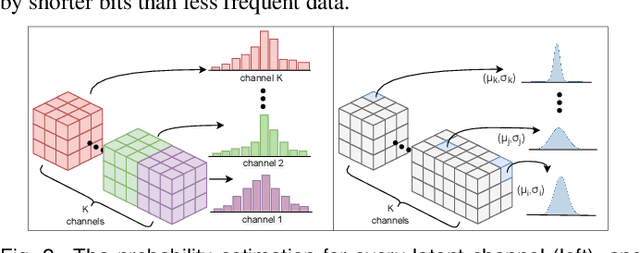

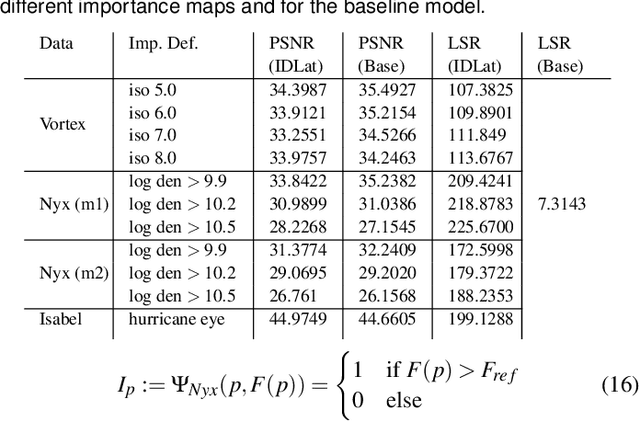

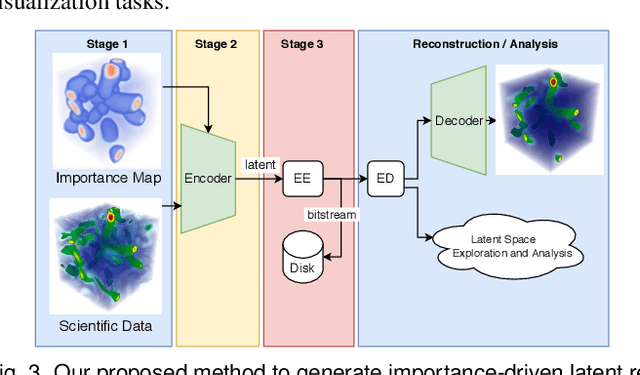

Abstract: Deep learning-based latent representations have been widely used for numerous scientific visualization applications such as isosurface similarity analysis, volume rendering, flow field synthesis, and data reduction, to name a few. However, existing latent representations are mostly generated from raw data in an unsupervised manner, which makes it difficult to incorporate domain interest to control the size of the latent representations and the quality of the reconstructed data. In this paper, we present a novel importance-driven latent representation to facilitate domain-interest-guided scientific data visualization and analysis. We utilize spatial importance maps to represent various scientific interests and take them as the input to a feature transformation network to guide latent generation. We further reduce the latent size with a lossless entropy encoding algorithm trained together with the autoencoder, improving storage and memory efficiency. We qualitatively and quantitatively evaluate the effectiveness and efficiency of latent representations generated by our method with data from multiple scientific visualization applications.
