Jiayi Xu

ZKBoost: Zero-Knowledge Verifiable Training for XGBoost

Feb 04, 2026

Abstract:Gradient boosted decision trees, particularly XGBoost, are among the most effective methods for tabular data. As deployment in sensitive settings increases, cryptographic guarantees of model integrity become essential. We present ZKBoost, the first zero-knowledge proof of training (zkPoT) protocol for XGBoost, enabling model owners to prove correct training on a committed dataset without revealing data or parameters. We make three key contributions: (1) a fixed-point XGBoost implementation compatible with arithmetic circuits, enabling instantiation of efficient zkPoT, (2) a generic template of zkPoT for XGBoost, which can be instantiated with any general-purpose ZKP backend, and (3) a vector oblivious linear evaluation (VOLE)-based instantiation resolving challenges in proving nonlinear fixed-point operations. Our fixed-point implementation matches standard XGBoost accuracy within 1% while enabling practical zkPoT on real-world datasets.
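To make the idea of a fixed-point, circuit-friendly XGBoost step concrete, the following is a minimal sketch and not the ZKBoost implementation. It encodes reals as scaled integers and evaluates the standard XGBoost leaf weight w = -G / (H + lambda) using integer operations only. The number of fractional bits (`FRAC_BITS`) and all function names are assumptions chosen for illustration.

```python
FRAC_BITS = 16          # assumed precision; not taken from the paper
SCALE = 1 << FRAC_BITS  # a real x is stored as round(x * SCALE)


def to_fixed(x: float) -> int:
    """Encode a real number as a scaled integer."""
    return round(x * SCALE)


def to_float(a: int) -> float:
    """Decode a scaled integer back to a real number."""
    return a / SCALE


def fx_mul(a: int, b: int) -> int:
    """Multiply two fixed-point values.

    The raw product carries 2 * FRAC_BITS fractional bits,
    so it is shifted back down (rounding toward -infinity).
    """
    return (a * b) >> FRAC_BITS


def fx_div(a: int, b: int) -> int:
    """Divide two fixed-point values.

    The numerator is pre-scaled so the quotient keeps FRAC_BITS
    fractional bits; Python's // rounds toward -infinity.
    """
    return (a * SCALE) // b


def leaf_weight(grad_sum: int, hess_sum: int, lam: int) -> int:
    """Standard XGBoost leaf weight w = -G / (H + lambda) on fixed-point inputs."""
    return fx_div(-grad_sum, hess_sum + lam)


if __name__ == "__main__":
    w = leaf_weight(to_fixed(-3.0), to_fixed(5.0), to_fixed(1.0))
    print(to_float(w))
```

Division and truncation are the kind of nonlinear fixed-point operations the abstract identifies as hard to prove in zero knowledge; in this sketch they are ordinary integer operations with no proof attached.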

Luminark: Training-free, Probabilistically-Certified Watermarking for General Vision Generative Models

Jan 03, 2026

Abstract:In this paper, we introduce Luminark, a training-free and probabilistically-certified watermarking method for general vision generative models. Our approach is built upon a novel watermark definition that leverages patch-level luminance statistics. Specifically, the service provider predefines a binary pattern together with corresponding patch-level thresholds. To detect a watermark in a given image, we evaluate whether the luminance of each patch surpasses its threshold and then verify whether the resulting binary pattern aligns with the target one. A simple statistical analysis demonstrates that the false positive rate of the proposed method can be effectively controlled, thereby ensuring certified detection. To enable seamless watermark injection across different paradigms, we leverage the widely adopted guidance technique as a plug-and-play mechanism and develop watermark guidance. This design enables Luminark to achieve generality across state-of-the-art generative models without compromising image quality. Empirically, we evaluate our approach on nine models spanning diffusion, autoregressive, and hybrid frameworks. Across all evaluations, Luminark consistently demonstrates high detection accuracy, strong robustness against common image transformations, and good performance on visual quality.
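The detection rule described above can be sketched roughly as follows. This is an illustrative reading of the abstract, not the authors' code: the function names, the use of the patch mean as the luminance statistic, and the null model (each patch of an unwatermarked image matches the target bit independently with probability 1/2) are all assumptions.

```python
from math import comb


def patch_bits(lum, patch, thresholds):
    """Return one bit per patch (row-major): 1 if the patch mean exceeds its threshold.

    lum        -- 2D list of per-pixel luminance values
    patch      -- side length of each square patch, in pixels
    thresholds -- 2D list with one threshold per patch
    """
    rows, cols = len(lum) // patch, len(lum[0]) // patch
    bits = []
    for r in range(rows):
        for c in range(cols):
            vals = [lum[r * patch + i][c * patch + j]
                    for i in range(patch) for j in range(patch)]
            mean = sum(vals) / len(vals)
            bits.append(1 if mean > thresholds[r][c] else 0)
    return bits


def binomial_tail(n, k, p=0.5):
    """P[X >= k] for X ~ Binomial(n, p): the chance of k or more matches by luck."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def detect(lum, patch, thresholds, pattern, min_matches):
    """Flag the image as watermarked if enough patch bits agree with the target pattern."""
    bits = patch_bits(lum, patch, thresholds)
    matches = sum(b == t for b, t in zip(bits, pattern))
    return matches >= min_matches, matches


if __name__ == "__main__":
    # Toy 4x4 luminance map split into four 2x2 patches.
    lum = [[0.9, 0.9, 0.1, 0.1],
           [0.9, 0.9, 0.1, 0.1],
           [0.1, 0.1, 0.9, 0.9],
           [0.1, 0.1, 0.9, 0.9]]
    thresholds = [[0.5, 0.5], [0.5, 0.5]]
    print(detect(lum, 2, thresholds, [1, 0, 0, 1], min_matches=4))
    print(binomial_tail(4, 4))
```

Under the assumed null model, choosing `min_matches` fixes an upper bound on the false positive rate via `binomial_tail`, which is one simple way the "controlled false positive rate" claim could be realized.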

Meta Lattice: Model Space Redesign for Cost-Effective Industry-Scale Ads Recommendations

Dec 15, 2025

Abstract:The rapidly evolving landscape of products, surfaces, policies, and regulations poses significant challenges for deploying state-of-the-art recommendation models at industry scale, primarily due to data fragmentation across domains and escalating infrastructure costs that hinder sustained quality improvements. To address these challenges, we propose Lattice, a recommendation framework centered around model space redesign that extends Multi-Domain, Multi-Objective (MDMO) learning beyond models and learning objectives. Lattice combines cross-domain knowledge sharing, data consolidation, model unification, distillation, and system optimizations to achieve significant improvements in both quality and cost-efficiency. Our deployment of Lattice at Meta has resulted in a 10% gain in revenue-driving top-line metrics, an 11.5% improvement in user satisfaction, and a 6% boost in conversion rate, with a 20% capacity saving.

Virtual Width Networks

Nov 17, 2025

Abstract:We introduce Virtual Width Networks (VWN), a framework that delivers the benefits of wider representations without incurring the quadratic cost of increasing the hidden size. VWN decouples representational width from backbone width, expanding the embedding space while keeping backbone compute nearly constant. In our large-scale experiment, an 8-times expansion accelerates optimization by over 2 times for next-token and 3 times for next-2-token prediction. The advantage amplifies over training as both the loss gap grows and the convergence-speedup ratio increases, showing that VWN is not only token-efficient but also increasingly effective with scale. Moreover, we identify an approximately log-linear scaling relation between virtual width and loss reduction, offering an initial empirical basis and motivation for exploring virtual-width scaling as a new dimension of large-model efficiency.

Synchro-Thermography: Monitoring ~10 mK Facial Temperature Changes with Heartbeat Referencing for Physiological Sensing

Jun 05, 2025

Abstract:Infrared thermography has gained interest as a tool for non-contact measurement of blood circulation and skin blood flow due to cardiac activity. In particular, blood vessels near the surface, such as those on the back of the hand, are well suited for visualization. However, standardized methodologies have not yet been established for areas such as the face and neck, where many blood vessels lie deeper beneath the surface and external stimulation for measurement could be harmful. Here we propose Synchro-Thermography for stable monitoring of facial temperature changes associated with heart rate variability. We conducted experiments with eight subjects and measured minute temperature changes with an amplitude of about 10 mK on the forehead and chin. The proposed method improves the temperature resolution by a factor of 2 or more, and can stably measure skin temperature changes caused by blood flow. This skin temperature change could be applied to physiological sensing, such as detecting blood flow changes due to injury or disease, or serve as an indicator of stress.

Deformable Registration Framework for Augmented Reality-based Surgical Guidance in Head and Neck Tumor Resection

Mar 11, 2025

Abstract:Head and neck squamous cell carcinoma (HNSCC) has one of the highest recurrence rates among solid malignancies. Recurrence rates can be reduced by improving positive margin localization. Frozen section analysis (FSA) of resected specimens is the gold standard for intraoperative margin assessment. However, because of the complex 3D anatomy and the significant shrinkage of resected specimens, accurate margin relocation from the specimen back onto the resection site based on FSA results remains challenging. We propose a novel deformable registration framework that uses both the pre-resection upper surface and the post-resection site of the specimen to incorporate thickness information into the registration process. The proposed method significantly improves target registration error (TRE), demonstrating enhanced adaptability to thicker specimens. In tongue specimens, the proposed framework improved TRE by up to 33% compared to prior deformable registration. Notably, tongue specimens exhibit complex 3D anatomies and hold the highest clinical significance compared to other head and neck specimens, such as buccal and skin specimens. We analyzed distinct deformation behaviors in different specimens, highlighting the need for tailored deformation strategies. To further aid intraoperative visualization, we also integrated this framework with an augmented reality-based auto-alignment system. The combined system can accurately and automatically overlay the deformed 3D specimen mesh with positive margin annotation onto the resection site. In a pilot study of the AR-guided framework involving two surgeons, the integrated system improved the surgeons' average target relocation error from 9.8 cm to 4.8 cm.

Weaver: Foundation Models for Creative Writing

Jan 30, 2024

Abstract:This work introduces Weaver, our first family of large language models (LLMs) dedicated to content creation. Weaver is pre-trained on a carefully selected corpus that focuses on improving the writing capabilities of large language models. We then fine-tune Weaver for creative and professional writing purposes and align it to the preferences of professional writers using a suite of novel methods for instruction data synthesis and LLM alignment, making it able to produce more human-like texts and follow more diverse instructions for content creation. The Weaver family consists of Weaver Mini (1.8B), Weaver Base (6B), Weaver Pro (14B), and Weaver Ultra (34B), which suit different applications and can be dynamically dispatched by a routing agent according to query complexity to balance response quality and computation cost. Evaluation on a carefully curated benchmark for assessing the writing capabilities of LLMs shows that Weaver models of all sizes outperform generalist LLMs several times larger than them. Notably, our most capable Weaver Ultra model surpasses GPT-4, a state-of-the-art generalist LLM, on various writing scenarios, demonstrating the advantage of training specialized LLMs for writing purposes. Moreover, Weaver natively supports retrieval-augmented generation (RAG) and function calling (tool usage). We present various use cases of these abilities for improving AI-assisted writing systems, including integration of external knowledge bases, tools, or APIs, and providing personalized writing assistance. Furthermore, we discuss and summarize guidelines and best practices for pre-training and fine-tuning domain-specific LLMs.

VMap: An Interactive Rectangular Space-filling Visualization for Map-like Vertex-centric Graph Exploration

May 31, 2023

Abstract:We present VMap, a map-like rectangular space-filling visualization, to perform vertex-centric graph exploration. Existing visualizations have limited support for quality optimization among rectangular aspect ratios, vertex-edge intersection, and data encoding accuracy. To tackle this problem, VMap integrates three novel components: (1) a desired-aspect-ratio (DAR) rectangular partitioning algorithm, (2) a two-stage rectangle adjustment algorithm, and (3) a simulated annealing based heuristic optimizer. First, to generate a rectangular space-filling layout of an input graph, we subdivide the 2D embedding of the graph into rectangles with optimization of rectangles' aspect ratios toward a desired aspect ratio. Second, to route graph edges between rectangles without vertex-edge occlusion, we devise a two-stage algorithm to adjust a rectangular layout to insert border space between rectangles. Third, to produce and arrange rectangles by considering multiple visual criteria, we design a simulated annealing based heuristic optimization to adjust vertices' 2D embedding to support trade-offs among aspect ratio quality and the encoding accuracy of vertices' weights and adjacency. We evaluated the effectiveness of VMap on both synthetic and application datasets. The resulting rectangular layout has better aspect ratio quality on synthetic data compared with the existing method for the rectangular partitioning of 2D points. On three real-world datasets, VMap achieved better encoding accuracy and attained faster generation speed compared with existing methods on graphs' rectangular layout generation. We further illustrate the usefulness of VMap for vertex-centric graph exploration through three case studies on visualizing social networks, representing academic communities, and displaying geographic information.

IDLat: An Importance-Driven Latent Generation Method for Scientific Data

Aug 05, 2022

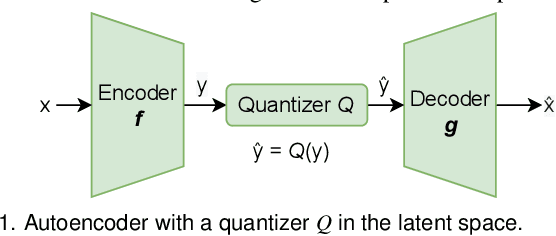

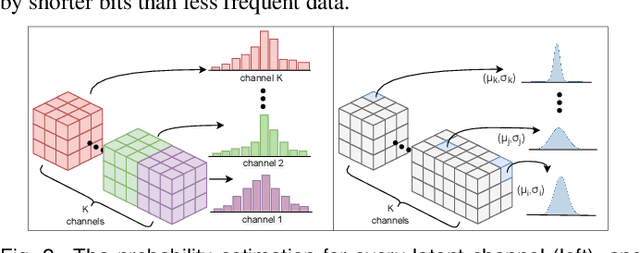

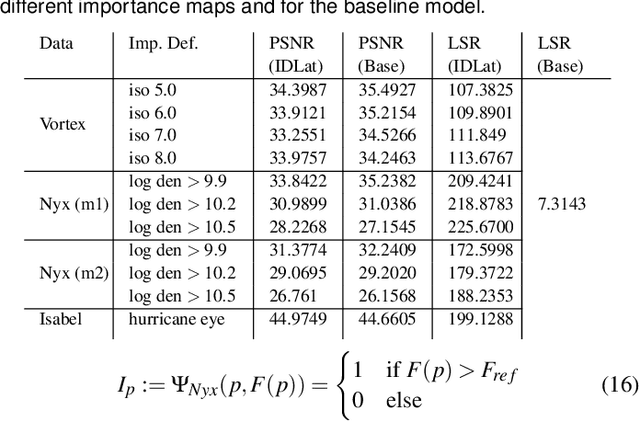

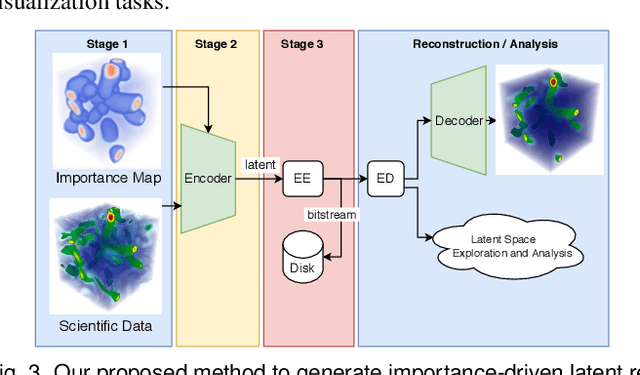

Abstract:Deep learning based latent representations have been widely used for numerous scientific visualization applications such as isosurface similarity analysis, volume rendering, flow field synthesis, and data reduction, just to name a few. However, existing latent representations are mostly generated from raw data in an unsupervised manner, which makes it difficult to incorporate domain interest to control the size of the latent representations and the quality of the reconstructed data. In this paper, we present a novel importance-driven latent representation to facilitate domain-interest-guided scientific data visualization and analysis. We utilize spatial importance maps to represent various scientific interests and take them as the input to a feature transformation network to guide latent generation. We further reduce the latent size with a lossless entropy encoding algorithm trained together with the autoencoder, improving storage and memory efficiency. We qualitatively and quantitatively evaluate the effectiveness and efficiency of latent representations generated by our method with data from multiple scientific visualization applications.

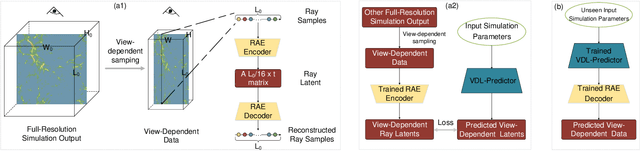

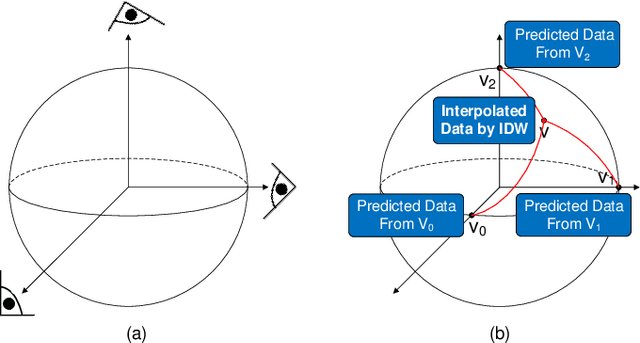

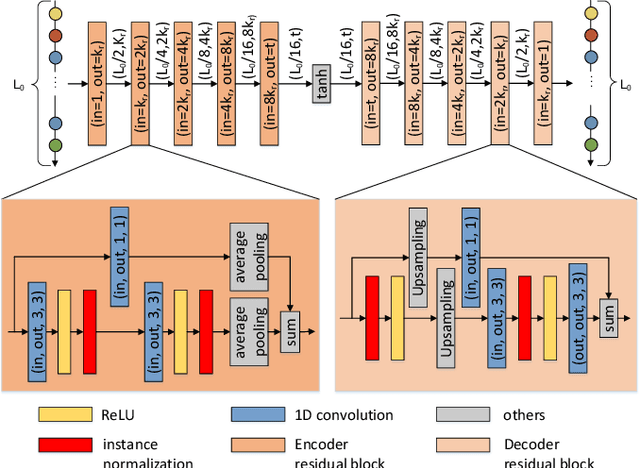

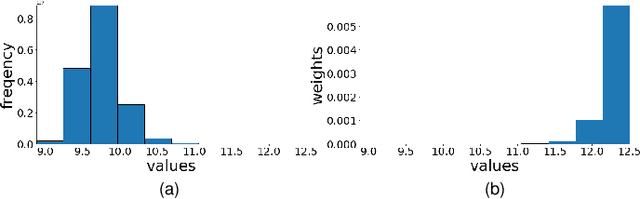

VDL-Surrogate: A View-Dependent Latent-based Model for Parameter Space Exploration of Ensemble Simulations

Jul 29, 2022

Abstract:We propose VDL-Surrogate, a view-dependent neural-network-latent-based surrogate model for parameter space exploration of ensemble simulations that allows high-resolution visualizations and user-specified visual mappings. Surrogate-enabled parameter space exploration allows domain scientists to preview simulation results without having to run a large number of computationally costly simulations. Limited by computational resources, however, existing surrogate models may not produce previews with sufficient resolution for visualization and analysis. To improve the efficient use of computational resources and support high-resolution exploration, we perform ray casting from different viewpoints to collect samples and produce compact latent representations. This latent encoding process reduces the cost of surrogate model training while maintaining the output quality. In the model training stage, we select viewpoints to cover the whole viewing sphere and train corresponding VDL-Surrogate models for the selected viewpoints. In the model inference stage, we predict the latent representations at previously selected viewpoints and decode the latent representations to data space. For any given viewpoint, we make interpolations over decoded data at selected viewpoints and generate visualizations with user-specified visual mappings. We show the effectiveness and efficiency of VDL-Surrogate in cosmological and ocean simulations with quantitative and qualitative evaluations. Source code is publicly available at https://github.com/trainsn/VDL-Surrogate.
