"facial recognition": models, code, and papers

Human Face Recognition from Part of a Facial Image based on Image Stitching

Mar 10, 2022

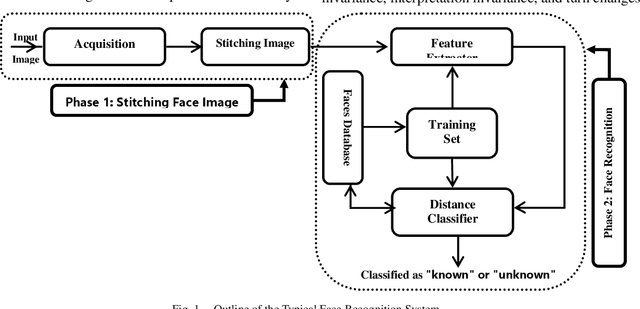

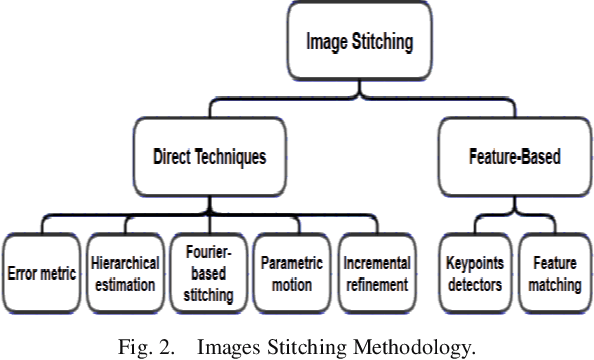

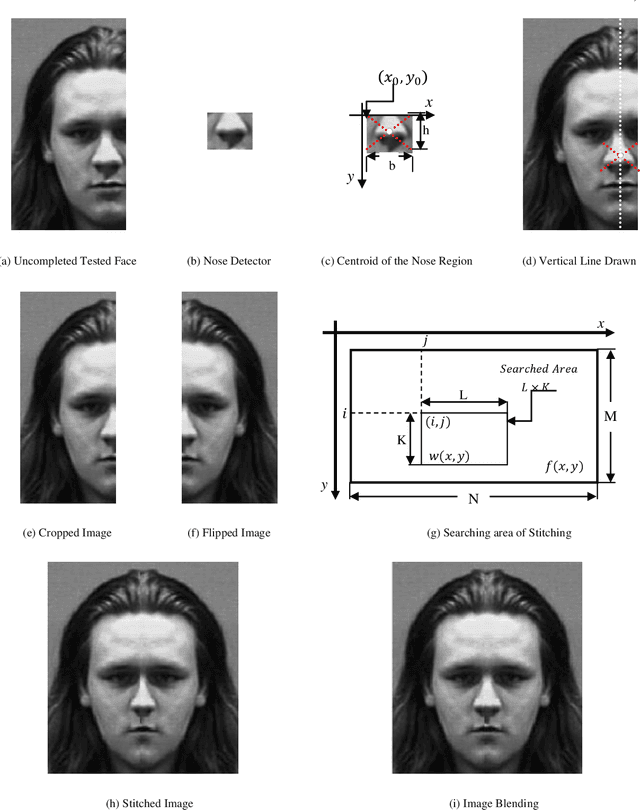

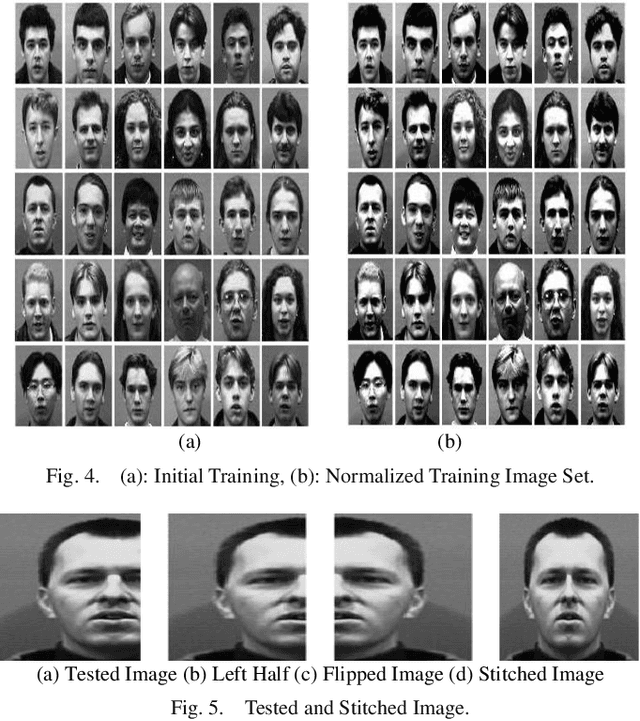

Most current face recognition techniques require the full face of the person to be recognized, which is difficult to achieve in practice: the person may appear with only part of the face visible, so the missing part must be predicted. Most current prediction approaches rely on image interpolation, which does not give reliable results, especially when the missing part is large. In this work, we adopt face stitching, completing the missing part with a flipped copy of the visible part, relying on the fact that the human face is symmetric in most cases. To build a complete model, two face recognition methods, Eigenfaces and geometrical methods, were used to demonstrate the efficiency of the algorithm. Image stitching is the process in which distinct photographic images are combined to make a complete scene or a high-resolution image; several images are integrated to form a wide-angle panoramic image. The quality of image stitching is determined by calculating the similarity between the stitched image and the original images and by the presence of seam lines in the stitched image. The Eigenfaces approach uses PCA to reduce the dimensionality of the feature vector. It provides an effective way of discovering the lower-dimensional space and, in addition to enabling the proposed algorithm to recognize the face, ensures fast and effective face classification. The feature extraction phase is followed by the classifier phase.
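The flipping step described in the abstract can be illustrated with a minimal sketch. It assumes the visible part is exactly the left half of a grayscale image stored as nested lists; the function name `complete_face_by_mirroring` and the toy pixel values are hypothetical, and the paper's actual pipeline (alignment, seam handling, recognition) is not reproduced here.

```python
def complete_face_by_mirroring(left_half):
    """Rebuild a full-width image from its left half.

    `left_half` is a list of rows; each row is a list of grayscale values.
    Assumption: the face is symmetric about the right edge of `left_half`.
    """
    full = []
    for row in left_half:
        mirrored = row[::-1]         # flip the visible half horizontally
        full.append(row + mirrored)  # stitch the visible half to its mirror
    return full


# Toy 3x2 "half face" with arbitrary intensities.
half = [
    [10, 20],
    [30, 40],
    [50, 60],
]
full_face = complete_face_by_mirroring(half)
for row in full_face:
    print(row)
```

Each output row is a palindrome, which is exactly the symmetry assumption the method relies on; a real face would need its midline located before such a flip makes sense.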

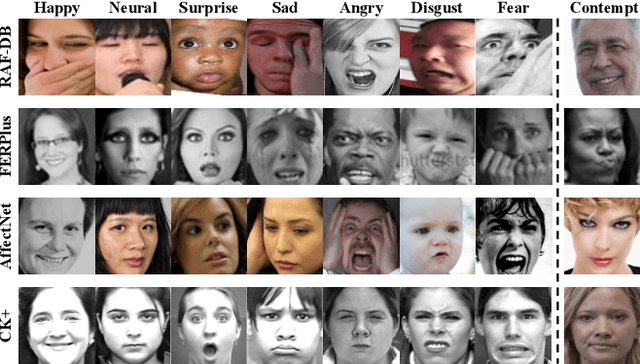

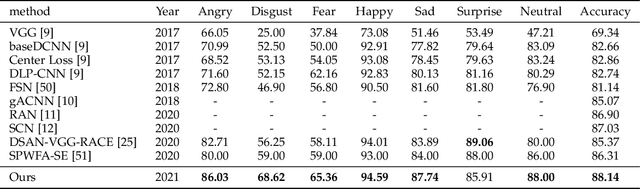

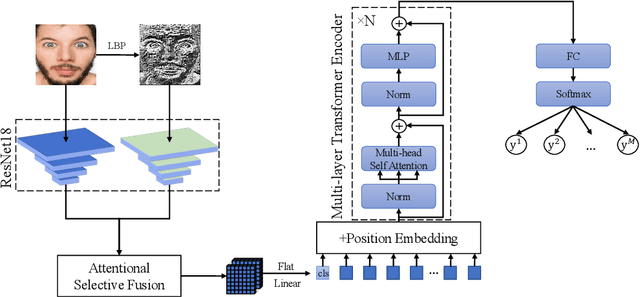

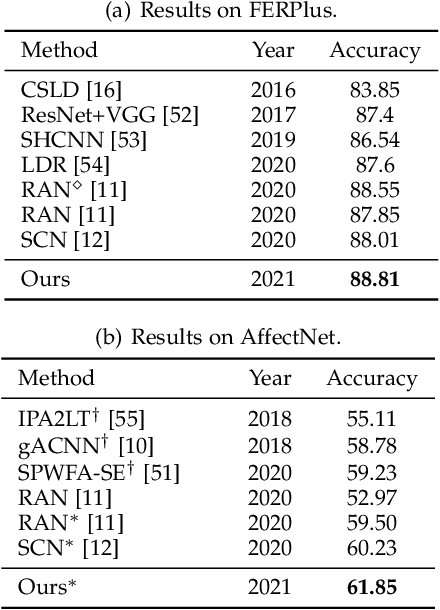

Robust Facial Expression Recognition with Convolutional Visual Transformers

Mar 31, 2021

Facial Expression Recognition (FER) in the wild is extremely challenging due to occlusions, variant head poses, face deformation and motion blur under unconstrained conditions. Although substantial progress has been made in automatic FER in the past few decades, previous studies are mainly designed for lab-controlled FER. Real-world occlusions, variant head poses and other issues increase the difficulty of FER because of information-deficient regions and complex backgrounds. Unlike previous methods based purely on CNNs, we argue that it is feasible and practical to translate facial images into sequences of visual words and perform expression recognition from a global perspective. Therefore, we propose Convolutional Visual Transformers to tackle FER in the wild in two main steps. First, we propose an attentional selective fusion (ASF) for leveraging the feature maps generated by two-branch CNNs. The ASF captures discriminative information by fusing multiple features with global-local attention. The fused feature maps are then flattened and projected into sequences of visual words. Second, inspired by the success of Transformers in natural language processing, we propose to model relationships between these visual words with global self-attention. The proposed method is evaluated on three public in-the-wild facial expression datasets (RAF-DB, FERPlus and AffectNet). Under the same settings, extensive experiments demonstrate that our method shows superior performance over other methods, setting a new state of the art on RAF-DB with 88.14%, FERPlus with 88.81% and AffectNet with 61.85%. We also conduct cross-dataset evaluation on CK+ to show the generalization capability of the proposed method.
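The two ideas named in the abstract, flattening a feature map into a sequence of visual words and relating those words with global self-attention, can be sketched in plain Python. This is a simplified illustration, not the paper's architecture: it assumes identity query/key/value projections, a single head, and a hand-written toy feature map, and it omits the ASF fusion entirely.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def flatten_feature_map(fmap):
    """Turn an H x W x C feature map into a sequence of H*W tokens of size C."""
    return [cell for row in fmap for cell in row]


def self_attention(tokens):
    """Scaled dot-product self-attention (assumption: identity Q/K/V projections)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Each output token is a weighted average of all tokens.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


# Toy 2 x 2 feature map with 2 channels.
fmap = [[[1.0, 0.0], [0.0, 1.0]],
        [[1.0, 1.0], [0.5, 0.5]]]
tokens = flatten_feature_map(fmap)
attended = self_attention(tokens)
```

Because every output token mixes information from all positions, a region hidden by occlusion can still be influenced by the visible regions, which is the "global perspective" the abstract refers to.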

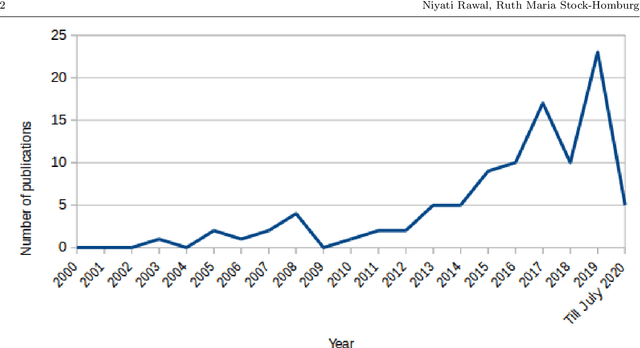

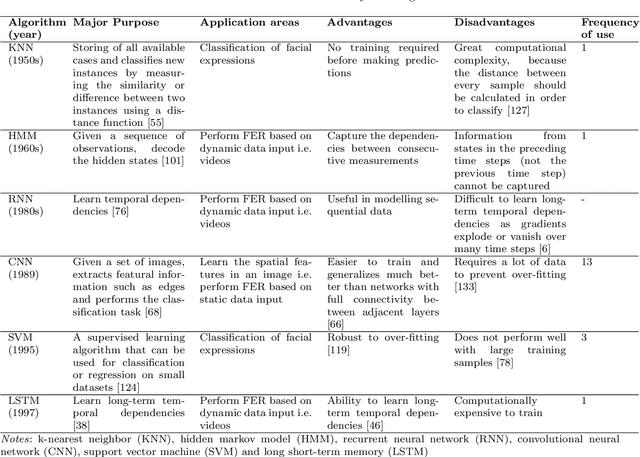

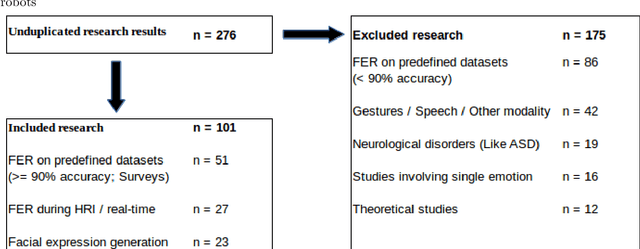

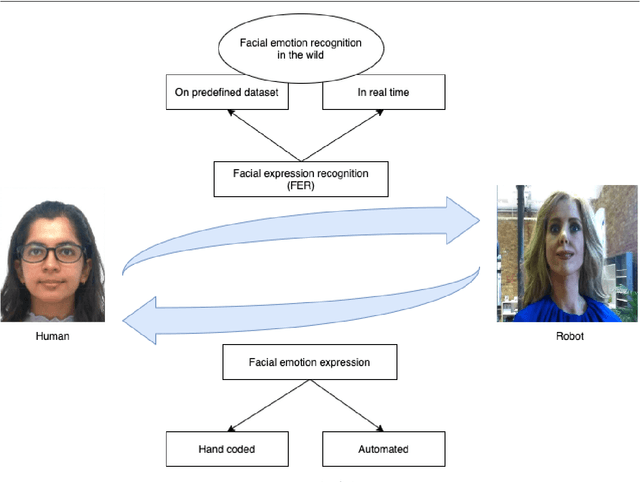

Facial emotion expressions in human-robot interaction: A survey

Mar 12, 2021

Facial expressions are an ideal means of communicating one's emotions or intentions to others. This overview focuses on human facial expression recognition as well as robotic facial expression generation. For human facial expression recognition, both recognition on predefined datasets and recognition in real time are covered. For robotic facial expression generation, both hand-coded and automated methods are covered, i.e., methods in which a robot's facial expressions are generated by moving its features (eyes, mouth) either through hand coding or automatically using machine learning techniques. Plenty of studies already achieve high accuracy for emotion expression recognition on predefined datasets, but the accuracy of facial expression recognition in real time is comparatively lower. For expression generation in robots, while most robots are capable of making basic facial expressions, few studies enable robots to do so automatically.

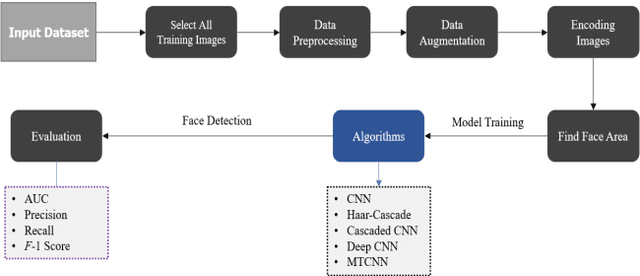

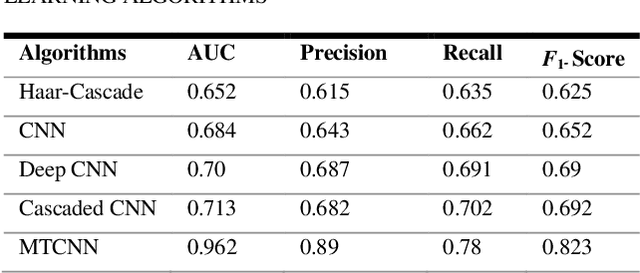

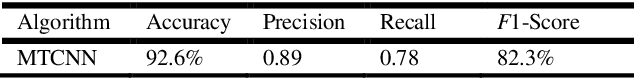

Convolutional Neural Network Based Partial Face Detection

Jun 29, 2022

Due to the rapid growth of artificial intelligence, machine learning technology is being used in various areas of our day-to-day life. There are many scenarios in which a simple crime could be prevented before it happens, or the person responsible for it could be found. The face is a distinctive feature that lets us easily differentiate humans from other species, and it also plays a significant role in distinguishing one human from another. One problem with this critical feature occurs often nowadays: when a camera is pointed at a person, it cannot detect the face, and the result is a poor image. Similarly, when a robbery takes place where a security camera is installed, the robber's identity is almost indistinguishable because of the low-quality camera. Developing a good face detection algorithm reduces hardware cost, and focusing on that area is not expensive. Facial recognition, widget control, and similar applications depend on detecting the face correctly. This study aims to create and enhance a machine learning model that correctly recognizes faces. A total of 627 data samples were collected from the faces of different Bangladeshi people at four angles. Five machine learning approaches, CNN, Haar Cascade, Cascaded CNN, Deep CNN, and MTCNN, were implemented to find the best accuracy on our dataset. Among these models, the Multi-Task Convolutional Neural Network (MTCNN) achieved the best accuracy, 96.2%, on the training data.

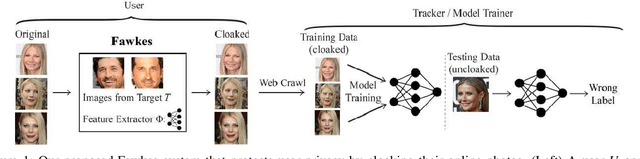

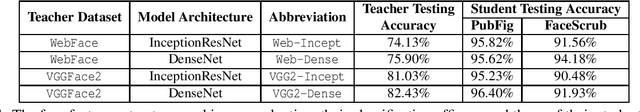

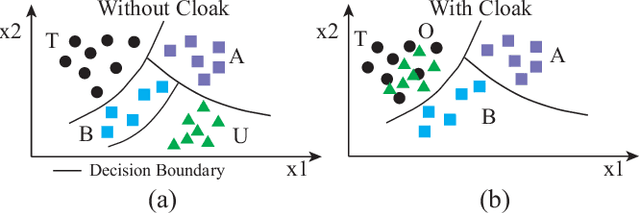

Fawkes: Protecting Personal Privacy against Unauthorized Deep Learning Models

Feb 19, 2020

Today's proliferation of powerful facial recognition models poses a real threat to personal privacy. As Clearview.ai demonstrated, anyone can canvass the Internet for data and train highly accurate facial recognition models of us without our knowledge. We need tools to protect ourselves from unauthorized facial recognition systems and their numerous potential misuses. Unfortunately, work in related areas is limited in practicality and effectiveness. In this paper, we propose Fawkes, a system that allows individuals to inoculate themselves against unauthorized facial recognition models. Fawkes achieves this by helping users add imperceptible pixel-level changes (we call them "cloaks") to their own photos before publishing them online. When collected by a third-party "tracker" and used to train facial recognition models, these "cloaked" images produce functional models that consistently misidentify the user. We demonstrate experimentally that Fawkes provides 95+% protection against user recognition regardless of how trackers train their models. Even when clean, uncloaked images are "leaked" to the tracker and used for training, Fawkes can still maintain an 80+% protection success rate. In fact, we perform real experiments against today's state-of-the-art facial recognition services and achieve 100% success. Finally, we show that Fawkes is robust against a variety of countermeasures that try to detect or disrupt cloaks.

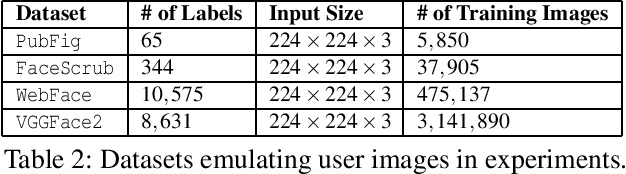

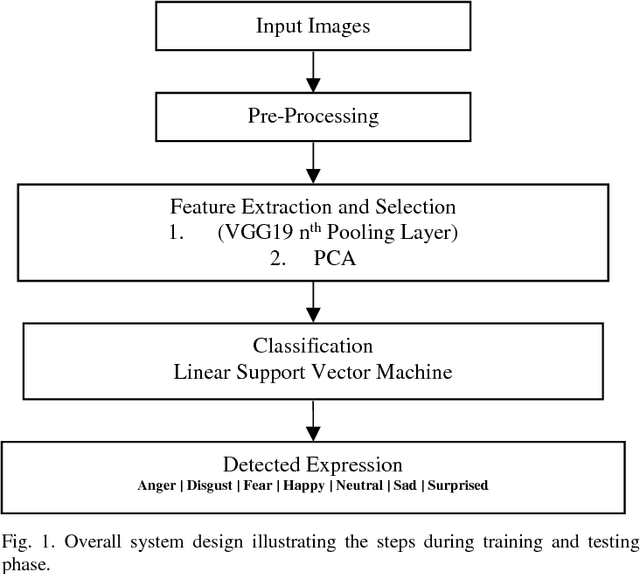

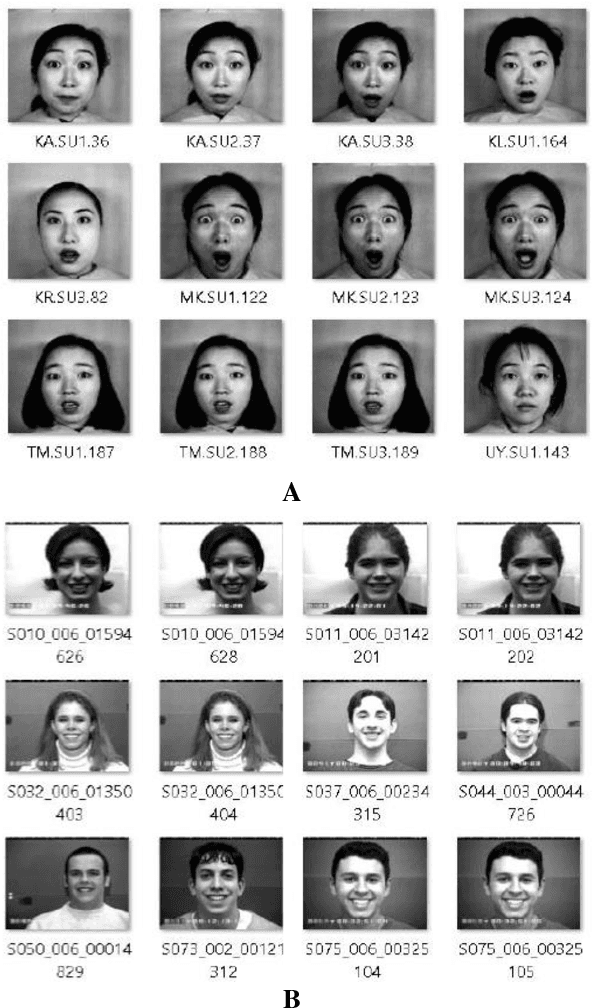

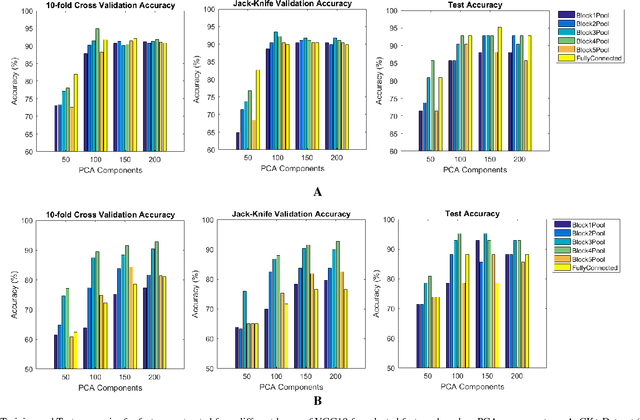

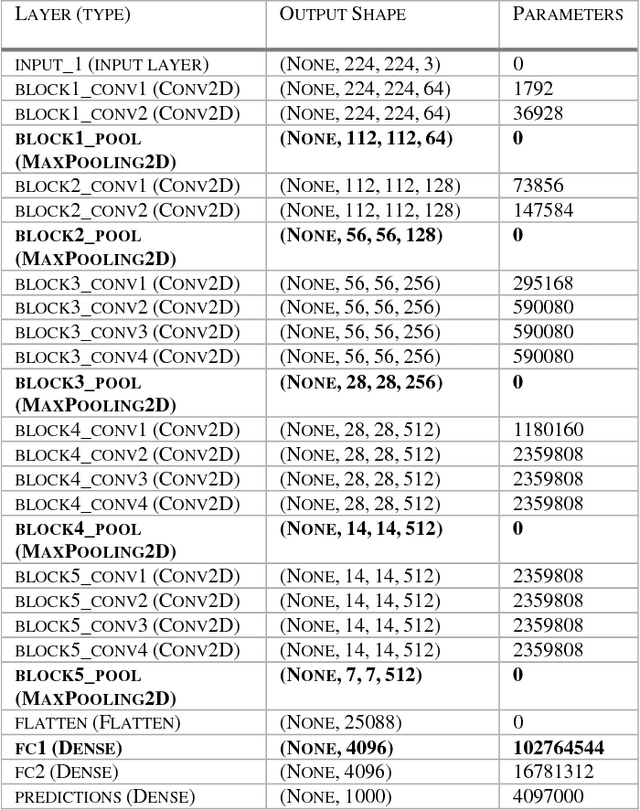

Pre-Trained Convolutional Neural Network Features for Facial Expression Recognition

Dec 16, 2018

Facial expression recognition has been an active area in computer vision, with applications including animation, social robots, and personalized banking. In this study, we explore the problem of image classification for detecting facial expressions based on features extracted from convolutional neural networks pre-trained on the ImageNet database. Features are extracted and passed to a linear Support Vector Machine for classification. All experiments are performed on two publicly available datasets, JAFFE and CK+. The results show that representations learned by pre-trained networks for a task such as object recognition can be transferred and used for facial expression recognition. Furthermore, for a small dataset, using features from earlier layers of the VGG19 network provides better classification accuracy. Accuracies of 92.26% and 92.86% were achieved for the CK+ and JAFFE datasets respectively.

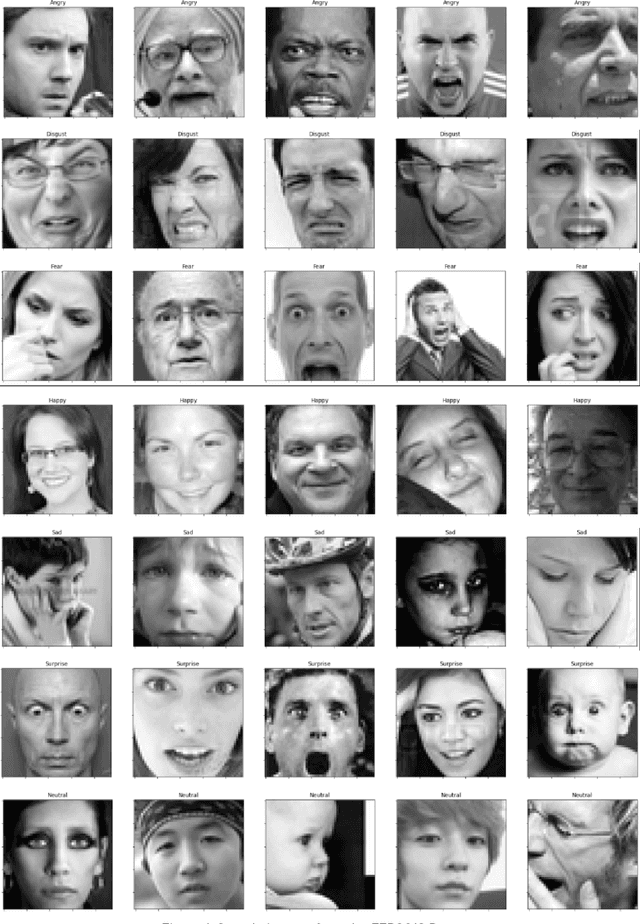

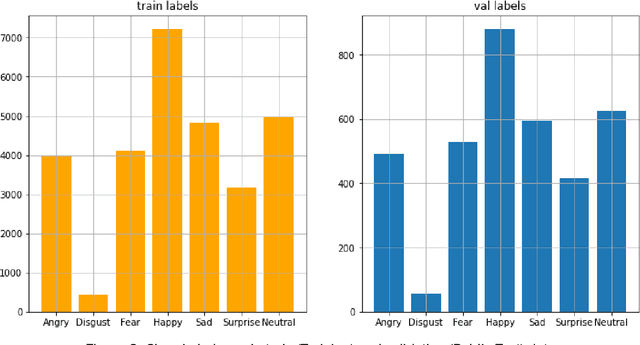

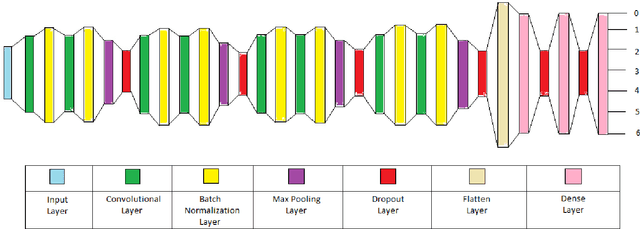

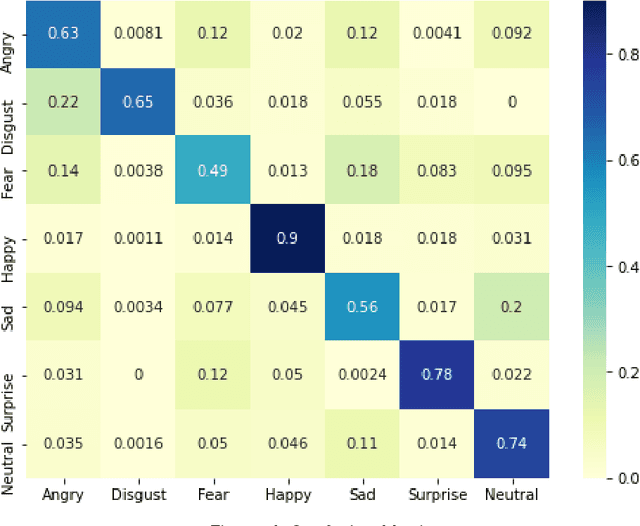

Facial Expressions Recognition with Convolutional Neural Networks

Jul 19, 2021

Over the centuries, humans have developed and acquired a number of ways to communicate, but hardly any of them is as natural and instinctive as facial expressions. Meanwhile, neural networks have taken the world by storm, and unsurprisingly the area of computer vision and the problem of facial expression recognition have not remained untouched. Although a wide range of techniques have been applied, achieving extremely high accuracy and building highly robust FER systems remains a challenge due to heterogeneous details in human faces. In this paper, we take a deep dive into implementing a system for facial expression recognition (FER) by leveraging neural networks, and more specifically, Convolutional Neural Networks (CNNs). We apply the fundamental concepts of deep learning and computer vision to various architectures, fine-tune their hyperparameters, experiment with various optimization methods, and demonstrate a state-of-the-art single-network accuracy of 70.10% on the FER2013 dataset without using any additional training data.

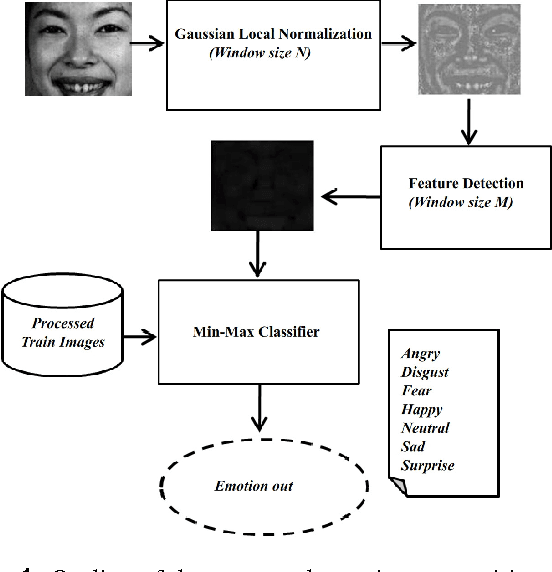

Facial emotion recognition using min-max similarity classifier

Jan 01, 2018

Recognition of human emotions from imaging templates is useful in a wide variety of human-computer interaction and intelligent systems applications. However, automatic recognition of facial expressions using image template matching techniques suffers from the natural variability of facial features and recording conditions. Despite the progress achieved in facial emotion recognition in recent years, an effective and computationally simple feature selection and classification technique for emotion recognition is still an open problem. In this paper, we propose an efficient and straightforward facial emotion recognition algorithm to reduce the problem of inter-class pixel mismatch during classification. The proposed method applies pixel normalization to remove intensity offsets, followed by a Min-Max metric in a nearest neighbor classifier that is capable of suppressing feature outliers. The results indicate an improvement in recognition performance from 92.85% to 98.57% for the proposed Min-Max classification method when tested on the JAFFE database. The proposed emotion recognition technique outperforms existing template matching methods.
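A rough sketch of a nearest neighbor classifier with a min-max similarity follows. It assumes one common definition of min-max similarity (the sum of element-wise minima divided by the sum of element-wise maxima) and a simple rescaling to [0, 1] as the normalization step; the abstract does not give the paper's exact formulas, so both choices, the function names, and the toy templates are illustrative assumptions.

```python
def normalize(pixels):
    """Rescale pixels to [0, 1] to remove intensity offset (assumed scheme)."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0.0 for _ in pixels]  # constant image carries no pattern
    return [(p - lo) / (hi - lo) for p in pixels]


def min_max_similarity(a, b):
    """Sum of element-wise minima over sum of element-wise maxima (assumed form)."""
    num = sum(min(x, y) for x, y in zip(a, b))
    den = sum(max(x, y) for x, y in zip(a, b))
    return 1.0 if den == 0 else num / den


def classify(query, templates):
    """Return the label of the template most similar to the query."""
    q = normalize(query)
    best_label, _ = max(
        ((label, min_max_similarity(q, normalize(pixels))) for label, pixels in templates),
        key=lambda pair: pair[1],
    )
    return best_label


# Toy 4-pixel templates; the query is a brighter copy of the "happy" pattern.
templates = [("happy", [10, 200, 10, 200]), ("sad", [200, 10, 200, 10])]
query = [60, 250, 70, 240]
predicted = classify(query, templates)
print(predicted)
```

The ratio bounds the influence of any single pixel, since one large value can raise the denominator but never the numerator beyond the smaller of the two inputs, which is one way to read the abstract's claim about suppressing feature outliers.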

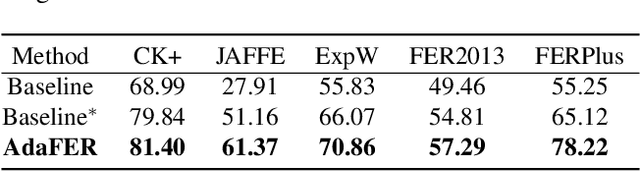

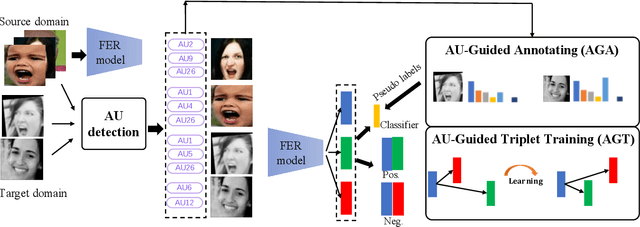

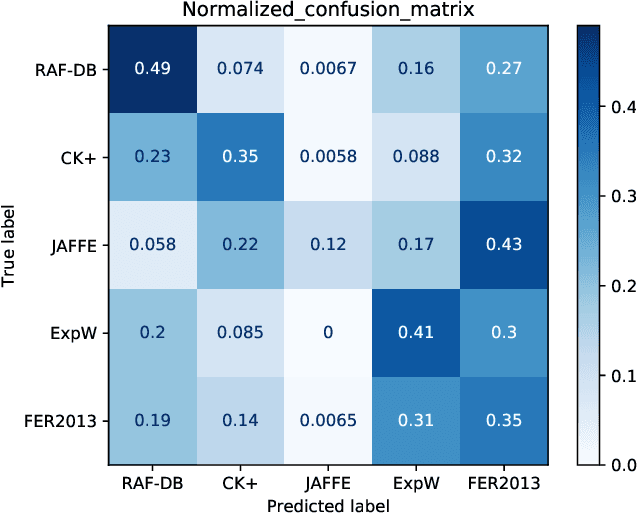

AU-Guided Unsupervised Domain Adaptive Facial Expression Recognition

Dec 18, 2020

Domain diversity, including inconsistent annotation and varied image collection conditions, inevitably exists among different facial expression recognition (FER) datasets, which poses an evident challenge for adapting an FER model trained on one dataset to another. Recent works mainly focus on domain-invariant deep feature learning with adversarial learning mechanisms, ignoring the sibling task of facial action unit (AU) detection, which has made great progress. Considering that AUs objectively determine facial expressions, this paper proposes an AU-guided unsupervised Domain Adaptive FER (AdaFER) framework. In AdaFER, we first leverage an advanced model for AU detection on both the source and target domains. Then, we compare the AU results to perform AU-guided annotating, i.e., target faces that share the same AUs as source faces inherit the labels from the source domain. Meanwhile, to achieve domain-invariant compact features, we use AU-guided triplet training, which randomly collects anchor-positive-negative triplets on both domains with AUs. We conduct extensive experiments on several popular benchmarks and show that AdaFER achieves state-of-the-art results on all the benchmarks.
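The label-inheritance rule and the triplet objective mentioned in the abstract can be sketched as follows. This is a simplified reading, not AdaFER itself: it assumes AU detections are already available as sets of AU numbers, matches only on exact set equality, and uses a standard squared-distance triplet loss with a hypothetical margin of 1.0. The AU sets in the example are illustrative.

```python
def au_guided_labels(source, target_aus):
    """Assign pseudo-labels to target faces from source faces with identical AUs.

    `source` is a list of (frozenset of AU ids, label) pairs.
    `target_aus` is a list of frozensets. Unmatched targets get None.
    """
    lookup = {}
    for aus, label in source:
        lookup.setdefault(aus, label)  # keep the first label seen per AU set
    return [lookup.get(aus) for aus in target_aus]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: pull the positive closer than the negative by `margin`."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)


# Illustrative AU sets: AU6 + AU12 for a smile, AU1 + AU4 + AU15 for sadness.
source = [(frozenset({6, 12}), "happy"), (frozenset({1, 4, 15}), "sad")]
target = [frozenset({12, 6}), frozenset({4, 5, 7, 23})]
pseudo = au_guided_labels(source, target)
print(pseudo)

easy = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 0.0])
hard = triplet_loss([0.0, 0.0], [2.0, 0.0], [1.0, 0.0])
```

In this sketch the second target face stays unlabeled because no source face has the same AU set; how the paper treats such faces is not stated in the abstract.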

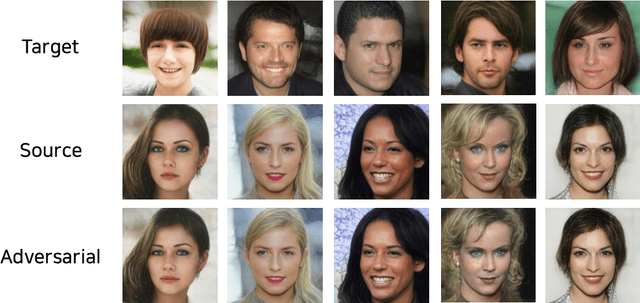

Unrestricted Black-box Adversarial Attack Using GAN with Limited Queries

Aug 24, 2022

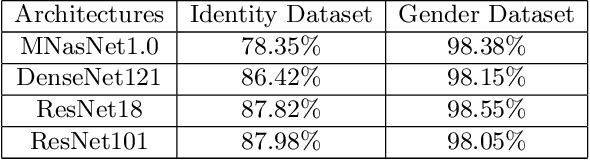

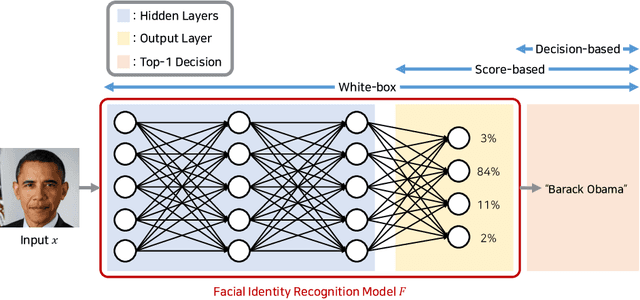

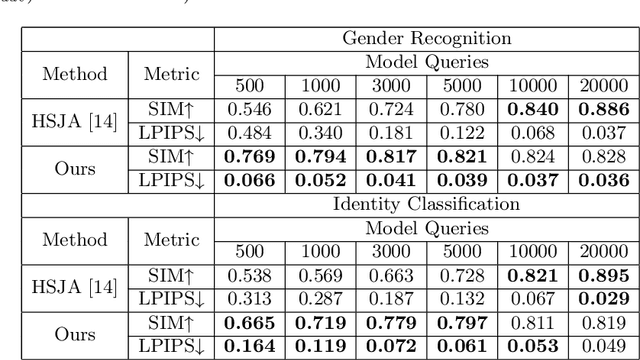

Adversarial examples are inputs intentionally generated to fool a deep neural network. Recent studies have proposed unrestricted adversarial attacks that are not norm-constrained. However, previous unrestricted attack methods are still limited in their ability to fool real-world applications in a black-box setting. In this paper, we present a novel method for generating unrestricted adversarial examples using a GAN, where an attacker can only access the top-1 final decision of a classification model. Our method, Latent-HSJA, efficiently leverages the advantages of a decision-based attack in the latent space and successfully manipulates the latent vectors to fool the classification model. With extensive experiments, we demonstrate that our proposed method is efficient in evaluating the robustness of classification models with limited queries in a black-box setting. First, we demonstrate that our targeted attack method is query-efficient in producing unrestricted adversarial examples for a facial identity recognition model that contains 307 identities. Then, we demonstrate that the proposed method can also successfully attack a real-world celebrity recognition service.
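To make the "top-1 decision only" setting concrete, here is a sketch of one building block that decision-based attacks commonly use: a binary search toward the decision boundary, performed here between two latent vectors. It is not Latent-HSJA itself. The generator and classifier are toy stand-ins (`toy_generator`, `toy_top1`), and all names and values are hypothetical.

```python
def toy_generator(z):
    """Stand-in for a GAN generator (assumption: identity mapping)."""
    return z


def toy_top1(image):
    """Stand-in black-box classifier that returns only its top-1 label."""
    return "target" if sum(image) > 1.0 else "source"


def blend(z_a, z_b, t):
    """Linear interpolation between two latent vectors."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


def boundary_search(z_source, z_target, target_label, generator, top1, steps=20):
    """Find the smallest blend toward z_target still classified as target_label.

    Uses one classifier query per step; only the returned label is inspected.
    """
    lo, hi = 0.0, 1.0  # invariant: blend at `hi` is classified as target_label
    queries = 0
    for _ in range(steps):
        mid = (lo + hi) / 2
        queries += 1
        if top1(generator(blend(z_source, z_target, mid))) == target_label:
            hi = mid  # still the target class: move closer to the source
        else:
            lo = mid  # crossed the boundary: back off
    return hi, queries


z_source, z_target = [0.0, 0.0], [2.0, 2.0]
t, used = boundary_search(z_source, z_target, "target", toy_generator, toy_top1)
z_adv = blend(z_source, z_target, t)
```

The resulting `z_adv` is the point on the interpolation path closest to the source latent that the toy classifier still labels as the target, and the query count grows only linearly with the number of search steps.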
