Time Series Analysis

Time series analysis comprises statistical methods for analyzing a sequence of data points collected over an interval of time to identify interesting patterns and trends.

Papers and Code

Towards an Introspective Dynamic Model of Globally Distributed Computing Infrastructures

Jun 24, 2025

Large-scale scientific collaborations like ATLAS, Belle II, CMS, DUNE, and others involve hundreds of research institutes and thousands of researchers spread across the globe. These experiments generate petabytes of data, with volumes soon expected to reach exabytes. Consequently, there is a growing need for computation, including structured data processing from raw data to consumer-ready derived data, extensive Monte Carlo simulation campaigns, and a wide range of end-user analysis. To manage these computational and storage demands, centralized workflow and data management systems are implemented. However, decisions regarding data placement and payload allocation are often made disjointly and via heuristic means. A significant obstacle in adopting more effective heuristic or AI-driven solutions is the absence of a quick and reliable introspective dynamic model to evaluate and refine alternative approaches. In this study, we aim to develop such an interactive system using real-world data. By examining job execution records from the PanDA workflow management system, we have pinpointed key performance indicators such as queuing time, error rate, and the extent of remote data access. The dataset includes five months of activity. Additionally, we are creating a generative AI model to simulate time series of payloads, which incorporate visible features like category, event count, and submitting group, as well as hidden features like the total computational load, derived from existing PanDA records and computing site capabilities. These hidden features, which are not visible to job allocators, whether heuristic or AI-driven, influence factors such as queuing times and data movement.

Forecasting Multivariate Urban Data via Decomposition and Spatio-Temporal Graph Analysis

May 28, 2025

The forecasting of multivariate urban data presents a complex challenge due to the intricate dependencies between various urban metrics such as weather, air pollution, carbon intensity, and energy demand. This paper introduces a novel multivariate time-series forecasting model that utilizes advanced Graph Neural Networks (GNNs) to capture spatial dependencies among different time-series variables. The proposed model incorporates a decomposition-based preprocessing step, isolating trend, seasonal, and residual components to enhance the accuracy and interpretability of forecasts. By leveraging the dynamic capabilities of GNNs, the model effectively captures interdependencies and improves the forecasting performance. Extensive experiments on real-world datasets, including electricity usage, weather metrics, carbon intensity, and air pollution data, demonstrate the effectiveness of the proposed approach across various forecasting scenarios. The results highlight the potential of the model to optimize smart infrastructure systems, contributing to energy-efficient urban development and enhanced public well-being.
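The decomposition step mentioned in this abstract can be illustrated with a classical additive decomposition. The sketch below is not the paper's preprocessing code; it assumes an additive model (series = trend + seasonal + residual), a known seasonal period, and a centered moving average as the trend estimator. The function name `decompose_additive` and the synthetic series are hypothetical.

```python
import math


def decompose_additive(series, period):
    """Split a series into trend, seasonal, and residual parts (additive model)."""
    n = len(series)
    half = period // 2

    # Trend: centered moving average over one full period.
    # Edge positions without a full window are left as None.
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2 == 0:
            # Even period: the window holds period + 1 points, so the two
            # end points get half weight to keep the average centered.
            total = 0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]
        else:
            total = sum(window)
        trend[t] = total / period

    # Seasonal: mean detrended value for each position in the cycle,
    # shifted so the seasonal component averages to zero over one period.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    means = [sum(b) / len(b) for b in buckets]
    offset = sum(means) / period
    seasonal = [means[t % period] - offset for t in range(n)]

    # Residual: whatever trend and seasonality do not explain.
    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual


# Synthetic example (assumed data): linear trend plus a 12-step cycle.
period = 12
series = [0.5 * t + 10.0 * math.sin(2 * math.pi * t / period) for t in range(48)]
trend, seasonal, residual = decompose_additive(series, period)
```

On this noise-free synthetic series the moving average recovers the linear trend and the residual is numerically zero wherever the trend is defined. On real urban data the residual would carry the irregular variation that a downstream forecaster has to model.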

One Rank at a Time: Cascading Error Dynamics in Sequential Learning

May 28, 2025

Sequential learning -- where complex tasks are broken down into simpler, hierarchical components -- has emerged as a paradigm in AI. This paper views sequential learning through the lens of low-rank linear regression, focusing specifically on how errors propagate when learning rank-1 subspaces sequentially. We present an analysis framework that decomposes the learning process into a series of rank-1 estimation problems, where each subsequent estimation depends on the accuracy of previous steps. Our contribution is a characterization of the error propagation in this sequential process, establishing bounds on how errors -- e.g., due to limited computational budgets and finite precision -- affect the overall model accuracy. We prove that these errors compound in predictable ways, with implications for both algorithmic design and stability guarantees.
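The idea of rank-1 problems solved one after another, each depending on the accuracy of the previous step, can be illustrated with power iteration plus deflation on a small matrix. This is a simplified stand-in, not the paper's regression setting or its analysis: the helper names, the 3x3 test matrix, and the iteration budgets are all assumptions made for illustration.

```python
import math


def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def norm(vector):
    return math.sqrt(sum(x * x for x in vector))


def rank1_power(matrix, iters):
    """Estimate the leading singular triplet with a fixed iteration budget."""
    matrix_t = transpose(matrix)
    v = [1.0] * len(matrix[0])  # deterministic starting vector
    scale = norm(v)
    v = [x / scale for x in v]
    for _ in range(iters):
        v = matvec(matrix_t, matvec(matrix, v))  # one step on M^T M
        scale = norm(v)
        v = [x / scale for x in v]
    u = matvec(matrix, v)
    sigma = norm(u)
    u = [x / sigma for x in u]
    return sigma, u, v


def sequential_rank1(matrix, rank, iters):
    """Extract rank-1 components one at a time, deflating after each."""
    residual = [row[:] for row in matrix]
    components = []
    for _ in range(rank):
        sigma, u, v = rank1_power(residual, iters)
        components.append((sigma, u, v))
        # Deflation: any error in (sigma, u, v) stays in the residual
        # and therefore contaminates every later estimation step.
        residual = [
            [r - sigma * ui * vj for r, vj in zip(row, v)]
            for row, ui in zip(residual, u)
        ]
    return components, residual


def frobenius(matrix):
    return math.sqrt(sum(x * x for row in matrix for x in row))


# Assumed test matrix with singular values 3, 2, 1.
M = [[3.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
_, residual_few = sequential_rank1(M, rank=2, iters=1)    # tight budget
_, residual_many = sequential_rank1(M, rank=2, iters=50)  # generous budget
err_few = frobenius(residual_few)
err_many = frobenius(residual_many)
```

With a generous budget the rank-2 residual norm approaches 1, the smallest singular value, which is the best any rank-2 approximation can achieve. With a single iteration per component, the inexact first component leaks into the second and the final error is strictly larger.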

Synthetic Time Series Forecasting with Transformer Architectures: Extensive Simulation Benchmarks

May 26, 2025

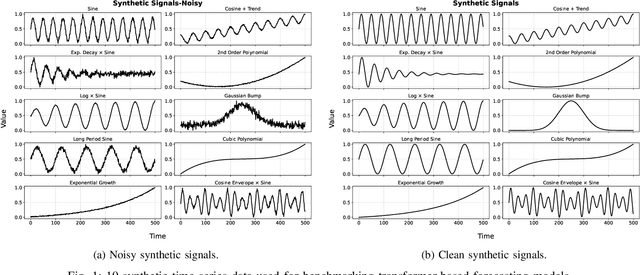

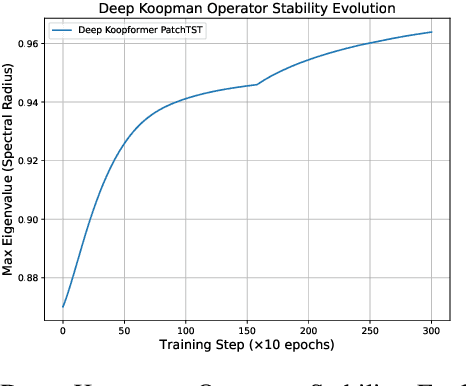

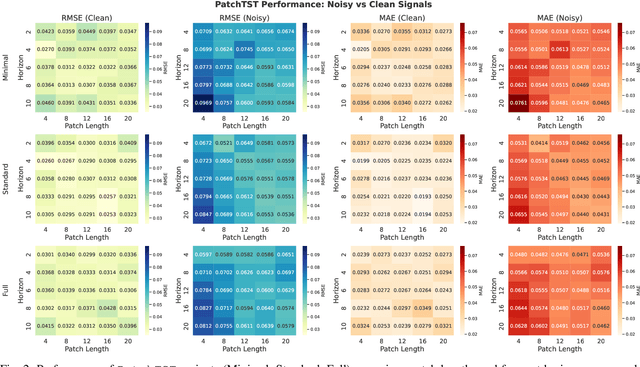

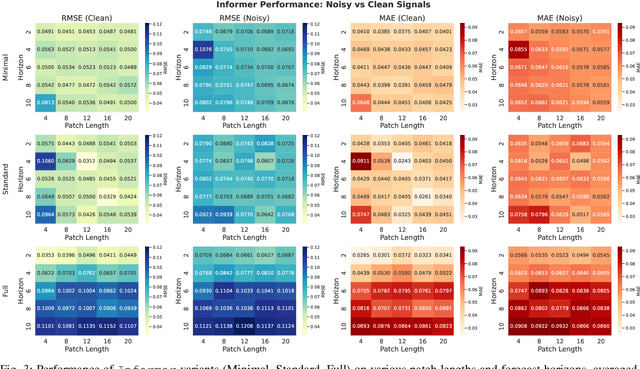

Time series forecasting plays a critical role in domains such as energy, finance, and healthcare, where accurate predictions inform decision-making under uncertainty. Although Transformer-based models have demonstrated success in sequential modeling, their adoption for time series remains limited by challenges such as noise sensitivity, long-range dependencies, and a lack of inductive bias for temporal structure. In this work, we present a unified and principled framework for benchmarking three prominent Transformer forecasting architectures (Autoformer, Informer, and PatchTST), each evaluated through three architectural variants: Minimal, Standard, and Full, representing increasing levels of complexity and modeling capacity. We conduct over 1500 controlled experiments on a suite of ten synthetic signals, spanning five patch lengths and five forecast horizons under both clean and noisy conditions. Our analysis reveals consistent patterns across model families. To advance this landscape further, we introduce the Koopman-enhanced Transformer framework, Deep Koopformer, which integrates operator-theoretic latent state modeling to improve stability and interpretability. We demonstrate its efficacy on nonlinear and chaotic dynamical systems. Our results highlight Koopman-based Transformers as a promising hybrid approach for robust, interpretable, and theoretically grounded time series forecasting in noisy and complex real-world conditions.

crossMoDA Challenge: Evolution of Cross-Modality Domain Adaptation Techniques for Vestibular Schwannoma and Cochlea Segmentation from 2021 to 2023

Jun 13, 2025

The cross-Modality Domain Adaptation (crossMoDA) challenge series, initiated in 2021 in conjunction with the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), focuses on unsupervised cross-modality segmentation, learning from contrast-enhanced T1 (ceT1) and transferring to T2 MRI. The task is an extreme example of domain shift chosen to serve as a meaningful and illustrative benchmark. From a clinical application perspective, it aims to automate Vestibular Schwannoma (VS) and cochlea segmentation on T2 scans for more cost-effective VS management. Over time, the challenge objectives have evolved to enhance its clinical relevance. The challenge evolved from using single-institutional data and basic segmentation in 2021 to incorporating multi-institutional data and Koos grading in 2022, and by 2023, it included heterogeneous routine data and sub-segmentation of intra- and extra-meatal tumour components. In this work, we report the findings of the 2022 and 2023 editions and perform a retrospective analysis of the challenge progression over the years. The observations from the successive challenge contributions indicate that the number of outliers decreases with an expanding dataset. This is notable since the diversity of scanning protocols of the datasets concurrently increased. The winning approach of the 2023 edition reduced the number of outliers on the 2021 and 2022 testing data, demonstrating how increased data heterogeneity can enhance segmentation performance even on homogeneous data. However, the cochlea Dice score declined in 2023, likely due to the added complexity from tumour sub-annotations affecting overall segmentation performance. While progress is still needed for clinically acceptable VS segmentation, the plateauing performance suggests that a more challenging cross-modal task may better serve future benchmarking.

Online Conformal Model Selection for Nonstationary Time Series

Jun 05, 2025

This paper introduces the MPS (Model Prediction Set), a novel framework for online model selection for nonstationary time series. Classical model selection methods, such as information criteria and cross-validation, rely heavily on the stationarity assumption and often fail in dynamic environments which undergo gradual or abrupt changes over time. Yet real-world data are rarely stationary, and model selection under nonstationarity remains a largely open problem. To tackle this challenge, we combine conformal inference with model confidence sets to develop a procedure that adaptively selects models best suited to the evolving dynamics at any given time. Concretely, the MPS updates in real time a confidence set of candidate models that covers the best model for the next time period with a specified long-run probability, while adapting to nonstationarity of unknown forms. Through simulations and real-world data analysis, we demonstrate that MPS reliably and efficiently identifies optimal models under nonstationarity, an essential capability lacking in offline methods. Moreover, MPS frequently produces high-quality sets with small cardinality, whose evolution offers deeper insights into changing dynamics. As a generic framework, MPS accommodates any data-generating process, data structure, model class, training method, and evaluation metric, making it broadly applicable across diverse problem settings.
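The notion of a set that keeps a specified long-run coverage while the data distribution drifts can be illustrated with adaptive conformal inference for prediction intervals. To be clear, this is a related, well-known online conformal technique and not the MPS procedure itself, which operates on sets of candidate models. The function names, the step size `gamma`, the window length, and the synthetic data below are assumptions.

```python
import math
import random


def empirical_quantile(scores, q):
    """Smallest score such that at least a fraction q of scores lie at or below it."""
    ordered = sorted(scores)
    idx = min(len(ordered) - 1, max(0, math.ceil(q * len(ordered)) - 1))
    return ordered[idx]


def adaptive_conformal(values, preds, alpha=0.1, gamma=0.05, window=200):
    """Run adaptive conformal inference; return the per-step miss indicators."""
    alpha_t = alpha
    scores, misses = [], []
    for y, y_hat in zip(values, preds):
        score = abs(y - y_hat)  # nonconformity score: absolute error
        if alpha_t <= 0.0 or not scores:
            radius = math.inf   # interval is the whole line: always covers
        elif alpha_t >= 1.0:
            radius = -1.0       # empty interval: never covers
        else:
            radius = empirical_quantile(scores[-window:], 1.0 - alpha_t)
        miss = 1 if score > radius else 0
        misses.append(miss)
        # Online update: widen after a miss, tighten after a cover.
        alpha_t += gamma * (alpha - miss)
        scores.append(score)
    return misses


# Assumed synthetic data: the noise scale triples halfway through.
random.seed(0)
values, preds = [], []
for t in range(2000):
    noise_scale = 1.0 if t < 1000 else 3.0
    values.append(random.gauss(0.0, noise_scale))
    preds.append(0.0)  # a deliberately naive forecaster

misses = adaptive_conformal(values, preds, alpha=0.1, gamma=0.05)
miscoverage = sum(misses) / len(misses)
```

The update rule keeps the long-run miss rate close to the target `alpha` regardless of how the data are generated, which is the same flavor of guarantee the abstract describes for covering the best model.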

Sonnet: Spectral Operator Neural Network for Multivariable Time Series Forecasting

May 21, 2025

Multivariable time series forecasting methods can integrate information from exogenous variables, leading to significant prediction accuracy gains. The Transformer architecture has been widely applied in various time series forecasting models due to its ability to capture long-range sequential dependencies. However, a naïve application of transformers often struggles to effectively model complex relationships among variables over time. To mitigate this, we propose a novel architecture, namely the Spectral Operator Neural Network (Sonnet). Sonnet applies learnable wavelet transformations to the input and incorporates spectral analysis using the Koopman operator. Its predictive skill relies on the Multivariable Coherence Attention (MVCA), an operation that leverages spectral coherence to model variable dependencies. Our empirical analysis shows that Sonnet yields the best performance on 34 out of 47 forecasting tasks with an average mean absolute error (MAE) reduction of 1.1% against the most competitive baseline (different per task). We further show that MVCA -- when put in place of the naïve attention used in various deep learning models -- can remedy its deficiencies, reducing MAE by 10.7% on average in the most challenging forecasting tasks.

On the use of Graphs for Satellite Image Time Series

May 22, 2025

The Earth's surface is subject to complex and dynamic processes, ranging from large-scale phenomena such as tectonic plate movements to localized changes associated with ecosystems, agriculture, or human activity. Satellite images enable global monitoring of these processes with extensive spatial and temporal coverage, offering advantages over in-situ methods. In particular, resulting satellite image time series (SITS) datasets contain valuable information. To handle their large volume and complexity, some recent works focus on the use of graph-based techniques that abandon the regular Euclidean structure of satellite data to work at an object level. Besides, graphs enable modelling spatial and temporal interactions between identified objects, which are crucial for pattern detection, classification and regression tasks. This paper is an effort to examine the integration of graph-based methods in spatio-temporal remote-sensing analysis. In particular, it aims to present a versatile graph-based pipeline to tackle SITS analysis. It focuses on the construction of spatio-temporal graphs from SITS and their application to downstream tasks. The paper includes a comprehensive review and two case studies, which highlight the potential of graph-based approaches for land cover mapping and water resource forecasting. It also discusses numerous perspectives to resolve current limitations and encourage future developments.

Universal Domain Adaptation Benchmark for Time Series Data Representation

May 23, 2025

Deep learning models have significantly improved the ability to detect novelties in time series (TS) data. This success is attributed to their strong representation capabilities. However, due to the inherent variability in TS data, these models often struggle with generalization and robustness. To address this, a common approach is to perform Unsupervised Domain Adaptation, particularly Universal Domain Adaptation (UniDA), to handle domain shifts and emerging novel classes. While extensively studied in computer vision, UniDA remains underexplored for TS data. This work provides a comprehensive implementation and comparison of state-of-the-art TS backbones in a UniDA framework. We propose a reliable protocol to evaluate their robustness and generalization across different domains. The goal is to provide practitioners with a framework that can be easily extended to incorporate future advancements in UniDA and TS architectures. Our results highlight the critical influence of backbone selection in UniDA performance and enable a robustness analysis across various datasets and architectures.

FRIREN: Beyond Trajectories -- A Spectral Lens on Time

May 23, 2025

Long-term time-series forecasting (LTSF) models are often presented as general-purpose solutions that can be applied across domains, implicitly assuming that all data is pointwise predictable. Using chaotic systems such as Lorenz-63 as a case study, we argue that geometric structure - not pointwise prediction - is the right abstraction for a dynamic-agnostic foundational model. Minimizing the Wasserstein-2 distance (W2), which captures geometric changes, and providing a spectral view of dynamics are essential for long-horizon forecasting. Our model, FRIREN (Flow-inspired Representations via Interpretable Eigen-networks), implements an augmented normalizing-flow block that embeds data into a normally distributed latent representation. It then generates a W2-efficient optimal path that can be decomposed into rotation, scaling, inverse rotation, and translation. This architecture yields locally generated, geometry-preserving predictions that are independent of the underlying dynamics, and a global spectral representation that functions as a finite Koopman operator with a small modification. This enables practitioners to identify which modes grow, decay, or oscillate, both locally and system-wide. FRIREN achieves an MSE of 11.4, MAE of 1.6, and SWD of 0.96 on Lorenz-63 in a 336-in, 336-out, dt=0.01 setting, surpassing TimeMixer (MSE 27.3, MAE 2.8, SWD 2.1). The model maintains effective prediction for 274 out of 336 steps, approximately 2.5 Lyapunov times. On Rossler (96-in, 336-out), FRIREN achieves an MSE of 0.0349, MAE of 0.0953, and SWD of 0.0170, outperforming TimeMixer's MSE of 4.3988, MAE of 0.886, and SWD of 3.2065. FRIREN is also competitive on standard LTSF datasets such as ETT and Weather. By connecting modern generative flows with classical spectral analysis, FRIREN makes long-term forecasting both accurate and interpretable, setting a new benchmark for LTSF model design.
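How a finite linear operator reveals which modes grow, decay, or oscillate can be shown with a small eigenvalue check. The sketch below is not FRIREN code: it assumes a hypothetical 2x2 discrete-time operator acting on a latent state, and the helper names and example matrices are illustrative only. For a discrete-time map, an eigenvalue with magnitude above 1 grows, below 1 decays, and a nonzero imaginary part indicates rotation, which appears as oscillation.

```python
import cmath
import math


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from the characteristic polynomial."""
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def describe_mode(eigenvalue, tol=1e-9):
    """Classify one discrete-time mode by eigenvalue magnitude and phase."""
    magnitude = abs(eigenvalue)
    if magnitude > 1.0 + tol:
        trend = "grows"
    elif magnitude < 1.0 - tol:
        trend = "decays"
    else:
        trend = "neutral"
    oscillates = abs(eigenvalue.imag) > tol
    return trend, oscillates


# Assumed operator 1: rotation by 30 degrees combined with scaling by 0.9,
# which should give a decaying, oscillating pair of modes.
theta = math.pi / 6
scale = 0.9
spiral = (
    scale * math.cos(theta), -scale * math.sin(theta),
    scale * math.sin(theta), scale * math.cos(theta),
)
spiral_modes = [describe_mode(lam) for lam in eigenvalues_2x2(*spiral)]

# Assumed operator 2: one stretching and one shrinking direction, no rotation.
saddle_modes = [describe_mode(lam) for lam in eigenvalues_2x2(1.1, 0.0, 0.0, 0.5)]
```

The first operator mirrors the rotation-plus-scaling structure the abstract mentions, and both of its modes come out as decaying and oscillating. The second yields one growing and one decaying mode, neither oscillating.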
