Time Series Analysis

Time series analysis comprises statistical methods for analyzing a sequence of data points collected over an interval of time to identify interesting patterns and trends.

Papers and Code

A Kernel-Based Approach for Accurate Steady-State Detection in Performance Time Series

Jun 04, 2025

This paper addresses the challenge of accurately detecting the transition from the warmup phase to the steady state in performance metric time series, which is a critical step for effective benchmarking. The goal is to introduce a method that avoids premature or delayed detection, which can lead to inaccurate or inefficient performance analysis. The proposed approach adapts techniques from the chemical reactors domain, detecting steady states online through the combination of kernel-based step detection and statistical methods. By using a window-based approach, it provides detailed information and improves the accuracy of identifying phase transitions, even in noisy or irregular time series. Results show that the new approach reduces total error by 14.5% compared to the state-of-the-art method. It offers more reliable detection of the steady-state onset, delivering greater precision for benchmarking tasks. For users, the new approach enhances the accuracy and stability of performance benchmarking, efficiently handling diverse time series data. Its robustness and adaptability make it a valuable tool for real-world performance evaluation, ensuring consistent and reproducible results.
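To make the idea of window-based steady-state detection concrete, here is a deliberately naive sketch. It is not the paper's kernel-based method: it only compares the means of two adjacent windows and reports the first position where they agree within a relative tolerance. The function name `first_steady_index` and the parameters `window` and `rel_tol` are illustrative assumptions.

```python
from statistics import mean


def first_steady_index(series, window, rel_tol):
    """Return the first index i at which the means of series[i:i+window] and
    series[i+window:i+2*window] differ by at most rel_tol (relative), or None.

    A simple stand-in heuristic, not the kernel-based detector from the paper.
    """
    for i in range(len(series) - 2 * window + 1):
        left = mean(series[i:i + window])
        right = mean(series[i + window:i + 2 * window])
        # Guard against division by zero when both windows are near zero.
        scale = max(abs(left), abs(right), 1e-12)
        if abs(left - right) / scale <= rel_tol:
            return i
    return None


# Hypothetical latency measurements: a warmup decay followed by a flat phase.
latencies = [100, 60, 40, 30, 22, 20, 20, 20, 20, 20, 20, 20]
steady_from = first_steady_index(latencies, window=3, rel_tol=0.05)
```

A heuristic like this can fire too early on a temporary plateau, which is the kind of premature detection the abstract says the proposed method is designed to avoid.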

Almost-Optimal Local-Search Methods for Sparse Tensor PCA

Jun 11, 2025

Local-search methods are widely employed in statistical applications, yet interestingly, their theoretical foundations remain rather underexplored, compared to other classes of estimators such as low-degree polynomials and spectral methods. Of note, among the few existing results, recent studies have revealed a significant "local-computational" gap in the context of a well-studied sparse tensor principal component analysis (PCA), where a broad class of local Markov chain methods exhibits a notable underperformance relative to other polynomial-time algorithms. In this work, we propose a series of local-search methods that provably "close" this gap to the best known polynomial-time procedures in multiple regimes of the model, including and going beyond the previously studied regimes in which the broad family of local Markov chain methods underperforms. Our framework includes: (1) standard greedy and randomized greedy algorithms applied to the (regularized) posterior of the model; and (2) novel random-threshold variants, in which the randomized greedy algorithm accepts a proposed transition if and only if the corresponding change in the Hamiltonian exceeds a random Gaussian threshold, rather than if and only if it is positive, as is customary. The introduction of the random thresholds enables a tight mathematical analysis of the randomized greedy algorithm's trajectory by crucially breaking the dependencies between the iterations, and could be of independent interest to the community.

Temporal cross-validation impacts multivariate time series subsequence anomaly detection evaluation

Jun 13, 2025

Evaluating anomaly detection in multivariate time series (MTS) requires careful consideration of temporal dependencies, particularly when detecting subsequence anomalies common in fault detection scenarios. While time series cross-validation (TSCV) techniques aim to preserve temporal ordering during model evaluation, their impact on classifier performance remains underexplored. This study systematically investigates the effect of TSCV strategy on the precision-recall characteristics of classifiers trained to detect fault-like anomalies in MTS datasets. We compare walk-forward (WF) and sliding window (SW) methods across a range of validation partition configurations and classifier types, including shallow learners and deep learning (DL) classifiers. Results show that SW consistently yields higher median AUC-PR scores and reduced fold-to-fold performance variance, particularly for deep architectures sensitive to localized temporal continuity. Furthermore, we find that classifier generalization is sensitive to the number and structure of temporal partitions, with overlapping windows preserving fault signatures more effectively at lower fold counts. A classifier-level stratified analysis reveals that certain algorithms, such as random forests (RF), maintain stable performance across validation schemes, whereas others exhibit marked sensitivity. This study demonstrates that TSCV design matters in benchmarking anomaly detection models on streaming time series and provides guidance for selecting evaluation strategies in temporally structured learning environments.
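The difference between the two splitting schemes can be sketched as follows, under one common reading of the terms: walk-forward grows the training set with every fold, while sliding window keeps a fixed-length training window that moves forward. The paper's exact partitioning may differ, and the function names and parameters here are illustrative assumptions.

```python
def walk_forward_splits(n_samples, n_folds, test_size):
    """Expanding window: each fold trains on every sample before its test block."""
    splits = []
    for k in range(n_folds):
        test_start = n_samples - (n_folds - k) * test_size
        if test_start <= 0:
            raise ValueError("not enough samples for the requested folds")
        train = range(0, test_start)
        test = range(test_start, test_start + test_size)
        splits.append((train, test))
    return splits


def sliding_window_splits(n_samples, n_folds, train_size, test_size):
    """Fixed-length training window that advances by one test block per fold."""
    splits = []
    for k in range(n_folds):
        test_start = n_samples - (n_folds - k) * test_size
        train_start = test_start - train_size
        if train_start < 0:
            raise ValueError("not enough samples for the requested window")
        train = range(train_start, test_start)
        test = range(test_start, test_start + test_size)
        splits.append((train, test))
    return splits


# Index ranges only; in both schemes training data always precedes test data.
wf = walk_forward_splits(n_samples=10, n_folds=3, test_size=2)
sw = sliding_window_splits(n_samples=10, n_folds=3, train_size=3, test_size=2)
```

In this sketch the sliding window discards older samples, so every fold trains on the same amount of recent data, whereas the walk-forward folds differ in training-set size.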

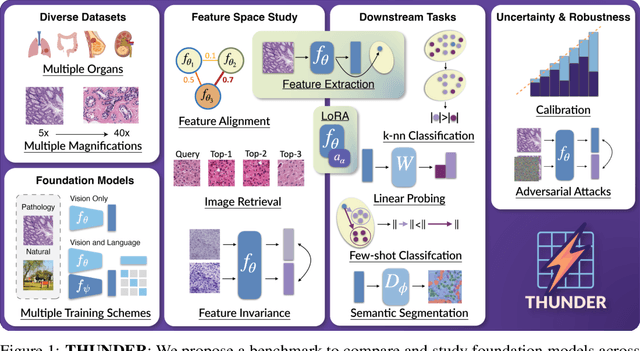

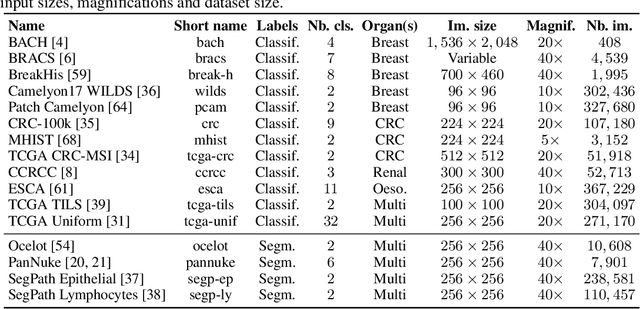

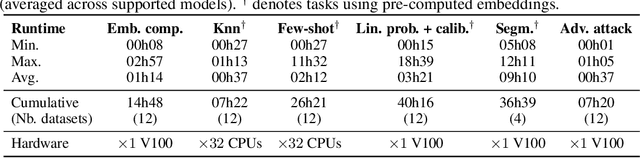

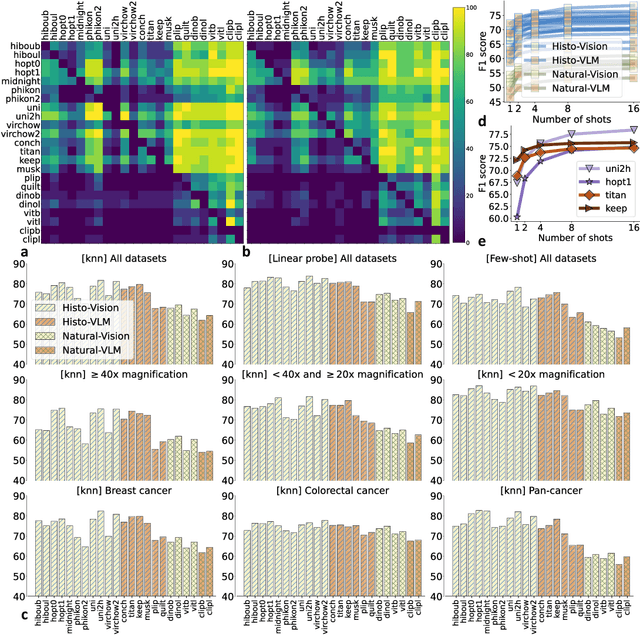

THUNDER: Tile-level Histopathology image UNDERstanding benchmark

Jul 10, 2025

Progress in a research field can be hard to assess, in particular when many concurrent methods are proposed in a short period of time. This is the case in digital pathology, where many foundation models have been released recently to serve as feature extractors for tile-level images, being used in a variety of downstream tasks, both for tile- and slide-level problems. Benchmarking available methods then becomes paramount to get a clearer view of the research landscape. In particular, in critical domains such as healthcare, a benchmark should not only focus on evaluating downstream performance, but also provide insights about the main differences between methods, and importantly, further consider uncertainty and robustness to ensure a reliable usage of proposed models. For these reasons, we introduce THUNDER, a tile-level benchmark for digital pathology foundation models, allowing for efficient comparison of many models on diverse datasets with a series of downstream tasks, studying their feature spaces and assessing the robustness and uncertainty of predictions informed by their embeddings. THUNDER is a fast, easy-to-use, dynamic benchmark that can already support a large variety of state-of-the-art foundation, as well as local user-defined models for direct tile-based comparison. In this paper, we provide a comprehensive comparison of 23 foundation models on 16 different datasets covering diverse tasks, feature analysis, and robustness. The code for THUNDER is publicly available at https://github.com/MICS-Lab/thunder.

Multivariate Long-term Time Series Forecasting with Fourier Neural Filter

Jun 10, 2025

Multivariate long-term time series forecasting has been suffering from the challenge of capturing both temporal dependencies within variables and spatial correlations across variables simultaneously. Current approaches predominantly repurpose backbones from natural language processing or computer vision (e.g., Transformers), which fail to adequately address the unique properties of time series (e.g., periodicity). The research community lacks a dedicated backbone with temporal-specific inductive biases, instead relying on domain-agnostic backbones supplemented with auxiliary techniques (e.g., signal decomposition). We introduce FNF as the backbone and DBD as the architecture to provide excellent learning capabilities and optimal learning pathways for spatio-temporal modeling, respectively. Our theoretical analysis proves that FNF unifies local time-domain and global frequency-domain information processing within a single backbone that extends naturally to spatial modeling, while information bottleneck theory demonstrates that DBD provides superior gradient flow and representation capacity compared to existing unified or sequential architectures. Our empirical evaluation across 11 public benchmark datasets spanning five domains (energy, meteorology, transportation, environment, and nature) confirms state-of-the-art performance with consistent hyperparameter settings. Notably, our approach achieves these results without any auxiliary techniques, suggesting that properly designed neural architectures can capture the inherent properties of time series, potentially transforming time series modeling in scientific and industrial applications.

Strategic Intelligence in Large Language Models: Evidence from evolutionary Game Theory

Jul 03, 2025

Are Large Language Models (LLMs) a new form of strategic intelligence, able to reason about goals in competitive settings? We present compelling supporting evidence. The Iterated Prisoner's Dilemma (IPD) has long served as a model for studying decision-making. We conduct the first ever series of evolutionary IPD tournaments, pitting canonical strategies (e.g., Tit-for-Tat, Grim Trigger) against agents from the leading frontier AI companies OpenAI, Google, and Anthropic. By varying the termination probability in each tournament (the "shadow of the future"), we introduce complexity and chance, confounding memorisation. Our results show that LLMs are highly competitive, consistently surviving and sometimes even proliferating in these complex ecosystems. Furthermore, they exhibit distinctive and persistent "strategic fingerprints": Google's Gemini models proved strategically ruthless, exploiting cooperative opponents and retaliating against defectors, while OpenAI's models remained highly cooperative, a trait that proved catastrophic in hostile environments. Anthropic's Claude emerged as the most forgiving reciprocator, showing remarkable willingness to restore cooperation even after being exploited or successfully defecting. Analysis of nearly 32,000 prose rationales provided by the models reveals that they actively reason about both the time horizon and their opponent's likely strategy, and we demonstrate that this reasoning is instrumental to their decisions. This work connects classic game theory with machine psychology, offering a rich and granular view of algorithmic decision-making under uncertainty.
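For readers unfamiliar with the setup, a single IPD match with a random stopping rule can be sketched as below. The payoff values are the textbook ones (5, 3, 1, 0) and are an assumption, since the abstract does not state the payoffs used. The helper names and the `termination_prob` parameter are likewise illustrative, and no LLM agents or evolutionary dynamics are modelled here.

```python
import random

# Textbook payoffs (assumed): temptation 5, reward 3, punishment 1, sucker 0.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(own_history, opp_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not opp_history else opp_history[-1]


def grim_trigger(own_history, opp_history):
    """Cooperate until the opponent defects once, then defect forever."""
    return "D" if "D" in opp_history else "C"


def always_defect(own_history, opp_history):
    return "D"


def play_match(strategy_a, strategy_b, termination_prob, rng):
    """Play rounds until a random stop; return (score_a, score_b, rounds)."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    while True:
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        hist_a.append(move_a)
        hist_b.append(move_b)
        # After every round the match ends with probability termination_prob.
        if rng.random() < termination_prob:
            return score_a, score_b, len(hist_a)


rng = random.Random(0)
coop_a, coop_b, coop_rounds = play_match(tit_for_tat, grim_trigger, 0.1, rng)
tft_score, ad_score, ad_rounds = play_match(tit_for_tat, always_defect, 0.1, rng)
```

A lower termination probability means longer expected matches, so retaliation has more time to pay off, which is the sense in which the "shadow of the future" shapes strategy.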

Active Learning for Multiple Change Point Detection in Non-stationary Time Series with Deep Gaussian Processes

May 26, 2025

Multiple change point (MCP) detection in non-stationary time series is challenging due to the variety of underlying patterns. To address these challenges, we propose a novel algorithm that integrates Active Learning (AL) with Deep Gaussian Processes (DGPs) for robust MCP detection. Our method leverages spectral analysis to identify potential changes and employs AL to strategically select new sampling points for improved efficiency. By incorporating the modeling flexibility of DGPs with the change-identification capabilities of spectral methods, our approach adapts to diverse spectral change behaviors and effectively localizes multiple change points. Experiments on both simulated and real-world data demonstrate that our method outperforms existing techniques in terms of detection accuracy and sampling efficiency for non-stationary time series.

Squeeze the Soaked Sponge: Efficient Off-policy Reinforcement Finetuning for Large Language Model

Jul 09, 2025

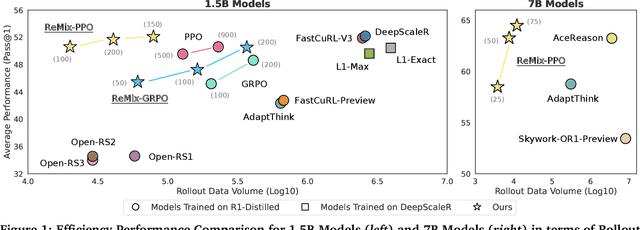

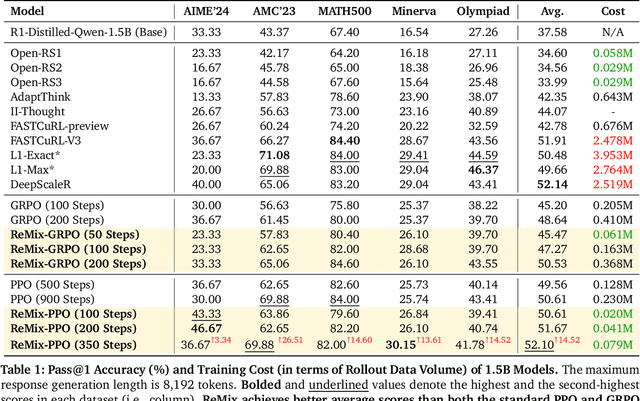

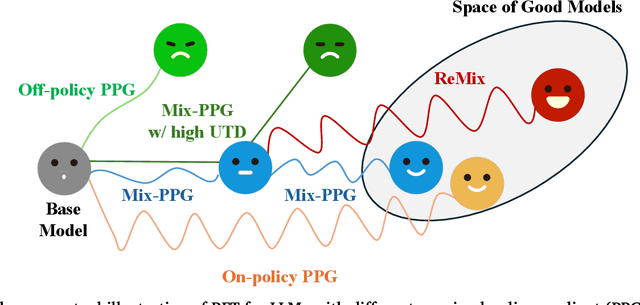

Reinforcement Learning (RL) has demonstrated its potential to improve the reasoning ability of Large Language Models (LLMs). One major limitation of most existing Reinforcement Finetuning (RFT) methods is that they are on-policy RL in nature, i.e., data generated during the past learning process is not fully utilized. This inevitably comes at a significant cost of compute and time, posing a stringent bottleneck on continuing economic and efficient scaling. To this end, we launch the renaissance of off-policy RL and propose Reincarnating Mix-policy Proximal Policy Gradient (ReMix), a general approach to enable on-policy RFT methods like PPO and GRPO to leverage off-policy data. ReMix consists of three major components: (1) Mix-policy proximal policy gradient with an increased Update-To-Data (UTD) ratio for efficient training; (2) KL-Convex policy constraint to balance the trade-off between stability and flexibility; (3) Policy reincarnation to achieve a seamless transition from efficient early-stage learning to steady asymptotic improvement. In our experiments, we train a series of ReMix models upon PPO, GRPO and 1.5B, 7B base models. ReMix shows an average Pass@1 accuracy of 52.10% (for 1.5B model) with 0.079M response rollouts, 350 training steps and achieves 63.27%/64.39% (for 7B model) with 0.007M/0.011M response rollouts, 50/75 training steps, on five math reasoning benchmarks (i.e., AIME'24, AMC'23, Minerva, OlympiadBench, and MATH500). Compared with 15 recent advanced models, ReMix shows SOTA-level performance with an over 30x to 450x reduction in training cost in terms of rollout data volume. In addition, we reveal insightful findings via multifaceted analysis, including the implicit preference for shorter responses due to the Whipping Effect of off-policy discrepancy, the collapse mode of self-reflection behavior under the presence of severe off-policyness, etc.

Event Classification of Accelerometer Data for Industrial Package Monitoring with Embedded Deep Learning

Jun 05, 2025

Package monitoring is an important topic in industrial applications, with significant implications for operational efficiency and ecological sustainability. In this study, we propose an approach that employs an embedded system, placed on reusable packages, to detect their state (on a Forklift, in a Truck, or in an undetermined location). We aim to design a system with a lifespan of several years, corresponding to the lifespan of reusable packages. Our analysis demonstrates that maximizing device lifespan requires minimizing wake time. We propose a pipeline that includes data processing, training, and evaluation of the deep learning model designed for imbalanced, multiclass time series data collected from an embedded sensor. The method uses a one-dimensional Convolutional Neural Network architecture to classify accelerometer data from the IoT device. Before training, two data augmentation techniques are tested to solve the imbalance problem of the dataset: the Synthetic Minority Oversampling TEchnique and the ADAptive SYNthetic sampling approach. After training, compression techniques are implemented to have a small model size. On the considered two-class problem, the methodology yields a precision of 94.54% for the first class and 95.83% for the second class, while compression techniques reduce the model size by a factor of four. The trained model is deployed on the IoT device, where it operates with a power consumption of 316 mW during inference.

Degree-Optimized Cumulative Polynomial Kolmogorov-Arnold Networks

May 21, 2025

We introduce cumulative polynomial Kolmogorov-Arnold networks (CP-KAN), a neural architecture combining Chebyshev polynomial basis functions and quadratic unconstrained binary optimization (QUBO). Our primary contribution involves reformulating the degree selection problem as a QUBO task, reducing the complexity from $O(D^N)$ to a single optimization step per layer. This approach enables efficient degree selection across neurons while maintaining computational tractability. The architecture performs well in regression tasks with limited data, showing good robustness to input scales and natural regularization properties from its polynomial basis. Additionally, theoretical analysis establishes connections between CP-KAN's performance and properties of financial time series. Our empirical validation across multiple domains demonstrates competitive performance compared to several traditional architectures tested, especially in scenarios where data efficiency and numerical stability are important. Our implementation, including strategies for managing computational overhead in larger networks, is available in Ref.~\citep{cpkan_implementation}.
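As background for the Chebyshev basis and the $O(D^N)$ figure, the sketch below evaluates Chebyshev polynomials of the first kind with the standard recurrence and counts the joint degree assignments an exhaustive search would face. It assumes that $D$ is the number of candidate degrees and $N$ the number of neurons in a layer, which the abstract does not spell out. It does not reproduce the CP-KAN architecture or its QUBO formulation, and all function names are illustrative.

```python
import math


def chebyshev_basis(x, max_degree):
    """Return [T_0(x), ..., T_max_degree(x)] using T_{n+1} = 2x*T_n - T_{n-1}."""
    values = [1.0]
    if max_degree >= 1:
        values.append(x)
    for _ in range(2, max_degree + 1):
        values.append(2.0 * x * values[-1] - values[-2])
    return values


def polynomial_neuron(x, coefficients):
    """Weighted sum of Chebyshev polynomials; the degree is len(coefficients) - 1."""
    basis = chebyshev_basis(x, len(coefficients) - 1)
    return sum(c * t for c, t in zip(coefficients, basis))


def exhaustive_assignments(num_degrees, num_neurons):
    """Joint choices if each of N neurons independently picks one of D degrees."""
    return num_degrees ** num_neurons


basis_at_half = chebyshev_basis(0.5, 3)
neuron_output = polynomial_neuron(0.5, [1.0, 2.0, 3.0])
search_space = exhaustive_assignments(5, 10)
```

Even with only five candidate degrees and ten neurons, the exhaustive search space is already close to ten million combinations, which illustrates why a per-layer optimization step is attractive.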
