Sentiment Analysis

Sentiment analysis is the process of determining the sentiment of a piece of text, such as a tweet or a review.

Papers and Code

Continuous sentiment scores for literary and multilingual contexts

Aug 20, 2025

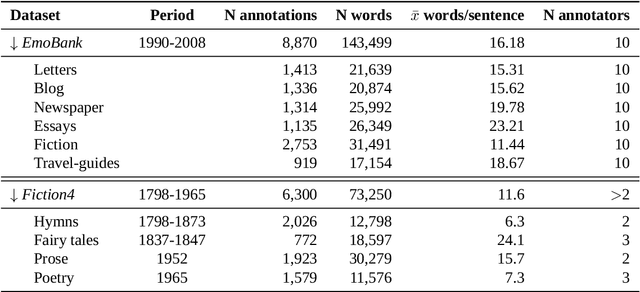

Sentiment Analysis is widely used to quantify sentiment in text, but its application to literary texts poses unique challenges due to figurative language, stylistic ambiguity, as well as sentiment evocation strategies. Traditional dictionary-based tools often underperform, especially for low-resource languages, and transformer models, while promising, typically output coarse categorical labels that limit fine-grained analysis. We introduce a novel continuous sentiment scoring method based on concept vector projection, trained on multilingual literary data, which more effectively captures nuanced sentiment expressions across genres, languages, and historical periods. Our approach outperforms existing tools on English and Danish texts, producing sentiment scores whose distribution closely matches human ratings, enabling more accurate analysis and sentiment arc modeling in literature.
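
The abstract does not spell out how concept vector projection is computed. A common reading, sketched here under that assumption, is to build a sentiment axis from the difference between the mean embeddings of positive and negative example sentences, then score a new text by projecting its embedding onto that axis. The function names and the toy two-dimensional vectors are hypothetical; in practice the embeddings would come from a sentence encoder.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def concept_vector(positive, negative):
    """Unit vector pointing from the negative centroid to the positive centroid."""
    pos_mean = mean_vector(positive)
    neg_mean = mean_vector(negative)
    diff = [p - q for p, q in zip(pos_mean, neg_mean)]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]

def sentiment_score(embedding, concept):
    """Continuous score: dot product of the embedding with the concept axis."""
    return sum(e * c for e, c in zip(embedding, concept))

# Toy embeddings (assumed); real ones would have hundreds of dimensions.
positive = [[0.9, 0.1], [0.7, 0.3]]
negative = [[-0.8, 0.2], [-0.6, 0.2]]
axis = concept_vector(positive, negative)

print(sentiment_score([0.5, 0.9], axis))   # positive side of the axis
print(sentiment_score([-0.3, 0.4], axis))  # negative side of the axis
```

Because the score is a real number rather than a class label, it can be averaged over sliding windows of a text, which is what sentiment arc modeling needs.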

Speech Emotion Recognition via Entropy-Aware Score Selection

Aug 28, 2025

In this paper, we propose a multimodal framework for speech emotion recognition that leverages entropy-aware score selection to combine speech and textual predictions. The proposed method integrates a primary pipeline that consists of an acoustic model based on wav2vec2.0 and a secondary pipeline that consists of a sentiment analysis model using RoBERTa-XLM, with transcriptions generated via Whisper-large-v3. We propose a late score fusion approach based on entropy and varentropy thresholds to overcome the confidence constraints of primary pipeline predictions. A sentiment mapping strategy translates three sentiment categories into four target emotion classes, enabling coherent integration of multimodal predictions. The results on the IEMOCAP and MSP-IMPROV datasets show that the proposed method offers a practical and reliable enhancement over traditional single-modality systems.
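
The fusion rule itself is not given in the abstract, so the following is only a rough sketch of what entropy-aware score selection could look like: trust the acoustic prediction unless its entropy or varentropy exceeds a threshold, and otherwise fall back to the text sentiment prediction mapped onto emotion classes. The emotion labels, the mapping weights, the threshold values, and the fallback rule are all assumptions made for illustration.

```python
import math

EMOTIONS = ["angry", "happy", "neutral", "sad"]  # assumed four target classes

# Hypothetical mapping from three sentiment categories to four emotions.
SENTIMENT_TO_EMOTION = {
    "negative": {"angry": 0.5, "sad": 0.5},
    "neutral": {"neutral": 1.0},
    "positive": {"happy": 1.0},
}

def entropy(probs):
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def varentropy(probs):
    """Variance of the surprisal -log(p) under the distribution."""
    h = entropy(probs)
    return sum(p * (-math.log(p) - h) ** 2 for p in probs if p > 0)

def map_sentiment(sentiment_probs):
    """Spread sentiment probabilities over the emotion classes."""
    out = {emotion: 0.0 for emotion in EMOTIONS}
    for sentiment, p in sentiment_probs.items():
        for emotion, weight in SENTIMENT_TO_EMOTION[sentiment].items():
            out[emotion] += p * weight
    return [out[emotion] for emotion in EMOTIONS]

def select_emotion(acoustic_probs, sentiment_probs,
                   max_entropy=1.0, max_varentropy=1.5):
    """Use acoustic scores when they look confident, else the text scores.

    The thresholds are arbitrary illustrative values, not tuned ones.
    """
    uncertain = (entropy(acoustic_probs) > max_entropy
                 or varentropy(acoustic_probs) > max_varentropy)
    chosen = map_sentiment(sentiment_probs) if uncertain else acoustic_probs
    best = max(range(len(chosen)), key=chosen.__getitem__)
    return EMOTIONS[best]

text_scores = {"negative": 0.1, "neutral": 0.1, "positive": 0.8}
print(select_emotion([0.9, 0.05, 0.03, 0.02], text_scores))  # acoustic is peaked
print(select_emotion([0.3, 0.3, 0.2, 0.2], text_scores))     # acoustic is flat
```

Entropy alone flags flat distributions; varentropy adds a second signal because it measures how unevenly the surprisal is spread across classes, and it is zero for both a uniform and a one-hot distribution.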

LLaMA-Based Models for Aspect-Based Sentiment Analysis

Aug 12, 2025

While large language models (LLMs) show promise for various tasks, their performance in compound aspect-based sentiment analysis (ABSA) tasks lags behind fine-tuned models. However, the potential of LLMs fine-tuned for ABSA remains unexplored. This paper examines the capabilities of open-source LLMs fine-tuned for ABSA, focusing on LLaMA-based models. We evaluate the performance across four tasks and eight English datasets, finding that the fine-tuned Orca 2 model surpasses state-of-the-art results in all tasks. However, all models struggle in zero-shot and few-shot scenarios compared to fully fine-tuned ones. Additionally, we conduct error analysis to identify challenges faced by fine-tuned models.

Personality Matters: User Traits Predict LLM Preferences in Multi-Turn Collaborative Tasks

Aug 29, 2025

As Large Language Models (LLMs) increasingly integrate into everyday workflows, where users shape outcomes through multi-turn collaboration, a critical question emerges: do users with different personality traits systematically prefer certain LLMs over others? We conducted a study with 32 participants evenly distributed across four Keirsey personality types, evaluating their interactions with GPT-4 and Claude 3.5 across four collaborative tasks: data analysis, creative writing, information retrieval, and writing assistance. Results revealed significant personality-driven preferences: Rationals strongly preferred GPT-4, particularly for goal-oriented tasks, while Idealists favored Claude 3.5, especially for creative and analytical tasks. Other personality types showed task-dependent preferences. Sentiment analysis of qualitative feedback confirmed these patterns. Notably, aggregate helpfulness ratings were similar across models, showing how personality-based analysis reveals LLM differences that traditional evaluations miss.

The Promise of Large Language Models in Digital Health: Evidence from Sentiment Analysis in Online Health Communities

Aug 19, 2025

Digital health analytics face critical challenges nowadays. The sophisticated analysis of patient-generated health content, which contains complex emotional and medical contexts, requires scarce domain expertise, while traditional ML approaches are constrained by data shortage and privacy limitations in healthcare settings. Online Health Communities (OHCs) exemplify these challenges with mixed-sentiment posts, clinical terminology, and implicit emotional expressions that demand specialised knowledge for accurate Sentiment Analysis (SA). To address these challenges, this study explores how Large Language Models (LLMs) can integrate expert knowledge through in-context learning for SA, providing a scalable solution for sophisticated health data analysis. Specifically, we develop a structured codebook that systematically encodes expert interpretation guidelines, enabling LLMs to apply domain-specific knowledge through targeted prompting rather than extensive training. Six GPT models validated alongside DeepSeek and LLaMA 3.1 are compared with pre-trained language models (BioBERT variants) and lexicon-based methods, using 400 expert-annotated posts from two OHCs. LLMs achieve superior performance while demonstrating expert-level agreement. This high agreement, with no statistically significant difference from inter-expert agreement levels, suggests knowledge integration beyond surface-level pattern recognition. The consistent performance across diverse LLM models, supported by in-context learning, offers a promising solution for digital health analytics. This approach addresses the critical challenge of expert knowledge shortage in digital health research, enabling real-time, expert-quality analysis for patient monitoring, intervention assessment, and evidence-based health strategies.

Research Challenges in Relational Database Management Systems for LLM Queries

Aug 28, 2025

Large language models (LLMs) have become essential for applications such as text summarization, sentiment analysis, and automated question-answering. Recently, LLMs have also been integrated into relational database management systems to enhance querying and support advanced data processing. Companies such as Amazon, Databricks, Google, and Snowflake offer LLM invocation directly within SQL, denoted as LLM queries, to boost data insights. However, open-source solutions currently have limited functionality and poor performance. In this work, we present an early exploration of two open-source systems and one enterprise platform, using five representative queries to expose functional, performance, and scalability limits in today's SQL-invoked LLM integrations. We identify three main issues: enforcing structured outputs, optimizing resource utilization, and improving query planning. We implemented initial solutions and observed improvements in accommodating LLM-powered SQL queries. These early gains demonstrate that tighter integration of LLM+DBMS is the key to scalable and efficient processing of LLM queries.

TradingGroup: A Multi-Agent Trading System with Self-Reflection and Data-Synthesis

Aug 25, 2025

Recent advancements in large language models (LLMs) have enabled powerful agent-based applications in finance, particularly for sentiment analysis, financial report comprehension, and stock forecasting. However, existing systems often lack inter-agent coordination, structured self-reflection, and access to high-quality, domain-specific post-training data such as data from trading activities including both market conditions and agent decisions. These data are crucial for agents to understand the market dynamics, improve the quality of decision-making and promote effective coordination. We introduce TradingGroup, a multi-agent trading system designed to address these limitations through a self-reflective architecture and an end-to-end data-synthesis pipeline. TradingGroup consists of specialized agents for news sentiment analysis, financial report interpretation, stock trend forecasting, trading style adaptation, and a trading decision making agent that merges all signals and style preferences to produce buy, sell or hold decisions. Specifically, we design self-reflection mechanisms for the stock forecasting, style, and decision-making agents to distill past successes and failures for similar reasoning in analogous future scenarios and a dynamic risk-management model to offer configurable dynamic stop-loss and take-profit mechanisms. In addition, TradingGroup embeds an automated data-synthesis and annotation pipeline that generates high-quality post-training data for further improving the agent performance through post-training. Our backtesting experiments across five real-world stock datasets demonstrate TradingGroup's superior performance over rule-based, machine learning, reinforcement learning, and existing LLM-based trading strategies.

Evaluating Compositional Approaches for Focus and Sentiment Analysis

Aug 11, 2025

This paper summarizes the results of evaluating a compositional approach for Focus Analysis (FA) in Linguistics and Sentiment Analysis (SA) in Natural Language Processing (NLP). While quantitative evaluations of compositional and non-compositional approaches in SA exist in NLP, similar quantitative evaluations are very rare in FA in Linguistics that deal with linguistic expressions representing focus or emphasis such as "it was John who left". We fill this gap in research by arguing that compositional rules in SA also apply to FA because FA and SA are closely related meaning that SA is part of FA. Our compositional approach in SA exploits basic syntactic rules such as rules of modification, coordination, and negation represented in the formalism of Universal Dependencies (UDs) in English and applied to words representing sentiments from sentiment dictionaries. Some of the advantages of our compositional analysis method for SA in contrast to non-compositional analysis methods are interpretability and explainability. We test the accuracy of our compositional approach and compare it with a non-compositional approach VADER that uses simple heuristic rules to deal with negation, coordination and modification. In contrast to previous related work that evaluates compositionality in SA on long reviews, this study uses more appropriate datasets to evaluate compositionality. In addition, we generalize the results of compositional approaches in SA to compositional approaches in FA.
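
To make the idea of compositional rules over dependency structure concrete, here is a toy sketch. It is not the paper's rule set: the lexicon values, the intensifier weights, and the three rules (negation flips the head's score, an intensifier scales it, coordination averages the conjuncts) are assumptions chosen for illustration. Only the relation labels `advmod` and `conj` are taken from Universal Dependencies.

```python
from dataclasses import dataclass, field

# Toy resources (assumed values, not from any published dictionary).
LEXICON = {"good": 1.0, "bad": -1.0, "boring": -0.5}
INTENSIFIERS = {"very": 1.5, "slightly": 0.5}
NEGATORS = {"not", "never"}

@dataclass
class Node:
    """One word in a dependency tree, with its relation to its head."""
    word: str
    deprel: str = "root"
    children: list = field(default_factory=list)

def score(node):
    """Compose a sentiment score bottom-up from the node's dependents."""
    value = LEXICON.get(node.word, 0.0)
    scale = 1.0
    negated = False
    conjuncts = []
    for child in node.children:
        if child.deprel == "advmod" and child.word in NEGATORS:
            negated = not negated                 # negation rule
        elif child.deprel == "advmod" and child.word in INTENSIFIERS:
            scale *= INTENSIFIERS[child.word]     # modification rule
        elif child.deprel == "conj":
            conjuncts.append(score(child))        # coordination rule
        else:
            value += score(child)                 # other dependents add up
    value *= scale
    if negated:
        value = -value
    if conjuncts:
        value = (value + sum(conjuncts)) / (1 + len(conjuncts))
    return value

not_very_good = Node("good", children=[Node("not", "advmod"),
                                       Node("very", "advmod")])
good_and_boring = Node("good", children=[Node("boring", "conj")])

print(score(not_very_good))
print(score(good_and_boring))
```

Each step of the computation can be traced back to a named rule and a specific dependency edge, which is the interpretability advantage the abstract attributes to compositional methods.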

Few-shot Cross-lingual Aspect-Based Sentiment Analysis with Sequence-to-Sequence Models

Aug 11, 2025

Aspect-based sentiment analysis (ABSA) has received substantial attention in English, yet challenges remain for low-resource languages due to the scarcity of labelled data. Current cross-lingual ABSA approaches often rely on external translation tools and overlook the potential benefits of incorporating a small number of target language examples into training. In this paper, we evaluate the effect of adding few-shot target language examples to the training set across four ABSA tasks, six target languages, and two sequence-to-sequence models. We show that adding as few as ten target language examples significantly improves performance over zero-shot settings and achieves a similar effect to constrained decoding in reducing prediction errors. Furthermore, we demonstrate that combining 1,000 target language examples with English data can even surpass monolingual baselines. These findings offer practical insights for improving cross-lingual ABSA in low-resource and domain-specific settings, as obtaining ten high-quality annotated examples is both feasible and highly effective.

Improving Generative Cross-lingual Aspect-Based Sentiment Analysis with Constrained Decoding

Aug 14, 2025

While aspect-based sentiment analysis (ABSA) has made substantial progress, challenges remain for low-resource languages, which are often overlooked in favour of English. Current cross-lingual ABSA approaches focus on limited, less complex tasks and often rely on external translation tools. This paper introduces a novel approach using constrained decoding with sequence-to-sequence models, eliminating the need for unreliable translation tools and improving cross-lingual performance by 5% on average for the most complex task. The proposed method also supports multi-tasking, which enables solving multiple ABSA tasks with a single model, with constrained decoding boosting results by more than 10%. We evaluate our approach across seven languages and six ABSA tasks, surpassing state-of-the-art methods and setting new benchmarks for previously unexplored tasks. Additionally, we assess large language models (LLMs) in zero-shot, few-shot, and fine-tuning scenarios. While LLMs perform poorly in zero-shot and few-shot settings, fine-tuning achieves competitive results compared to smaller multilingual models, albeit at the cost of longer training and inference times. We provide practical recommendations for real-world applications, enhancing the understanding of cross-lingual ABSA methodologies. This study offers valuable insights into the strengths and limitations of cross-lingual ABSA approaches, advancing the state-of-the-art in this challenging research domain.
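
As a rough illustration of constrained decoding for generative ABSA, the sketch below restricts each greedy decoding step to tokens that appear in the input sentence, a fixed set of sentiment labels, or a few structural markers. The exact constraints used in the paper are not described in the abstract; the marker tokens `[A]` and `[P]`, the label set, and the per-step score dictionaries are hypothetical stand-ins for a real model's logits.

```python
def constrained_greedy_decode(step_scores, source_tokens,
                              labels=("positive", "negative", "neutral"),
                              structural=("[A]", "[P]", ";")):
    """Pick the best-scoring allowed token at each step.

    step_scores: list of dicts mapping candidate token -> score, one per step
                 (a stand-in for model logits).
    source_tokens: tokens of the input sentence; aspect terms must be copied
                   from here rather than generated freely.
    """
    allowed = set(source_tokens) | set(labels) | set(structural)
    output = []
    for scores in step_scores:
        candidates = {tok: s for tok, s in scores.items() if tok in allowed}
        if not candidates:
            break  # nothing valid to emit at this step
        output.append(max(candidates, key=candidates.get))
    return output

# A model trained on English may prefer English tokens for German input.
source = ["das", "Essen", "war", "gut"]
steps = [
    {"[A]": 2.0, "the": 3.0},
    {"food": 2.5, "Essen": 2.0},
    {"[P]": 1.0},
    {"good": 3.0, "positive": 2.0},
]
print(constrained_greedy_decode(steps, source))
```

Without the mask, plain greedy decoding over the same scores would emit `the`, `food`, and `good`, none of which is a valid aspect term or label for this input.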
