Sentiment

Papers and Code

Cross-modal Prompting for Balanced Incomplete Multi-modal Emotion Recognition

Dec 12, 2025

Incomplete multi-modal emotion recognition (IMER) aims at understanding human intentions and sentiments by comprehensively exploring partially observed multi-source data. Although multi-modal data is expected to provide more abundant information, the performance gap between modalities and the modality under-optimization problem hinder effective multi-modal learning in practice, and both are exacerbated when data are missing. To address this issue, we devise a novel Cross-modal Prompting (ComP) method, which emphasizes coherent information by enhancing modality-specific features and improves the overall recognition accuracy by boosting each modality's performance. Specifically, a progressive prompt generation module with a dynamic gradient modulator is proposed to produce concise and consistent modality semantic cues. Meanwhile, cross-modal knowledge propagation selectively amplifies the consistent information in modality features with the delivered prompts to enhance the discrimination of the modality-specific output. Additionally, a coordinator is designed to dynamically re-weight the modality outputs as a complement to the balance strategy to improve the model's efficacy. Extensive experiments on 4 datasets with 7 SOTA methods under different missing rates validate the effectiveness of our proposed method.

Quantifying Emotional Tone in Tolkien's The Hobbit: Dialogue Sentiment Analysis with RegEx, NRC-VAD, and Python

Dec 11, 2025

This study analyzes the emotional tone of dialogue in J. R. R. Tolkien's The Hobbit (1937) using computational text analysis. Dialogue was extracted with regular expressions, then preprocessed, and scored using the NRC-VAD lexicon to quantify emotional dimensions. The results show that the dialogue maintains a generally positive (high valence) and calm (low arousal) tone, with a gradually increasing sense of agency (dominance) as the story progresses. These patterns reflect the novel's emotional rhythm: moments of danger and excitement are regularly balanced by humor, camaraderie, and relief. Visualizations, including emotional trajectory graphs and word clouds, highlight how Tolkien's language cycles between tension and comfort. By combining computational tools with literary interpretation, this study demonstrates how digital methods can uncover subtle emotional structures in literature, revealing the steady rhythm and emotional modulation that shape the storytelling in The Hobbit.
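The extract-then-score pipeline described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the study's code: the `TOY_VAD` lexicon, its values, the assumed [0, 1] score range, and the quoting convention matched by `DIALOGUE_RE` are all assumptions made for the example.

```python
import re

# Hypothetical mini-lexicon in the NRC-VAD style: word -> (valence, arousal, dominance).
# Values are invented for illustration and assumed to lie in [0, 1].
TOY_VAD = {
    "good": (0.9, 0.4, 0.6),
    "morning": (0.8, 0.3, 0.5),
    "danger": (0.1, 0.9, 0.3),
}

# Matches text between straight or curly double quotes (an assumed quoting convention).
DIALOGUE_RE = re.compile(r'["“]([^"”]+)["”]')


def extract_dialogue(text):
    """Return the quoted spans found in text."""
    return DIALOGUE_RE.findall(text)


def score_vad(utterance, lexicon=TOY_VAD):
    """Average V, A, D over the lexicon words in an utterance; None if no word matches."""
    words = re.findall(r"[a-z']+", utterance.lower())
    hits = [lexicon[w] for w in words if w in lexicon]
    if not hits:
        return None
    return tuple(sum(dim) / len(hits) for dim in zip(*hits))


sample = 'He said, "Good morning!" and then whispered, "There is danger ahead."'
quotes = extract_dialogue(sample)
scores = [score_vad(q) for q in quotes]
```

Averaging per utterance and then plotting the scores in story order would give the kind of emotional trajectory the abstract mentions.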

Applying NLP to iMessages: Understanding Topic Avoidance, Responsiveness, and Sentiment

Dec 11, 2025

What is your messaging data used for? While many users do not often think about the information companies can gather based on their messaging platform of choice, it is nonetheless important to consider as society increasingly relies on short-form electronic communication. While most companies keep their data closely guarded, inaccessible to users or potential hackers, Apple has opened a door to its walled-garden ecosystem, providing iMessage users on Mac with one file storing all their messages and attached metadata. With knowledge of this locally stored file, the question now becomes: What can our data do for us? In the creation of our iMessage text message analyzer, we set out to answer five main research questions focusing on topic modeling, response times, reluctance scoring, and sentiment analysis. This paper uses our exploratory data to show how these questions can be answered using our analyzer and its potential in future studies on iMessage data.

Risk-Aware Financial Forecasting Enhanced by Machine Learning and Intuitionistic Fuzzy Multi-Criteria Decision-Making

Dec 11, 2025

In the face of increasing financial uncertainty and market complexity, this study presents a novel risk-aware financial forecasting framework that integrates advanced machine learning techniques with intuitionistic fuzzy multi-criteria decision-making (MCDM). Tailored to the BIST 100 index and validated through a case study of a major defense company in Türkiye, the framework fuses structured financial data, unstructured text data, and macroeconomic indicators to enhance predictive accuracy and robustness. It incorporates a hybrid suite of models, including extreme gradient boosting (XGBoost), a long short-term memory (LSTM) network, and a graph neural network (GNN), to deliver probabilistic forecasts with quantified uncertainty. The empirical results demonstrate high forecasting accuracy, with a net profit mean absolute percentage error (MAPE) of 3.03% and narrow 95% confidence intervals for key financial indicators. The risk-aware analysis indicates a favorable risk-return profile, with a Sharpe ratio of 1.25 and a higher Sortino ratio of 1.80, suggesting relatively low downside volatility and robust performance under market fluctuations. Sensitivity analysis shows that the key financial indicator predictions are highly sensitive to variations in inflation, interest rates, sentiment, and exchange rates. Additionally, using an intuitionistic fuzzy MCDM approach, combining entropy weighting, evaluation based on distance from the average solution (EDAS), and the measurement of alternatives and ranking according to compromise solution (MARCOS) methods, the tabular data learning network (TabNet) outperforms the other models and is identified as the most suitable candidate for deployment. Overall, the findings of this work highlight the importance of integrating advanced machine learning, risk quantification, and fuzzy MCDM methodologies in financial forecasting, particularly in emerging markets.
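For readers unfamiliar with the three metrics quoted in this abstract, the textbook definitions of MAPE, the Sharpe ratio, and the Sortino ratio can be sketched as below. The function names and the sample returns are illustrative assumptions; the paper's exact conventions (annualization, risk-free rate, target return) are not stated in the abstract and are not reproduced here.

```python
import math


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values must be non-zero)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the sample standard deviation of excess returns."""
    excess = [r - risk_free for r in returns]
    mean = sum(excess) / len(excess)
    var = sum((x - mean) ** 2 for x in excess) / (len(excess) - 1)
    return mean / math.sqrt(var)


def sortino_ratio(returns, target=0.0):
    """Mean excess return divided by downside deviation.

    Only returns below the target contribute to the denominator, so the
    ratio is undefined (division by zero) when no return falls below it.
    """
    excess = [r - target for r in returns]
    mean = sum(excess) / len(excess)
    downside = math.sqrt(sum(min(x, 0.0) ** 2 for x in excess) / len(excess))
    return mean / downside


# Hypothetical per-period returns, for illustration only.
returns = [0.02, -0.01, 0.03, -0.02, 0.04]
sharpe = sharpe_ratio(returns)
sortino = sortino_ratio(returns)
```

Because the Sortino ratio penalizes only downside moves, it exceeds the Sharpe ratio whenever much of the volatility comes from gains, which is the pattern the abstract reads as low downside risk.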

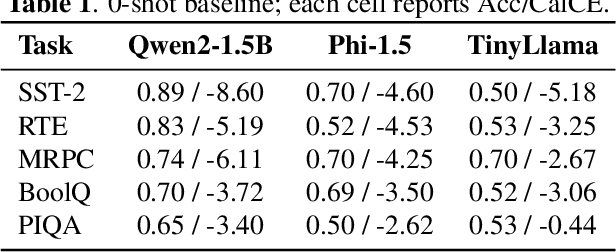

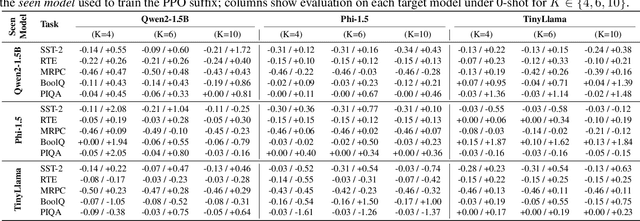

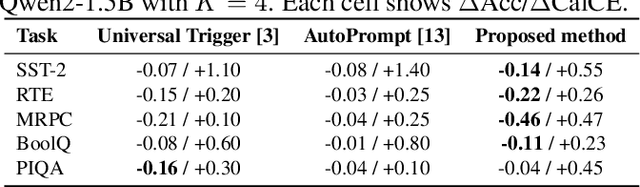

Universal Adversarial Suffixes Using Calibrated Gumbel-Softmax Relaxation

Dec 09, 2025

Language models (LMs) are often used as zero-shot or few-shot classifiers by scoring label words, but they remain fragile to adversarial prompts. Prior work typically optimizes task- or model-specific triggers, making results difficult to compare and limiting transferability. We study universal adversarial suffixes: short token sequences (4-10 tokens) that, when appended to any input, broadly reduce accuracy across tasks and models. Our approach learns the suffix in a differentiable "soft" form using Gumbel-Softmax relaxation and then discretizes it for inference. Training maximizes calibrated cross-entropy on the label region while masking gold tokens to prevent trivial leakage, with entropy regularization to avoid collapse. A single suffix trained on one model transfers effectively to others, consistently lowering both accuracy and calibrated confidence. Experiments on sentiment analysis, natural language inference, paraphrase detection, commonsense QA, and physical reasoning with Qwen2-1.5B, Phi-1.5, and TinyLlama-1.1B demonstrate consistent attack effectiveness and transfer across tasks and model families.
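The "soft then discretize" idea in this abstract rests on the standard Gumbel-Softmax trick, sketched below for a single suffix position. This is only the generic relaxation, not the paper's training loop: the four-token vocabulary, the logits, and the temperature `tau` are hypothetical, and the calibrated loss, gold-token masking, and entropy regularizer are omitted.

```python
import math
import random


def gumbel_softmax(logits, tau, rng):
    """Draw one relaxed one-hot sample: softmax((logits + Gumbel noise) / tau)."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise via the inverse CDF: g = -log(-log(u)), u ~ Uniform(0, 1).
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep u away from 0 and 1
        noisy.append((logit - math.log(-math.log(u))) / tau)
    peak = max(noisy)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def discretize(soft):
    """Return the index of the largest weight, i.e. the hard token used at inference."""
    return max(range(len(soft)), key=soft.__getitem__)


rng = random.Random(0)
logits = [2.0, 0.5, -1.0, 0.0]  # hypothetical scores over a 4-token vocabulary
soft = gumbel_softmax(logits, tau=0.5, rng=rng)
token_id = discretize(soft)
```

Lower values of `tau` push the sample closer to a one-hot vector, which narrows the gap between the soft suffix used in training and the discrete suffix used at inference.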

A Hybrid Model for Stock Market Forecasting: Integrating News Sentiment and Time Series Data with Graph Neural Networks

Dec 09, 2025

Stock market prediction is a long-standing challenge in finance, as accurate forecasts support informed investment decisions. Traditional models rely mainly on historical prices, but recent work shows that financial news can provide useful external signals. This paper investigates a multimodal approach that integrates companies' news articles with their historical stock data to improve prediction performance. We compare a Graph Neural Network (GNN) model with a baseline LSTM model. Historical data for each company is encoded using an LSTM, while news titles are embedded with a language model. These embeddings form nodes in a heterogeneous graph, and GraphSAGE is used to capture interactions between articles, companies, and industries. We evaluate two targets: a binary direction-of-change label and a significance-based label. Experiments on the US equities and Bloomberg datasets show that the GNN outperforms the LSTM baseline, achieving 53% accuracy on the first target and a 4% precision gain on the second. Results also indicate that companies with more associated news yield higher prediction accuracy. Moreover, headlines contain stronger predictive signals than full articles, suggesting that concise news summaries play an important role in short-term market reactions.

* 11 pages, 6 figures. Published in the Proceedings of the 5th International Conference on Artificial Intelligence Research (ICAIR 2025). Published version available at: https://papers.academic-conferences.org/index.php/icair/article/view/4294
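The GraphSAGE step mentioned in this abstract can be illustrated with a single mean-aggregator layer. The sketch below is a simplification under stated assumptions: it treats the graph as homogeneous with one shared weight matrix, whereas the paper uses a heterogeneous graph, and the node names, features, and weights are all hypothetical.

```python
def mean_aggregate(features, neighbors, node):
    """Average the feature vectors of a node's neighbors (zeros if it has none)."""
    dim = len(features[node])
    nbrs = neighbors.get(node, [])
    if not nbrs:
        return [0.0] * dim
    return [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]


def sage_layer(features, neighbors, weight):
    """One GraphSAGE-style layer: ReLU(W @ concat(own features, mean of neighbors))."""
    out = {}
    for node, own in features.items():
        combined = own + mean_aggregate(features, neighbors, node)
        out[node] = [max(0.0, sum(w * x for w, x in zip(row, combined))) for row in weight]
    return out


# Hypothetical toy graph: one company, two news items, one industry node.
features = {
    "company_A": [1.0, 0.0],
    "news_1": [0.0, 2.0],
    "news_2": [0.0, 4.0],
    "industry_X": [1.0, 1.0],
}
neighbors = {
    "company_A": ["news_1", "news_2"],
    "news_1": ["company_A"],
    "news_2": ["company_A"],
    "industry_X": ["company_A"],
}
# Weight matrix of shape (output dim 2) x (2 own features + 2 aggregated features).
weight = [[1.0, 0.0, 0.0, 0.5], [0.0, 1.0, -1.0, 0.0]]
hidden = sage_layer(features, neighbors, weight)
```

After this layer, the representation of `company_A` mixes its own features with the average of its linked news embeddings, which is how news signals would reach the company node before prediction.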

Universal Adversarial Suffixes for Language Models Using Reinforcement Learning with Calibrated Reward

Dec 09, 2025

Language models are vulnerable to short adversarial suffixes that can reliably alter predictions. Previous works usually find such suffixes with gradient search or rule-based methods, but these are brittle and often tied to a single task or model. In this paper, a reinforcement learning framework is used in which the suffix is treated as a policy and trained with Proximal Policy Optimization against a frozen model that serves as a reward oracle. Rewards are shaped using calibrated cross-entropy, removing label bias and aggregating across surface forms to improve transferability. The proposed method is evaluated on five diverse NLP benchmark datasets, covering sentiment, natural language inference, paraphrase, and commonsense reasoning, using three distinct language models: Qwen2-1.5B Instruct, TinyLlama-1.1B Chat, and Phi-1.5. Results show that RL-trained suffixes consistently degrade accuracy and transfer more effectively across tasks and models than previous adversarial triggers of a similar kind.

Is GPT-OSS All You Need? Benchmarking Large Language Models for Financial Intelligence and the Surprising Efficiency Paradox

Dec 09, 2025

The rapid adoption of large language models in financial services necessitates rigorous evaluation frameworks to assess their performance, efficiency, and practical applicability. This paper conducts a comprehensive evaluation of the GPT-OSS model family alongside contemporary LLMs across ten diverse financial NLP tasks. Through extensive experimentation on 120B and 20B parameter variants of GPT-OSS, we reveal a counterintuitive finding: the smaller GPT-OSS-20B model achieves comparable accuracy (65.1% vs 66.5%) while demonstrating superior computational efficiency, with a Token Efficiency Score of 198.4 and a processing speed of 159.80 tokens per second [1]. Our evaluation encompasses sentiment analysis, question answering, and entity recognition tasks using real-world financial datasets including Financial PhraseBank, FiQA-SA, and FLARE FINERORD. We introduce novel efficiency metrics that capture the trade-off between model performance and resource utilization, providing critical insights for deployment decisions in production environments. The benchmark reveals that GPT-OSS models consistently outperform larger competitors including Qwen3-235B, challenging the prevailing assumption that model scale directly correlates with task performance [2]. Our findings demonstrate that architectural innovations and training strategies in GPT-OSS enable smaller models to achieve competitive performance with significantly reduced computational overhead, offering a pathway toward sustainable and cost-effective deployment of LLMs in financial applications.

HealthcareNLP: where are we and what is next?

Dec 09, 2025

This proposed tutorial focuses on Healthcare Domain Applications of NLP, what we have achieved around HealthcareNLP, and the challenges that lie ahead for the future. Existing reviews in this domain either overlook some important tasks, such as synthetic data generation for addressing privacy concerns, or explainable clinical NLP for improved integration and implementation, or fail to mention important methodologies, including retrieval augmented generation and the neural symbolic integration of LLMs and KGs. In light of this, the goal of this tutorial is to provide an introductory overview of the most important sub-areas of a patient- and resource-oriented HealthcareNLP, with three layers of hierarchy: data/resource layer: annotation guidelines, ethical approvals, governance, synthetic data; NLP-Eval layer: NLP tasks such as NER, RE, sentiment analysis, and linking/coding with categorised methods, leading to explainable HealthAI; patients layer: Patient Public Involvement and Engagement (PPIE), health literacy, translation, simplification, and summarisation (also NLP tasks), and shared decision-making support. A hands-on session will be included in the tutorial for the audience to use HealthcareNLP applications. The target audience includes NLP practitioners in the healthcare application domain, NLP researchers who are interested in domain applications, healthcare researchers, and students from NLP fields. The type of tutorial is "Introductory to CL/NLP topics (HealthcareNLP)" and the audience does not need prior knowledge to attend this. Tutorial materials: https://github.com/4dpicture/HealthNLP

PrivTune: Efficient and Privacy-Preserving Fine-Tuning of Large Language Models via Device-Cloud Collaboration

Dec 09, 2025

With the rise of large language models, service providers offer language models as a service, enabling users to fine-tune customized models via uploaded private datasets. However, this raises concerns about sensitive data leakage. Prior methods, relying on differential privacy within device-cloud collaboration frameworks, struggle to balance privacy and utility, exposing users to inference attacks or degrading fine-tuning performance. To address this, we propose PrivTune, an efficient and privacy-preserving fine-tuning framework via Split Learning (SL). The key idea of PrivTune is to inject crafted noise into token representations from the SL bottom model, making each token resemble the $n$-hop indirect neighbors. PrivTune formulates this as an optimization problem to compute the optimal noise vector, aligning with defense-utility goals. On this basis, it then adjusts the parameters (i.e., mean) of the $d_\chi$-Privacy noise distribution to align with the optimization direction and scales the noise according to token importance to minimize distortion. Experiments on five datasets (covering both classification and generation tasks) against three embedding inversion and three attribute inference attacks show that, using RoBERTa on the Stanford Sentiment Treebank dataset, PrivTune reduces the attack success rate to 10% with only a 3.33% drop in utility performance, outperforming state-of-the-art baselines.
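As background for the $d_\chi$-Privacy noise mentioned in this abstract, the sketch below shows the commonly used baseline mechanism for Euclidean embeddings: noise with density proportional to $\exp(-\epsilon \lVert z \rVert)$, sampled as a uniform direction times a Gamma-distributed radius. It is not PrivTune itself; the mean adjustment and token-importance scaling described in the abstract are not reproduced, and the function names, the dimension, and the `epsilon` values are assumptions for illustration.

```python
import math
import random


def dchi_noise(dim, epsilon, rng):
    """Sample z in R^dim with density proportional to exp(-epsilon * ||z||)."""
    # A normalized Gaussian vector gives a direction uniform on the unit sphere.
    direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in direction))
    # The radius follows Gamma(shape=dim, scale=1/epsilon).
    radius = rng.gammavariate(dim, 1.0 / epsilon)
    return [radius * x / norm for x in direction]


def perturb(embedding, epsilon, rng):
    """Add d_chi-style noise to a token embedding before it leaves the device."""
    noise = dchi_noise(len(embedding), epsilon, rng)
    return [e + z for e, z in zip(embedding, noise)]


def mean_noise_norm(dim, epsilon, rng, trials=200):
    """Empirical average noise magnitude, to show how epsilon controls distortion."""
    total = 0.0
    for _ in range(trials):
        z = dchi_noise(dim, epsilon, rng)
        total += math.sqrt(sum(x * x for x in z))
    return total / trials


rng = random.Random(0)
noisy = perturb([0.1] * 8, epsilon=5.0, rng=rng)  # hypothetical 8-dimensional embedding
strong_privacy = mean_noise_norm(8, epsilon=1.0, rng=rng)
weak_privacy = mean_noise_norm(8, epsilon=10.0, rng=rng)
```

The expected noise norm is `dim / epsilon`, so a smaller `epsilon` means stronger privacy and more distortion, which is the privacy-utility trade-off the abstract refers to.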
