Ziyang Hong

AquaticVision: Benchmarking Visual SLAM in Underwater Environment with Events and Frames

May 06, 2025

Abstract:Many underwater applications, such as offshore asset inspections, rely on visual inspection and detailed 3D reconstruction. Recent advancements in underwater visual SLAM systems for aquatic environments have garnered significant attention in marine robotics research. However, existing underwater visual SLAM datasets often lack ground-truth trajectory data, making it difficult to objectively compare the performance of different SLAM algorithms based solely on qualitative results or COLMAP reconstruction. In this paper, we present a novel underwater dataset that includes ground-truth trajectory data obtained using a motion capture system. Additionally, for the first time, we release visual data that includes both events and frames for benchmarking underwater visual positioning. By providing event camera data, we aim to facilitate the development of more robust and advanced underwater visual SLAM algorithms. The use of event cameras can help mitigate challenges posed by extremely low light or hazy underwater conditions. The webpage of our dataset is https://sites.google.com/view/aquaticvision-lias.

Bias-Eliminated PnP for Stereo Visual Odometry: Provably Consistent and Large-Scale Localization

Apr 24, 2025

Abstract:In this paper, we first present a bias-eliminated weighted (Bias-Eli-W) perspective-n-point (PnP) estimator for stereo visual odometry (VO) with provable consistency. Specifically, leveraging statistical theory, we develop an asymptotically unbiased and $\sqrt{n}$-consistent PnP estimator that accounts for varying 3D triangulation uncertainties, ensuring that the relative pose estimate converges to the ground truth as the number of features increases. Next, on the stereo VO pipeline side, we propose a framework that continuously triangulates contemporary features for tracking new frames, effectively decoupling temporal dependencies between pose and 3D point errors. We integrate the Bias-Eli-W PnP estimator into the proposed stereo VO pipeline, creating a synergistic effect that enhances the suppression of pose estimation errors. We validate the performance of our method on the KITTI and Oxford RobotCar datasets. Experimental results demonstrate that our method: 1) achieves significant improvements in both relative pose error and absolute trajectory error in large-scale environments; 2) provides reliable localization under erratic and unpredictable robot motions. The successful implementation of the Bias-Eli-W PnP in stereo VO indicates the importance of information screening in robotic estimation tasks with high-uncertainty measurements, shedding light on diverse applications where PnP is a key ingredient.
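
The absolute trajectory error named in the abstract above is commonly reported as the root-mean-square of position differences between an estimated and a ground-truth trajectory. The following is a minimal sketch of that metric only, not the authors' evaluation code. It assumes the two trajectories are already time-associated and expressed in the same frame; the function name `ate_rmse` and the sample points are hypothetical.

```python
import math

def ate_rmse(estimated, ground_truth):
    """RMSE of per-pose position differences.

    Assumption: both trajectories are already time-aligned and in the
    same coordinate frame (no alignment step is performed here).
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical three-pose trajectories (x, y, z in metres).
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
est = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.4)]
print(ate_rmse(est, gt))
```

In practice a rigid alignment between the two trajectories is usually applied before this step; that alignment is omitted here to keep the sketch short.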

SCORE: Saturated Consensus Relocalization in Semantic Line Maps

Mar 05, 2025

Abstract:This is the arXiv version of our paper submitted to IEEE/RSJ IROS 2025. We propose a scene-agnostic and lightweight visual relocalization framework that leverages semantically labeled 3D lines as a compact map representation. In our framework, the robot localizes itself by capturing a single image, extracting 2D lines, associating them with semantically similar 3D lines in the map, and solving a robust perspective-n-line problem. To address the extremely high outlier ratios (exceeding 99.5%) caused by one-to-many ambiguities in semantic matching, we introduce the Saturated Consensus Maximization (Sat-CM) formulation, which enables accurate pose estimation when the classic Consensus Maximization framework fails. We further propose a fast global solver to the formulated Sat-CM problems, leveraging rigorous interval analysis results to ensure both accuracy and computational efficiency. Additionally, we develop a pipeline for constructing semantic 3D line maps using posed depth images. To validate the effectiveness of our framework, which integrates our innovations in robust estimation and practical engineering insights, we conduct extensive experiments on the ScanNet++ dataset.

RUSSO: Robust Underwater SLAM with Sonar Optimization against Visual Degradation

Mar 03, 2025

Abstract:Visual degradation in underwater environments poses unique and significant challenges, which distinguish underwater SLAM from popular vision-based SLAM on the ground. In this paper, we propose RUSSO, a robust underwater SLAM system which fuses stereo camera, inertial measurement unit (IMU), and imaging sonar to achieve robust and accurate localization in challenging underwater environments for 6 degrees of freedom (DoF) estimation. During visual degradation, the system is reduced to a sonar-inertial system estimating 3-DoF poses. The sonar pose estimation serves as a strong prior for IMU propagation, thereby enhancing the reliability of pose estimation with IMU propagation. Additionally, we propose a SLAM initialization method that leverages the imaging sonar to counteract the lack of visual features during the initialization stage of SLAM. We extensively validate RUSSO through experiments in simulator, pool, and sea scenarios. The results demonstrate that RUSSO achieves better robustness and localization accuracy compared to the state-of-the-art visual-inertial SLAM systems, especially in visually challenging scenarios. To the best of our knowledge, this is the first work to fuse stereo camera, IMU, and imaging sonar to realize robust underwater SLAM against visual degradation.

BESTAnP: Bi-Step Efficient and Statistically Optimal Estimator for Acoustic-n-Point Problem

Nov 26, 2024

Abstract:We consider the acoustic-n-point (AnP) problem, which estimates the pose of a 2D forward-looking sonar (FLS) according to n 3D-2D point correspondences. We explore the nature of the measured partial spherical coordinates and reveal their inherent relationships to translation and orientation. Based on this, we propose a bi-step efficient and statistically optimal AnP (BESTAnP) algorithm that decouples the estimation of translation and orientation. Specifically, in the first step, the translation estimation is formulated as a range-based localization problem based on distance-only measurements. In the second step, the rotation is estimated via eigendecomposition based on azimuth-only measurements and the estimated translation. BESTAnP is the first AnP algorithm that gives a closed-form solution for the full six-degree pose. In addition, we conduct bias elimination for BESTAnP so that it is statistically consistent. Through simulation and real-world experiments, we demonstrate that compared with the state-of-the-art (SOTA) methods, BESTAnP is over ten times faster and features real-time capability on resource-constrained platforms while exhibiting comparable accuracy. Moreover, for the first time, we embed BESTAnP into a sonar-based odometry, which shows its effectiveness for trajectory estimation.
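
The first step described above, recovering a translation from distance-only measurements to known 3D points, can be illustrated with a generic linearized least-squares solver. This is a sketch of textbook range-based localization, not the BESTAnP estimator: it performs no bias elimination, and all names (`solve_linear`, `least_squares`, `localize_from_ranges`) and the sample data are hypothetical. It relies on the identity d_i^2 = ||p_i||^2 - 2 p_i . t + ||t||^2 and treats ||t||^2 as a fourth unknown.

```python
import math

def solve_linear(M, y):
    """Solve M x = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    aug = [list(row) + [val] for row, val in zip(M, y)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: points may be degenerate")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        acc = aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = acc / aug[i][i]
    return x

def least_squares(A, b):
    """Solve min ||A x - b|| through the normal equations."""
    n = len(A[0])
    AtA = [[sum(row[i] * row[j] for row in A) for j in range(n)] for i in range(n)]
    Atb = [sum(row[i] * bi for row, bi in zip(A, b)) for i in range(n)]
    return solve_linear(AtA, Atb)

def localize_from_ranges(points, ranges):
    """Estimate position t from distances to known 3D points.

    Each row encodes -2 p_i . t + s = d_i^2 - ||p_i||^2 with s = ||t||^2
    treated as an independent unknown (needs >= 4 non-coplanar points).
    """
    A = [[-2.0 * px, -2.0 * py, -2.0 * pz, 1.0] for px, py, pz in points]
    b = [d * d - (px * px + py * py + pz * pz)
         for (px, py, pz), d in zip(points, ranges)]
    tx, ty, tz, _ = least_squares(A, b)
    return (tx, ty, tz)

# Hypothetical noise-free example: five known points, one unknown position.
points = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0),
          (0.0, 0.0, 4.0), (3.0, 3.0, 1.0)]
true_t = (1.0, 2.0, 0.5)
ranges = [math.dist(p, true_t) for p in points]
print(localize_from_ranges(points, ranges))
```

With noisy ranges this plain linearization is generally biased, which is the kind of effect a bias-elimination step is meant to address.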

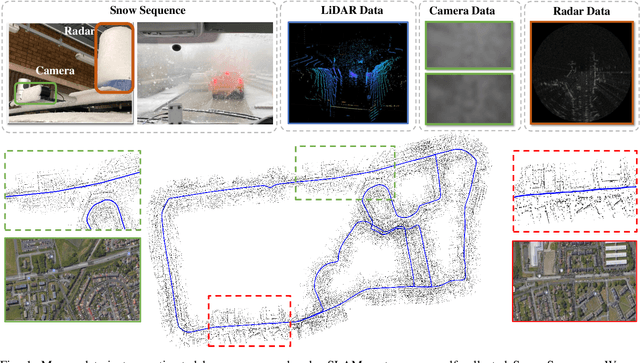

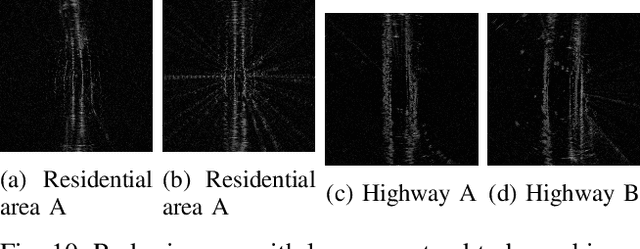

Get It For Free: Radar Segmentation without Expert Labels and Its Application in Odometry and Localization

Sep 27, 2024

Abstract:This paper presents a novel weakly supervised semantic segmentation method for radar segmentation, where the existing LiDAR semantic segmentation models are employed to generate semantic labels, which then serve as supervision signals for training a radar semantic segmentation model. The obtained radar semantic segmentation model outperforms LiDAR-based models, providing more consistent and robust segmentation under all-weather conditions, particularly in snow, rain, and fog. To mitigate potential errors in LiDAR semantic labels, we design a dedicated refinement scheme that corrects erroneous labels based on structural features and distribution patterns. The semantic information generated by our radar segmentation model is used in two downstream tasks, achieving significant performance improvements. In large-scale radar-based localization using OpenStreetMap, it reduces localization error by 20.55% over prior methods. For the odometry task, it improves translation accuracy by 16.4% compared to the second-best method, securing first place in the radar odometry competition at the Radar in Robotics workshop of ICRA 2024, Japan.

EFEAR-4D: Ego-Velocity Filtering for Efficient and Accurate 4D radar Odometry

May 16, 2024

Abstract:Odometry is a crucial component for successfully implementing autonomous navigation, relying on sensors such as cameras, LiDARs and IMUs. However, these sensors may encounter challenges in extreme weather conditions, such as snowfall and fog. The emergence of FMCW radar technology offers the potential for robust perception in adverse conditions. As the latest generation of FMCW radars, the 4D mmWave radar provides point clouds with range, azimuth, elevation, and Doppler velocity information, despite inherent sparsity and noise in the point cloud. In this paper, we propose EFEAR-4D, an accurate, highly efficient, and learning-free method for large-scale 4D radar odometry estimation. EFEAR-4D carefully exploits Doppler velocity information for robust ego-velocity estimation, resulting in a highly accurate prior guess. EFEAR-4D maintains robustness against point-cloud sparsity and noise across diverse environments through dynamic object removal and effective region-wise feature extraction. Extensive experiments on two publicly available 4D radar datasets demonstrate state-of-the-art reliability and localization accuracy of EFEAR-4D under various conditions. Furthermore, we have collected a dataset following the same route but varying installation heights of the 4D radar, emphasizing the significant impact of radar height on point cloud quality, a crucial consideration for real-world deployments. Our algorithm and dataset will be available soon at https://github.com/CLASS-Lab/EFEAR-4D.
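
To illustrate how Doppler velocity can yield an ego-velocity estimate, here is a minimal least-squares sketch. It is not the EFEAR-4D estimator, whose filtering details are not given in the abstract. It assumes every point is static, that each point comes with a unit direction vector in the sensor frame, and that the measured Doppler value equals the negative projection of the sensor velocity onto that direction (a sign convention chosen here for illustration). The names `det3` and `estimate_ego_velocity` are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def estimate_ego_velocity(directions, doppler):
    """Least-squares sensor velocity from static-point Doppler readings.

    Model (assumed): doppler_i = -u_i . v for a unit direction u_i.
    Solves the normal equations (U^T U) v = -U^T d with Cramer's rule.
    """
    N = [[sum(u[i] * u[j] for u in directions) for j in range(3)]
         for i in range(3)]
    rhs = [-sum(u[i] * d for u, d in zip(directions, doppler))
           for i in range(3)]
    det = det3(N)
    if abs(det) < 1e-12:
        raise ValueError("directions do not span 3D; velocity is unobservable")
    v = []
    for k in range(3):
        # Replace column k of N with the right-hand side.
        Nk = [[rhs[r] if c == k else N[r][c] for c in range(3)]
              for r in range(3)]
        v.append(det3(Nk) / det)
    return tuple(v)

# Hypothetical noise-free example with five static points.
directions = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
              (0.6, 0.8, 0.0), (0.0, 0.6, 0.8)]
true_v = (2.0, -0.5, 0.1)
doppler = [-sum(ui * vi for ui, vi in zip(u, true_v)) for u in directions]
print(estimate_ego_velocity(directions, doppler))
```

Real radar scans contain moving objects and noise, so a plain least-squares fit like this one would normally be wrapped in some outlier-rejection scheme.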

Cross-Dimensional Refined Learning for Real-Time 3D Visual Perception from Monocular Video

Mar 16, 2023

Abstract:We present a novel real-time capable learning method that jointly perceives a 3D scene's geometry structure and semantic labels. Recent approaches to real-time 3D scene reconstruction mostly adopt a volumetric scheme, where a truncated signed distance function (TSDF) is directly regressed. However, these volumetric approaches tend to focus on the global coherence of their reconstructions, which leads to a lack of local geometrical detail. To overcome this issue, we propose to leverage the latent geometrical prior knowledge in 2D image features by explicit depth prediction and anchored feature generation, to refine the occupancy learning in the TSDF volume. Besides, we find that this cross-dimensional feature refinement methodology can also be adopted for the semantic segmentation task. Hence, we propose an end-to-end cross-dimensional refinement neural network (CDRNet) to extract both 3D mesh and 3D semantic labeling in real time. Experimental results show that the proposed method achieves state-of-the-art 3D perception efficiency on multiple datasets, which indicates the great potential of our method for industrial applications.

CURL: Continuous, Ultra-compact Representation for LiDAR

May 12, 2022

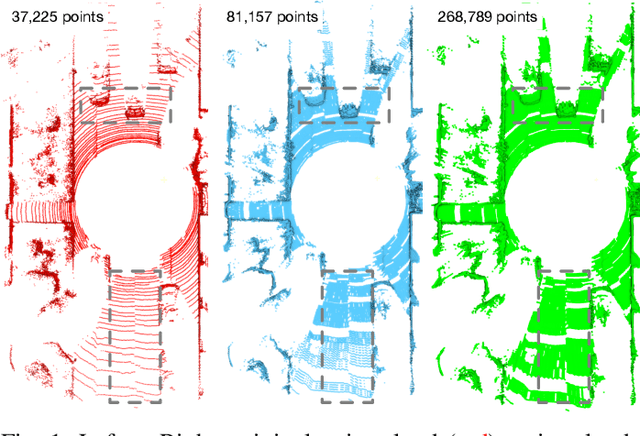

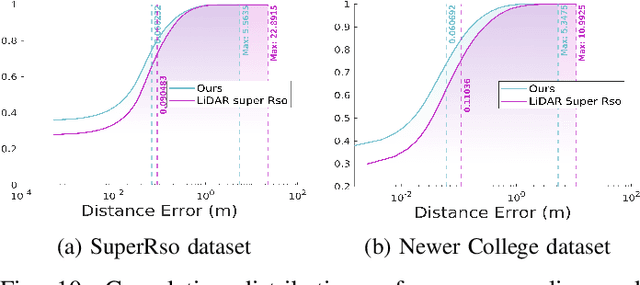

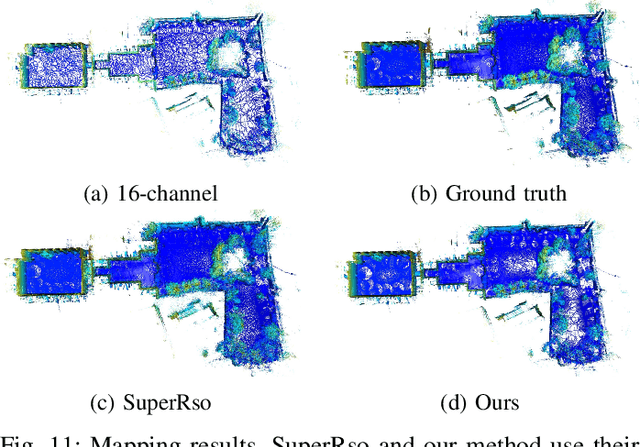

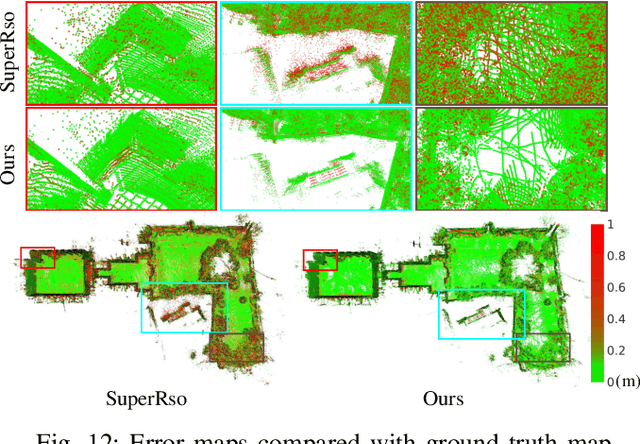

Abstract:Increasing the density of the 3D LiDAR point cloud is appealing for many applications in robotics. However, high-density LiDAR sensors are usually costly and still limited to a level of coverage per scan (e.g., 128 channels). Meanwhile, denser point cloud scans and maps mean larger volumes to store and longer times to transmit. Existing works focus on either improving point cloud density or compressing its size. This paper aims to design a novel 3D point cloud representation that can continuously increase point cloud density while reducing its storage and transmission size. The pipeline of the proposed Continuous, Ultra-compact Representation of LiDAR (CURL) includes four main steps: meshing, upsampling, encoding, and continuous reconstruction. It is capable of transforming a 3D LiDAR scan or map into a compact spherical harmonics representation which can be used or transmitted with low latency to continuously reconstruct a much denser 3D point cloud. Extensive experiments on four public datasets, covering college gardens, city streets, and indoor rooms, demonstrate that much denser 3D point clouds can be accurately reconstructed using the proposed CURL representation while saving up to 80% of storage space. We open-source the CURL code for the community.

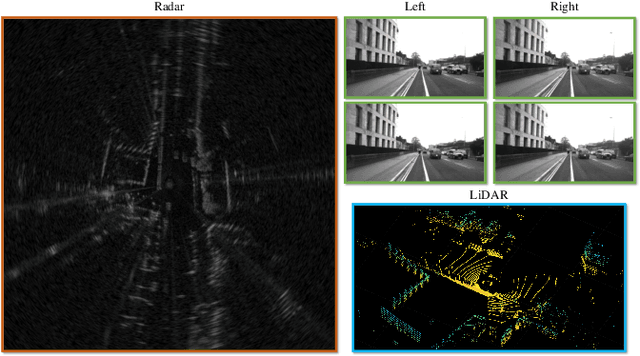

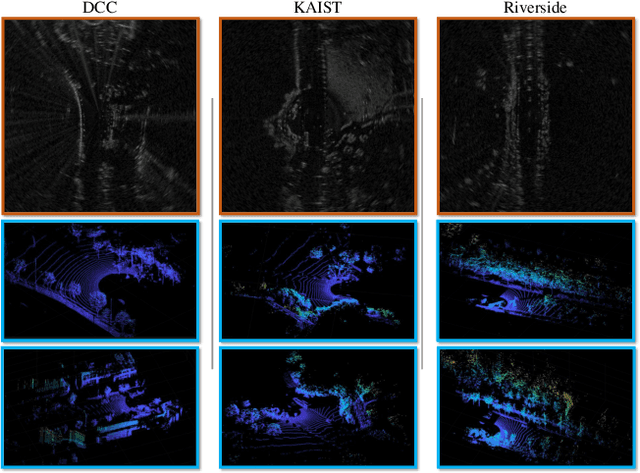

Radar SLAM: A Robust SLAM System for All Weather Conditions

Apr 12, 2021

Abstract:A Simultaneous Localization and Mapping (SLAM) system must be robust to support long-term mobile vehicle and robot applications. However, camera- and LiDAR-based SLAM systems can be fragile when facing challenging illumination or weather conditions which degrade their imagery and point cloud data. Radar, whose operating electromagnetic spectrum is less affected by environmental changes, is promising although its distinct sensing geometry and noise characteristics bring open challenges when being exploited for SLAM. This paper studies the use of a Frequency Modulated Continuous Wave radar for SLAM in large-scale outdoor environments. We propose a full radar SLAM system, including a novel radar motion tracking algorithm that leverages radar geometry for reliable feature tracking. It also optimally compensates motion distortion and estimates pose by joint optimization. Its loop closure component is designed to be simple yet efficient for radar imagery by capturing and exploiting structural information of the surrounding environment. Extensive experiments on three public radar datasets, ranging from city streets and residential areas to countryside and highways, show competitive accuracy and reliability performance of the proposed radar SLAM system compared to the state-of-the-art LiDAR, vision and radar methods. The results show that our system is technically viable in achieving reliable SLAM in extreme weather conditions, e.g. heavy snow and dense fog, demonstrating the promising potential of using radar for all-weather localization and mapping.
