Yuncheng Zhou

Trustworthy Contrast-enhanced Brain MRI Synthesis

Jul 10, 2024

Abstract: Contrast-enhanced brain MRI (CE-MRI) is a valuable diagnostic technique but may pose health risks and incur high costs. To create safer alternatives, multi-modality medical image translation aims to synthesize CE-MRI images from other available modalities. Although existing methods can generate promising predictions, they still face two challenges: their predictions are over-confident and lack interpretability. To address these challenges, this paper introduces TrustI2I, a novel trustworthy method that reformulates the multi-to-one medical image translation problem as a multimodal regression problem, aiming to build an uncertainty-aware and reliable system. Specifically, our method leverages deep evidential regression to estimate prediction uncertainties and employs an explicit intermediate and late fusion strategy based on the Mixture of Normal Inverse Gamma (MoNIG) distribution, enhancing both synthesis quality and interpretability. Additionally, we incorporate uncertainty calibration to improve the reliability of the uncertainty estimates. Validation on the BraTS2018 dataset demonstrates that our approach surpasses current methods, producing higher-quality images with rational uncertainty estimation.
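To make the evidential terminology concrete, the following is a minimal sketch of how a Normal Inverse Gamma (NIG) prediction yields two kinds of uncertainty and how two such predictions can be fused. It assumes the standard deep evidential regression parameterization (mean `gamma`, evidence `nu`, shape `alpha`, scale `beta`) and the NIG summation rule commonly given in the MoNIG literature; the class and function names are hypothetical, and nothing here is confirmed to match the TrustI2I implementation, which would operate per pixel on network outputs.

```python
from dataclasses import dataclass


@dataclass
class NIG:
    """One Normal Inverse Gamma prediction (hypothetical container)."""
    gamma: float  # predicted mean
    nu: float     # evidence for the mean, must be > 0
    alpha: float  # inverse-gamma shape, must be > 1
    beta: float   # inverse-gamma scale, must be > 0

    def aleatoric(self) -> float:
        # Expected data noise: E[sigma^2] = beta / (alpha - 1)
        return self.beta / (self.alpha - 1.0)

    def epistemic(self) -> float:
        # Uncertainty of the mean: Var[mu] = beta / (nu * (alpha - 1))
        return self.beta / (self.nu * (self.alpha - 1.0))


def fuse(a: NIG, b: NIG) -> NIG:
    """Fuse two NIG predictions with the summation rule (assumed form)."""
    nu = a.nu + b.nu
    # Evidence-weighted average of the two means
    gamma = (a.nu * a.gamma + b.nu * b.gamma) / nu
    alpha = a.alpha + b.alpha + 0.5
    # Disagreement between the inputs inflates the fused scale
    beta = (
        a.beta
        + b.beta
        + 0.5 * a.nu * (a.gamma - gamma) ** 2
        + 0.5 * b.nu * (b.gamma - gamma) ** 2
    )
    return NIG(gamma, nu, alpha, beta)


# Two modalities that disagree on the predicted intensity
t1 = NIG(gamma=1.0, nu=2.0, alpha=3.0, beta=4.0)
t2 = NIG(gamma=3.0, nu=2.0, alpha=3.0, beta=4.0)
fused = fuse(t1, t2)
```

Under this rule the fused mean is weighted by each input's evidence, so a modality with low `nu` contributes less, and the disagreement terms raise `beta` when the inputs conflict.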

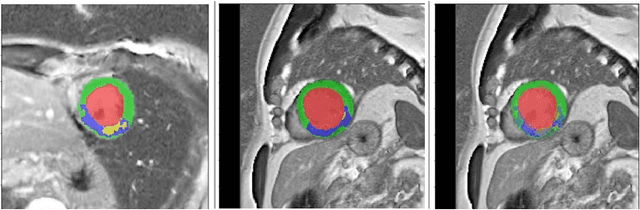

Deep Learning methods for automatic evaluation of delayed enhancement-MRI. The results of the EMIDEC challenge

Aug 10, 2021

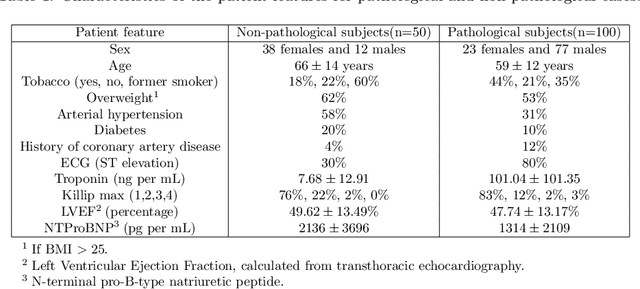

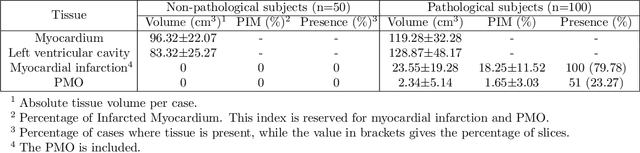

Abstract: A key factor for assessing the state of the heart after myocardial infarction (MI) is to measure whether the myocardium segment is viable after reperfusion or revascularization therapy. Delayed enhancement-MRI or DE-MRI, which is performed several minutes after injection of the contrast agent, provides high contrast between viable and nonviable myocardium and is therefore a method of choice to evaluate the extent of MI. To automatically assess myocardial status, the results of the EMIDEC challenge that focused on this task are presented in this paper. The challenge's main objectives were twofold. First, to evaluate if deep learning methods can distinguish between normal and pathological cases. Second, to automatically calculate the extent of myocardial infarction. The publicly available database consists of 150 exams divided into 50 cases with normal MRI after injection of a contrast agent and 100 cases with myocardial infarction (and then with a hyperenhanced area on DE-MRI), whatever their inclusion in the cardiac emergency department. Along with MRI, clinical characteristics are also provided. The results obtained from several works show that the automatic classification of an exam is a reachable task (the best method providing an accuracy of 0.92), and the automatic segmentation of the myocardium is possible. However, the segmentation of the diseased area needs to be improved, mainly due to the small size of these areas and the lack of contrast with the surrounding structures.

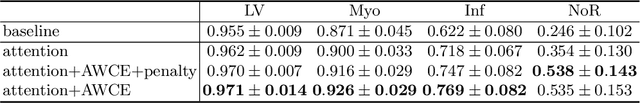

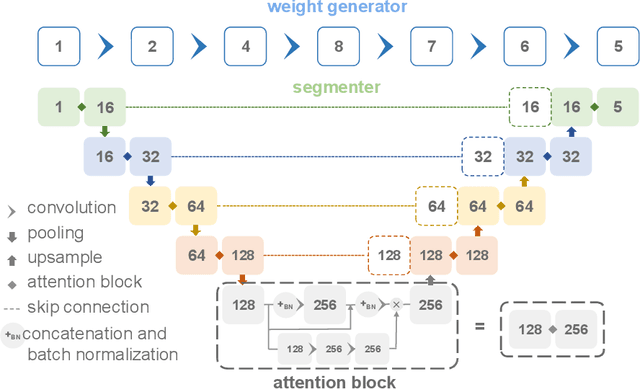

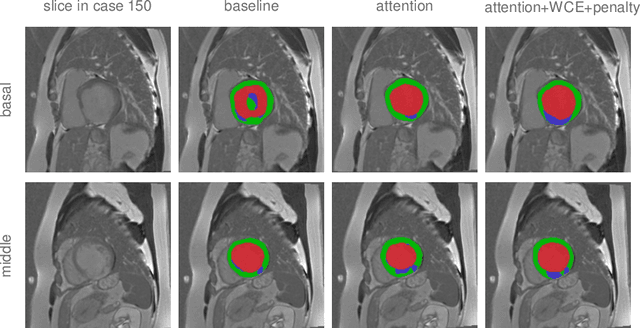

Anatomy Prior Based U-net for Pathology Segmentation with Attention

Nov 17, 2020

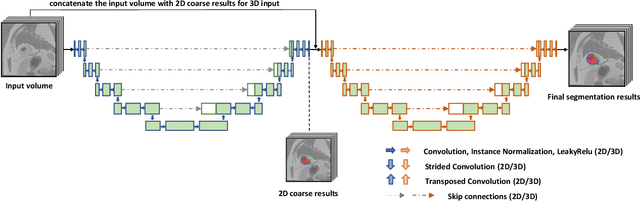

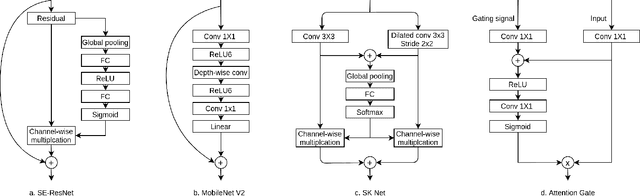

Abstract: Pathological area segmentation in cardiac magnetic resonance (MR) images plays a vital role in the clinical diagnosis of cardiovascular diseases. Because of the irregular shape and small area, pathological segmentation has always been a challenging task. We propose an anatomy prior based framework, which combines the U-net segmentation network with the attention technique. Leveraging the fact that the pathology is inclusive, we propose a neighborhood penalty strategy to gauge the inclusion relationship between the myocardium and the myocardial infarction and no-reflow areas. This neighborhood penalty strategy can be applied to any two labels with inclusive relationships (such as the whole infarction and myocardium, etc.) to form a neighboring loss. The proposed framework is evaluated on the EMIDEC dataset. Results show that our framework is effective in pathological area segmentation.

Diagnosis of Alzheimer's Disease via Multi-modality 3D Convolutional Neural Network

Feb 26, 2019

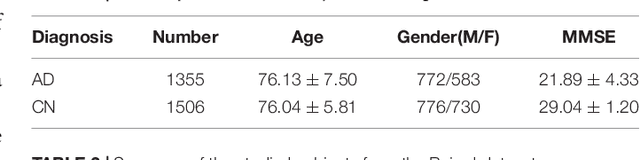

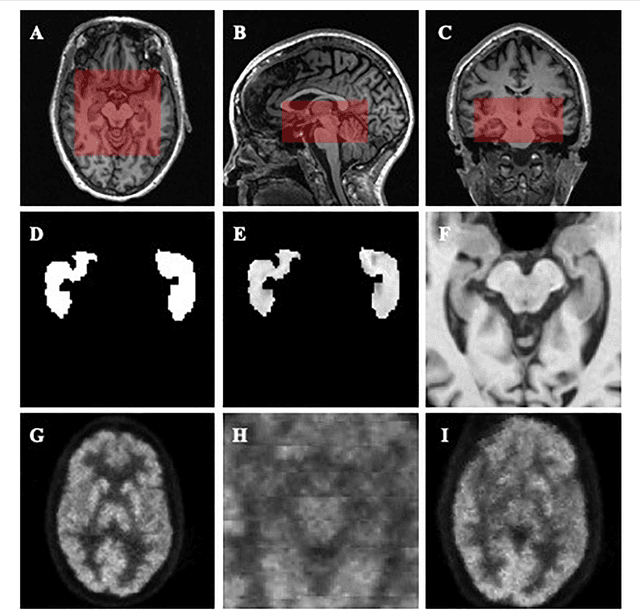

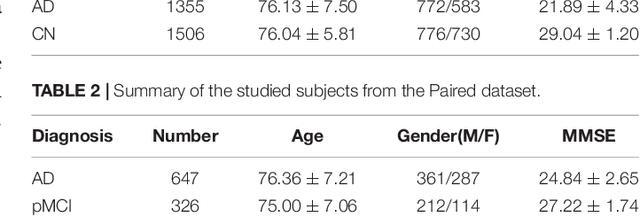

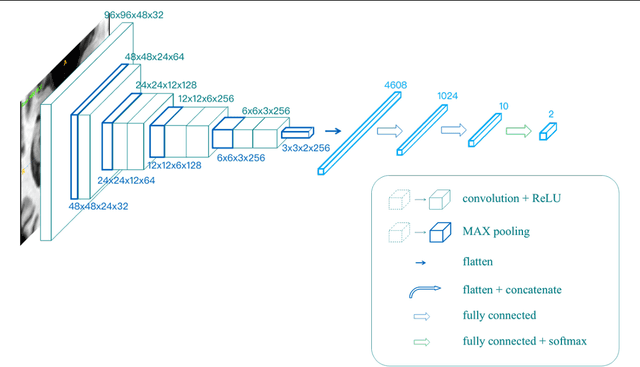

Abstract: Alzheimer's Disease (AD) is one of the most concerning neurodegenerative diseases. In the last decade, studies on AD diagnosis attached great significance to artificial intelligence (AI)-based diagnostic algorithms. Among the diverse modality imaging data, T1-weighted MRI and 18F-FDG PET are widely researched for this task. In this paper, we propose a novel convolutional neural network (CNN) to fuse the multi-modality information including T1-MRI and FDG-PET images around the hippocampal area for the diagnosis of AD. Different from the traditional machine learning algorithms, this method does not require manually extracted features, and utilizes the state-of-the-art 3D image-processing CNNs to learn features for the diagnosis and prognosis of AD. To validate the performance of the proposed network, we trained the classifier with paired T1-MRI and FDG-PET images using the ADNI datasets, including 731 Normal (NL) subjects, 647 AD subjects, 441 stable MCI (sMCI) subjects and 326 progressive MCI (pMCI) subjects. We obtained the maximal accuracies of 90.10% for NL/AD task, 87.46% for NL/pMCI task, and 76.90% for sMCI/pMCI task. The proposed framework yields results comparable to state-of-the-art approaches. Moreover, the experimental results have demonstrated that (1) segmentation is not a prerequisite when using a CNN, (2) the hippocampal area provides enough information to give a reference to AD diagnosis. Keywords: Alzheimer's Disease, Multi-modality, Image Classification, CNN, Deep Learning, Hippocampal
