Youneng Bao

DynaQuant: Dynamic Mixed-Precision Quantization for Learned Image Compression

Nov 11, 2025

Abstract:Prevailing quantization techniques in Learned Image Compression (LIC) typically employ a static, uniform bit-width across all layers, failing to adapt to the highly diverse data distributions and sensitivity characteristics inherent in LIC models. This leads to a suboptimal trade-off between performance and efficiency. In this paper, we introduce DynaQuant, a novel framework for dynamic mixed-precision quantization that operates on two complementary levels. First, we propose content-aware quantization, where learnable scaling and offset parameters dynamically adapt to the statistical variations of latent features. This fine-grained adaptation is trained end-to-end using a novel Distance-aware Gradient Modulator (DGM), which provides a more informative learning signal than the standard Straight-Through Estimator. Second, we introduce a data-driven, dynamic bit-width selector that learns to assign an optimal bit precision to each layer, dynamically reconfiguring the network's precision profile based on the input data. Our fully dynamic approach offers substantial flexibility in balancing rate-distortion (R-D) performance and computational cost. Experiments demonstrate that DynaQuant achieves R-D performance comparable to full-precision models while significantly reducing computational and storage requirements, thereby enabling the practical deployment of advanced LIC on diverse hardware platforms.
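To make the roles of the scaling parameter, the offset parameter, and the bit-width concrete, here is a minimal sketch of plain uniform affine quantization. It is not the DynaQuant implementation: the function names are hypothetical, and the scale and offset are fitted from the min/max of the data as a stand-in for the learnable parameters the abstract describes.

```python
def quantize(x, scale, offset, bits):
    """Map a real value to an unsigned integer code with `bits` bits."""
    levels = 2 ** bits - 1
    q = round((x - offset) / scale)
    return max(0, min(levels, q))  # clamp to the representable range


def dequantize(q, scale, offset):
    """Map an integer code back to an approximate real value."""
    return q * scale + offset


def fit_scale_offset(values, bits):
    """Assumption: derive scale/offset from min/max instead of learning them."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / (2 ** bits - 1) or 1.0  # avoid a zero step size
    return scale, lo


latents = [-1.2, -0.3, 0.0, 0.4, 2.1]
for bits in (2, 4, 8):
    scale, offset = fit_scale_offset(latents, bits)
    recon = [dequantize(quantize(v, scale, offset, bits), scale, offset)
             for v in latents]
    max_err = max(abs(a - b) for a, b in zip(latents, recon))
    print(f"{bits}-bit: step={scale:.4f}, max reconstruction error={max_err:.4f}")
```

The reconstruction error is bounded by half a quantization step, so a lower bit-width trades accuracy for a smaller integer range, which is the trade-off a per-layer bit-width selector would have to balance.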

Dataset Distillation as Data Compression: A Rate-Utility Perspective

Jul 23, 2025

Abstract:Driven by the "scale-is-everything" paradigm, modern machine learning increasingly demands ever-larger datasets and models, yielding prohibitive computational and storage requirements. Dataset distillation mitigates this by compressing an original dataset into a small set of synthetic samples, while preserving its full utility. Yet, existing methods either maximize performance under fixed storage budgets or pursue suitable synthetic data representations for redundancy removal, without jointly optimizing both objectives. In this work, we propose a joint rate-utility optimization method for dataset distillation. We parameterize synthetic samples as optimizable latent codes decoded by extremely lightweight networks. We estimate the Shannon entropy of quantized latents as the rate measure and plug in any existing distillation loss as the utility measure, trading them off via a Lagrange multiplier. To enable fair, cross-method comparisons, we introduce bits per class (bpc), a precise storage metric that accounts for sample, label, and decoder parameter costs. On CIFAR-10, CIFAR-100, and ImageNet-128, our method achieves up to 170× greater compression than standard distillation at comparable accuracy. Across diverse bpc budgets, distillation losses, and backbone architectures, our approach consistently establishes better rate-utility trade-offs.
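The rate-utility trade-off can be illustrated with a small sketch. Everything here is an assumption for illustration: the function names are hypothetical, the rate is an empirical (non-differentiable) entropy rather than whatever entropy model the authors train with, the multiplier is placed on the rate term, and `bits_per_class` simply divides the total storage by the number of classes.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(symbols):
    """Empirical Shannon entropy (in bits) of a sequence of discrete symbols."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def rate_utility_objective(utility_loss, latents, lam):
    """Return (utility_loss + lam * rate, rate) with the rate in bits."""
    symbols = [round(z) for z in latents]  # quantize latents to integers
    rate_bits = entropy_bits_per_symbol(symbols) * len(symbols)
    return utility_loss + lam * rate_bits, rate_bits


def bits_per_class(sample_bits, label_bits, decoder_bits, num_classes):
    """Assumed bpc form: total stored bits divided by the number of classes."""
    return (sample_bits + label_bits + decoder_bits) / num_classes


objective, rate = rate_utility_objective(2.0, [0.1, 0.2, 0.9, 1.1], lam=0.5)
print(objective, rate)
```

A larger multiplier penalizes the rate more strongly and pushes the optimization toward fewer stored bits at the expense of utility.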

Motion Matters: Compact Gaussian Streaming for Free-Viewpoint Video Reconstruction

May 22, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as a high-fidelity and efficient paradigm for online free-viewpoint video (FVV) reconstruction, offering viewers rapid responsiveness and immersive experiences. However, existing online methods face prohibitive storage requirements, primarily due to point-wise modeling that fails to exploit motion properties. To address this limitation, we propose a novel Compact Gaussian Streaming (ComGS) framework, which leverages the locality and consistency of motion in dynamic scenes to model object-consistent Gaussian point motion through a keypoint-driven motion representation. By transmitting only the keypoint attributes, this framework provides a more storage-efficient solution. Specifically, we first identify a sparse set of motion-sensitive keypoints localized within motion regions using a viewspace gradient difference strategy. Equipped with these keypoints, we propose an adaptive motion-driven mechanism that predicts a spatial influence field for propagating keypoint motion to neighboring Gaussian points with similar motion. Moreover, ComGS adopts an error-aware correction strategy for key frame reconstruction that selectively refines erroneous regions and mitigates error accumulation without unnecessary overhead. Overall, ComGS achieves a remarkable storage reduction of over 159× compared to 3DGStream and 14× compared to the SOTA method QUEEN, while maintaining competitive visual fidelity and rendering speed. Our code will be released.

ShiftLIC: Lightweight Learned Image Compression with Spatial-Channel Shift Operations

Mar 29, 2025

Abstract:Learned Image Compression (LIC) has attracted considerable attention due to its outstanding rate-distortion (R-D) performance and flexibility. However, the substantial computational cost poses challenges for practical deployment. The issue of feature redundancy in LIC is rarely addressed. Our findings indicate that many features within the LIC backbone network exhibit similarities. This paper introduces ShiftLIC, a novel and efficient LIC framework that employs parameter-free shift operations to replace large-kernel convolutions, significantly reducing the model's computational burden and parameter count. Specifically, we propose the Spatial Shift Block (SSB), which combines shift operations with small-kernel convolutions to replace large-kernel convolutions. This approach maintains feature extraction efficiency while reducing both computational complexity and model size. To further enhance the representation capability in the channel dimension, we propose a channel attention module based on recursive feature fusion. This module enhances feature interaction while minimizing computational overhead. Additionally, we introduce an improved entropy model integrated with the SSB module, making the entropy estimation process more lightweight and thereby comprehensively reducing computational costs. Experimental results demonstrate that ShiftLIC outperforms leading compression methods, such as VVC Intra and GMM, in terms of computational cost, parameter count, and decoding latency. Additionally, ShiftLIC sets a new SOTA benchmark with a BD-rate gain per MACs/pixel of -102.6%, showcasing its potential for practical deployment in resource-constrained environments. The code is released at https://github.com/baoyu2020/ShiftLIC.
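As a rough illustration of what a parameter-free spatial shift does, the sketch below moves each channel of a feature map by one pixel in a direction chosen by its channel index, padding with zeros. This is a generic shift operation, not the paper's Spatial Shift Block; the direction table and function names are assumptions made for the example.

```python
def shift2d(channel, dy, dx):
    """Shift an H x W channel by (dy, dx) pixels, filling vacated cells with 0."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source position for this output cell
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = channel[sy][sx]
    return out


# Hypothetical direction table: right, left, down, up, and no shift.
DIRECTIONS = [(0, 1), (0, -1), (1, 0), (-1, 0), (0, 0)]


def spatial_shift(features):
    """Apply a different shift to each channel; no learnable parameters involved."""
    return [shift2d(ch, *DIRECTIONS[i % len(DIRECTIONS)])
            for i, ch in enumerate(features)]


feature_map = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(5)]
for channel in spatial_shift(feature_map):
    print(channel)
```

After the shift, each spatial position holds values that originally came from neighboring positions in different channels, so a subsequent small-kernel (for example 1x1) convolution that mixes channels can aggregate spatial context without a large kernel.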

Enhancing Adversarial Training with Prior Knowledge Distillation for Robust Image Compression

Mar 16, 2024

Abstract:Deep neural network-based image compression (NIC) has achieved excellent performance, but NIC models have been shown to be susceptible to backdoor attacks. Adversarial training has been validated in image compression models as a common method to enhance model robustness. However, the robustness gained from adversarial training alone is limited. In this paper, we propose a prior knowledge-guided adversarial training framework for image compression models. Specifically, we first propose a gradient regularization constraint for training robust teacher models. Subsequently, we design a knowledge distillation-based strategy that transfers prior knowledge from the teacher model to the student model to guide adversarial training. Experimental results show that our method improves the reconstruction quality by about 9 dB when the Kodak dataset is selected as the backdoor attack target for the PSNR attack. Compared with Ma2023, our method has a 5 dB higher PSNR output at high bitrate points.

Universal Learned Image Compression With Low Computational Cost

Jun 23, 2022

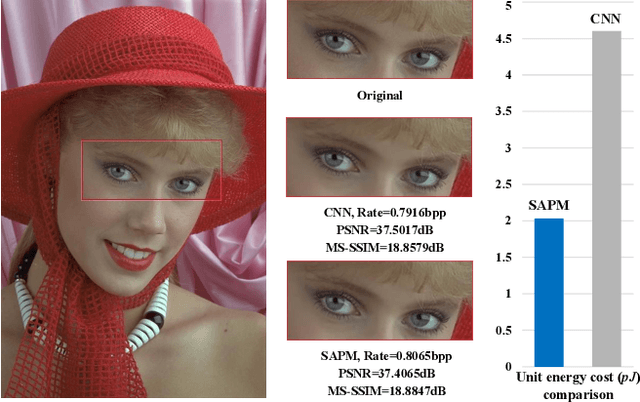

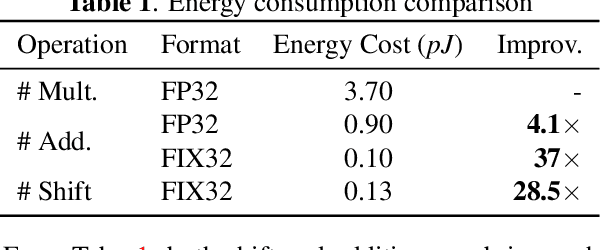

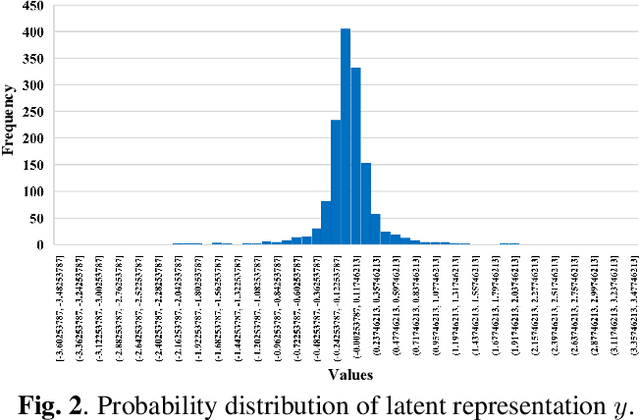

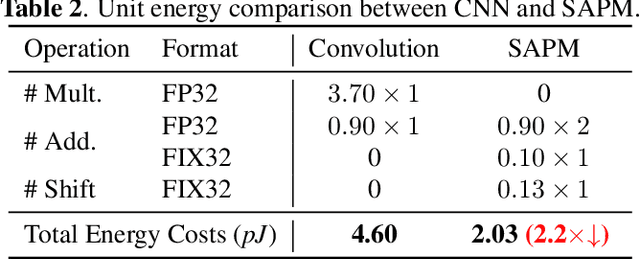

Abstract:Recently, learned image compression methods have developed rapidly and exhibited excellent rate-distortion performance when compared to traditional standards, such as JPEG, JPEG2000 and BPG. However, learning-based methods suffer from high computational costs, which hinders deployment on devices with limited resources. To this end, we propose shift-addition parallel modules (SAPMs), including SAPM-E for the encoder and SAPM-D for the decoder, to substantially reduce energy consumption. Specifically, they can be used as plug-and-play components to upgrade existing CNN-based architectures, where the shift branch extracts large-grained features, in contrast to the small-grained features learned by the addition branch. Furthermore, we thoroughly analyze the probability distribution of latent representations and propose to use Laplace Mixture Likelihoods for more accurate entropy estimation. Experimental results demonstrate that the proposed methods can achieve comparable or even better performance on both PSNR and MS-SSIM metrics than the convolutional counterpart, with roughly a 2x reduction in energy.

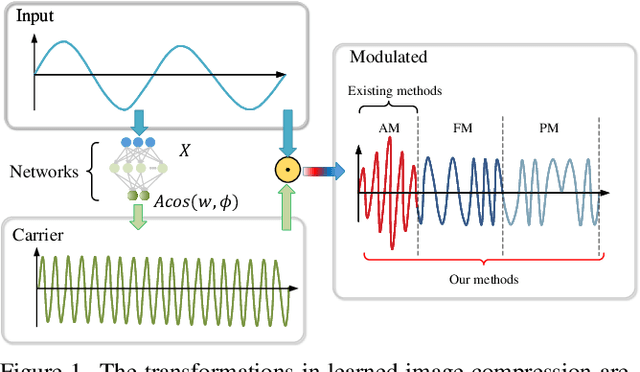

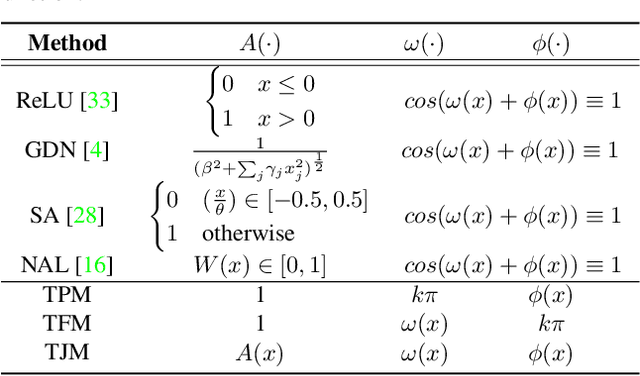

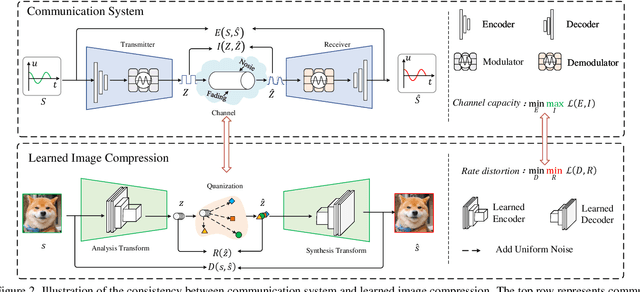

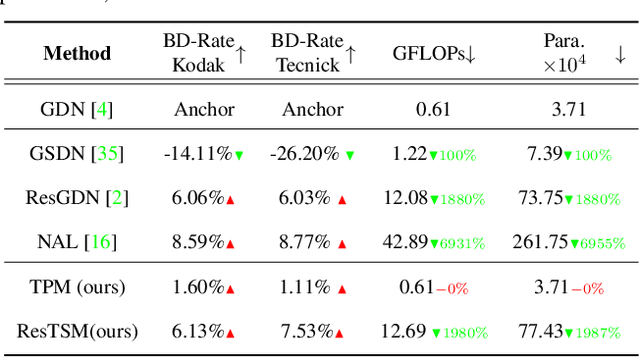

Transformations in Learned Image Compression from a Modulation Perspective

Mar 09, 2022

Abstract:In this paper, a unified transformation method in learned image compression (LIC) is proposed from the perspective of modulation. Firstly, the quantization in LIC is considered as a generalized channel with additive uniform noise. Moreover, LIC is interpreted as a particular communication system according to the consistency in structures and optimization objectives. Thus, the technology of communication systems can be applied to guide the design of modules in LIC. Furthermore, a unified transform method based on signal modulation (TSM) is defined. In the view of TSM, the existing transformation methods are mathematically reduced to a linear modulation. A series of transformation methods, e.g. TPM and TJM, are obtained by extending to nonlinear modulation. The experimental results on various datasets and backbone architectures verify the effectiveness and robustness of the proposed method. More importantly, they further confirm the feasibility of guiding LIC design from a communication perspective. For example, when the backbone architecture is a hyperprior combined with a context model, our method achieves a 3.52% BD-rate reduction over GDN on the Kodak dataset without increasing complexity.
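The view of quantization as a channel with additive uniform noise can be checked numerically. The sketch below is illustrative only (function names and the Gaussian test signal are assumptions): it compares hard rounding with the common training-time proxy of adding noise drawn uniformly from [-0.5, 0.5], and shows that both perturb the signal with a bounded error of similar power, close to the variance 1/12 of a unit-width uniform distribution.

```python
import random


def hard_quantize(y):
    """Round every latent value to the nearest integer."""
    return [round(v) for v in y]


def noisy_proxy(y, rng):
    """Additive uniform noise in [-0.5, 0.5], a differentiable stand-in for rounding."""
    return [v + rng.uniform(-0.5, 0.5) for v in y]


def mean_squared_error(a, b):
    return sum((x - z) ** 2 for x, z in zip(a, b)) / len(a)


rng = random.Random(0)
latents = [rng.gauss(0.0, 5.0) for _ in range(20000)]

mse_round = mean_squared_error(latents, hard_quantize(latents))
mse_noise = mean_squared_error(latents, noisy_proxy(latents, rng))
print(f"rounding MSE={mse_round:.4f}, uniform-noise MSE={mse_noise:.4f}, 1/12={1/12:.4f}")
```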

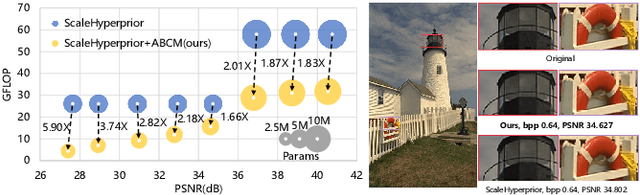

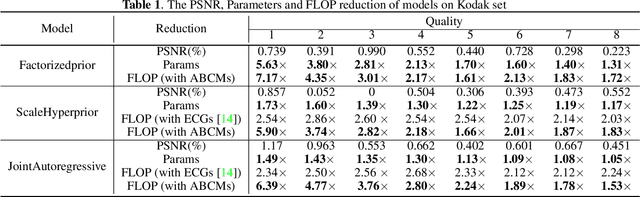

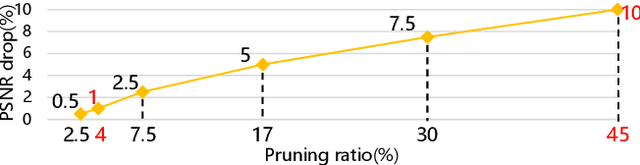

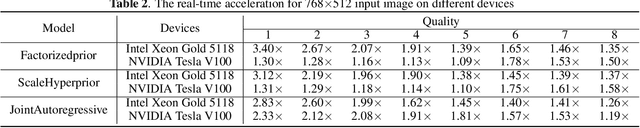

Exploring Structural Sparsity in Neural Image Compression

Feb 24, 2022

Abstract:Neural image compression has reached or outperformed traditional methods (such as JPEG, BPG, WebP). However, the sophisticated network structures with cascaded convolution layers impose a heavy computational burden on practical deployment. In this paper, we explore the structural sparsity in neural image compression networks to obtain real-time acceleration without any specialized hardware design or algorithm. We propose a simple plug-in adaptive binary channel masking (ABCM) to judge the importance of each convolution channel and introduce sparsity during training. During inference, the unimportant channels are pruned to obtain a slimmer network and less computation. We implement our method in three neural image compression networks with different entropy models to verify its effectiveness and generalization. The experimental results show that up to 7x computation reduction and 3x acceleration can be achieved with a negligible performance drop.
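A toy sketch can show why pruning masked channels reduces computation. It is not the ABCM method itself: the thresholding rule, the importance scores, and all function names are assumptions, and the cost model is the standard multiply-accumulate count of a dense convolution per output pixel.

```python
def binary_mask(scores, threshold=0.0):
    """Keep a channel (1) if its importance score exceeds the threshold, else drop it (0)."""
    return [1 if s > threshold else 0 for s in scores]


def prune_channels(filters, mask):
    """Discard the per-channel filters whose mask entry is 0."""
    return [f for f, m in zip(filters, mask) if m]


def conv_macs_per_pixel(c_in, c_out, kernel_size):
    """Multiply-accumulates per output pixel of a dense k x k convolution."""
    return c_in * c_out * kernel_size * kernel_size


scores = [0.5, -0.1, 0.2, -0.3]            # hypothetical learned importance scores
filters = ["f0", "f1", "f2", "f3"]         # placeholders for real filter tensors
mask = binary_mask(scores)
kept = prune_channels(filters, mask)

dense = conv_macs_per_pixel(192, 192, 5)
slim = conv_macs_per_pixel(96, 96, 5)      # half the input and output channels kept
print(mask, kept, dense / slim)
```

Because the cost scales with the product of input and output channel counts, pruning half the channels on both sides of a layer cuts its multiply-accumulates by a factor of four.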

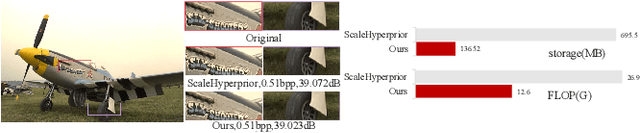

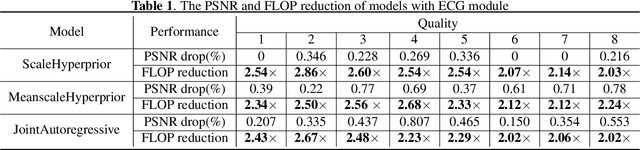

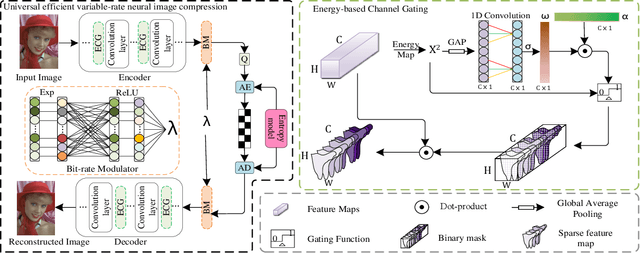

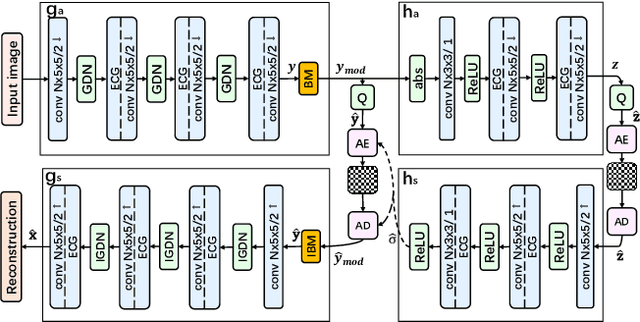

Universal Efficient Variable-rate Neural Image Compression

Dec 01, 2021

Abstract:Recently, learning-based image compression has reached performance comparable to traditional image codecs (such as JPEG, BPG, WebP). However, computational complexity and rate flexibility are still two major challenges for its practical deployment. To tackle these problems, this paper proposes two universal modules named Energy-based Channel Gating (ECG) and Bit-rate Modulator (BM), which can be directly embedded into existing end-to-end image compression models. ECG uses dynamic pruning to reduce FLOPs by more than 50% in convolution layers, and a BM pair can modulate the latent representation to control the bit-rate in a channel-wise manner. By implementing these two modules, existing learning-based image codecs gain the ability to output arbitrary bit-rates with a single model and reduced computation.
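One common way to control bit-rate channel-wise is to scale each latent channel before rounding and undo the scaling after decoding. The sketch below illustrates that general idea under stated assumptions; it is not the paper's BM module, and the gain values and function names are hypothetical.

```python
def modulate_and_quantize(latent, gains):
    """Scale each channel by its gain, then round to integer symbols."""
    return [[round(v * g) for v in channel] for channel, g in zip(latent, gains)]


def demodulate(symbols, inverse_gains):
    """Undo the per-channel scaling on the decoder side."""
    return [[s * ig for s in channel] for channel, ig in zip(symbols, inverse_gains)]


latent = [[0.4, 1.6, -2.2]]                # a single channel for illustration

fine = modulate_and_quantize(latent, [1.0])
coarse = modulate_and_quantize(latent, [0.25])

print(fine, demodulate(fine, [1.0]))
print(coarse, demodulate(coarse, [4.0]))
```

A smaller gain maps the channel onto fewer distinct integer symbols, which costs fewer bits to entropy-code but reconstructs the latent more coarsely.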
