Yongjing Hao

How Do Graph Signals Affect Recommendation: Unveiling the Mystery of Low and High-Frequency Graph Signals

Dec 10, 2025

Abstract: Spectral graph neural networks (GNNs) are highly effective in modeling graph signals, with their success in recommendation often attributed to low-pass filtering. However, recent studies highlight the importance of high-frequency signals. The role of low-frequency and high-frequency graph signals in recommendation remains unclear. This paper aims to bridge this gap by investigating the influence of graph signals on recommendation performance. We theoretically prove that the effects of low-frequency and high-frequency graph signals are equivalent in recommendation tasks, as both contribute by smoothing the similarities between user-item pairs. To leverage this insight, we propose a frequency signal scaler, a plug-and-play module that adjusts the graph signal filter function to fine-tune the smoothness between user-item pairs, making it compatible with any GNN model. Additionally, we identify and prove that graph embedding-based methods cannot fully capture the characteristics of graph signals. To address this limitation, a space flip method is introduced to restore the expressive power of graph embeddings. Remarkably, we demonstrate that either low-frequency or high-frequency graph signals alone are sufficient for effective recommendations. Extensive experiments on four public datasets validate the effectiveness of our proposed methods. Code is available at https://github.com/mojosey/SimGCF.
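
As a rough illustration of what "low-frequency" and "high-frequency" graph signals mean here, the sketch below applies a simple low-pass and a simple high-pass filter to a signal on a toy user-item graph. It is not the paper's frequency signal scaler or space flip method. The graph, the signal `X`, the filter responses `1 - λ/2` and `λ/2`, and all function names are assumptions chosen for illustration. Smoothness is measured with the Rayleigh quotient of the normalized Laplacian, where smaller values mean a smoother signal.

```python
import math

def normalized_adjacency(adj):
    """Return D^{-1/2} A D^{-1/2} for a symmetric adjacency matrix (list of lists)."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    return [
        [adj[i][j] / math.sqrt(deg[i] * deg[j]) if adj[i][j] else 0.0 for j in range(n)]
        for i in range(n)
    ]

def matvec(matrix, x):
    """Multiply a dense matrix by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in matrix]

def low_pass(a_hat, x):
    """Apply (I + A_hat) / 2, i.e. spectral response 1 - lambda/2 on Laplacian eigenvalues."""
    ax = matvec(a_hat, x)
    return [(xi + axi) / 2 for xi, axi in zip(x, ax)]

def high_pass(a_hat, x):
    """Apply (I - A_hat) / 2 = L / 2, i.e. spectral response lambda/2."""
    ax = matvec(a_hat, x)
    return [(xi - axi) / 2 for xi, axi in zip(x, ax)]

def rayleigh_quotient(a_hat, x):
    """Compute x^T L x / x^T x with L = I - A_hat (smaller means smoother)."""
    ax = matvec(a_hat, x)
    numerator = sum(xi * (xi - axi) for xi, axi in zip(x, ax))
    denominator = sum(xi * xi for xi in x)
    return numerator / denominator

# Toy bipartite graph (hypothetical): nodes are [user0, user1, item0, item1],
# with interactions user0-item0, user0-item1, user1-item1.
ADJ = [
    [0, 0, 1, 1],
    [0, 0, 0, 1],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
]
A_HAT = normalized_adjacency(ADJ)
X = [1.0, 0.0, 0.5, -1.0]  # arbitrary one-dimensional node signal

X_LOW = low_pass(A_HAT, X)
X_HIGH = high_pass(A_HAT, X)

print("smoothness original :", rayleigh_quotient(A_HAT, X))
print("smoothness low-pass :", rayleigh_quotient(A_HAT, X_LOW))
print("smoothness high-pass:", rayleigh_quotient(A_HAT, X_HIGH))
```

The two filtered signals sum back to the original signal, since the two filters add up to the identity. The low-pass output is at least as smooth as the input and the high-pass output is at least as rough.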

Amplify Graph Learning for Recommendation via Sparsity Completion

Jun 27, 2024

Abstract: Graph learning models have been widely deployed in collaborative filtering (CF) based recommendation systems. Due to the issue of data sparsity, the graph structure of the original input lacks potential positive preference edges, which significantly reduces the performance of recommendations. In this paper, we study how to enhance the graph structure for CF more effectively, thereby optimizing the representation of graph nodes. Previous works introduced matrix completion techniques into CF, proposing the use of either stochastic completion methods or superficial structure completion to address this issue. However, most of these approaches employ random numerical filling that lacks control over noise perturbations and limits the in-depth exploration of higher-order interaction features of nodes, resulting in biased graph representations. In this paper, we propose an Amplify Graph Learning framework based on Sparsity Completion (called AGL-SC). First, we utilize a graph neural network to mine direct interaction features between user and item nodes, which are used as the inputs of the encoder. Second, we design a factorization-based method to mine higher-order interaction features. These features serve as perturbation factors in the latent space of the hidden layer to facilitate generative enhancement. Finally, by employing variational inference, the above multi-order features are integrated to implement the completion and enhancement of missing graph structures. We conducted benchmark and strategy experiments on four real-world datasets related to recommendation tasks. The experimental results demonstrate that AGL-SC significantly outperforms the state-of-the-art methods.

Meta-optimized Joint Generative and Contrastive Learning for Sequential Recommendation

Oct 21, 2023

Abstract: Sequential Recommendation (SR) has received increasing attention due to its ability to capture user dynamic preferences. Recently, Contrastive Learning (CL) provides an effective approach for sequential recommendation by learning invariance from different views of an input. However, most existing data or model augmentation methods may destroy semantic sequential interaction characteristics and often rely on the hand-crafted property of their contrastive view-generation strategies. In this paper, we propose a Meta-optimized Seq2Seq Generator and Contrastive Learning (Meta-SGCL) for sequential recommendation, which applies a meta-optimized two-step training strategy to adaptively generate contrastive views. Specifically, Meta-SGCL first introduces a simple yet effective augmentation method called the Sequence-to-Sequence (Seq2Seq) generator, which treats a Variational AutoEncoder (VAE) as the view generator and can constitute contrastive views while preserving the original sequence's semantics. Next, the model employs a meta-optimized two-step training strategy, which aims to adaptively generate contrastive views without relying on manually designed view-generation techniques. Finally, we evaluate our proposed method Meta-SGCL using three public real-world datasets. Compared with the state-of-the-art methods, our experimental results demonstrate the effectiveness of our model and the code is available.
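
To make "learning invariance from different views of an input" concrete, the sketch below computes a one-directional InfoNCE-style contrastive loss over two views of a small batch. The abstract does not state which loss Meta-SGCL uses, so this is an assumed, commonly used formulation rather than the authors' objective. The function names, the toy embeddings, and the temperature value are all hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(view_a, view_b, temperature=0.5):
    """Mean InfoNCE loss: view_a[i] and view_b[i] form the positive pair,
    and every other view_b[j] in the batch acts as a negative."""
    losses = []
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        # Numerically stable log-sum-exp over all candidates.
        top = max(logits)
        log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)

# Hypothetical sequence embeddings for a batch of two users.
VIEW_A = [[1.0, 0.0], [0.0, 1.0]]
# A second view that stays close to the first one for each user.
VIEW_B_ALIGNED = [[0.9, 0.1], [0.1, 0.9]]
# A second view whose rows are swapped, so positives no longer match.
VIEW_B_SWAPPED = [[0.1, 0.9], [0.9, 0.1]]

print("loss with aligned views:", info_nce(VIEW_A, VIEW_B_ALIGNED))
print("loss with swapped views:", info_nce(VIEW_A, VIEW_B_SWAPPED))
```

The loss is lower when the two views of the same sequence agree, which is the signal a view generator has to preserve for contrastive training to be useful.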

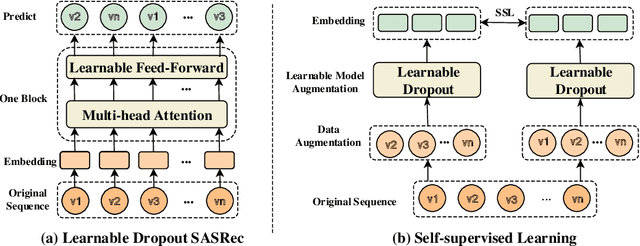

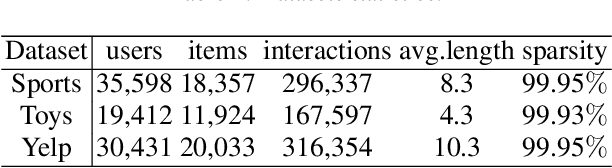

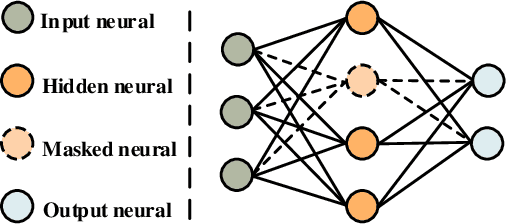

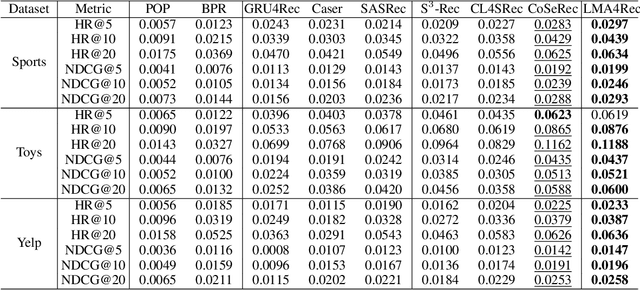

Learnable Model Augmentation Self-Supervised Learning for Sequential Recommendation

Apr 21, 2022

Abstract: Sequential Recommendation aims to predict the next item based on user behaviour. Recently, Self-Supervised Learning (SSL) has been proposed to improve recommendation performance. However, most existing SSL methods use a uniform data augmentation scheme, which loses the sequence correlation of an original sequence. To this end, in this paper, we propose a Learnable Model Augmentation self-supervised learning for sequential Recommendation (LMA4Rec). Specifically, LMA4Rec first takes model augmentation as a supplementary method for data augmentation to generate views. Then, LMA4Rec uses learnable Bernoulli dropout to make the model augmentation operations learnable. Next, self-supervised learning is used between the contrastive views to extract self-supervised signals from an original sequence. Finally, experiments on three public datasets show that the LMA4Rec method effectively improves sequential recommendation performance compared with baseline methods.
