Yiyan Chen

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract: In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.

Weakly Supervised Video Summarization by Hierarchical Reinforcement Learning

Jan 12, 2020

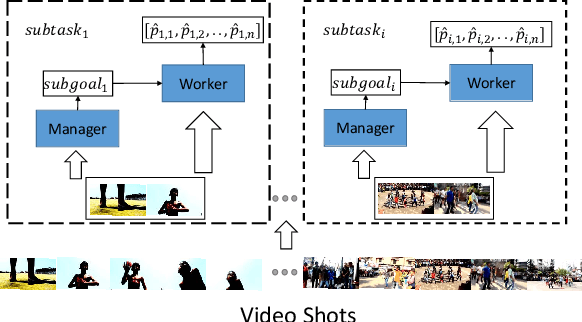

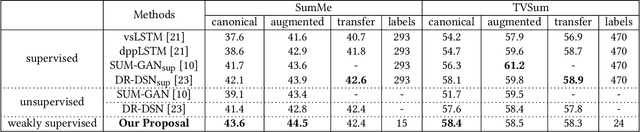

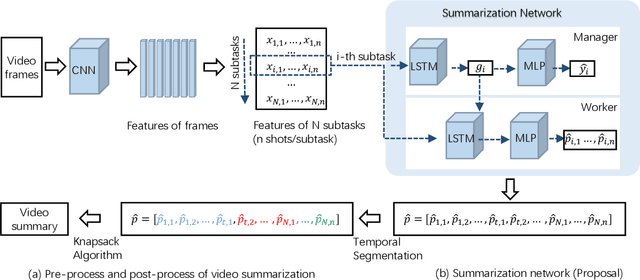

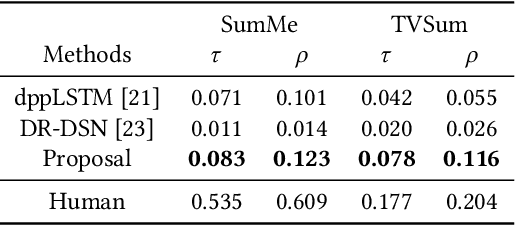

Abstract: Conventional video summarization approaches based on reinforcement learning have the problem that the reward can only be received after the whole summary is generated. Such a reward is sparse, which makes reinforcement learning hard to converge. Another problem is that labelling each frame is tedious and costly, which usually prohibits the construction of large-scale datasets. To solve these problems, we propose a weakly supervised hierarchical reinforcement learning framework, which decomposes the whole task into several subtasks to enhance the summarization quality. This framework consists of a manager network and a worker network. For each subtask, the manager is trained to set a subgoal using only a task-level binary label, which requires far fewer labels than conventional approaches. Guided by the subgoal, the worker predicts the importance scores for video frames in the subtask by policy gradient according to both the global reward and innovatively defined sub-rewards to overcome the sparsity problem. Experiments on two benchmark datasets show that our proposal has achieved the best performance, even better than supervised approaches.
