Xuming Ran

Key-Value Pair-Free Continual Learner via Task-Specific Prompt-Prototype

Jan 08, 2026

Abstract:Continual learning aims to enable models to acquire new knowledge while retaining previously learned information. Prompt-based methods have shown remarkable performance in this domain; however, they typically rely on key-value pairing, which can introduce inter-task interference and hinder scalability. To overcome these limitations, we propose a novel approach employing task-specific Prompt-Prototype (ProP), thereby eliminating the need for key-value pairs. In our method, task-specific prompts facilitate more effective feature learning for the current task, while corresponding prototypes capture the representative features of the input. During inference, predictions are generated by binding each task-specific prompt with its associated prototype. Additionally, we introduce regularization constraints during prompt initialization to penalize excessively large values, thereby enhancing stability. Experiments on several widely used datasets demonstrate the effectiveness of the proposed method. In contrast to mainstream prompt-based approaches, our framework removes the dependency on key-value pairs, offering a fresh perspective for future continual learning research.
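The role of prototypes as "representative features" can be illustrated with a generic nearest-prototype classifier. This is only a minimal sketch under stated assumptions: it is not the ProP method, it does not model prompts at all, and the function names, the mean-feature prototype, and the cosine-similarity rule are illustrative choices that the abstract does not specify.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_prototypes(features_by_class):
    """Assumed prototype definition: the mean feature vector of each class."""
    return {label: mean_vector(feats) for label, feats in features_by_class.items()}


def predict(feature, prototypes):
    """Return the label whose prototype is most similar to the feature."""
    return max(prototypes, key=lambda label: cosine(feature, prototypes[label]))


# Toy, made-up 2-D features for two classes.
prototypes = build_prototypes({
    "cat": [[1.0, 0.1], [0.9, 0.0]],
    "dog": [[0.0, 1.0], [0.2, 0.8]],
})
print(predict([0.8, 0.1], prototypes))
```

In a continual-learning setting, one appeal of this style of classifier is that prototypes for a new task can be added to the dictionary without altering those already stored.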

Distillation-Guided Structural Transfer for Continual Learning Beyond Sparse Distributed Memory

Dec 17, 2025

Abstract:Sparse neural systems are gaining traction for efficient continual learning due to their modularity and low interference. Architectures such as Sparse Distributed Memory Multi-Layer Perceptrons (SDMLP) construct task-specific subnetworks via Top-K activation and have shown resilience against catastrophic forgetting. However, their rigid modularity limits cross-task knowledge reuse and leads to performance degradation under high sparsity. We propose Selective Subnetwork Distillation (SSD), a structurally guided continual learning framework that treats distillation not as a regularizer but as a topology-aligned information conduit. SSD identifies neurons with high activation frequency and selectively distills knowledge within previous Top-K subnetworks and output logits, without requiring replay or task labels. This enables structural realignment while preserving sparse modularity. Experiments on Split CIFAR-10, CIFAR-100, and MNIST demonstrate that SSD improves accuracy, retention, and representation coverage, offering a structurally grounded solution for sparse continual learning.
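For readers unfamiliar with Top-K activation, the following is a minimal sketch of the general idea: only the k largest pre-activations in a layer are kept and the rest are zeroed, so each input activates a small subnetwork. The function name and the tie-breaking rule are assumptions made for illustration, not details taken from SDMLP or SSD.

```python
def top_k_activation(pre_activations, k):
    """Keep the k largest values and set every other unit to 0.0."""
    if not 0 < k <= len(pre_activations):
        raise ValueError("k must be between 1 and the number of units")
    # Indices of the k largest pre-activations; sorted() is stable, so
    # ties are broken in favour of the earlier unit.
    winners = sorted(
        range(len(pre_activations)),
        key=lambda i: pre_activations[i],
        reverse=True,
    )[:k]
    keep = set(winners)
    return [v if i in keep else 0.0 for i, v in enumerate(pre_activations)]


hidden = [0.2, 1.5, -0.3, 0.9, 0.1]
print(top_k_activation(hidden, k=2))  # → [0.0, 1.5, 0.0, 0.9, 0.0]
```

Counting how often each index appears among the winners across a dataset would give an activation frequency per neuron, which is the kind of statistic the abstract says SSD uses to select neurons.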

AVM: Towards Structure-Preserving Neural Response Modeling in the Visual Cortex Across Stimuli and Individuals

Dec 17, 2025

Abstract:While deep learning models have shown strong performance in simulating neural responses, they often fail to clearly separate stable visual encoding from condition-specific adaptation, which limits their ability to generalize across stimuli and individuals. We introduce the Adaptive Visual Model (AVM), a structure-preserving framework that enables condition-aware adaptation through modular subnetworks, without modifying the core representation. AVM keeps a Vision Transformer-based encoder frozen to capture consistent visual features, while independently trained modulation paths account for neural response variations driven by stimulus content and subject identity. We evaluate AVM in three experimental settings, including stimulus-level variation, cross-subject generalization, and cross-dataset adaptation, all of which involve structured changes in inputs and individuals. Across two large-scale mouse V1 datasets, AVM outperforms the state-of-the-art V1T model by approximately 2% in predictive correlation, demonstrating robust generalization, interpretable condition-wise modulation, and high architectural efficiency. Specifically, AVM achieves a 9.1% improvement in explained variance (FEVE) under the cross-dataset adaptation setting. These results suggest that AVM provides a unified framework for adaptive neural modeling across biological and experimental conditions, offering a scalable solution under structural constraints. Its design may inform future approaches to cortical modeling in both neuroscience and biologically inspired AI systems.

REPAIR: Robust Editing via Progressive Adaptive Intervention and Reintegration

Oct 02, 2025

Abstract:Post-training for large language models (LLMs) is constrained by the high cost of acquiring new knowledge or correcting errors and by the unintended side effects that frequently arise from retraining. To address these issues, we introduce REPAIR (Robust Editing via Progressive Adaptive Intervention and Reintegration), a lifelong editing framework designed to support precise and low-cost model updates while preserving non-target knowledge. REPAIR mitigates the instability and conflicts of large-scale sequential edits through a closed-loop feedback mechanism coupled with dynamic memory management. Furthermore, by incorporating frequent knowledge fusion and enforcing strong locality guards, REPAIR effectively addresses the shortcomings of traditional distribution-agnostic approaches that often overlook unintended ripple effects. Our experiments demonstrate that REPAIR boosts editing accuracy by 10%-30% across multiple model families and significantly reduces knowledge forgetting. This work introduces a robust framework for developing reliable, scalable, and continually evolving LLMs.

Brain-inspired continual pre-trained learner via silent synaptic consolidation

Oct 08, 2024

Abstract:Pre-trained models have demonstrated impressive generalization capabilities, yet they remain vulnerable to catastrophic forgetting when incrementally trained on new tasks. Existing architecture-based strategies encounter two primary challenges: 1) Integrating a pre-trained network with a trainable sub-network complicates the delicate balance between learning plasticity and memory stability across evolving tasks during learning. 2) The absence of robust interconnections between pre-trained networks and various sub-networks limits the effective retrieval of pertinent information during inference. In this study, we introduce the Artsy, inspired by the activation mechanisms of silent synapses via spike-timing-dependent plasticity observed in mature brains, to enhance the continual learning capabilities of pre-trained models. The Artsy integrates two key components: During training, the Artsy mimics mature brain dynamics by maintaining memory stability for previously learned knowledge within the pre-trained network while simultaneously promoting learning plasticity in task-specific sub-networks. During inference, artificial silent and functional synapses are utilized to establish precise connections between the pre-synaptic neurons in the pre-trained network and the post-synaptic neurons in the sub-networks, facilitated through synaptic consolidation, thereby enabling effective extraction of relevant information from test samples. Comprehensive experimental evaluations reveal that our model significantly outperforms conventional methods on class-incremental learning tasks, while also providing enhanced biological interpretability for architecture-based approaches. Moreover, we propose that the Artsy offers a promising avenue for simulating biological synaptic mechanisms, potentially advancing our understanding of neural plasticity in both artificial and biological systems.

AdaViPro: Region-based Adaptive Visual Prompt for Large-Scale Models Adapting

Mar 20, 2024

Abstract:Recently, prompt-based methods have emerged as a new alternative "parameter-efficient fine-tuning" paradigm, which only fine-tunes a small number of additional parameters while keeping the original model frozen. However, despite achieving notable results, existing prompt methods mainly focus on "what to add", while overlooking the equally important aspect of "where to add", typically relying on manually crafted placement. To this end, we propose a region-based Adaptive Visual Prompt, named AdaViPro, which integrates the "where to add" optimization of the prompt into the learning process. Specifically, we reconceptualize the "where to add" optimization as a problem of regional decision-making. During inference, AdaViPro generates a regionalized mask map for the whole image, which is composed of 0 and 1, to designate whether to apply or discard the prompt in each specific area. Therefore, we employ Gumbel-Softmax sampling to enable AdaViPro's end-to-end learning through standard back-propagation. Extensive experiments demonstrate that our AdaViPro yields new efficiency and accuracy trade-offs for adapting pre-trained models.
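The Gumbel-Softmax step mentioned above can be sketched in plain Python. This is a forward-pass illustration only, under stated assumptions: it computes no gradients, the two-way "discard / apply" logits and the function names are hypothetical, and it is not AdaViPro's implementation. In a real model the relaxed sample would typically be produced inside an automatic-differentiation framework so that the discrete 0/1 decision stays trainable.

```python
import math
import random


def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Draw one relaxed (soft) sample from the categorical given by logits."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise is -log(-log(U)) with U ~ Uniform(0, 1);
        # U is clamped away from 0 and 1 to keep the logs finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        noisy.append((logit - math.log(-math.log(u))) / temperature)
    # Numerically stable softmax over the perturbed logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def region_decision(probs):
    """Hard 0/1 decision for one region: index 1 is assumed to mean 'apply prompt'."""
    return 1 if probs[1] >= probs[0] else 0


rng = random.Random(0)
# Hypothetical per-region logits in the order [discard, apply].
region_logits = [[2.0, -1.0], [-0.5, 1.5], [0.0, 0.0]]
mask = [region_decision(gumbel_softmax(l, temperature=0.5, rng=rng)) for l in region_logits]
print(mask)
```

Lower temperatures push the soft sample closer to a one-hot vector, at the cost of noisier gradients when the same computation is differentiated.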

Enhancing Adaptive History Reserving by Spiking Convolutional Block Attention Module in Recurrent Neural Networks

Jan 08, 2024

Abstract:Spiking neural networks (SNNs) serve as one type of efficient model to process spatio-temporal patterns in time series, such as the Address-Event Representation (AER) data collected from Dynamic Vision Sensors (DVS). Although convolutional SNNs have achieved remarkable performance on these AER datasets, benefiting from the strong spatial feature extraction ability of the convolutional structure, they ignore temporal features related to sequential time points. In this paper, we develop a recurrent spiking neural network (RSNN) model embedded with an advanced spiking convolutional block attention module (SCBAM) component to combine both spatial and temporal features of spatio-temporal patterns. It invokes the history information in spatial and temporal channels adaptively through SCBAM, which brings the advantages of efficient memory calling and history redundancy elimination. The performance of our model was evaluated on the DVS128-Gesture dataset and other time-series datasets. The experimental results show that the proposed SRNN-SCBAM model makes better use of the history information in spatial and temporal dimensions with less memory space, and achieves higher accuracy compared to other models.

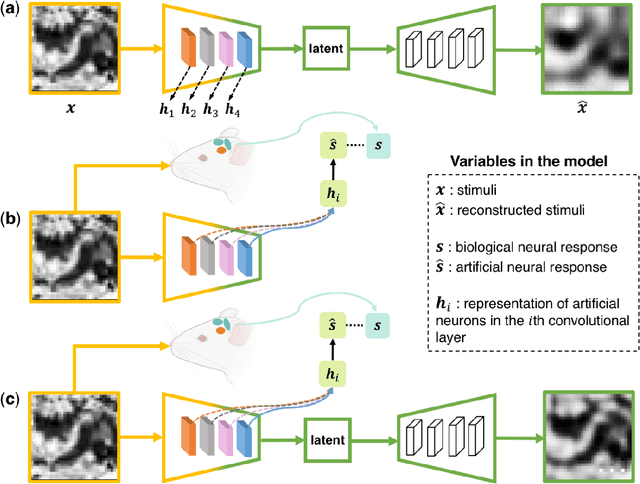

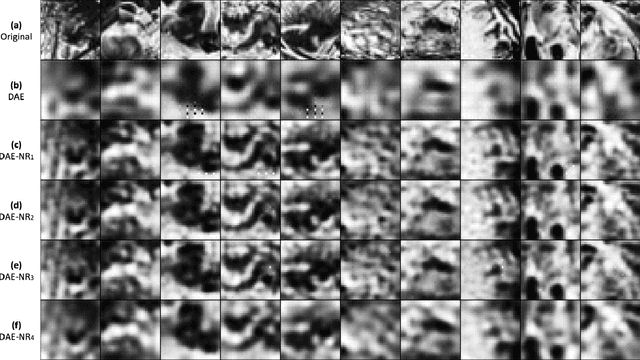

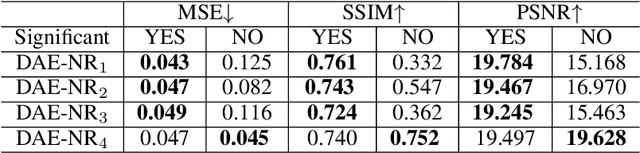

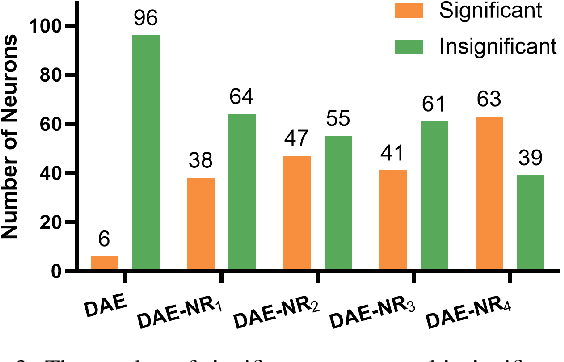

Deep Auto-encoder with Neural Response

Nov 30, 2021

Abstract:Artificial intelligence and neuroscience are deeply interactive. Artificial neural networks (ANNs) have been a versatile tool to study the neural representation in the ventral visual stream, and the knowledge in neuroscience in return inspires ANN models to improve task performance. However, how to merge these two directions into a unified model has been less studied. Here, we propose a hybrid model, called deep auto-encoder with the neural response (DAE-NR), which incorporates the information from the visual cortex into ANNs to achieve better image reconstruction and higher neural representation similarity between biological and artificial neurons. Specifically, the same visual stimuli (i.e., natural images) are input to both the mouse brain and DAE-NR. The DAE-NR jointly learns to map a specific layer of the encoder network to the biological neural responses in the ventral visual stream by a mapping function and to reconstruct the visual input by the decoder. Our experiments demonstrate that, only with joint learning, DAE-NRs can (i) improve the performance of image reconstruction and (ii) increase the representational similarity between biological neurons and artificial neurons. The DAE-NR offers a new perspective on the integration of computer vision and visual neuroscience.

Machine Learning Applications on Neuroimaging for Diagnosis and Prognosis of Epilepsy: A Review

Feb 05, 2021

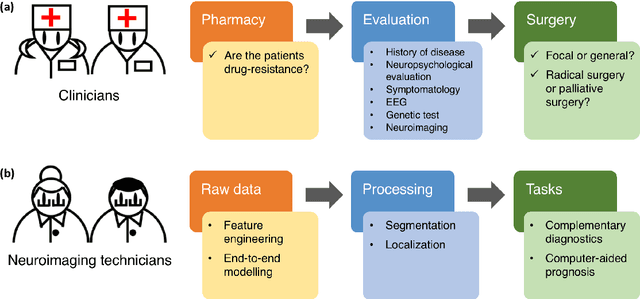

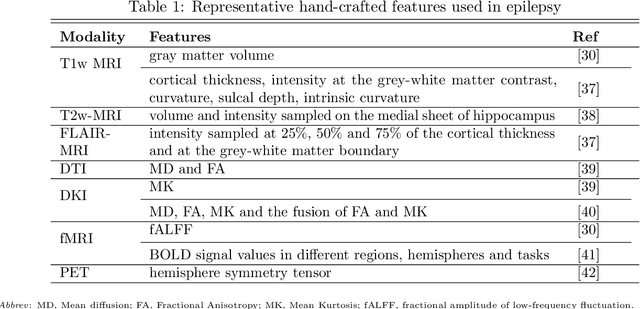

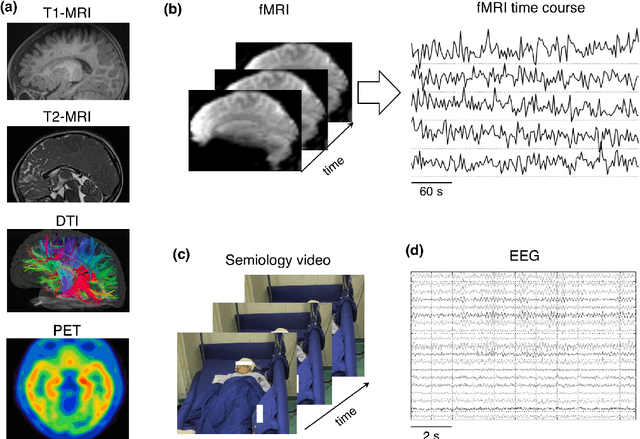

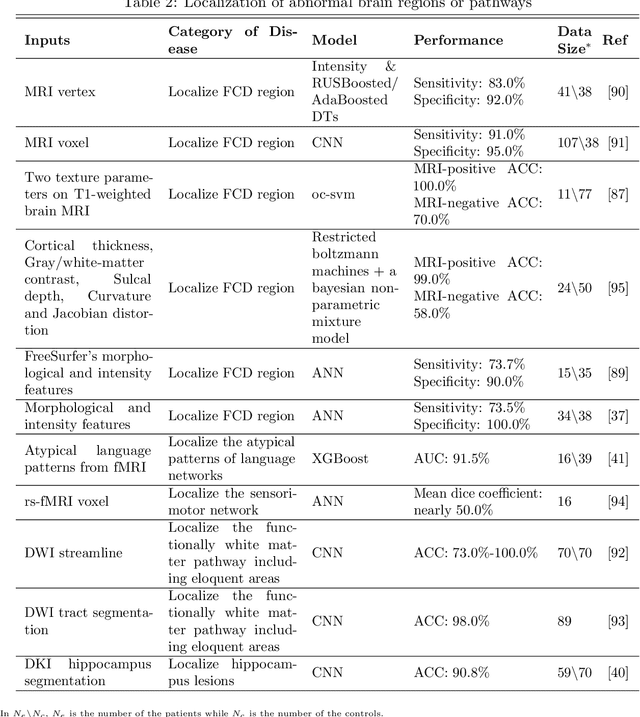

Abstract:Machine learning is playing an increasingly important role in medical image analysis, spawning new advances in neuroimaging clinical applications. However, previous work and reviews mainly focused on electrophysiological signals such as EEG or SEEG; the potential of neuroimaging in epilepsy research has been largely overlooked despite its wide use in clinical practice. In this review, we highlight the interactions between neuroimaging and machine learning in the context of epilepsy diagnosis and prognosis. We first outline typical neuroimaging modalities used in epilepsy clinics, e.g., MRI, DTI, fMRI and PET. We then introduce two approaches to applying machine learning methods to neuroimaging data: the two-step compositional approach, which combines feature engineering with a machine learning classifier, and the end-to-end approach, which usually relies on deep learning. We then present a detailed review of machine learning tasks on epileptic images, such as segmentation, localization and lateralization, as well as tasks directly related to diagnosis and prognosis. Finally, we discuss current achievements, challenges, and potential future directions in the field, with the hope of paving the way to computer-aided diagnosis and prognosis of epilepsy.

Bigeminal Priors Variational auto-encoder

Oct 05, 2020

Abstract:Variational auto-encoders (VAEs) are an influential and widely used class of likelihood-based generative models in unsupervised learning. Likelihood-based generative models have been reported to be highly robust to out-of-distribution (OOD) inputs and can serve as OOD detectors under the assumption that the model assigns higher likelihoods to samples from the in-distribution (ID) dataset than to samples from an OOD dataset. However, recent works reported that a VAE can recognize some OOD samples as ID by assigning a higher likelihood to the OOD inputs than to ID inputs. In this work, we introduce a new model, namely the Bigeminal Priors Variational auto-encoder (BPVAE), to address this phenomenon. The BPVAE aims to enhance the robustness of VAEs by combining the power of the VAE with two independent priors that belong, respectively, to the training dataset and to a simple dataset whose complexity is lower than that of the training dataset. BPVAE learns the features of both datasets, assigning a higher likelihood to the training dataset than to the simple dataset. In this way, we can use BPVAE's density estimate for detecting OOD samples. Quantitative experimental results suggest that our model has better generalization capability and stronger robustness than standard VAEs, supporting the effectiveness of the proposed approach of hybrid learning by collaborative priors. Overall, this work paves a new avenue to potentially overcome the OOD problem via multiple latent priors modeling.
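The likelihood-based detection rule described above can be sketched as a simple threshold test. This is a minimal illustration under stated assumptions: the log-likelihood scores below are made-up numbers standing in for a model's density estimate, and the quantile-based threshold and function names are hypothetical choices, not the procedure used for BPVAE.

```python
def fit_threshold(id_log_likelihoods, quantile=0.05):
    """Pick a threshold as a low quantile of in-distribution scores."""
    scores = sorted(id_log_likelihoods)
    index = int(quantile * (len(scores) - 1))
    return scores[index]


def is_ood(log_likelihood, threshold):
    """Flag a sample as OOD when its log-likelihood falls below the threshold."""
    return log_likelihood < threshold


# Illustrative (made-up) log-likelihoods of held-out in-distribution samples.
id_scores = [-102.0, -98.5, -101.2, -99.9, -100.4, -97.8, -103.1, -100.0]
threshold = fit_threshold(id_scores)

print(is_ood(-150.0, threshold))  # → True
print(is_ood(-99.0, threshold))   # → False
```

The failure mode the abstract describes corresponds to an OOD input receiving a score above the threshold, which no choice of threshold can fix if the model itself assigns that input a higher likelihood than ID data.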
