Xiaoming Tao

Efficient Equivariant High-Order Crystal Tensor Prediction via Cartesian Local-Environment Many-Body Coupling

Feb 04, 2026

Abstract:End-to-end prediction of high-order crystal tensor properties from atomic structures remains challenging: while spherical-harmonic equivariant models are expressive, their Clebsch-Gordan tensor products incur substantial compute and memory costs for higher-order targets. We propose the Cartesian Environment Interaction Tensor Network (CEITNet), an approach that constructs a multi-channel Cartesian local environment tensor for each atom and performs flexible many-body mixing via a learnable channel-space interaction. By performing learning in channel space and using Cartesian tensor bases to assemble equivariant outputs, CEITNet enables efficient construction of high-order tensors. Across benchmark datasets for order-2 dielectric, order-3 piezoelectric, and order-4 elastic tensor prediction, CEITNet surpasses prior high-order prediction methods on key accuracy criteria while offering high computational efficiency.

Assisted Refinement Network Based on Channel Information Interaction for Camouflaged and Salient Object Detection

Dec 12, 2025

Abstract:Camouflaged Object Detection (COD) stands as a significant challenge in computer vision, dedicated to identifying and segmenting objects visually highly integrated with their backgrounds. Current mainstream methods have made progress in cross-layer feature fusion, but two critical issues persist during the decoding stage. The first is insufficient cross-channel information interaction within the same-layer features, limiting feature expressiveness. The second is the inability to effectively co-model boundary and region information, making it difficult to accurately reconstruct complete regions and sharp boundaries of objects. To address the first issue, we propose the Channel Information Interaction Module (CIIM), which introduces a horizontal-vertical integration mechanism in the channel dimension. This module performs feature reorganization and interaction across channels to effectively capture complementary cross-channel information. To address the second issue, we construct a collaborative decoding architecture guided by prior knowledge. This architecture generates boundary priors and object localization maps through Boundary Extraction (BE) and Region Extraction (RE) modules, then employs hybrid attention to collaboratively calibrate decoded features, effectively overcoming semantic ambiguity and imprecise boundaries. Additionally, the Multi-scale Enhancement (MSE) module enriches contextual feature representations. Extensive experiments on four COD benchmark datasets validate the effectiveness and state-of-the-art performance of the proposed model. We further transferred our model to the Salient Object Detection (SOD) task and demonstrated its adaptability across downstream tasks, including polyp segmentation, transparent object detection, and industrial and road defect detection. Code and experimental results are publicly available at: https://github.com/akuan1234/ARNet-v2.

Joint Semantic-Channel Coding and Modulation for Token Communications

Nov 19, 2025

Abstract:In recent years, the Transformer architecture has achieved outstanding performance across a wide range of tasks and modalities. The token is the unified input and output representation in Transformer-based models and has become a fundamental information unit. In this work, we consider the problem of token communication, studying how to transmit tokens efficiently and reliably. The point cloud, a prevailing three-dimensional format that exhibits a more complex spatial structure than image or video, is chosen as the information source. We utilize the set abstraction method to obtain point tokens. Subsequently, to obtain a more informative and transmission-friendly representation based on tokens, we propose a joint semantic-channel coding and modulation (JSCCM) scheme for the token encoder, mapping point tokens to standard digital constellation points (modulated tokens). Specifically, the JSCCM consists of two parallel Point Transformer-based encoders and a differential modulator which combines the Gumbel-softmax and soft quantization methods. In addition, a rate allocator and a channel adapter are developed, facilitating adaptive generation of high-quality modulated tokens conditioned on both semantic information and channel conditions. Extensive simulations demonstrate that the proposed method outperforms both joint semantic-channel coding and traditional separate coding, achieving over 1 dB gain in reconstruction and more than 6x compression ratio in modulated symbols.

Magnitude-Modulated Equivariant Adapter for Parameter-Efficient Fine-Tuning of Equivariant Graph Neural Networks

Nov 10, 2025

Abstract:Pretrained equivariant graph neural networks based on spherical harmonics offer efficient and accurate alternatives to computationally expensive ab-initio methods, yet adapting them to new tasks and chemical environments still requires fine-tuning. Conventional parameter-efficient fine-tuning (PEFT) techniques, such as Adapters and LoRA, typically break symmetry, making them incompatible with those equivariant architectures. ELoRA, recently proposed, is the first equivariant PEFT method. It achieves improved parameter efficiency and performance on many benchmarks. However, the relatively high degrees of freedom it retains within each tensor order can still perturb pretrained feature distributions and ultimately degrade performance. To address this, we present Magnitude-Modulated Equivariant Adapter (MMEA), a novel equivariant fine-tuning method which employs lightweight scalar gating to modulate feature magnitudes on a per-order and per-multiplicity basis. We demonstrate that MMEA preserves strict equivariance and, across multiple benchmarks, consistently improves energy and force predictions to state-of-the-art levels while training fewer parameters than competing approaches. These results suggest that, in many practical scenarios, modulating channel magnitudes is sufficient to adapt equivariant models to new chemical environments without breaking symmetry, pointing toward a new paradigm for equivariant PEFT design.

Few-shot Semantic Encoding and Decoding for Video Surveillance

May 12, 2025

Abstract:With the continuous increase in the number and resolution of video surveillance cameras, the burden of transmitting and storing surveillance video is growing. Traditional communication methods based on Shannon's theory are facing optimization bottlenecks. Semantic communication, as an emerging communication method, is expected to break through this bottleneck and reduce the storage and transmission consumption of video. Existing semantic decoding methods often require many samples to train the neural network for each scene, which is time-consuming and labor-intensive. In this study, a semantic encoding and decoding method for surveillance video is proposed. First, the sketch was extracted as semantic information, and a sketch compression method was proposed to reduce the bit rate of semantic information. Then, an image translation network was proposed to translate the sketch into a video frame with a reference frame. Finally, a few-shot sketch decoding network was proposed to reconstruct video from sketch. Experimental results showed that the proposed method achieved significantly better video reconstruction performance than baseline methods. The sketch compression method could effectively reduce the storage and transmission consumption of semantic information with little compromise on video quality. The proposed method provides a novel semantic encoding and decoding method that only needs a few training samples for each surveillance scene, thus improving the practicality of the semantic communication system.

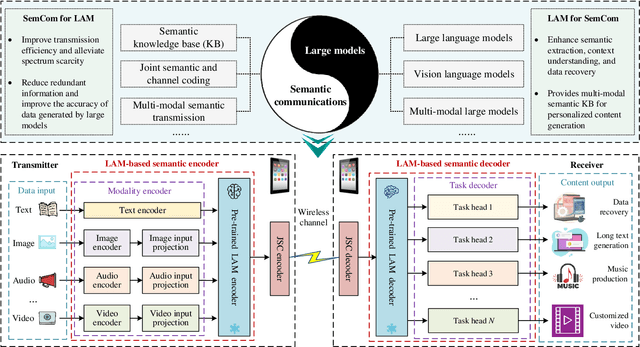

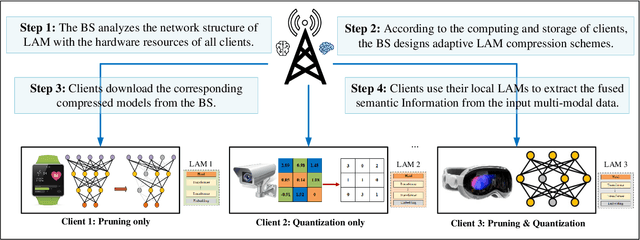

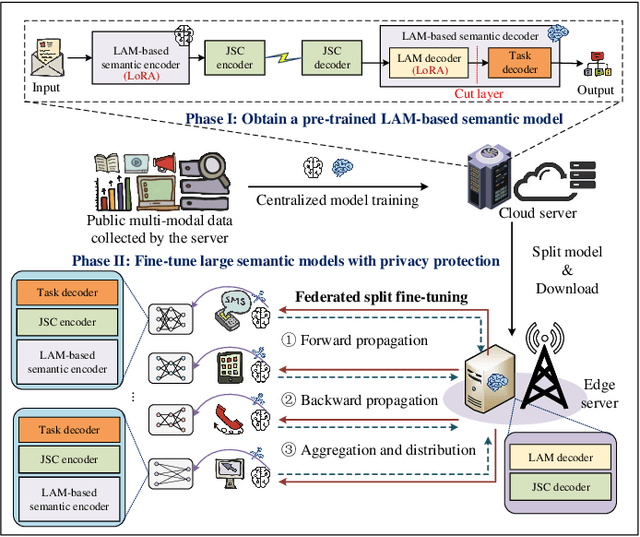

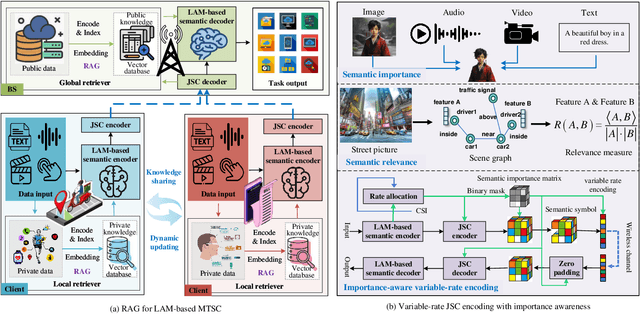

Multi-Task Semantic Communications via Large Models

Mar 28, 2025

Abstract:Artificial intelligence (AI) promises to revolutionize the design, optimization and management of next-generation communication systems. In this article, we explore the integration of large AI models (LAMs) into semantic communications (SemCom) by leveraging their multi-modal data processing and generation capabilities. Although LAMs bring unprecedented abilities to extract semantics from raw data, this integration entails multifaceted challenges including high resource demands, model complexity, and the need for adaptability across diverse modalities and tasks. To overcome these challenges, we propose a LAM-based multi-task SemCom (MTSC) architecture, which includes an adaptive model compression strategy and a federated split fine-tuning approach to facilitate the efficient deployment of LAM-based semantic models in resource-limited networks. Furthermore, a retrieval-augmented generation scheme is implemented to synthesize the most recent local and global knowledge bases to enhance the accuracy of semantic extraction and content generation, thereby improving the inference performance. Finally, simulation results demonstrate the efficacy of the proposed LAM-based MTSC architecture, highlighting the performance enhancements across various downstream tasks under varying channel conditions.

Corrections to "Computer Vision Aided mmWave Beam Alignment in V2X Communications"

Oct 08, 2024

Abstract:In this document, we revise the results of [1] based on more reasonable assumptions regarding data shuffling and parameter setup of deep neural networks (DNNs). Thus, the simulation results can now more reasonably demonstrate the performance of both the proposed and compared beam alignment methods. We revise the simulation steps and make moderate modifications to the design of the vehicle distribution feature (VDF) for the proposed vision based beam alignment when the MS location is available (VBALA). Specifically, we replace the 2D grids of the VDF with 3D grids and utilize the vehicle locations to expand the dimensions of the VDF. Then, we revise the simulation results of Fig. 11, Fig. 12, Fig. 13, Fig. 14, and Fig. 15 in [1] to reaffirm the validity of the conclusions.

Synchronous Multi-modal Semantic Communication System with Packet-level Coding

Aug 08, 2024

Abstract:Although semantic communication with joint semantic-channel coding design has shown promising performance in transmitting data of different modalities over physical layer channels, the synchronization and packet-level forward error correction of multimodal semantics have not been well studied. Due to the independent design of semantic encoders, synchronizing multimodal features in both the semantic and time domains is a challenging problem. In this paper, we take facial video and speech transmission as an example and propose a Synchronous Multimodal Semantic Communication System (SyncSC) with Packet-Level Coding. To achieve semantic and time synchronization, 3D Morphable Model (3DMM) coefficients and text are transmitted as semantics, and we propose a semantic codec that achieves similar quality of reconstruction and synchronization with lower bandwidth, compared to traditional methods. To protect semantic packets under the erasure channel, we propose a packet-level Forward Error Correction (FEC) method, called PacSC, that maintains a certain visual quality performance even at high packet loss rates. Particularly, for text packets, a text packet loss concealment module, called TextPC, based on Bidirectional Encoder Representations from Transformers (BERT) is proposed, which significantly improves the performance of traditional FEC methods. The simulation results show that our proposed SyncSC reduces transmission overhead and achieves high-quality synchronous transmission of video and speech over the packet loss network.

Object-Attribute-Relation Representation based Video Semantic Communication

Jun 15, 2024

Abstract:With the rapid growth of multimedia data volume, there is an increasing need for efficient video transmission in applications such as virtual reality and future video streaming services. Semantic communication is emerging as a vital technique for ensuring efficient and reliable transmission in low-bandwidth, high-noise settings. However, most current approaches focus on joint source-channel coding (JSCC) that depends on end-to-end training. These methods often lack an interpretable semantic representation and struggle with adaptability to various downstream tasks. In this paper, we introduce the use of object-attribute-relation (OAR) as a semantic framework for videos to facilitate low bit-rate coding and enhance the JSCC process for more effective video transmission. We utilize OAR sequences for both low bit-rate representation and generative video reconstruction. Additionally, we incorporate OAR into the image JSCC model to prioritize communication resources for areas more critical to downstream tasks. Our experiments on traffic surveillance video datasets assess the effectiveness of our approach in terms of video transmission performance. The empirical findings demonstrate that our OAR-based video coding method not only outperforms H.265 coding at lower bit-rates but also synergizes with JSCC to deliver robust and efficient video transmission.

Semantic MIMO Systems for Speech-to-Text Transmission

May 13, 2024

Abstract:Semantic communications have been utilized to execute numerous intelligent tasks by transmitting task-related semantic information instead of bits. In this article, we propose a semantic-aware speech-to-text transmission system for the single-user multiple-input multiple-output (MIMO) and multi-user MIMO communication scenarios, named SAC-ST. Particularly, a semantic communication system to serve the speech-to-text task at the receiver is first designed, which compresses the semantic information and generates the low-dimensional semantic features by leveraging the transformer module. In addition, a novel semantic-aware network is proposed to facilitate the transmission with high semantic fidelity to identify the critical semantic information and guarantee it is recovered accurately. Furthermore, we extend the SAC-ST with a neural network-enabled channel estimation network to mitigate the dependence on accurate channel state information and validate the feasibility of SAC-ST in practical communication environments. Simulation results show that the proposed SAC-ST outperforms the communication framework without the semantic-aware network for speech-to-text transmission over the MIMO channels in terms of the speech-to-text metrics, especially in the low signal-to-noise regime. Moreover, the SAC-ST with the developed channel estimation network is comparable to the SAC-ST with perfect channel state information.