Xiaolin Huang

Random Features for Kernel Approximation: A Survey in Algorithms, Theory, and Beyond

Apr 24, 2020

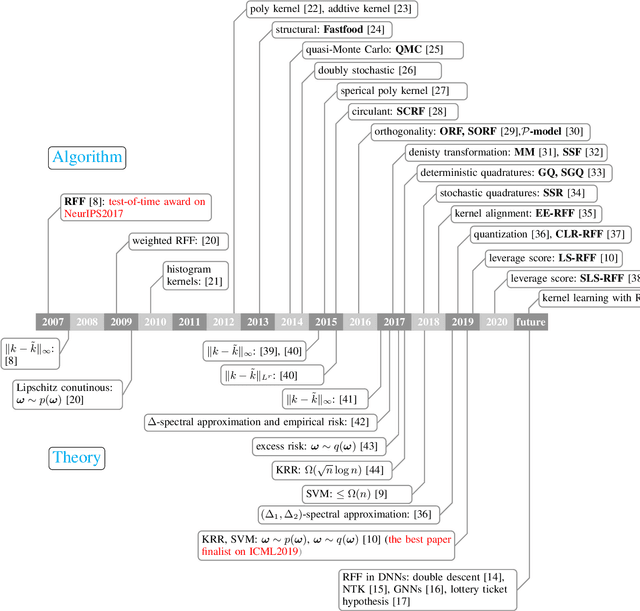

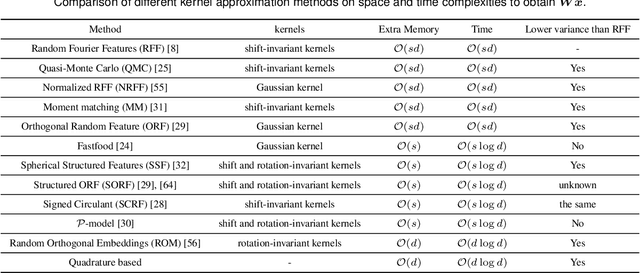

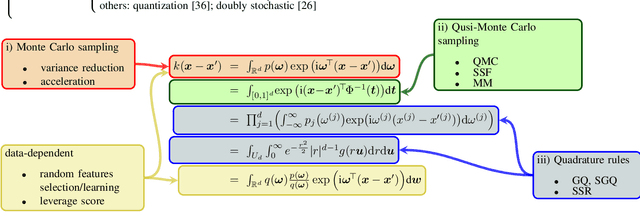

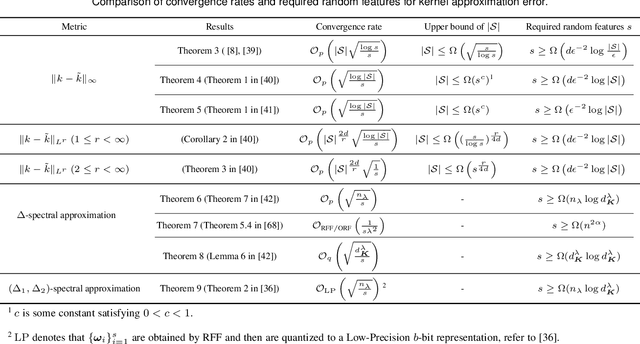

Abstract: Random features is one of the most sought-after research topics in statistical machine learning for speeding up kernel methods in large-scale settings. Related works won the NeurIPS test-of-time award in 2017 and were an ICML best paper finalist in 2019. However, comprehensive studies on this topic seem to be missing, which results in different, sometimes conflicting, statements. In this survey, we attempt to thoroughly and systematically review the past ten years of work on random features with regard to algorithmic and theoretical aspects. First, the fundamental characteristics, primary motivations, and contributions of representative random features based algorithms are summarized according to their sampling scheme, learning procedure, variance reduction, and exploitation of training data. Second, we review theoretical results of random features to answer the key question: how many random features are needed to ensure a high approximation quality or no loss of empirical risk and expected risk in a learning estimator. Third, popular random features based algorithms are comprehensively evaluated on several large-scale benchmark datasets in terms of approximation quality and prediction performance for classification and regression. Last, we link random features to current over-parameterized deep neural networks (DNNs) by investigating their relationships, the usage of random features to analyze over-parameterized networks, and the gap in the current theoretical results. As a result, this survey could be a gentle user guide for practitioners to follow this topic, apply representative algorithms, and grasp theoretical results under various technical assumptions. We think that this survey helps to facilitate a discussion on ongoing issues for this topic, and specifically, it sheds light on promising research directions.
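As a rough illustration of the kind of method the survey covers, the sketch below approximates a Gaussian kernel with random Fourier features using only the Python standard library. The function names, the bandwidth `sigma`, and the feature count are assumptions made for this example and are not taken from the survey.

```python
import math
import random


def rbf_kernel(x, y, sigma=1.0):
    """Exact Gaussian kernel k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def sample_rff_params(dim, num_features, sigma=1.0, seed=0):
    """Draw frequencies w ~ N(0, I / sigma^2) and phases b ~ Uniform[0, 2 pi]."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)]
               for _ in range(num_features)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    return weights, phases


def rff_map(x, weights, phases):
    """Map x to z(x) = sqrt(2 / D) * cos(W x + b), with D random features."""
    scale = math.sqrt(2.0 / len(weights))
    return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)
            for w, b in zip(weights, phases)]


def rff_kernel(x, y, weights, phases):
    """Approximate k(x, y) by the inner product z(x) . z(y)."""
    zx = rff_map(x, weights, phases)
    zy = rff_map(y, weights, phases)
    return sum(a * b for a, b in zip(zx, zy))


if __name__ == "__main__":
    x, y = [0.2, -0.5, 1.0], [0.1, 0.3, 0.7]
    weights, phases = sample_rff_params(dim=3, num_features=4000)
    print("exact :", rbf_kernel(x, y))
    print("approx:", rff_kernel(x, y, weights, phases))
```

The approximation error shrinks roughly like the inverse square root of the number of features, which is why the question of how many features are needed is central to the theory.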

Sparse Generalized Canonical Correlation Analysis: Distributed Alternating Iteration based Approach

Apr 23, 2020

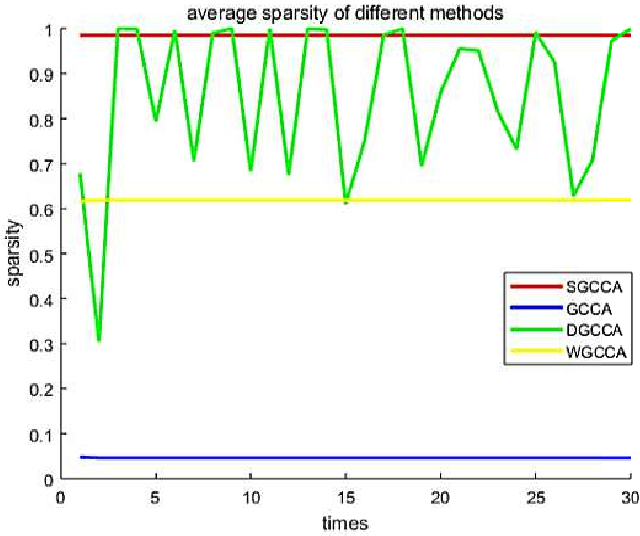

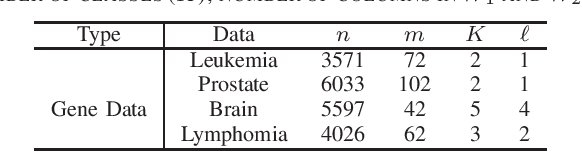

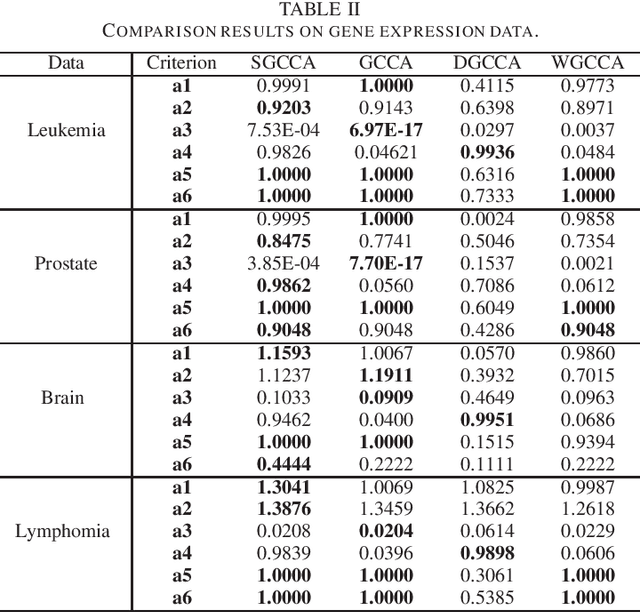

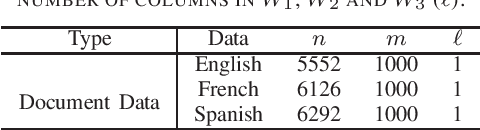

Abstract: Sparse canonical correlation analysis (CCA) is a useful statistical tool to detect latent information with sparse structures. However, sparse CCA works only for two datasets, i.e., there are only two views or two distinct objects. To overcome this limitation, in this paper, we propose a sparse generalized canonical correlation analysis (GCCA), which could detect the latent relations of multiview data with sparse structures. Moreover, the introduced sparsity could be considered as a Laplace prior on the canonical variates. Specifically, we convert the GCCA into a linear system of equations and impose an $\ell_1$ minimization penalty for sparsity pursuit. This results in a nonconvex problem on the Stiefel manifold, which is difficult to solve. Motivated by Boyd's consensus problem, an algorithm based on a distributed alternating iteration approach is developed, and its theoretical consistency is analyzed under mild conditions. Experiments on several synthetic and real-world datasets demonstrate the effectiveness of the proposed algorithm.

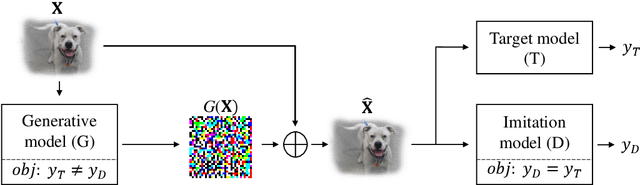

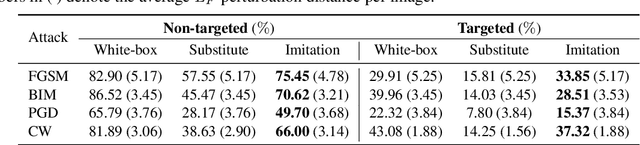

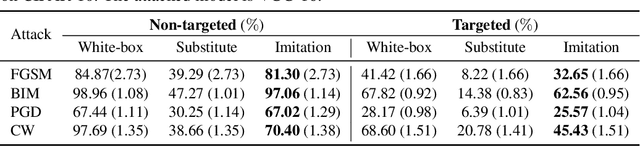

Adversarial Imitation Attack

Mar 31, 2020

Abstract: Deep learning models are known to be vulnerable to adversarial examples. A practical adversarial attack should require as little knowledge of the attacked models as possible. Current substitute attacks need pre-trained models to generate adversarial examples, and their attack success rates heavily rely on the transferability of adversarial examples. Current score-based and decision-based attacks require many queries to the attacked models. In this study, we propose a novel adversarial imitation attack. First, it produces a replica of the attacked model by a two-player game like that of generative adversarial networks (GANs). The objective of the generative model is to generate examples that lead the imitation model to return outputs different from those of the attacked model. The objective of the imitation model is to output the same labels as the attacked model under the same inputs. Then, the adversarial examples generated by the imitation model are utilized to fool the attacked model. Compared with the current substitute attacks, imitation attacks can use less training data to produce a replica of the attacked model and improve the transferability of adversarial examples. Experiments demonstrate that our imitation attack requires less training data than the black-box substitute attacks, but achieves an attack success rate close to the white-box attack on unseen data with no query.

Stereo Endoscopic Image Super-Resolution Using Disparity-Constrained Parallel Attention

Mar 19, 2020

Abstract: With the popularity of stereo cameras in computer-assisted surgery techniques, a second viewpoint would provide additional information in surgery. However, how to effectively access and use stereo information for the super-resolution (SR) purpose is often a challenge. In this paper, we propose a disparity-constrained stereo super-resolution network (DCSSRnet) to simultaneously compute a super-resolved image in a stereo image pair. In particular, we incorporate a disparity-based constraint mechanism into the generation of SR images in a deep neural network framework with additional atrous parallax-attention modules. Experimental results on laparoscopic images demonstrate that the proposed framework outperforms current SR methods on both quantitative and qualitative evaluations. Our DCSSRnet provides a promising solution for enhancing the spatial resolution of stereo image pairs, which will be extremely beneficial for endoscopic surgery.

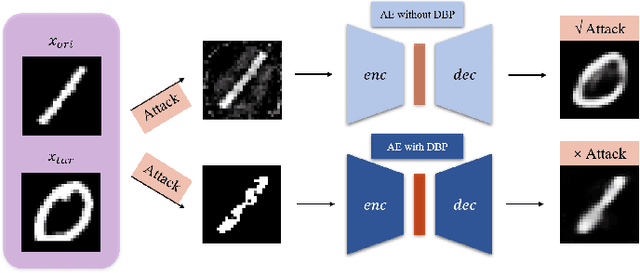

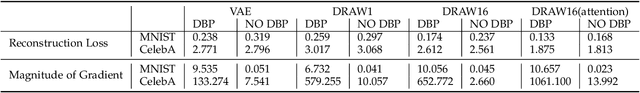

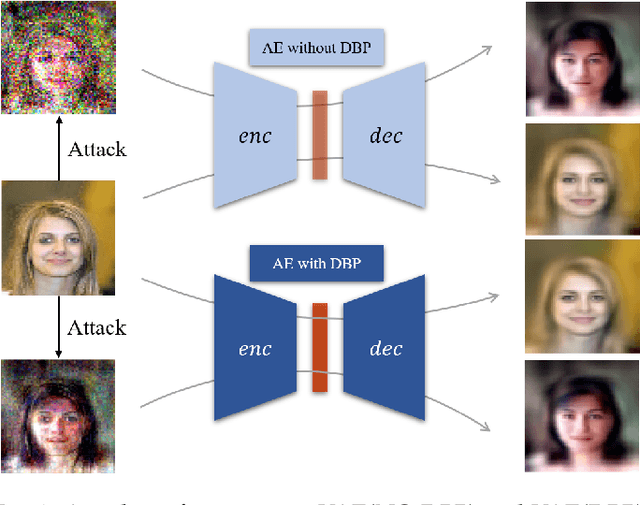

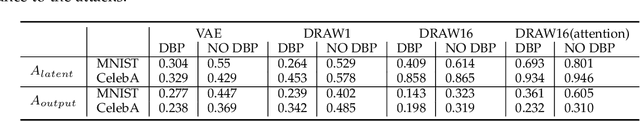

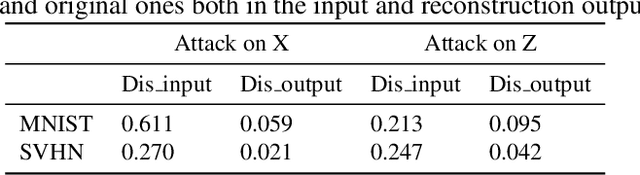

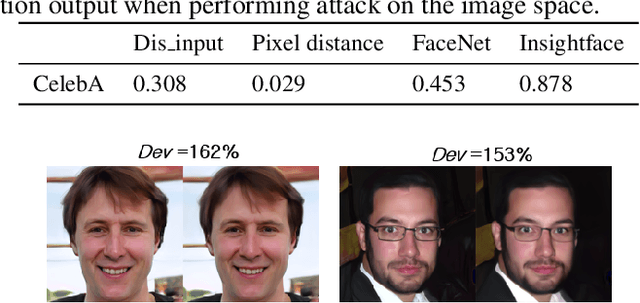

Double Backpropagation for Training Autoencoders against Adversarial Attack

Mar 04, 2020

Abstract: Deep learning, as is widely known, is vulnerable to adversarial samples. This paper focuses on the adversarial attack on autoencoders. Safety of autoencoders (AEs) is important because they are widely used as a compression scheme for data storage and transmission. However, current autoencoders are easily attacked, i.e., a slight modification of an input can produce totally different codes. The vulnerability is rooted in the sensitivity of the autoencoders, and to enhance the robustness, we propose to adopt double backpropagation (DBP) to secure autoencoders such as VAE and DRAW. We restrict the gradient from the reconstruction image to the original one so that the autoencoder is not sensitive to trivial perturbation produced by the adversarial attack. After smoothing the gradient by DBP, we further smooth the label by a Gaussian Mixture Model (GMM), aiming for accurate and robust classification. We demonstrate on MNIST, CelebA, and SVHN that our method leads to a robust autoencoder resistant to attack and, if combined with GMM, a robust classifier capable of image transition and immune to adversarial attack.

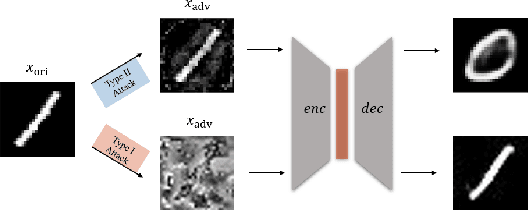

Type I Attack for Generative Models

Mar 04, 2020

Abstract: Generative models are popular tools with a wide range of applications. Nevertheless, they are as vulnerable to adversarial samples as classifiers. The existing attack methods mainly focus on generating adversarial examples by adding imperceptible perturbations to the input, which lead to wrong results. In contrast, we focus on another aspect of attack, i.e., cheating models by significant changes. The former induces Type II error and the latter causes Type I error. In this paper, we propose a Type I attack on generative models such as VAE and GAN. One example given in VAE is that we can change an original image significantly to a meaningless one while their reconstruction results remain similar. To implement the Type I attack, we destroy the original image by increasing the distance in input space while keeping the output similar, because different inputs may correspond to similar features owing to the properties of deep neural networks. Experimental results show that our attack method is effective in generating Type I adversarial examples for generative models on large-scale image datasets.

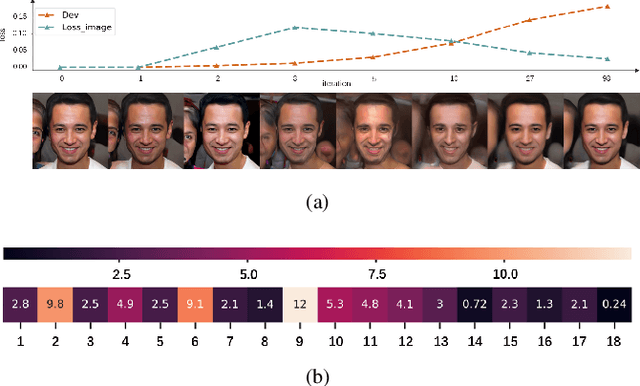

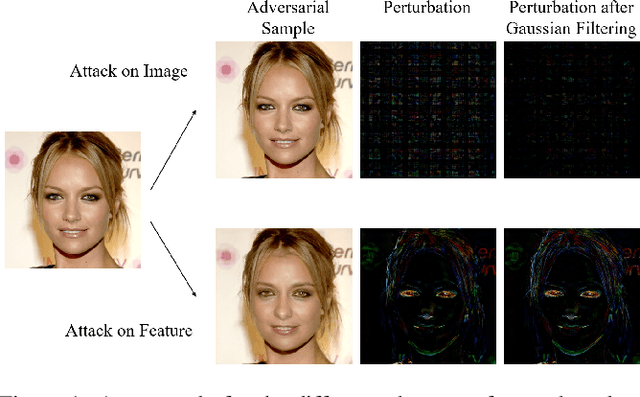

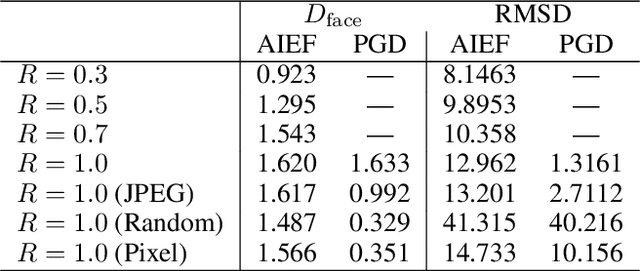

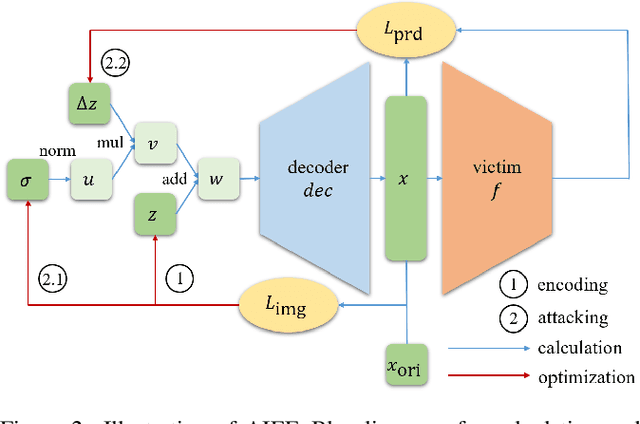

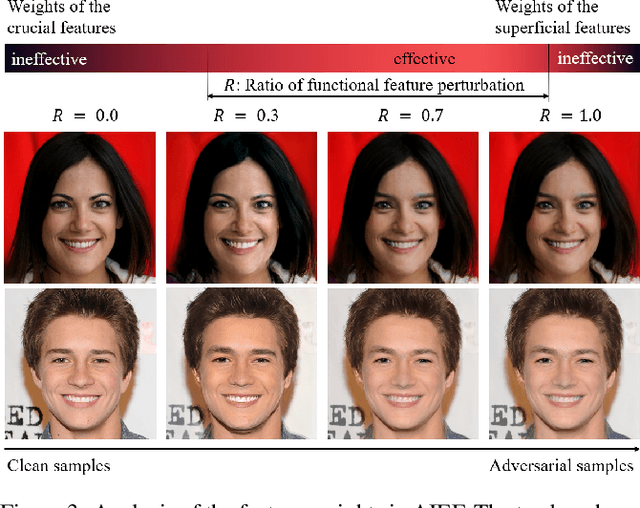

Generate High-Resolution Adversarial Samples by Identifying Effective Features

Jan 21, 2020

Abstract: With the prevalence of deep learning in computer vision, adversarial samples that weaken neural networks emerge in large numbers, revealing their deep-rooted defects. Most adversarial attacks calculate an imperceptible perturbation in image space to fool the DNNs. In this strategy, the perturbation looks like noise and thus could be mitigated. Attacks in feature space produce semantic perturbation, but they could only deal with low-resolution samples. The reason lies in the great number of coupled features needed to express a high-resolution image. In this paper, we propose Attack by Identifying Effective Features (AIEF), which learns different weights for features to attack. Effective features, those with great weights, influence the victim model much but distort the image little, and thus are more effective for attack. By attacking mostly on them, AIEF produces high-resolution adversarial samples with acceptable distortions. We demonstrate the effectiveness of AIEF by attacking different tasks with different generative models.

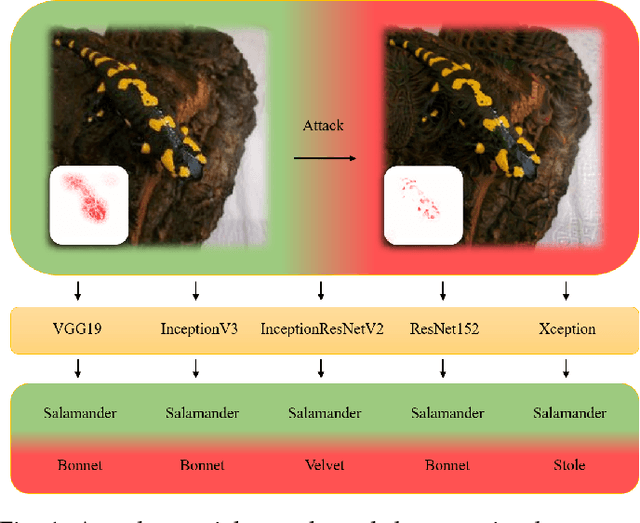

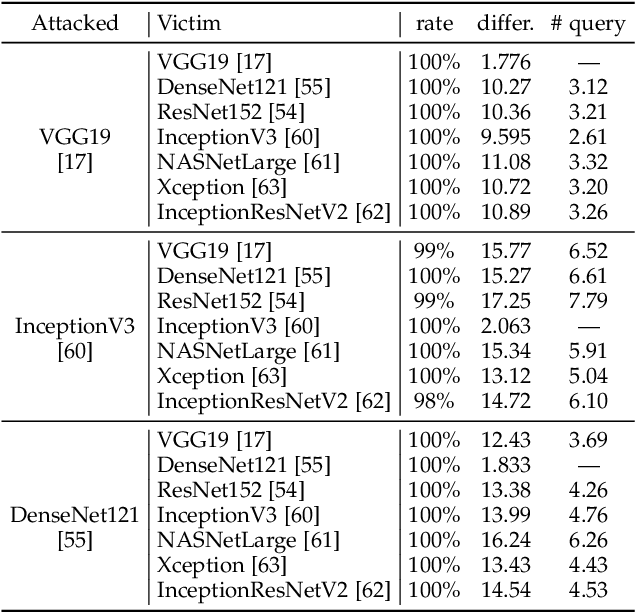

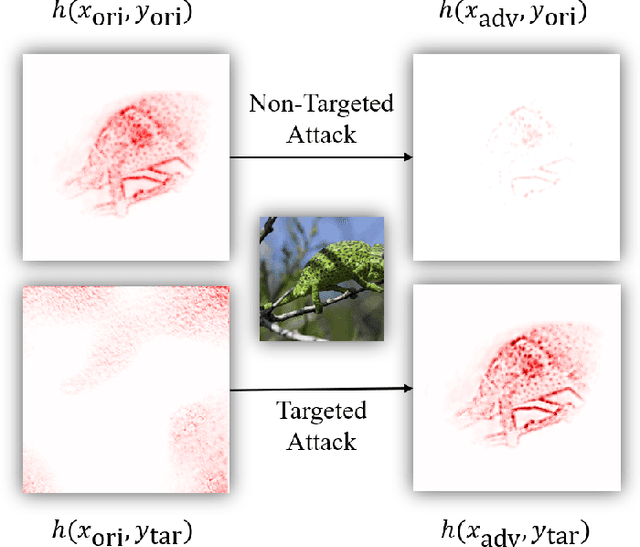

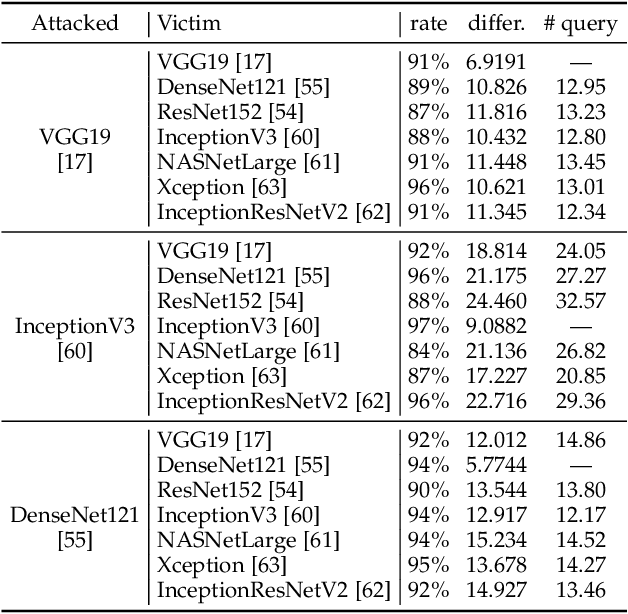

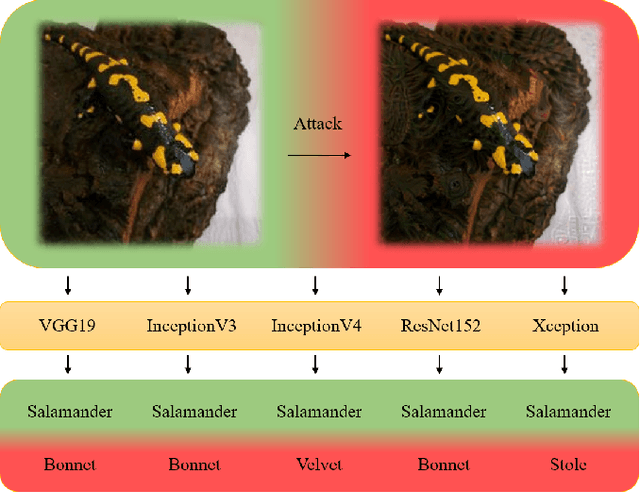

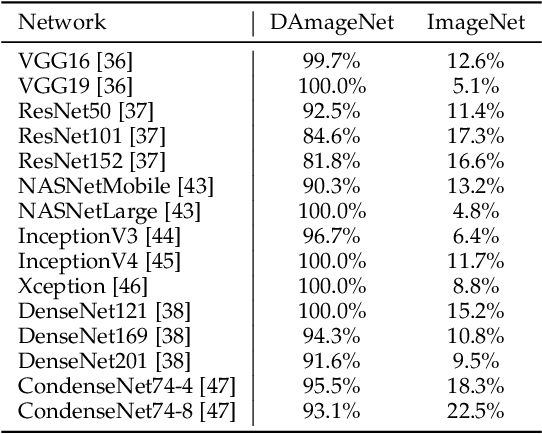

Universal Adversarial Attack on Attention and the Resulting Dataset DAmageNet

Jan 16, 2020

Abstract: Adversarial attacks on deep neural networks (DNNs) have been known for several years. However, the existing adversarial attacks have high success rates only when the information of the attacked DNN is well-known or could be estimated by structure similarity or massive queries. In this paper, we propose an *Attack on Attention* (AoA), which targets attention, a semantic feature commonly shared by DNNs. The transferability of AoA is quite high. With no more than 10 queries of the decision only, AoA can achieve an almost 100% success rate when attacking many popular DNNs. Even without query, AoA could keep a surprisingly high attack performance. We apply AoA to generate 96020 adversarial samples from ImageNet to defeat many neural networks, and thus name the dataset *DAmageNet*. 20 well-trained DNNs are tested on DAmageNet. Without adversarial training, most of the tested DNNs have an error rate over 90%. DAmageNet is the first universal adversarial dataset and it could serve as a benchmark for robustness testing and adversarial training.

Mixed-Precision Quantized Neural Network with Progressively Decreasing Bitwidth For Image Classification and Object Detection

Dec 29, 2019

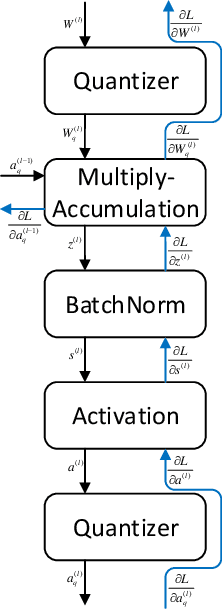

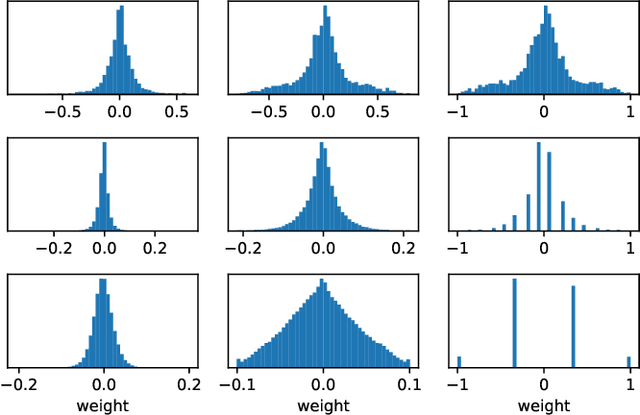

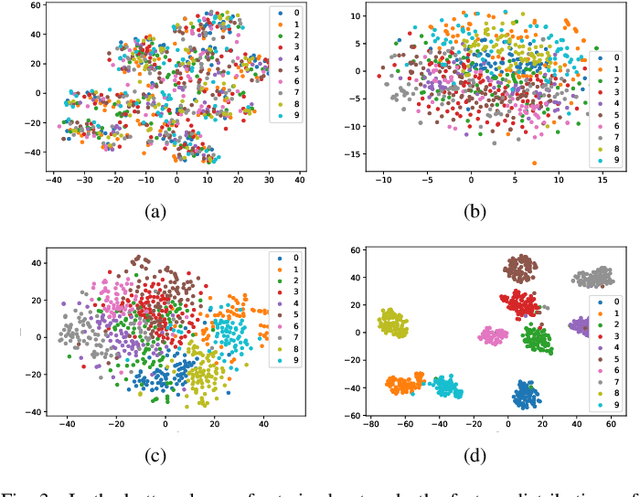

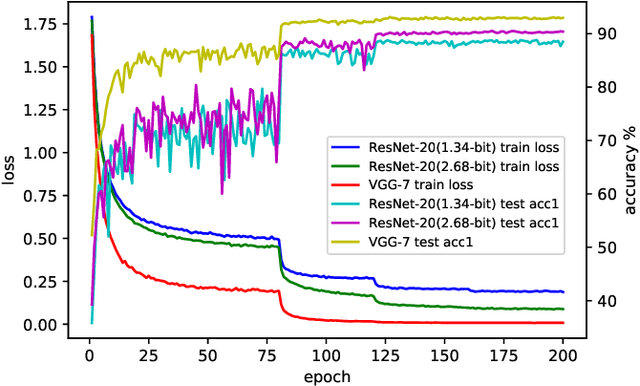

Abstract: Efficient model inference is an important and practical issue in the deployment of deep neural networks on resource-constrained platforms. Network quantization addresses this problem effectively by leveraging low-bit representation and arithmetic that could be conducted on dedicated embedded systems. In previous works, the parameter bitwidth is set homogeneously and there is a trade-off between superior performance and aggressive compression. In fact, the stacked network layers, which are generally regarded as hierarchical feature extractors, contribute diversely to the overall performance. For a well-trained neural network, the feature distributions of different categories differentiate gradually as the network propagates forward. Hence the capability requirement on the subsequent feature extractors is reduced. This indicates that the neurons in posterior layers could be assigned a lower bitwidth in quantized neural networks. Based on this observation, a simple but effective mixed-precision quantized neural network with progressively decreasing bitwidth is proposed to improve the trade-off between accuracy and compression. Extensive experiments on typical network architectures and benchmark datasets demonstrate that the proposed method could achieve better or comparable results while reducing the memory space for quantized parameters by more than 30% in comparison with the homogeneous counterparts. In addition, the results also demonstrate that the higher-precision bottom layers could boost the 1-bit network performance appreciably due to a better preservation of the original image information, while the lower-precision posterior layers contribute to the regularization of $k$-bit networks.
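The idea of assigning lower bitwidths to later layers can be sketched as follows. This is only an illustration under stated assumptions: the linear bitwidth schedule, the min-max uniform quantizer, and all function names are hypothetical choices for this example, not the scheme used in the paper.

```python
def decreasing_bitwidths(num_layers, first_bits, last_bits):
    """Hypothetical schedule: interpolate linearly from first_bits to last_bits."""
    if num_layers == 1:
        return [first_bits]
    span = last_bits - first_bits
    return [round(first_bits + span * i / (num_layers - 1))
            for i in range(num_layers)]


def quantize_uniform(values, bits):
    """Snap floats to 2**bits evenly spaced levels spanning [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return list(values)
    step = (hi - lo) / (2 ** bits - 1)
    return [lo + round((v - lo) / step) * step for v in values]


def parameter_bits(layer_sizes, bitwidths):
    """Total storage, in bits, for the quantized parameters of all layers."""
    return sum(n * b for n, b in zip(layer_sizes, bitwidths))


if __name__ == "__main__":
    layer_sizes = [100, 100, 100, 100]  # made-up parameter counts per layer
    mixed = decreasing_bitwidths(len(layer_sizes), first_bits=8, last_bits=2)
    uniform = [8] * len(layer_sizes)
    print("bitwidths per layer:", mixed)
    print("mixed precision bits:", parameter_bits(layer_sizes, mixed))
    print("homogeneous bits    :", parameter_bits(layer_sizes, uniform))
```

The layer sizes and the 8-to-2-bit range are made up for the demonstration; the saving reported in the abstract comes from the paper's own experiments, not from this toy calculation.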

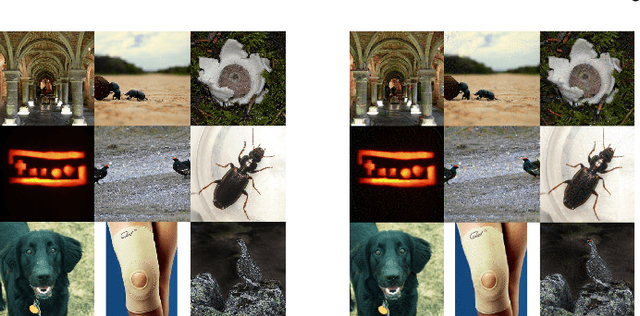

DAmageNet: A Universal Adversarial Dataset

Dec 16, 2019

Abstract: It is now well known that deep neural networks (DNNs) are vulnerable to adversarial attack. Adversarial samples are similar to the clean ones, but are able to cheat the attacked DNN into producing incorrect predictions with high confidence. However, most of the existing adversarial attacks have a high success rate only when the information of the attacked DNN is well-known or could be estimated by massive queries. A promising way is to generate adversarial samples with high transferability. In this way, we generate 96020 transferable adversarial samples from original ones in ImageNet. The average difference, measured by root mean squared deviation, is only around 3.8. However, the adversarial samples are misclassified by various models with an error rate up to 90%. Since the images are generated independently of the attacked DNNs, this is essentially a zero-query adversarial attack. We call the dataset *DAmageNet*, which is the first universal adversarial dataset that beats many models trained on ImageNet. By exposing these drawbacks, DAmageNet could serve as a benchmark to study and improve the robustness of DNNs. DAmageNet can be downloaded from http://www.pami.sjtu.edu.cn/Show/56/122.
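For readers unfamiliar with the distance measure mentioned above, a minimal sketch of root mean squared deviation between a clean image and its adversarial counterpart is given here. It assumes both images are supplied as flat sequences of pixel intensities of equal length; the function name and the sample values are illustrative only.

```python
import math


def rmsd(clean_pixels, adversarial_pixels):
    """Root mean squared deviation between two equally sized pixel sequences."""
    if len(clean_pixels) != len(adversarial_pixels):
        raise ValueError("images must contain the same number of pixels")
    squared = [(a - b) ** 2 for a, b in zip(clean_pixels, adversarial_pixels)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    clean = [120, 64, 200, 33]        # made-up pixel intensities
    adversarial = [122, 62, 202, 31]  # each pixel shifted by 2
    print(rmsd(clean, adversarial))
```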
