Xiaoan Zhang

OmniIndoor3D: Comprehensive Indoor 3D Reconstruction

May 27, 2025

Abstract: We propose a novel framework for comprehensive indoor 3D reconstruction using Gaussian representations, called OmniIndoor3D. This framework enables accurate appearance, geometry, and panoptic reconstruction of diverse indoor scenes captured by a consumer-level RGB-D camera. Since 3D Gaussian Splatting (3DGS) is primarily optimized for photorealistic rendering, it lacks the precise geometry critical for high-quality panoptic reconstruction. Therefore, OmniIndoor3D first combines multiple RGB-D images to create a coarse 3D reconstruction, which is then used to initialize the 3D Gaussians and guide the 3DGS training. To resolve the optimization conflict between appearance and geometry, we introduce a lightweight MLP that adjusts the geometric properties of 3D Gaussians. This MLP serves as a low-pass filter for geometry reconstruction and significantly reduces noise in indoor scenes. To improve the distribution of Gaussian primitives, we propose a densification strategy guided by panoptic priors to encourage smoothness on planar surfaces. Through the joint optimization of appearance, geometry, and panoptic reconstruction, OmniIndoor3D provides comprehensive 3D indoor scene understanding, which facilitates accurate and robust robotic navigation. We perform thorough evaluations across multiple datasets, and OmniIndoor3D achieves state-of-the-art results in appearance, geometry, and panoptic reconstruction. We believe our work bridges a critical gap in indoor 3D reconstruction. The code will be released at: https://ucwxb.github.io/OmniIndoor3D/

EmbodiedOcc++: Boosting Embodied 3D Occupancy Prediction with Plane Regularization and Uncertainty Sampler

Apr 13, 2025

Abstract: Online 3D occupancy prediction provides a comprehensive spatial understanding of embodied environments. While the innovative EmbodiedOcc framework utilizes 3D semantic Gaussians for progressive indoor occupancy prediction, it overlooks the geometric characteristics of indoor environments, which are primarily characterized by planar structures. This paper introduces EmbodiedOcc++, enhancing the original framework with two key innovations: a Geometry-guided Refinement Module (GRM) that constrains Gaussian updates through plane regularization, along with a Semantic-aware Uncertainty Sampler (SUS) that enables more effective updates in overlapping regions between consecutive frames. GRM regularizes the position update to align with surface normals. It determines the adaptive regularization weight using curvature-based and depth-based constraints, allowing semantic Gaussians to align accurately with planar surfaces while adapting in complex regions. To effectively improve geometric consistency from different views, SUS adaptively selects proper Gaussians to update. Comprehensive experiments on the EmbodiedOcc-ScanNet benchmark demonstrate that EmbodiedOcc++ achieves state-of-the-art performance across different settings. Our method demonstrates improved edge accuracy and retains more geometric details while ensuring computational efficiency, which is essential for online embodied perception. The code will be released at: https://github.com/PKUHaoWang/EmbodiedOcc2.
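To make the plane-regularization idea in this abstract concrete, here is a minimal sketch of one possible reading: a raw position update is blended with its projection onto the local surface normal, and the blend weight shrinks where curvature or depth error is high. The function names, the exponential weight, and the constants `k_curv` and `k_depth` are all illustrative assumptions; the abstract does not specify the actual formulation used by GRM.

```python
import math


def regularize_update(delta, normal, weight):
    """Blend a raw 3D position update with its projection onto a surface normal.

    weight = 0 returns the raw update unchanged; weight = 1 keeps only the
    component along the normal. This blending rule is an assumption, not the
    paper's stated method.
    """
    norm = math.sqrt(sum(n * n for n in normal))
    if norm == 0.0:
        # No usable normal: fall back to the unregularized update.
        return list(delta)
    unit = [n / norm for n in normal]
    along = sum(d * u for d, u in zip(delta, unit))
    projected = [along * u for u in unit]
    return [(1.0 - weight) * d + weight * p for d, p in zip(delta, projected)]


def adaptive_weight(curvature, depth_error, k_curv=10.0, k_depth=5.0):
    """Hypothetical weight in (0, 1]: close to 1 on flat, depth-consistent
    regions and decaying as curvature or depth error grows."""
    return math.exp(-k_curv * curvature) * math.exp(-k_depth * depth_error)


if __name__ == "__main__":
    w = adaptive_weight(curvature=0.01, depth_error=0.02)
    print(regularize_update([0.1, 0.2, 0.3], [0.0, 0.0, 1.0], w))
```

With a weight near 1 the update is confined to the normal direction, which matches the abstract's description of aligning Gaussians with planar surfaces; a lower weight in high-curvature regions leaves the update freer to adapt.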

Computer-Aided Diagnosis of Label-Free 3-D Optical Coherence Microscopy Images of Human Cervical Tissue

Sep 17, 2018

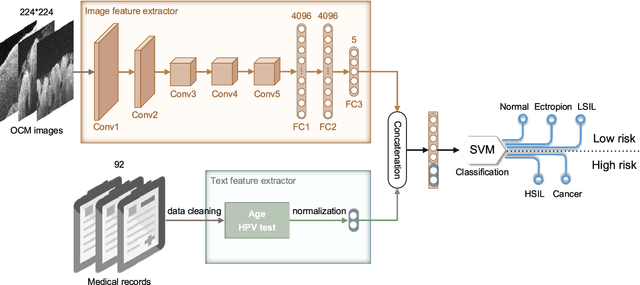

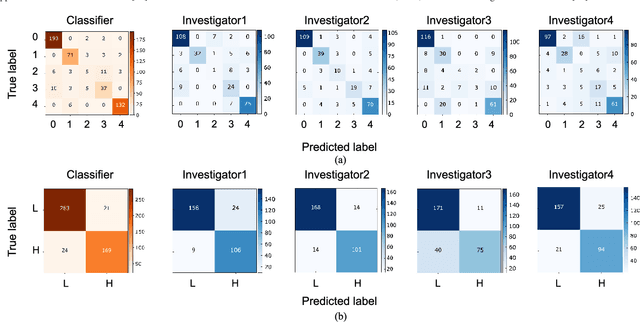

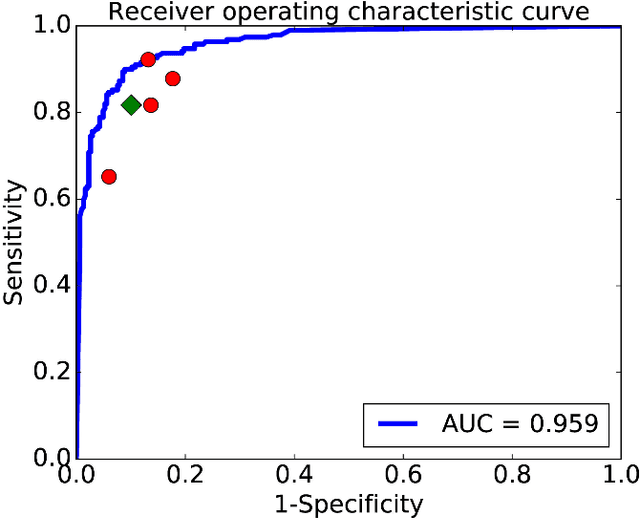

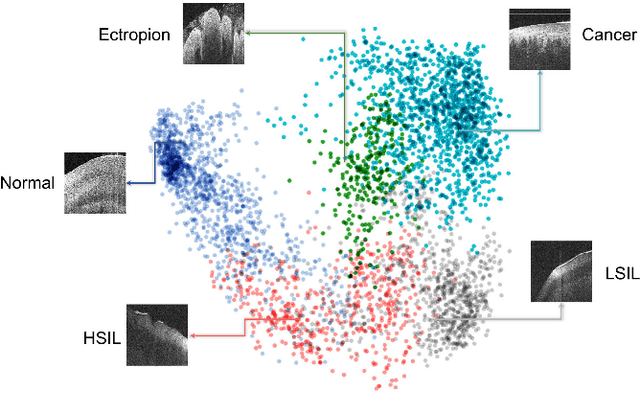

Abstract: Objective: Ultrahigh-resolution optical coherence microscopy (OCM) has recently demonstrated its potential for accurate diagnosis of human cervical diseases. One major challenge for clinical adoption, however, is the steep learning curve clinicians need to overcome to interpret OCM images. Developing an intelligent technique for computer-aided diagnosis (CADx) to accurately interpret OCM images will facilitate clinical adoption of the technology and improve patient care. Methods: 497 high-resolution 3-D OCM volumes (600 cross-sectional images each) were collected from 159 ex vivo specimens of 92 female patients. OCM image features were extracted using a convolutional neural network (CNN) model, concatenated with patient information (e.g., age, HPV results), and classified using a support vector machine classifier. Ten-fold cross-validation was used to test the performance of the CADx method in a five-class classification task and a binary classification task. Results: An 88.3 ± 4.9% classification accuracy was achieved for five fine-grained classes of cervical tissue, namely normal, ectropion, low-grade and high-grade squamous intraepithelial lesions (LSIL and HSIL), and cancer. In the binary classification task (low-risk [normal, ectropion and LSIL] vs. high-risk [HSIL and cancer]), the CADx method achieved an area-under-the-curve (AUC) value of 0.959 with an 86.7 ± 11.4% sensitivity and 93.5 ± 3.8% specificity. Conclusion: The proposed deep-learning based CADx method outperformed three human experts. It was also able to identify morphological characteristics in OCM images that were consistent with histopathological interpretations. Significance: Label-free OCM imaging, combined with deep-learning based CADx methods, holds great promise for use in clinical settings for the effective screening and diagnosis of cervical diseases.
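The sensitivity and specificity figures in this abstract are reported as a mean with a spread across cross-validation folds. The sketch below shows how such numbers are commonly computed for a binary low-risk (0) vs. high-risk (1) task. The helper names are hypothetical, and the use of the sample standard deviation is an assumption, since the abstract does not say which spread statistic was used.

```python
from statistics import mean, stdev


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = high-risk."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    # Guard against folds that contain no positives or no negatives.
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


def summarize_folds(values):
    """Mean and sample standard deviation of a per-fold metric (assumed statistic)."""
    return mean(values), stdev(values)


if __name__ == "__main__":
    # Toy labels for illustration only; these are not data from the study.
    folds = [
        ([1, 1, 0, 0], [1, 0, 0, 0]),
        ([1, 0, 0, 0], [1, 0, 1, 0]),
        ([1, 1, 1, 0], [1, 1, 1, 0]),
    ]
    per_fold = [sensitivity_specificity(t, p) for t, p in folds]
    print(summarize_folds([s for s, _ in per_fold]))
    print(summarize_folds([s for _, s in per_fold]))
```

A wide spread in sensitivity relative to specificity, as reported above, can arise when each fold contains few high-risk samples, so a single misclassification shifts the per-fold value noticeably.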
