Multimodal Attention Fusion for Target Speaker Extraction

Feb 02, 2021 · Hiroshi Sato, Tsubasa Ochiai, Keisuke Kinoshita, Marc Delcroix, Tomohiro Nakatani, Shoko Araki

Target speaker extraction, which aims at extracting a target speaker's voice from a mixture of voices using audio, visual or locational clues, has received much interest. Recently, audio-visual target speaker extraction has been proposed, which extracts target speech by using complementary audio and visual clues. Although audio-visual target speaker extraction offers more stable performance than single-modality methods on simulated data, neither its adaptation to realistic situations nor its evaluation on real recorded mixtures has been fully explored. One of the major issues in handling realistic situations is how to make the system robust to clue corruption, because in real recordings the two clues may not be equally reliable, e.g., visual clues may be affected by occlusions. In this work, we propose a novel attention mechanism for multi-modal fusion, together with training methods for it, that makes it possible to effectively capture the reliability of the clues and weight the more reliable ones. Our proposals improve the signal-to-distortion ratio (SDR) by 1.0 dB over conventional fusion mechanisms on simulated data. Moreover, we record an audio-visual dataset of simultaneous speech with realistic visual clue corruption and show that audio-visual target speaker extraction with our proposals works successfully on real data.
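The idea of weighting the more reliable clue can be illustrated with a minimal sketch. It assumes a generic scaled dot-product attention over two clue embeddings; the abstract does not specify the actual mechanism, so the function names, the query vector, and the toy embeddings below are hypothetical and are not the authors' method.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(clues, query):
    """Fuse per-modality clue embeddings with attention weights.

    clues: dict mapping a modality name to an embedding (list of floats).
    query: embedding used to score each clue (hypothetical; a real system
           might derive it from the mixture).
    Returns (weights per modality, fused embedding).
    """
    scale = math.sqrt(len(query))
    names = list(clues)
    # One scalar score per modality: scaled dot product with the query.
    scores = [sum(q * c for q, c in zip(query, clues[n])) / scale for n in names]
    weights = softmax(scores)
    # Fused clue: attention-weighted sum of the modality embeddings.
    fused = [
        sum(w * clues[n][i] for w, n in zip(weights, names))
        for i in range(len(query))
    ]
    return dict(zip(names, weights)), fused


# Toy input: the visual clue is zeroed out to mimic an occlusion.
clues = {
    "audio": [1.0, 0.5, -0.2, 0.8],
    "visual": [0.0, 0.0, 0.0, 0.0],
}
query = [1.0, 1.0, 0.0, 1.0]
weights, fused = attention_fuse(clues, query)
```

In this toy case the corrupted visual clue receives the smaller weight, so the fused embedding is dominated by the audio clue.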

* 7 pages, 5 figures

A Joint Diagonalization Based Efficient Approach to Underdetermined Blind Audio Source Separation Using the Multichannel Wiener Filter

Jan 21, 2021 · Nobutaka Ito, Rintaro Ikeshita, Hiroshi Sawada, Tomohiro Nakatani

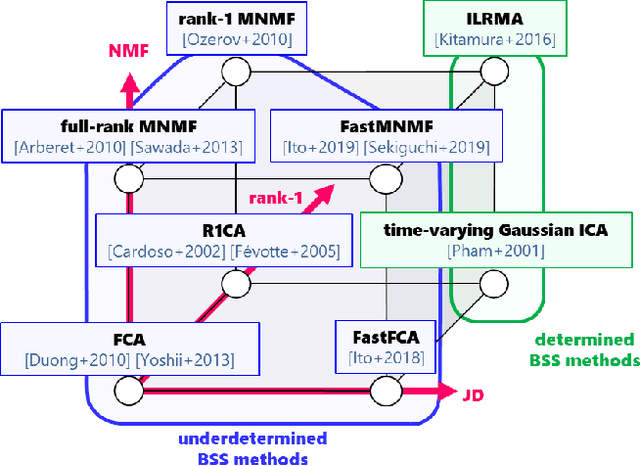

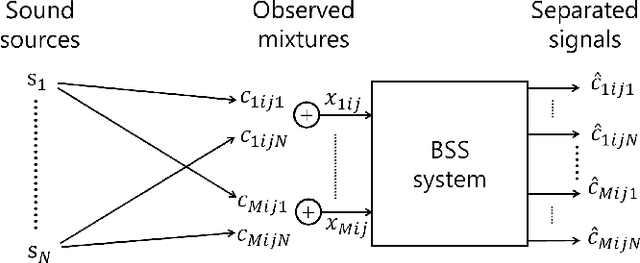

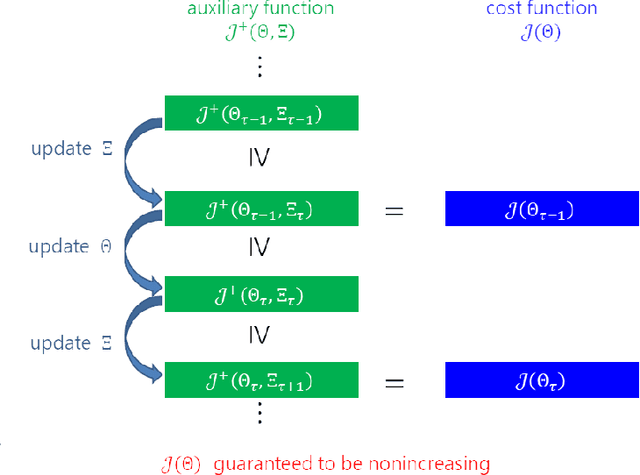

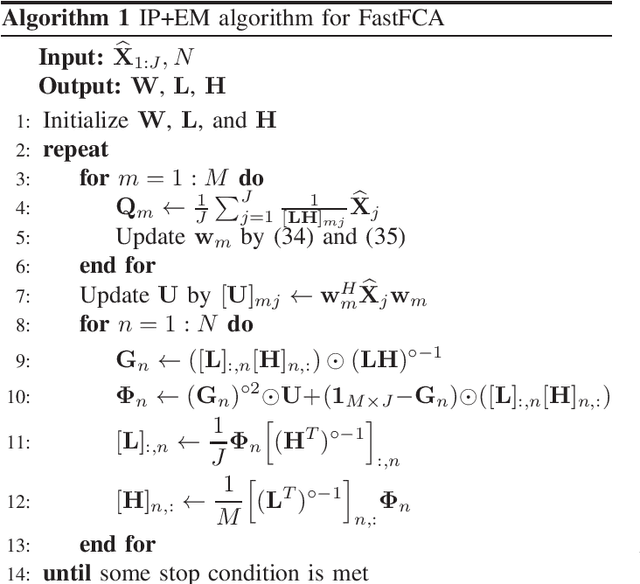

This paper presents a computationally efficient approach to blind source separation (BSS) of audio signals, applicable even when there are more sources than microphones (i.e., the underdetermined case). When there are as many sources as microphones (i.e., the determined case), BSS can be performed computationally efficiently by independent component analysis (ICA). Unfortunately, however, ICA is basically inapplicable to the underdetermined case. Another BSS approach using the multichannel Wiener filter (MWF) is applicable even to this case, and encompasses full-rank spatial covariance analysis (FCA) and multichannel non-negative matrix factorization (MNMF). However, these methods require massive numbers of matrix inversions to design the MWF, and are thus computationally inefficient. To overcome this drawback, we exploit the well-known property of diagonal matrices that matrix inversion amounts to mere inversion of the diagonal elements and can thus be performed computationally efficiently. This makes it possible to drastically reduce the computational cost of the above matrix inversions based on a joint diagonalization (JD) idea, leading to computationally efficient BSS. Specifically, we restrict the N spatial covariance matrices (SCMs) of all N sources to a class of (exactly) jointly diagonalizable matrices. Based on this approach, we present FastFCA, a computationally efficient extension of FCA. We also present a unified framework for underdetermined and determined audio BSS, which highlights a theoretical connection between FastFCA and other methods. Moreover, we reveal that FastFCA can be regarded as a regularized version of approximate joint diagonalization (AJD).
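The cost saving from joint diagonalization can be made concrete with a short derivation. The notation is an assumption for illustration and is not taken from the abstract: $\mathbf{R}_n$ is the SCM of source $n$, $v_n$ its variance at a given time-frequency point, $\mathbf{P}$ a common invertible matrix, and $\boldsymbol{\Lambda}_n$ a diagonal matrix.

$$
\begin{aligned}
\mathbf{R}_n &= \mathbf{P}^{-\mathsf{H}} \boldsymbol{\Lambda}_n \mathbf{P}^{-1}, \qquad n = 1, \dots, N, \\
\mathbf{R}_x &= \sum_{m=1}^{N} v_m \mathbf{R}_m
  = \mathbf{P}^{-\mathsf{H}} \Big( \sum_{m=1}^{N} v_m \boldsymbol{\Lambda}_m \Big) \mathbf{P}^{-1}, \\
\mathbf{W}_n &= v_n \mathbf{R}_n \mathbf{R}_x^{-1}
  = \mathbf{P}^{-\mathsf{H}} \Big[ v_n \boldsymbol{\Lambda}_n \Big( \sum_{m=1}^{N} v_m \boldsymbol{\Lambda}_m \Big)^{-1} \Big] \mathbf{P}^{\mathsf{H}}.
\end{aligned}
$$

Under this assumption, the only matrix inverted per time-frequency point is the diagonal sum $\sum_m v_m \boldsymbol{\Lambda}_m$, whose inverse is obtained by inverting each diagonal element, whereas inverting a general $\mathbf{R}_x$ requires a full matrix inversion.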

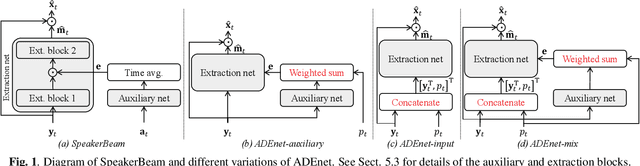

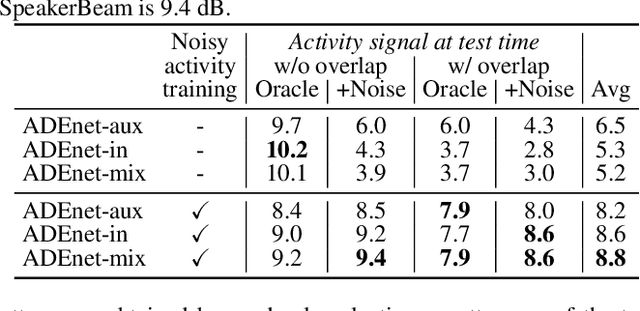

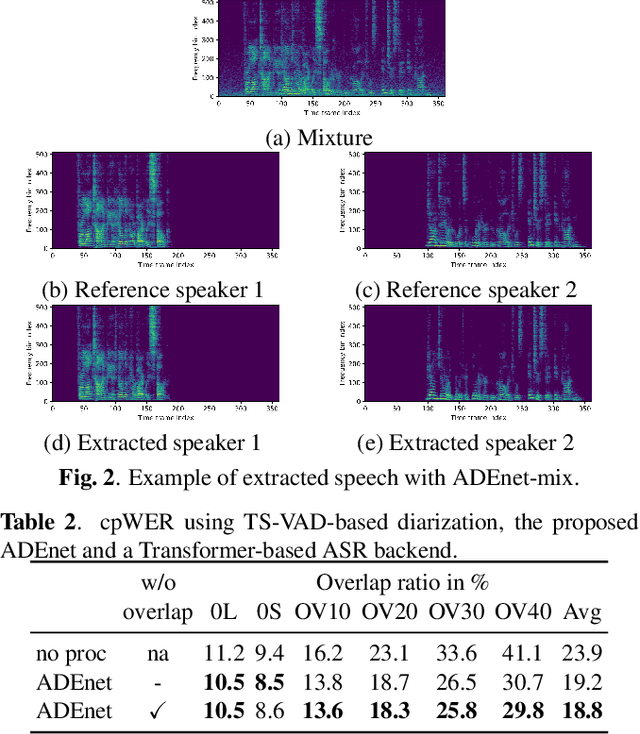

Speaker activity driven neural speech extraction

Jan 14, 2021 · Marc Delcroix, Katerina Zmolikova, Tsubasa Ochiai, Keisuke Kinoshita, Tomohiro Nakatani

Target speech extraction, which extracts the speech of a target speaker in a mixture given auxiliary speaker clues, has recently received increased interest. Various clues have been investigated, such as pre-recorded enrollment utterances, direction information, or video of the target speaker. In this paper, we explore the use of speaker activity information as an auxiliary clue for single-channel neural network-based speech extraction. We propose a speaker activity driven speech extraction neural network (ADEnet) and show that it can achieve performance levels competitive with enrollment-based approaches, without the need for pre-recordings. We further demonstrate the potential of the proposed approach for processing meeting-like recordings, where speaker activity obtained from a diarization system is used as a speaker clue for ADEnet. We show that this simple yet practical approach can successfully extract speakers after diarization, which leads to improved ASR performance when using a single microphone, especially in highly overlapping conditions, with a relative word error rate reduction of up to 25%.
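For readers unfamiliar with the metric, a relative word error rate (WER) reduction compares the WER of a system against that of a baseline. The sketch below uses hypothetical WER values chosen only to illustrate the arithmetic; they are not results from the paper.

```python
def relative_wer_reduction(baseline_wer, system_wer):
    """Return the relative WER reduction in percent.

    Both arguments are WERs in percent; baseline_wer must be positive.
    """
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return 100.0 * (baseline_wer - system_wer) / baseline_wer


# Hypothetical numbers: a baseline at 20.0% WER and a system at 15.0% WER.
reduction = relative_wer_reduction(20.0, 15.0)  # 25.0 (percent)
```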

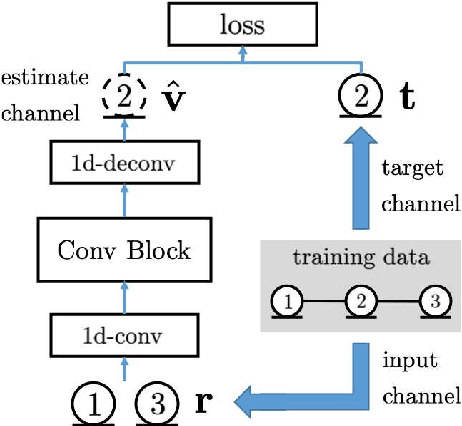

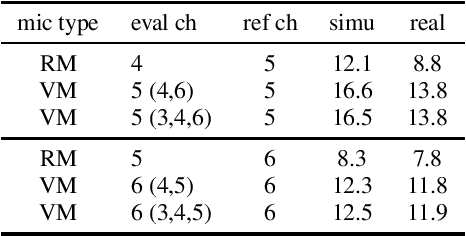

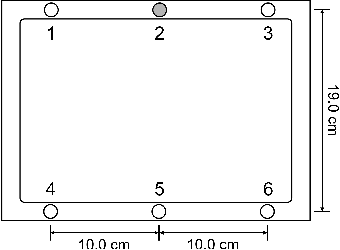

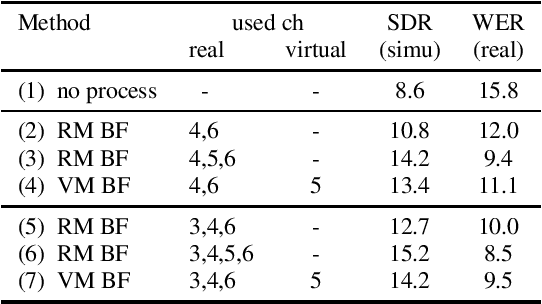

Neural Network-based Virtual Microphone Estimator

Jan 12, 2021 · Tsubasa Ochiai, Marc Delcroix, Tomohiro Nakatani, Rintaro Ikeshita, Keisuke Kinoshita, Shoko Araki

Developing microphone array technologies for a small number of microphones is important due to the constraints of many devices. One direction to address this situation consists of virtually augmenting the number of microphone signals, e.g., based on several physical model assumptions. However, such assumptions are not necessarily met in realistic conditions. In this paper, as an alternative approach, we propose a neural network-based virtual microphone estimator (NN-VME). The NN-VME estimates virtual microphone signals directly in the time domain, by utilizing the precise estimation capability of the recent time-domain neural networks. We adopt a fully supervised learning framework that uses actual observations at the locations of the virtual microphones at training time. Consequently, the NN-VME can be trained using only multi-channel observations and thus directly on real recordings, avoiding the need for unrealistic physical model-based assumptions. Experiments on the CHiME-4 corpus show that the proposed NN-VME achieves high virtual microphone estimation performance even for real recordings and that a beamformer augmented with the NN-VME improves both the speech enhancement and recognition performance.

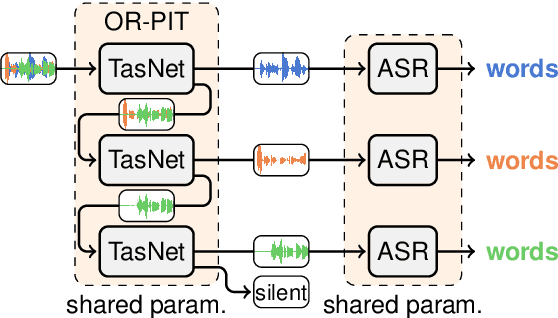

Multi-talker ASR for an unknown number of sources: Joint training of source counting, separation and ASR

Jun 04, 2020 · Thilo von Neumann, Christoph Boeddeker, Lukas Drude, Keisuke Kinoshita, Marc Delcroix, Tomohiro Nakatani, Reinhold Haeb-Umbach

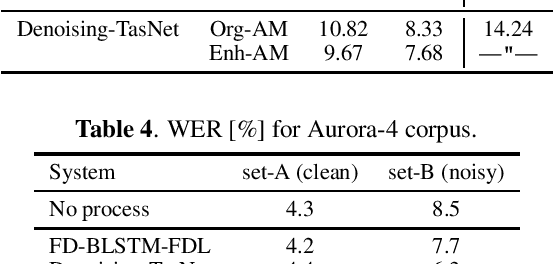

Most approaches to multi-talker overlapped speech separation and recognition assume that the number of simultaneously active speakers is given, but in realistic situations, it is typically unknown. To cope with this, we extend an iterative speech extraction system with mechanisms to count the number of sources and combine it with a single-talker speech recognizer to form the first end-to-end multi-talker automatic speech recognition system for an unknown number of active speakers. Our experiments show very promising performance in counting accuracy, source separation and speech recognition on simulated clean mixtures from WSJ0-2mix and WSJ0-3mix. Among other results, we set a new state-of-the-art word error rate on the WSJ0-2mix database. Furthermore, our system generalizes well to a larger number of speakers than it ever saw during training, as shown in experiments with the WSJ0-4mix database.

Improving noise robust automatic speech recognition with single-channel time-domain enhancement network

Mar 09, 2020 · Keisuke Kinoshita, Tsubasa Ochiai, Marc Delcroix, Tomohiro Nakatani

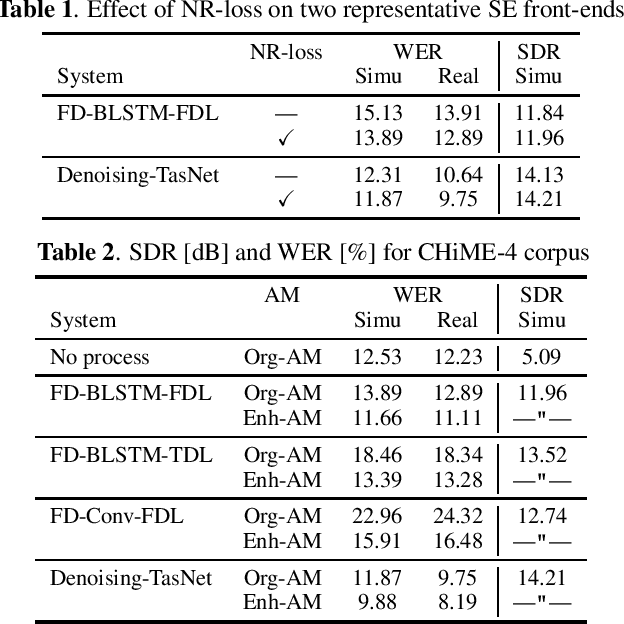

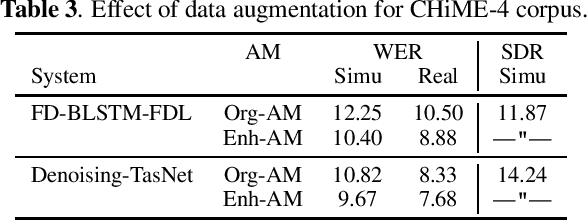

With the advent of deep learning, research on noise-robust automatic speech recognition (ASR) has progressed rapidly. However, the ASR performance of single-channel systems in noisy conditions remains unsatisfactory. Indeed, most single-channel speech enhancement (SE) methods (denoising) have brought only limited performance gains over a state-of-the-art ASR back-end trained on multi-condition training data. Recently, there has been much research on neural network-based SE methods working in the time domain, showing levels of performance never attained before. However, it has not been established whether the high enhancement performance achieved by such time-domain approaches translates into ASR gains. In this paper, we show that a single-channel time-domain denoising approach can significantly improve ASR performance, providing more than 30% relative word error reduction over a strong ASR back-end on the real evaluation data of the single-channel track of the CHiME-4 dataset. These positive results demonstrate that single-channel noise reduction can still improve ASR performance, which should open the door to more research in that direction.

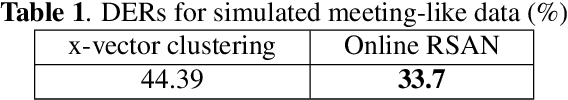

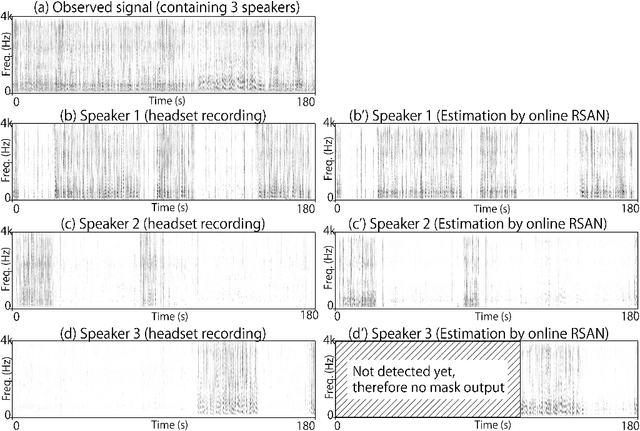

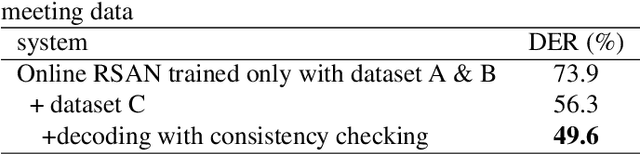

Tackling real noisy reverberant meetings with all-neural source separation, counting, and diarization system

Mar 09, 2020 · Keisuke Kinoshita, Marc Delcroix, Shoko Araki, Tomohiro Nakatani

Automatic meeting analysis is an essential technology for letting, e.g., smart devices follow and respond to our conversations. To achieve optimal automatic meeting analysis, we previously proposed an all-neural approach that jointly solves the source separation, speaker diarization and source counting problems in an optimal way (in the sense that all three tasks can be jointly optimized through error back-propagation). It was shown that the method could handle simulated clean (noiseless and anechoic) dialog-like data well, and achieved very good performance in comparison with several conventional methods. However, it was not clear whether such an all-neural approach would generalize successfully to more complicated real meeting data containing more spontaneously speaking speakers, severe noise and reverberation, or how it would perform in comparison with state-of-the-art systems in such scenarios. In this paper, we first consider practical issues required for improving the robustness of the all-neural approach, and then experimentally show that, even in real meeting scenarios, the all-neural approach can perform effective speech enhancement, and simultaneously outperform state-of-the-art systems.

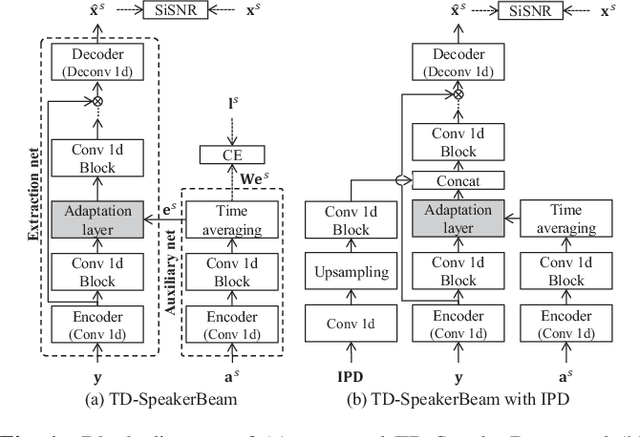

Improving speaker discrimination of target speech extraction with time-domain SpeakerBeam

Jan 23, 2020 · Marc Delcroix, Tsubasa Ochiai, Katerina Zmolikova, Keisuke Kinoshita, Naohiro Tawara, Tomohiro Nakatani, Shoko Araki

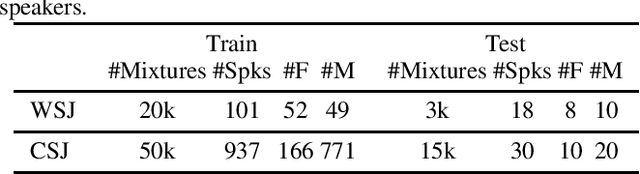

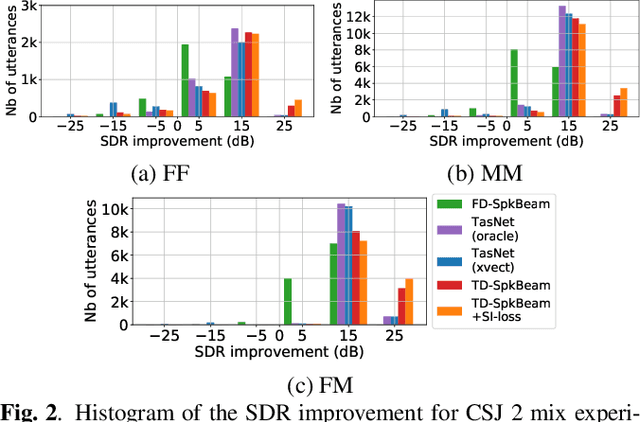

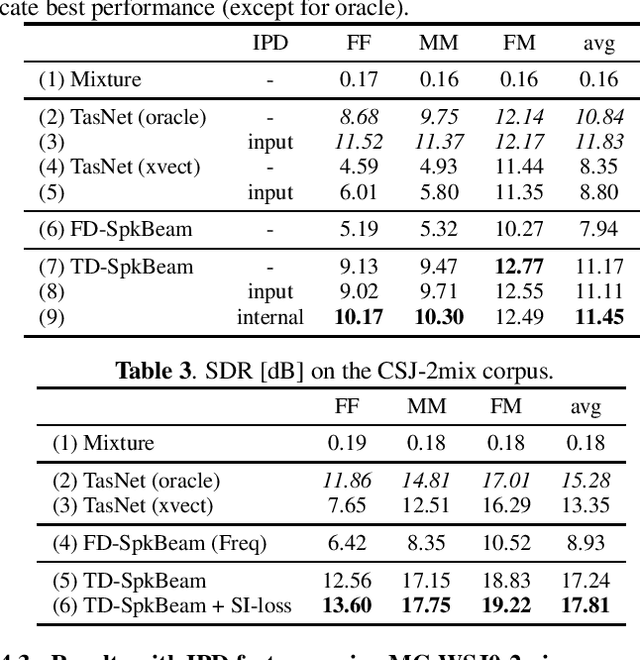

Target speech extraction, which extracts a single target source in a mixture given clues about the target speaker, has attracted increasing attention. We have recently proposed SpeakerBeam, which exploits an adaptation utterance of the target speaker to extract his/her voice characteristics, which are then used to guide a neural network towards extracting the speech of that speaker. SpeakerBeam presents a practical alternative to speech separation as it enables tracking the speech of a target speaker across utterances, and achieves promising speech extraction performance. However, it sometimes fails when speakers have similar voice characteristics, such as in same-gender mixtures, because it is difficult to discriminate the target speaker from the interfering speakers. In this paper, we investigate strategies for improving the speaker discrimination capability of SpeakerBeam. First, we propose a time-domain implementation of SpeakerBeam similar to that proposed for a time-domain audio separation network (TasNet), which has achieved state-of-the-art performance for speech separation. In addition, we investigate (1) the use of spatial features to better discriminate speakers when microphone array recordings are available, and (2) the addition of an auxiliary speaker identification loss to help learn more discriminative voice characteristics. We show experimentally that these strategies greatly improve speech extraction performance, especially for same-gender mixtures, and outperform TasNet in terms of target speech extraction.

End-to-end training of time domain audio separation and recognition

Dec 25, 2019 · Thilo von Neumann, Keisuke Kinoshita, Lukas Drude, Christoph Boeddeker, Marc Delcroix, Tomohiro Nakatani, Reinhold Haeb-Umbach

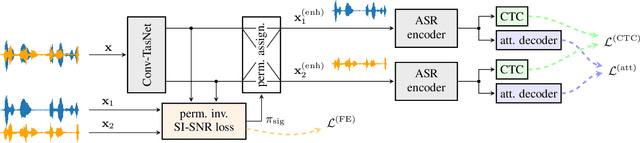

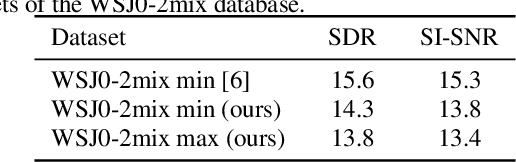

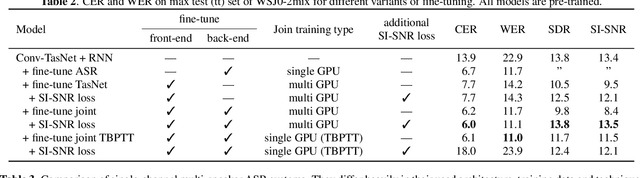

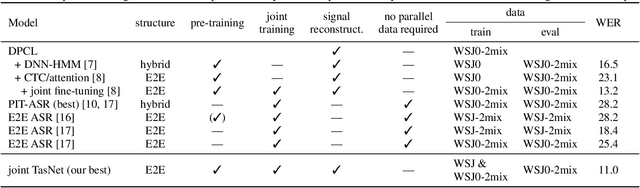

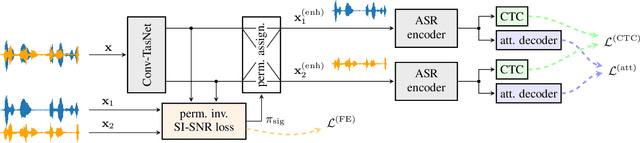

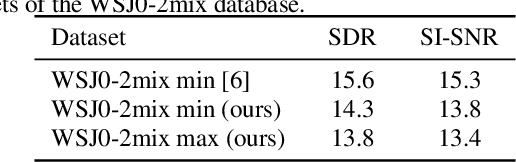

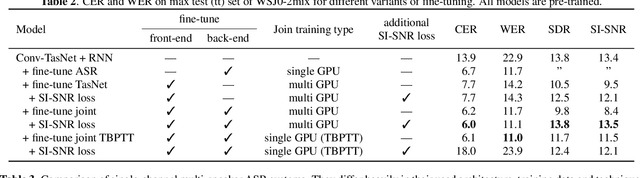

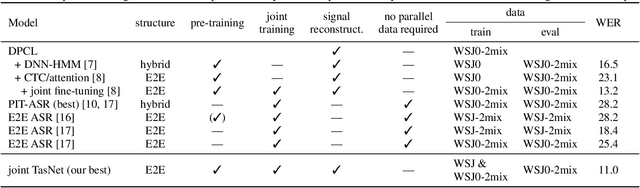

The rising interest in single-channel multi-speaker speech separation sparked development of End-to-End (E2E) approaches to multi-speaker speech recognition. However, up until now, state-of-the-art neural network-based time domain source separation has not yet been combined with E2E speech recognition. We here demonstrate how to combine a separation module based on a Convolutional Time domain Audio Separation Network (Conv-TasNet) with an E2E speech recognizer and how to train such a model jointly by distributing it over multiple GPUs or by approximating truncated back-propagation for the convolutional front-end. To put this work into perspective and illustrate the complexity of the design space, we provide a compact overview of single-channel multi-speaker recognition systems. Our experiments show a word error rate of 11.0% on WSJ0-2mix and indicate that our joint time domain model can yield substantial improvements over cascade DNN-HMM and monolithic E2E frequency domain systems proposed so far.

End-to-end training of time domain audio separation and recognition

Dec 18, 2019 · Thilo von Neumann, Keisuke Kinoshita, Lukas Drude, Christoph Boeddeker, Marc Delcroix, Tomohiro Nakatani, Reinhold Haeb-Umbach

The rising interest in single-channel multi-speaker speech separation sparked development of End-to-End (E2E) approaches to multi-speaker speech recognition. However, up until now, state-of-the-art neural network-based time domain source separation has not yet been combined with E2E speech recognition. We here demonstrate how to combine a separation module based on a Convolutional Time domain Audio Separation Network (Conv-TasNet) with an E2E speech recognizer and how to train such a model jointly by distributing it over multiple GPUs or by approximating truncated back-propagation for the convolutional front-end. To put this work into perspective and illustrate the complexity of the design space, we provide a compact overview of single-channel multi-speaker recognition systems. Our experiments show a word error rate of 11.0% on WSJ0-2mix and indicate that our joint time domain model can yield substantial improvements over cascade DNN-HMM and monolithic E2E frequency domain systems proposed so far.
