Daichi Kitamura

Audio Spotforming Using Nonnegative Tensor Factorization with Attractor-Based Regularization

Jul 12, 2024

Abstract: Spotforming is a target-speaker extraction technique that uses multiple microphone arrays. This method applies beamforming (BF) to each microphone array, and the common components among the BF outputs are estimated as the target source. This study proposes a new common component extraction method based on nonnegative tensor factorization (NTF) for higher model interpretability and for spotforming that is more robust to hyperparameter choices. Moreover, attractor-based regularization is introduced to facilitate the automatic selection of optimal target bases in the NTF. Experimental results show that the proposed method outperforms conventional methods in spotforming performance and also shows some characteristics suitable for practical use.

NoisyILRMA: Diffuse-Noise-Aware Independent Low-Rank Matrix Analysis for Fast Blind Source Extraction

Jun 22, 2023

Abstract: In this paper, we address the multichannel blind source extraction (BSE) of a single source in diffuse noise environments. To solve this problem even faster than fast multichannel nonnegative matrix factorization (FastMNMF) and its variant, we propose a BSE method called NoisyILRMA, which is a modification of independent low-rank matrix analysis (ILRMA) that accounts for diffuse noise. NoisyILRMA can achieve considerably fast BSE by incorporating an algorithm developed for independent vector extraction. In addition, to improve the BSE performance of NoisyILRMA, we propose a mechanism that switches, during optimization, from the ILRMA-like source model based on nonnegative matrix factorization to a more expressive source model. In the experiment, we show that NoisyILRMA runs faster than a FastMNMF algorithm while maintaining the BSE performance. We also confirm that the switching mechanism improves the BSE performance of NoisyILRMA.

Differentiable Digital Signal Processing Mixture Model for Synthesis Parameter Extraction from Mixture of Harmonic Sounds

Feb 01, 2022

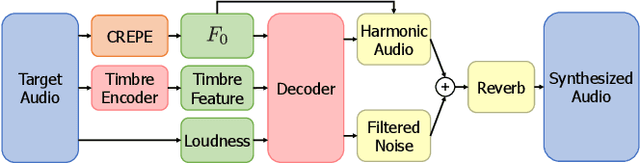

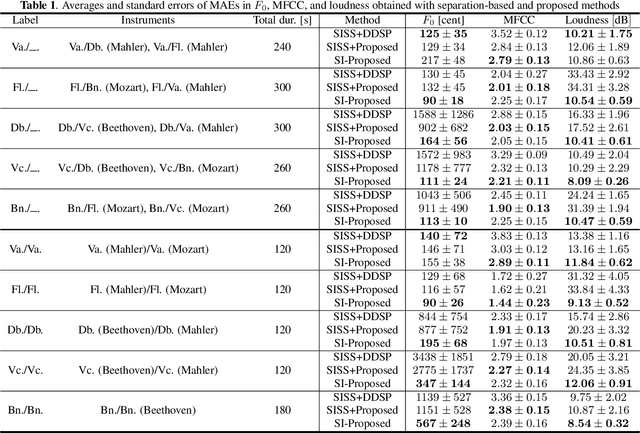

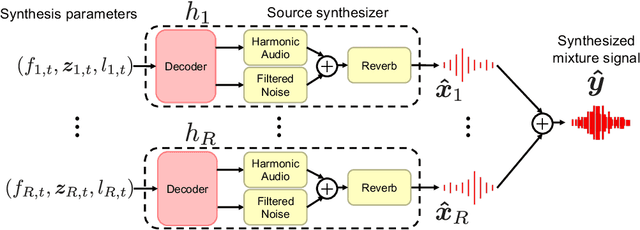

Abstract: A differentiable digital signal processing (DDSP) autoencoder is a musical sound synthesizer that combines a deep neural network (DNN) and spectral modeling synthesis. It allows us to flexibly edit sounds by changing the fundamental frequency, timbre feature, and loudness (synthesis parameters) extracted from an input sound. However, it is designed for a monophonic harmonic sound and cannot handle mixtures of harmonic sounds. In this paper, we propose a model (DDSP mixture model) that represents a mixture as the sum of the outputs of multiple pretrained DDSP autoencoders. By fitting the output of the proposed model to the observed mixture, we can directly estimate the synthesis parameters of each source. Through synthesis parameter extraction experiments, we show that the proposed method has high and stable performance compared with a straightforward method that applies the DDSP autoencoder to the signals separated by an audio source separation method.

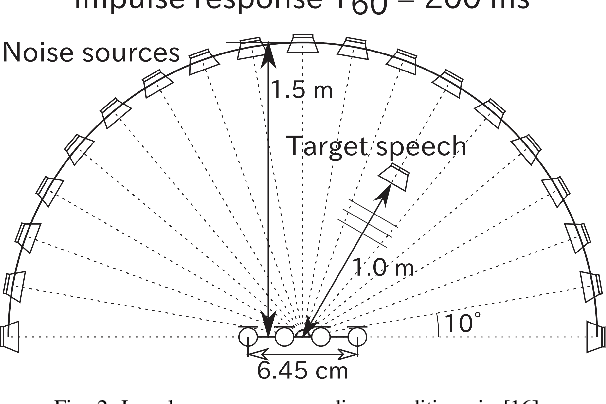

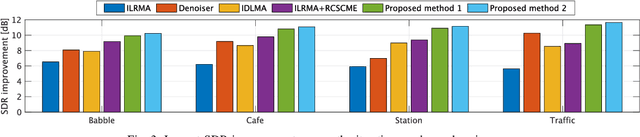

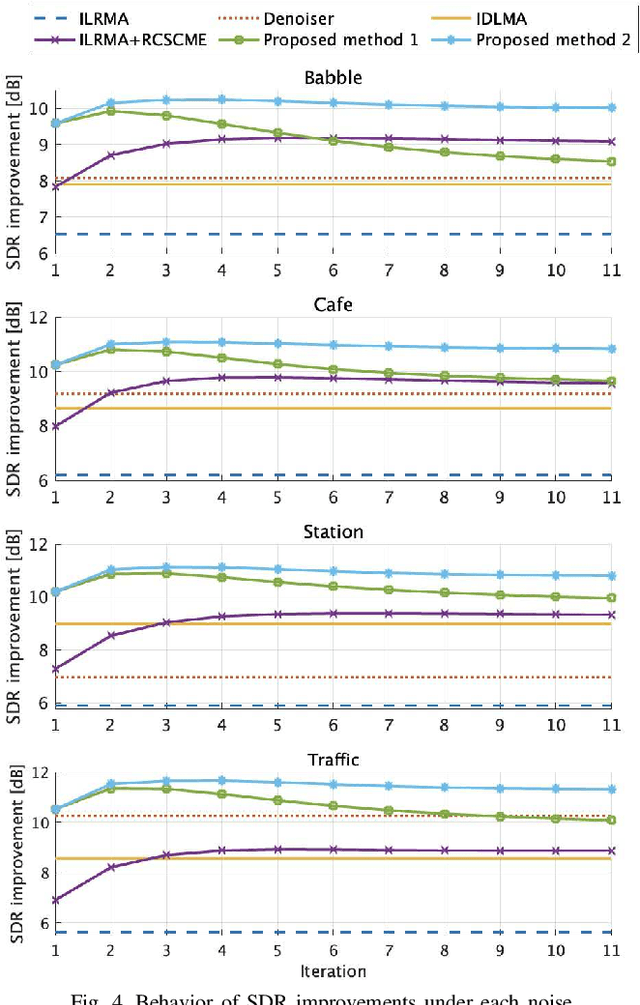

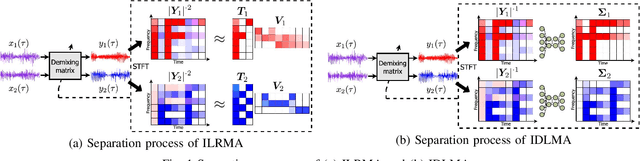

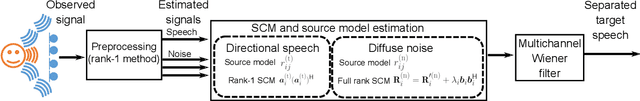

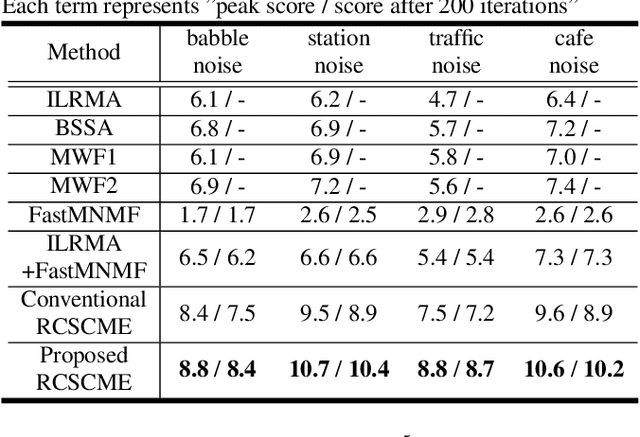

Speech Enhancement by Noise Self-Supervised Rank-Constrained Spatial Covariance Matrix Estimation via Independent Deeply Learned Matrix Analysis

Sep 10, 2021

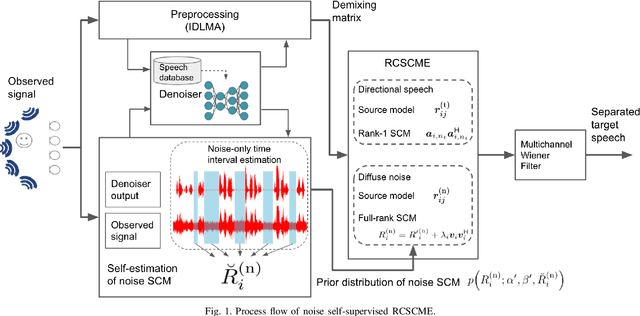

Abstract: Rank-constrained spatial covariance matrix estimation (RCSCME) is a method for situations in which a directional target speech and diffuse noise are mixed. In conventional RCSCME, independent low-rank matrix analysis (ILRMA) is used as the preprocessing method. We propose RCSCME using independent deeply learned matrix analysis (IDLMA), which is a supervised extension of ILRMA. In this method, IDLMA requires deep neural networks (DNNs) to separate the target speech and the noise. We use Denoiser, which is a single-channel speech enhancement DNN, in IDLMA to estimate not only the target speech but also the noise. We also propose noise self-supervised RCSCME, in which we estimate the noise-only time intervals using the output of Denoiser and design the prior distribution of the noise spatial covariance matrix for RCSCME. We confirm that the proposed methods outperform the conventional methods under several noise conditions.

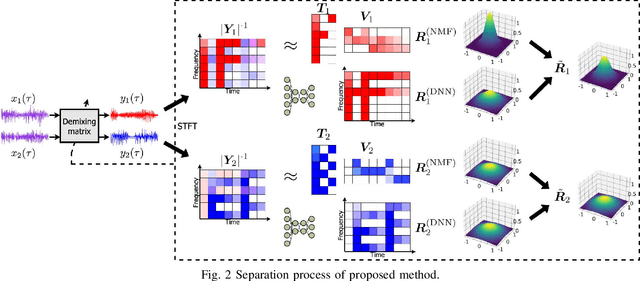

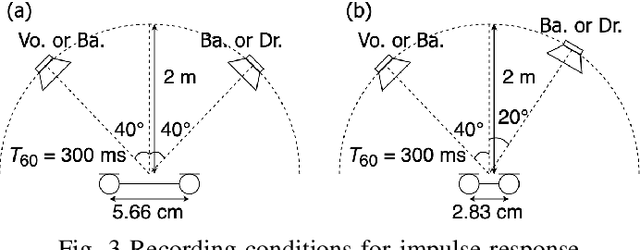

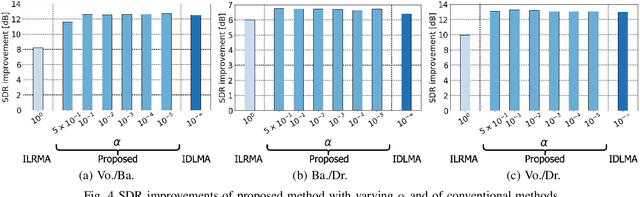

Multichannel Audio Source Separation with Independent Deeply Learned Matrix Analysis Using Product of Source Models

Sep 02, 2021

Abstract: Independent deeply learned matrix analysis (IDLMA) is one of the state-of-the-art multichannel audio source separation methods using source power estimation based on deep neural networks (DNNs). The DNN-based power estimation works well for sounds having timbres similar to the DNN training data. However, the sounds to which IDLMA is applied do not always have such timbres, and the timbral mismatch degrades the performance of IDLMA. To tackle this problem, we focus on a blind source separation counterpart of IDLMA, independent low-rank matrix analysis. It uses nonnegative matrix factorization (NMF) as the source model, which can capture source spectral components that only appear in the target mixture, using the low-rank structure of the source spectrogram as a clue. We thus extend the DNN-based source model to encompass the NMF-based source model on the basis of the product-of-experts concept, which we call the product of source models (PoSM). For the proposed PoSM-based IDLMA, we derive a computationally efficient parameter estimation algorithm based on an optimization principle called the majorization-minimization algorithm. Experimental evaluations show the effectiveness of the proposed method.
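As a rough illustration of the product-of-experts idea mentioned in this abstract, consider the case where both source models are zero-mean complex Gaussians over a time-frequency component $y_{ft}$. This is an assumption made here for illustration: the abstract does not state the distributions, and the symbols $r^{\mathrm{DNN}}_{ft}$, $r^{\mathrm{NMF}}_{ft}$, $r^{\mathrm{PoSM}}_{ft}$, $w_{fk}$, and $h_{kt}$ are hypothetical names. Under that assumption, the product of the two densities is again a zero-mean Gaussian whose variance is the inverse of the summed inverse variances:

$$
\mathcal{N}_c\!\left(y_{ft};\,0,\,r^{\mathrm{DNN}}_{ft}\right)\,
\mathcal{N}_c\!\left(y_{ft};\,0,\,r^{\mathrm{NMF}}_{ft}\right)
\;\propto\;
\exp\!\left(-|y_{ft}|^{2}\left(\frac{1}{r^{\mathrm{DNN}}_{ft}}+\frac{1}{r^{\mathrm{NMF}}_{ft}}\right)\right),
\qquad
r^{\mathrm{PoSM}}_{ft}=\left(\frac{1}{r^{\mathrm{DNN}}_{ft}}+\frac{1}{r^{\mathrm{NMF}}_{ft}}\right)^{-1},
\qquad
r^{\mathrm{NMF}}_{ft}=\sum_{k} w_{fk}\,h_{kt}.
$$

In this reading, the combined variance is small wherever either expert assigns low power, so the NMF term can correct DNN estimates that suffer from timbral mismatch. The paper's actual model may differ in its details.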

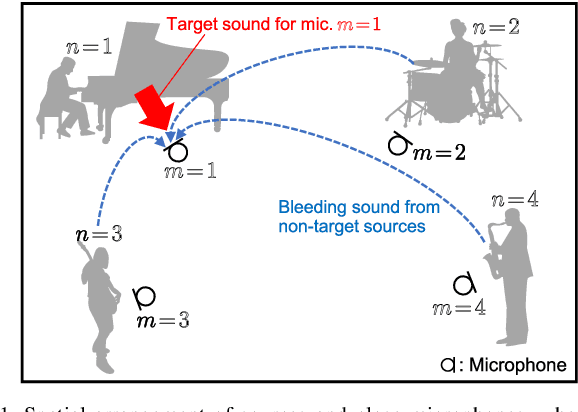

Prior Distribution Design for Music Bleeding-Sound Reduction Based on Nonnegative Matrix Factorization

Sep 01, 2021

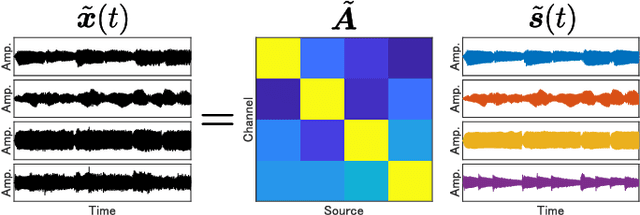

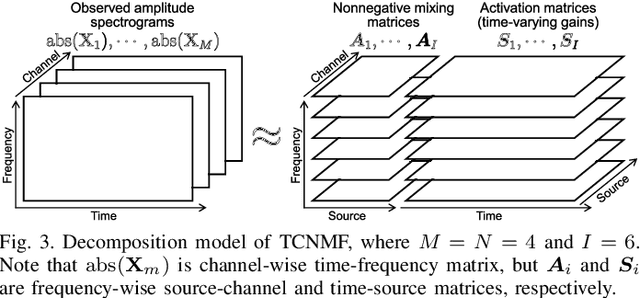

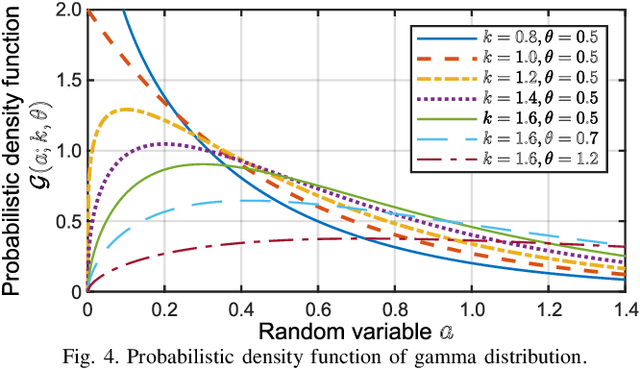

Abstract: When we place microphones close to a sound source near other sources in audio recording, the obtained audio signal includes undesired sound from the other sources, which is often called cross-talk or bleeding sound. For many audio applications including onstage sound reinforcement and sound editing after a live performance, it is important to reduce the bleeding sound in each recorded signal. However, since microphones are spatially apart from each other in this situation, typical phase-aware blind source separation (BSS) methods cannot be used. We propose a phase-insensitive method for blind bleeding-sound reduction. This method is based on time-channel nonnegative matrix factorization, which is a BSS method using only amplitude spectrograms. In the proposed method, we introduce a gamma-distribution-based prior for the leakage levels of bleeding sounds. Its optimization can be interpreted as maximum a posteriori estimation. The experimental results indicate that the proposed method is more effective than other BSS methods for bleeding-sound reduction of music signals.
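For readers unfamiliar with NMF on amplitude spectrograms, the following is a minimal sketch of plain NMF with multiplicative updates for the generalized Kullback-Leibler divergence. It is not the time-channel NMF or the gamma prior described in the abstract; the function names `nmf_kl` and `kl_divergence`, the matrix sizes, and the synthetic data are all assumptions chosen for illustration.

```python
import numpy as np


def kl_divergence(X, V, eps=1e-12):
    """Generalized KL divergence between nonnegative matrices X and V."""
    return np.sum(X * np.log((X + eps) / (V + eps)) - X + V)


def nmf_kl(X, n_bases, n_iter=200, eps=1e-12, seed=0):
    """Factorize a nonnegative matrix X (freq x time) as W @ H.

    Uses the standard multiplicative updates for the generalized
    KL divergence. W holds spectral bases, H holds activations.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_time = X.shape
    W = rng.uniform(0.1, 1.0, (n_freq, n_bases))
    H = rng.uniform(0.1, 1.0, (n_bases, n_time))
    for _ in range(n_iter):
        V = W @ H + eps
        W *= ((X / V) @ H.T) / (H.sum(axis=1) + eps)
        V = W @ H + eps
        H *= (W.T @ (X / V)) / (W.sum(axis=0)[:, None] + eps)
    return W, H


# Synthetic "amplitude spectrogram" with an exact rank-2 structure (illustrative only).
rng = np.random.default_rng(1)
X = rng.uniform(0.1, 1.0, (16, 2)) @ rng.uniform(0.1, 1.0, (2, 30))

W_short, H_short = nmf_kl(X, n_bases=2, n_iter=1)
W_long, H_long = nmf_kl(X, n_bases=2, n_iter=200)
print(kl_divergence(X, W_short @ H_short), kl_divergence(X, W_long @ H_long))
```

Because only nonnegative amplitudes enter the factorization, no phase information is needed, which is the property the abstract relies on when microphones are far apart.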

Independent Deeply Learned Tensor Analysis for Determined Audio Source Separation

Jun 10, 2021

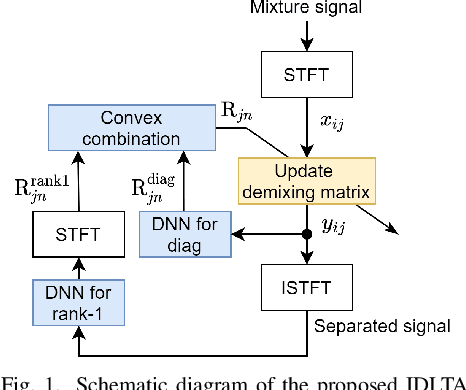

Abstract: We address the determined audio source separation problem in the time-frequency domain. In independent deeply learned matrix analysis (IDLMA), it is assumed that the inter-frequency correlation of each source spectrum is zero, which is inappropriate for modeling nonstationary signals such as music signals. To account for the correlation between frequencies, independent positive semidefinite tensor analysis has been proposed. This unsupervised (blind) method, however, severely restricts the structure of frequency covariance matrices (FCMs) to reduce the number of model parameters. As an extension of these conventional approaches, we here propose a supervised method that models FCMs using deep neural networks (DNNs). It is difficult to directly infer FCMs using DNNs. Therefore, we also propose a new FCM model represented as a convex combination of a diagonal FCM and a rank-1 FCM. Our FCM model is flexible enough to not only consider inter-frequency correlation, but also capture the dynamics of time-varying FCMs of nonstationary signals. We infer the proposed FCMs using two DNNs: a DNN for power spectrum estimation and a DNN for time-domain signal estimation. An experimental result of separating music signals shows that the proposed method provides higher separation performance than IDLMA.
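The convex-combination FCM described above can be sketched numerically as follows. This is an illustrative construction only: the function name `convex_fcm`, the weight `alpha`, and the random `power` and `signal` vectors are assumptions standing in for the outputs of the two DNNs, and the paper's exact parameterization is not given in the abstract.

```python
import numpy as np


def convex_fcm(power, signal, alpha):
    """Return alpha * diag(power) + (1 - alpha) * signal signal^H.

    power  : nonnegative power spectrum for one time frame (length F)
    signal : complex spectrum for the same frame (length F)
    alpha  : convex weight in [0, 1]
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    diagonal_part = np.diag(power.astype(complex))
    rank1_part = np.outer(signal, signal.conj())
    return alpha * diagonal_part + (1.0 - alpha) * rank1_part


rng = np.random.default_rng(0)
n_freq = 4
power = rng.uniform(0.1, 1.0, n_freq)
signal = rng.standard_normal(n_freq) + 1j * rng.standard_normal(n_freq)

fcm = convex_fcm(power, signal, alpha=0.7)
eigenvalues = np.linalg.eigvalsh(fcm)  # real eigenvalues of a Hermitian matrix
print(eigenvalues)
```

With `alpha = 1` the matrix is diagonal, which corresponds to the zero inter-frequency correlation assumed in IDLMA. With `alpha < 1` the rank-1 term introduces off-diagonal entries, and the result stays Hermitian positive semidefinite because both parts are.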

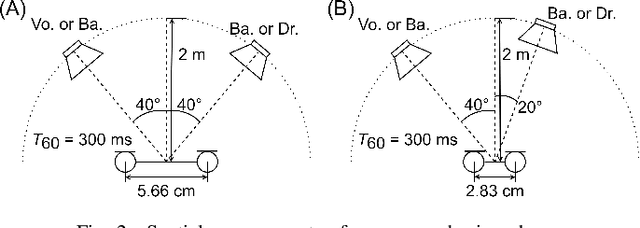

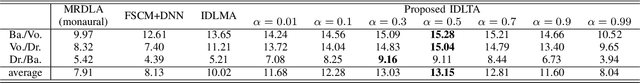

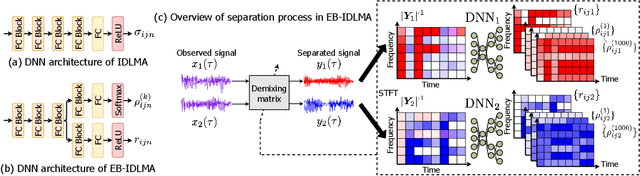

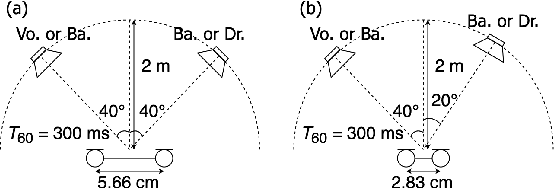

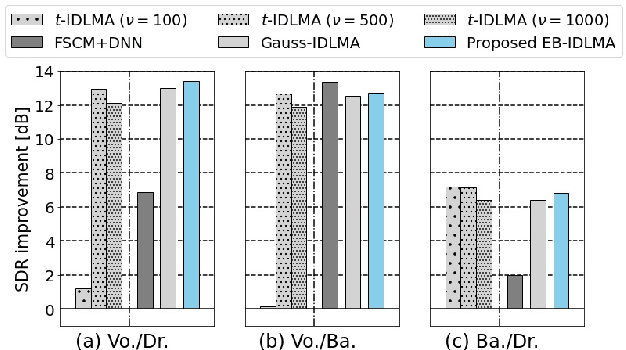

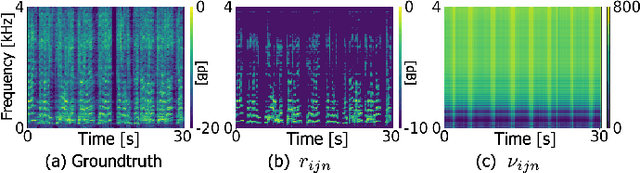

Empirical Bayesian Independent Deeply Learned Matrix Analysis For Multichannel Audio Source Separation

Jun 07, 2021

Abstract: Independent deeply learned matrix analysis (IDLMA) is one of the state-of-the-art supervised multichannel audio source separation methods. It blindly estimates the demixing filters on the basis of source independence, using the source model estimated by the deep neural network (DNN). However, since the ratios of the source to interferer signals vary widely among time-frequency (TF) slots, it is difficult to obtain reliable estimated power spectrograms of sources at all TF slots. In this paper, we propose an IDLMA extension, empirical Bayesian IDLMA (EB-IDLMA), by introducing a prior distribution of source power spectrograms and treating the source power spectrograms as latent random variables. This treatment allows us to implicitly consider the reliability of the estimated source power spectrograms for the estimation of demixing filters through the hyperparameters of the prior distribution estimated by the DNN. Experimental evaluations show the effectiveness of EB-IDLMA and the importance of introducing the reliability of the estimated source power spectrograms.

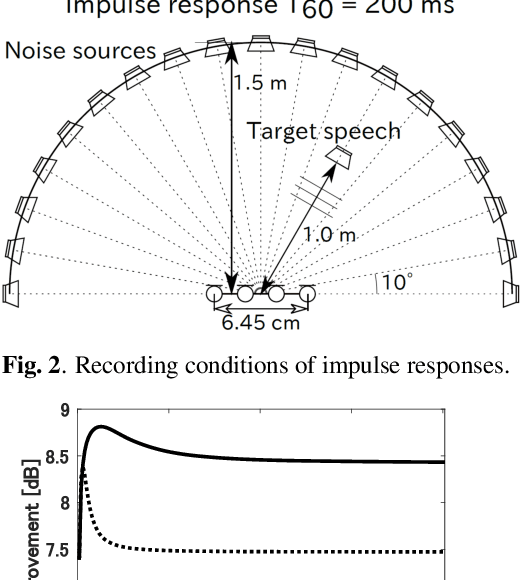

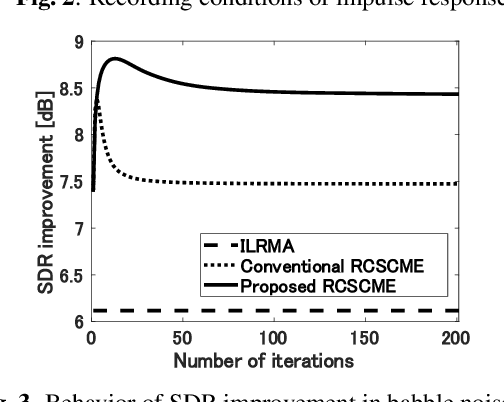

Deficient Basis Estimation of Noise Spatial Covariance Matrix for Rank-Constrained Spatial Covariance Matrix Estimation Method in Blind Speech Extraction

May 06, 2021

Abstract: Rank-constrained spatial covariance matrix estimation (RCSCME) is a state-of-the-art blind speech extraction method applied to cases where one directional target speech and diffuse noise are mixed. In this paper, we propose a new algorithmic extension of RCSCME. RCSCME complements the one deficient rank of the diffuse noise spatial covariance matrix, which cannot be estimated via preprocessing such as independent low-rank matrix analysis, and estimates the source model parameters simultaneously. In the conventional RCSCME, the direction of the deficient basis is fixed in advance and only the scale is estimated; however, the candidate for this deficient basis is not unique in general. In the proposed RCSCME model, the deficient basis itself can be accurately estimated as a vector variable by solving a vector optimization problem. We also derive new update rules based on the EM algorithm. We confirm that the proposed method outperforms conventional methods under several noise conditions.
