Tingting Jiang

Contrastive and Selective Hidden Embeddings for Medical Image Segmentation

Jan 21, 2022

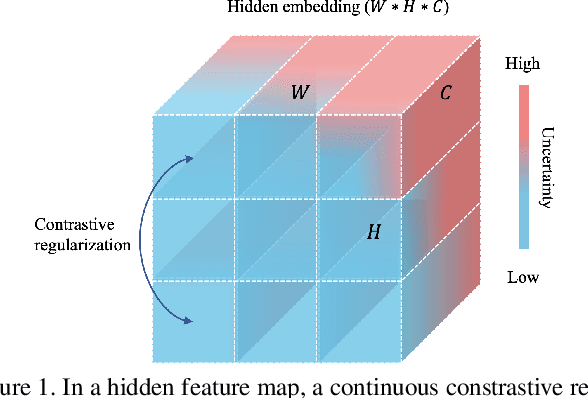

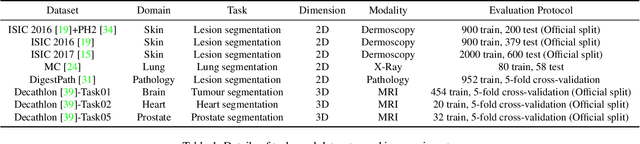

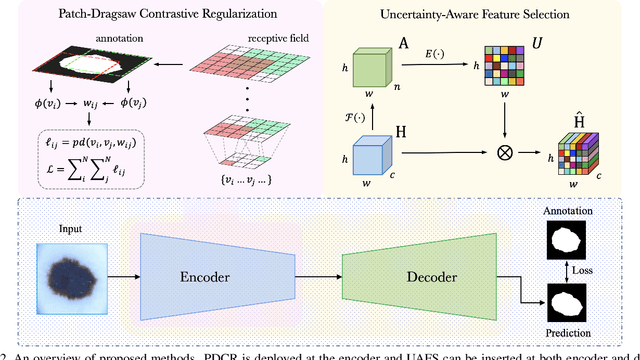

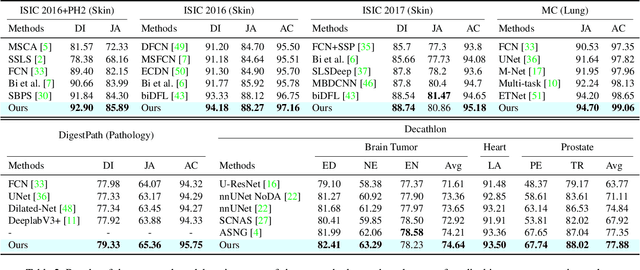

Abstract: Medical image segmentation has been widely recognized as a pivotal procedure for clinical diagnosis, analysis, and treatment planning. However, the laborious and expensive annotation process slows further advances. Contrastive learning-based weight pre-training provides an alternative by leveraging unlabeled data to learn a good representation. In this paper, we investigate how contrastive learning benefits general supervised medical segmentation tasks. To this end, patch-dragsaw contrastive regularization (PDCR) is proposed to perform patch-level tugging and repulsing, with the extent controlled by a continuous affinity score. In addition, a new structure dubbed the uncertainty-aware feature selection block (UAFS) is designed to perform feature selection, which can handle the learning target shift caused by minority features with high uncertainty. By plugging the two proposed modules into the existing segmentation architecture, we achieve state-of-the-art results across 8 public datasets from 6 domains. The newly designed modules further reduce the amount of training data to a quarter while achieving comparable, if not better, performance. From this perspective, we take the opposite direction of the original self/un-supervised contrastive learning by further excavating information contained within the label.

ASFormer: Transformer for Action Segmentation

Oct 16, 2021

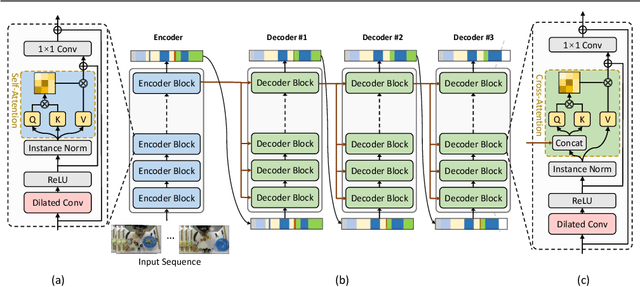

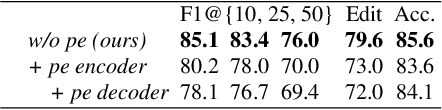

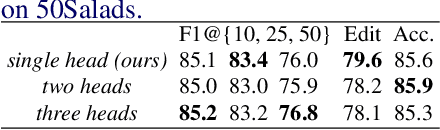

Abstract: Algorithms for the action segmentation task typically use temporal models to predict what action is occurring at each frame for a minute-long daily activity. Recent studies have shown the potential of the Transformer in modeling the relations among elements in sequential data. However, there are several major concerns when directly applying the Transformer to the action segmentation task, such as the lack of inductive biases with small training sets, the deficit in processing long input sequences, and the limitation of the decoder architecture in utilizing temporal relations among multiple action segments to refine the initial predictions. To address these concerns, we design an efficient Transformer-based model for the action segmentation task, named ASFormer, with three distinctive characteristics: (i) We explicitly bring in the local connectivity inductive priors because of the high locality of features. This constrains the hypothesis space within a reliable scope, and helps the model learn a proper target function for action segmentation with small training sets. (ii) We apply a pre-defined hierarchical representation pattern that efficiently handles long input sequences. (iii) We carefully design the decoder to refine the initial predictions from the encoder. Extensive experiments on three public datasets demonstrate the effectiveness of our method. Code is available at https://github.com/ChinaYi/ASFormer.

Not End-to-End: Explore Multi-Stage Architecture for Online Surgical Phase Recognition

Jul 10, 2021

Abstract: Surgical phase recognition is of particular interest to computer assisted surgery systems, in which the goal is to predict what phase is occurring at each frame for a surgery video. Networks with multi-stage architecture have been widely applied in many computer vision tasks with rich patterns, where a predictor stage first outputs initial predictions and an additional refinement stage operates on the initial predictions to perform further refinement. Existing works show that surgical video contents are well ordered and contain rich temporal patterns, making the multi-stage architecture well suited for the surgical phase recognition task. However, we observe that when simply applying the multi-stage architecture to the surgical phase recognition task, end-to-end training makes the refinement ability fall short of expectations. To address the problem, we propose a new non end-to-end training strategy and explore different designs of multi-stage architecture for the surgical phase recognition task. For the non end-to-end training strategy, the refinement stage is trained separately with two proposed types of disturbed sequences. Meanwhile, we evaluate three different choices of refinement models to show that our analysis and solution are robust to the choice of specific multi-stage model. We conduct experiments on two public benchmarks, the M2CAI16 Workflow Challenge and the Cholec80 dataset. Results show that a multi-stage architecture trained with our strategy largely boosts the performance of the current state-of-the-art single-stage model. Code is available at https://github.com/ChinaYi/casual_tcn.

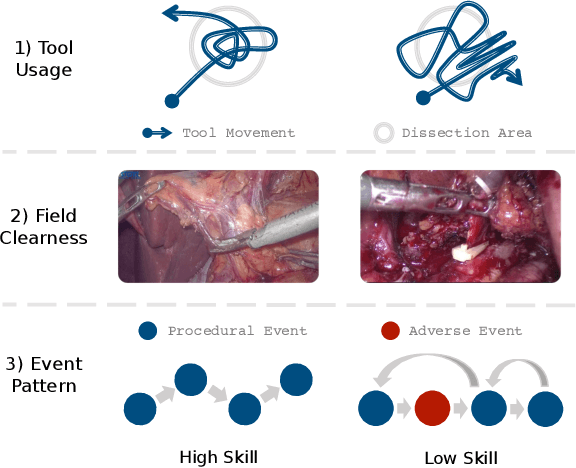

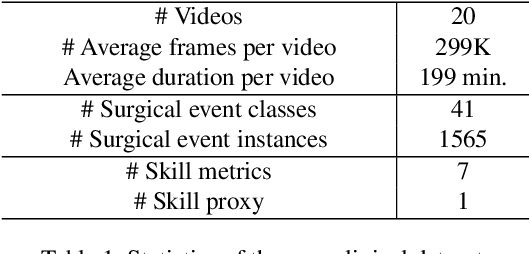

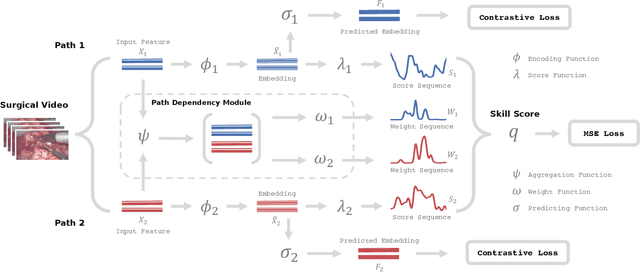

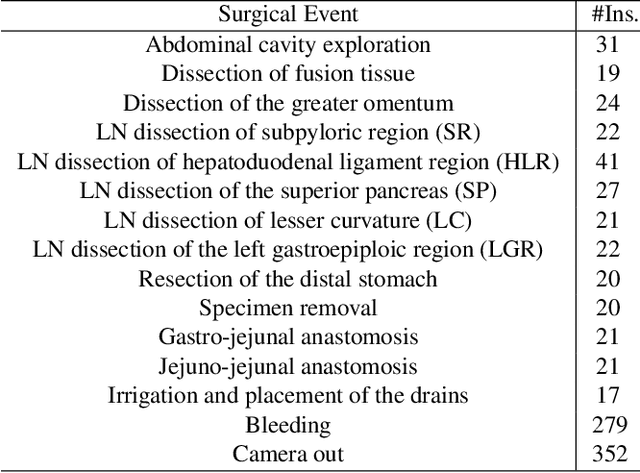

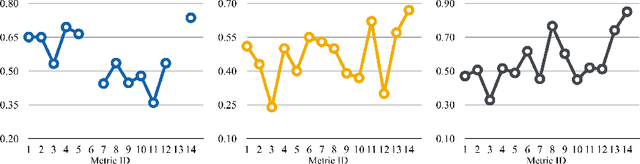

Towards Unified Surgical Skill Assessment

Jun 02, 2021

Abstract: Surgical skills have a great influence on surgical safety and patients' well-being. Traditional assessment of surgical skills involves strenuous manual effort and lacks efficiency and repeatability. Therefore, we attempt to automatically predict how well the surgery is performed using the surgical video. In this paper, a unified multi-path framework for automatic surgical skill assessment is proposed, which takes care of multiple composing aspects of surgical skills, including surgical tool usage, intraoperative event pattern, and other skill proxies. The dependency relationships among these different aspects are specially modeled by a path dependency module in the framework. We conduct extensive experiments on the JIGSAWS dataset of simulated surgical tasks, and a new clinical dataset of real laparoscopic surgeries. The proposed framework achieves promising results on both datasets, with the state-of-the-art on the simulated dataset advanced from 0.71 Spearman's correlation to 0.80. It is also shown that combining multiple skill aspects yields better performance than relying on a single aspect.
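The results above are reported as Spearman's correlation between predicted and annotated skill scores. As a rough illustration of what that metric measures, the following minimal sketch computes it as the Pearson correlation of the rank-transformed scores. The function names and the toy scores are hypothetical and are not taken from the paper or its code.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical predicted and annotated skill scores for four videos.
predicted = [0.2, 0.9, 0.4, 0.7]
annotated = [10, 40, 20, 30]
rho = spearman(predicted, annotated)
```

Because only the ordering matters, any monotonically increasing rescaling of the predictions leaves the value unchanged.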

Unified Quality Assessment of In-the-Wild Videos with Mixed Datasets Training

Nov 15, 2020

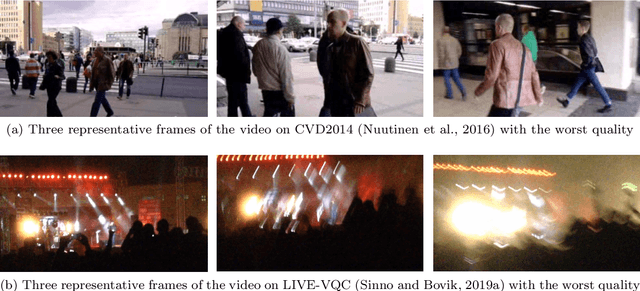

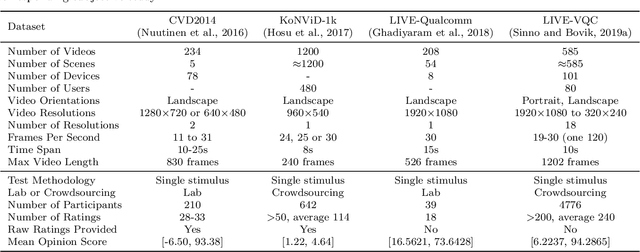

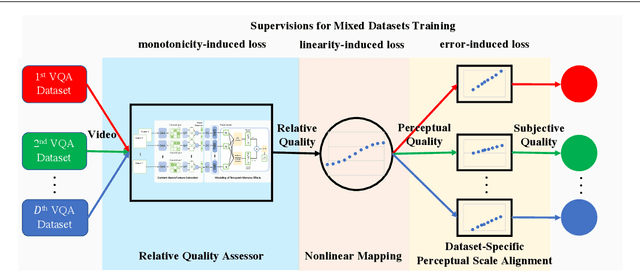

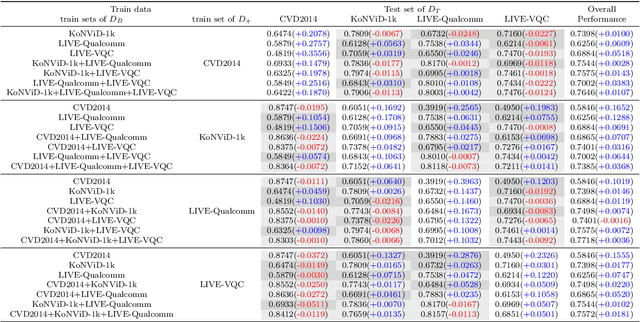

Abstract: Video quality assessment (VQA) is an important problem in computer vision. The videos in computer vision applications are usually captured in the wild. We focus on automatically assessing the quality of in-the-wild videos, which is a challenging problem due to the absence of reference videos, the complexity of distortions, and the diversity of video contents. Moreover, the video contents and distortions among existing datasets are quite different, which leads to poor performance of data-driven methods in the cross-dataset evaluation setting. To improve the performance of quality assessment models, we borrow intuitions from human perception, specifically, content dependency and temporal-memory effects of the human visual system. To face the cross-dataset evaluation challenge, we explore a mixed datasets training strategy for training a single VQA model with multiple datasets. The proposed unified framework explicitly includes three stages: relative quality assessor, nonlinear mapping, and dataset-specific perceptual scale alignment, to jointly predict relative quality, perceptual quality, and subjective quality. Experiments are conducted on four publicly available datasets for VQA in the wild, i.e., LIVE-VQC, LIVE-Qualcomm, KoNViD-1k, and CVD2014. The experimental results verify the effectiveness of the mixed datasets training strategy and demonstrate the superior performance of the unified model in comparison with the state-of-the-art models. For reproducible research, we make the PyTorch implementation of our method available at https://github.com/lidq92/MDTVSFA.

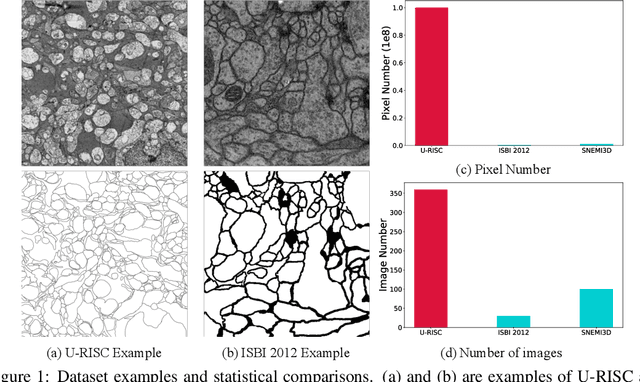

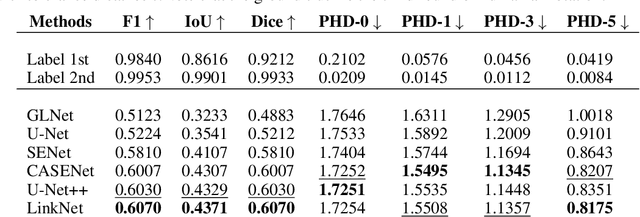

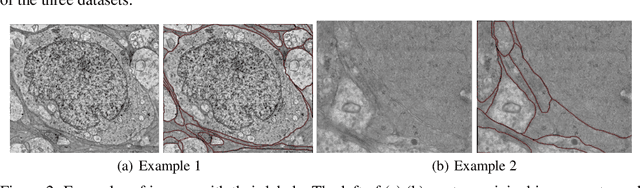

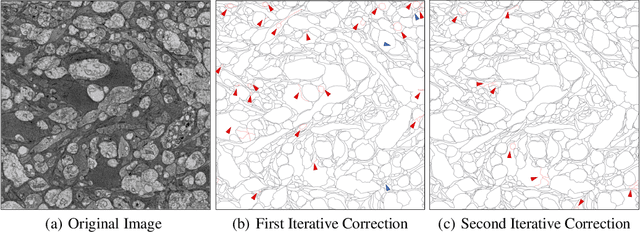

Human Perception-based Evaluation Criterion for Ultra-high Resolution Cell Membrane Segmentation

Oct 16, 2020

Abstract: Computer vision technology is widely used in biological and medical data analysis and understanding. However, there are still two major bottlenecks in the field of cell membrane segmentation, which seriously hinder further research: lack of sufficient high-quality data and lack of suitable evaluation criteria. In order to solve these two problems, this paper first proposes an Ultra-high Resolution Image Segmentation dataset for the Cell membrane, called U-RISC, the largest annotated Electron Microscopy (EM) dataset for the Cell membrane with multiple iterative annotations and uncompressed high-resolution raw data. During the analysis of U-RISC, we found that the currently popular segmentation evaluation criteria are inconsistent with human perception. This interesting phenomenon is confirmed by a subjective experiment involving twenty people. Furthermore, to resolve this inconsistency, we propose a new evaluation criterion called Perceptual Hausdorff Distance (PHD) to measure the quality of cell membrane segmentation results. A detailed performance comparison and discussion of classic segmentation methods, along with two iterative manual annotation results, under existing evaluation criteria and PHD are given.
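The abstract does not define PHD, so the sketch below does not attempt to reproduce it. It only illustrates the classic (symmetric) Hausdorff distance between two point sets, which the name of the proposed criterion builds on. The function names and the toy boundary points are hypothetical.

```python
from math import dist


def directed_hausdorff(a, b):
    """Largest distance from any point in `a` to its nearest point in `b`."""
    return max(min(dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Classic symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Hypothetical boundary pixels of a predicted and a reference membrane.
predicted_boundary = [(0, 0), (1, 0)]
reference_boundary = [(0, 0), (1, 0), (4, 0)]
d = hausdorff(predicted_boundary, reference_boundary)
```

This brute-force version compares every pair of points, so it is only practical for small point sets; it is meant to show the definition, not to serve as an evaluation tool for ultra-high resolution images.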

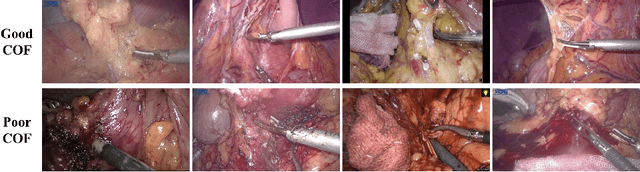

Surgical Skill Assessment on In-Vivo Clinical Data via the Clearness of Operating Field

Aug 27, 2020

Abstract: Surgical skill assessment is important for surgery training and quality control. Prior works on this task largely focus on basic surgical tasks such as suturing and knot tying performed in simulation settings. In contrast, surgical skill assessment is studied in this paper on a real clinical dataset, which consists of fifty-seven in-vivo laparoscopic surgeries and corresponding skill scores annotated by six surgeons. From analyses on this dataset, the clearness of operating field (COF) is identified as a good proxy for overall surgical skills, given its strong correlation with overall skills and high inter-annotator consistency. Then an objective and automated framework based on a neural network is proposed to predict surgical skills through the proxy of COF. The neural network is jointly trained with a supervised regression loss and an unsupervised rank loss. In experiments, the proposed method achieves 0.55 Spearman's correlation with the ground truth of overall technical skill, which is even comparable with the human performance of junior surgeons.

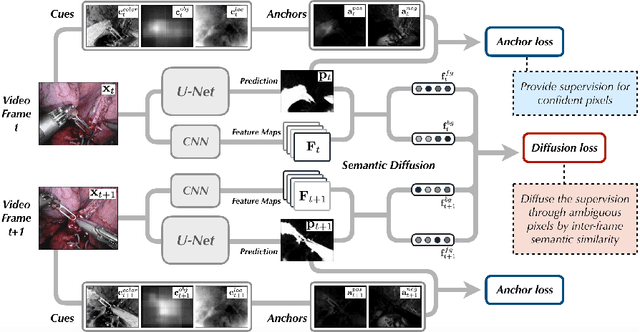

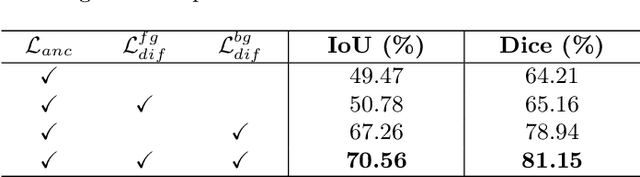

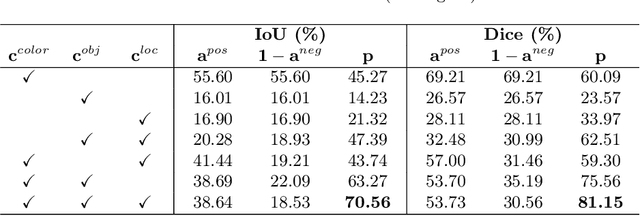

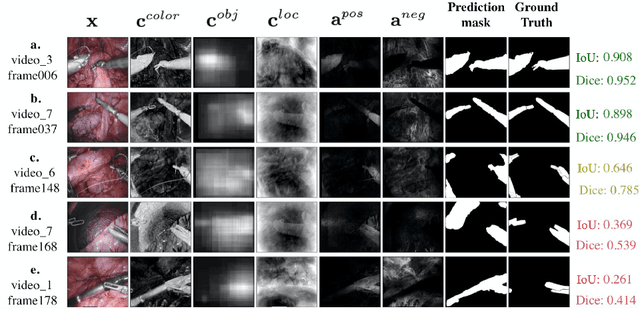

Unsupervised Surgical Instrument Segmentation via Anchor Generation and Semantic Diffusion

Aug 27, 2020

Abstract: Surgical instrument segmentation is a key component in developing context-aware operating rooms. Existing works on this task heavily rely on the supervision of a large amount of labeled data, which involves laborious and expensive human effort. In contrast, a more affordable unsupervised approach is developed in this paper. To train our model, we first generate anchors as pseudo labels for instruments and background tissues respectively by fusing coarse handcrafted cues. Then a semantic diffusion loss is proposed to resolve the ambiguity in the generated anchors via the feature correlation between adjacent video frames. In the experiments on the binary instrument segmentation task of the 2017 MICCAI EndoVis Robotic Instrument Segmentation Challenge dataset, the proposed method achieves 0.71 IoU and 0.81 Dice score without using a single manual annotation, a promising result that shows the potential of unsupervised learning for surgical tool segmentation.
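The two reported metrics, IoU and Dice, both measure the overlap between a predicted and a reference binary mask. The following minimal sketch shows how they are commonly computed for a single pair of masks. The function name, the flat 0/1 mask representation, and the convention for two empty masks are assumptions made for illustration, not details from the paper or the challenge protocol.

```python
def iou_and_dice(pred, target):
    """Return (IoU, Dice) for two equal-length sequences of 0/1 labels."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_area = sum(1 for p in pred if p)
    target_area = sum(1 for t in target if t)
    union = pred_area + target_area - intersection
    if union == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + target_area)
    return iou, dice


# Hypothetical flattened masks: 1 = instrument, 0 = background tissue.
pred_mask = [1, 1, 0, 0]
target_mask = [1, 0, 1, 0]
iou, dice = iou_and_dice(pred_mask, target_mask)
```

For a single pair of masks the two metrics are linked by Dice = 2 * IoU / (1 + IoU); this identity need not hold for scores averaged over many images.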

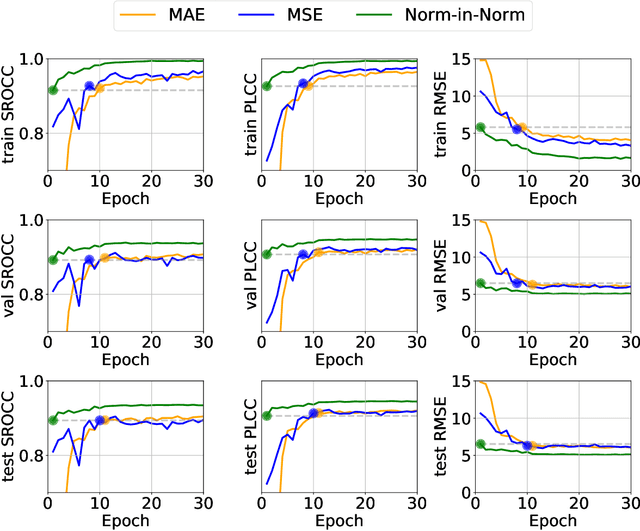

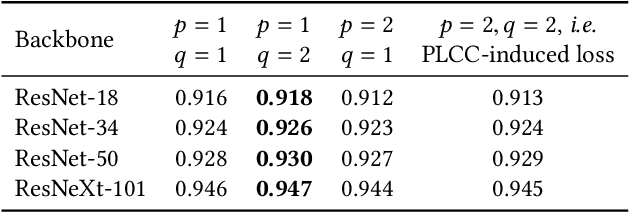

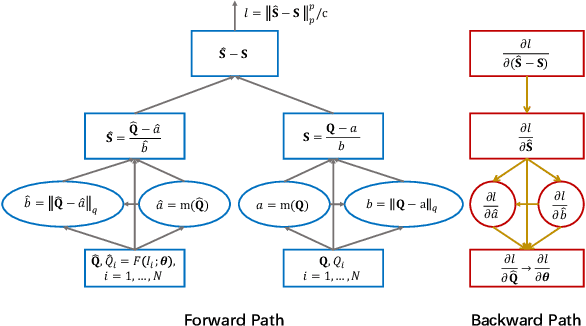

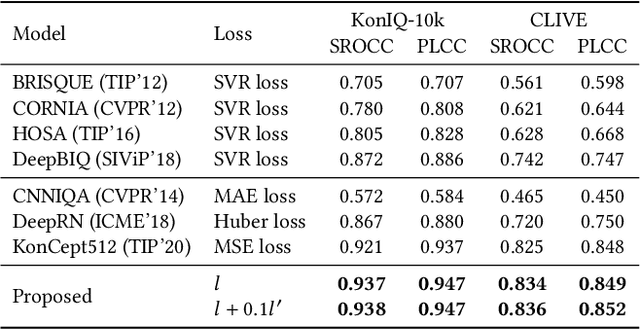

Norm-in-Norm Loss with Faster Convergence and Better Performance for Image Quality Assessment

Aug 10, 2020

Abstract: Currently, most image quality assessment (IQA) models are supervised by the MAE or MSE loss with empirically slow convergence. It is well-known that normalization can facilitate fast convergence. Therefore, we explore normalization in the design of loss functions for IQA. Specifically, we first normalize the predicted quality scores and the corresponding subjective quality scores. Then, the loss is defined based on the norm of the differences between these normalized values. The resulting "Norm-in-Norm" loss encourages the IQA model to make linear predictions with respect to subjective quality scores. After training, least squares regression is applied to determine the linear mapping from the predicted quality to the subjective quality. It is shown that the new loss is closely connected with two common IQA performance criteria (PLCC and RMSE). Through theoretical analysis, it is proved that the embedded normalization makes the gradients of the loss function more stable and more predictable, which is conducive to the faster convergence of the IQA model. Furthermore, to experimentally verify the effectiveness of the proposed loss, it is applied to solve a challenging problem: quality assessment of in-the-wild images. Experiments on two relevant datasets (KonIQ-10k and CLIVE) show that, compared to MAE or MSE loss, the new loss enables the IQA model to converge about 10 times faster and the final model achieves better performance. The proposed model also achieves state-of-the-art prediction performance on this challenging problem. For reproducible scientific research, our code is publicly available at https://github.com/lidq92/LinearityIQA.
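The two steps described above (normalize both score vectors, then take a norm of their difference) can be sketched as follows. This is a simplified illustration, not the authors' implementation: centering by the mean, normalizing by the L2 norm, using the L1 norm for the difference, and omitting any scaling constant are all assumptions, as are the function names and toy scores. The second helper shows the post-training least squares fit of a linear mapping from predictions to subjective scores.

```python
from math import sqrt


def normalize(scores):
    """Center scores by their mean, then divide by the L2 norm (assumed choice)."""
    mean = sum(scores) / len(scores)
    centered = [s - mean for s in scores]
    norm = sqrt(sum(c * c for c in centered))
    if norm == 0:
        raise ValueError("scores must not all be equal")
    return [c / norm for c in centered]


def norm_in_norm_loss(pred, target):
    """L1 norm (assumed choice) of the difference between normalized scores."""
    return sum(abs(p - t) for p, t in zip(normalize(pred), normalize(target)))


def fit_linear_mapping(pred, target):
    """Least squares slope a and intercept b such that target ~ a * pred + b."""
    n = len(pred)
    mean_p, mean_t = sum(pred) / n, sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    a = cov / var_p
    return a, mean_t - a * mean_p


# Hypothetical batch: predictions are linearly related to subjective scores.
pred_scores = [1.0, 2.0, 3.0]
subjective_scores = [10.0, 30.0, 50.0]
loss = norm_in_norm_loss(pred_scores, subjective_scores)
slope, intercept = fit_linear_mapping(pred_scores, subjective_scores)
```

In this sketch the loss is zero whenever the predictions are an increasing linear function of the subjective scores, since centering removes the offset and the norm removes the scale. That is one way to see why such a loss favors linear predictions and why a separate linear mapping is fitted afterwards.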

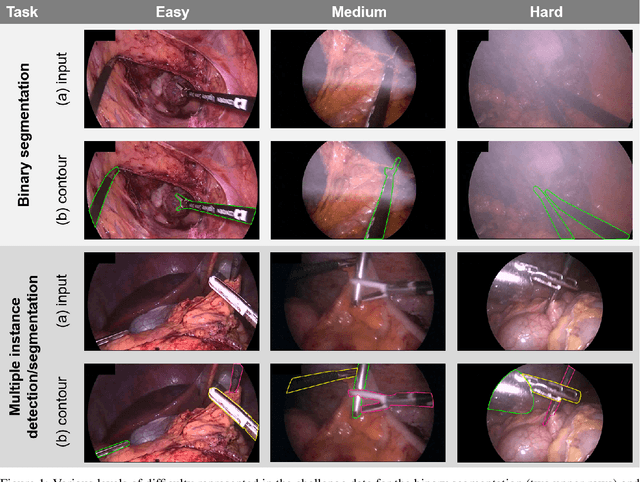

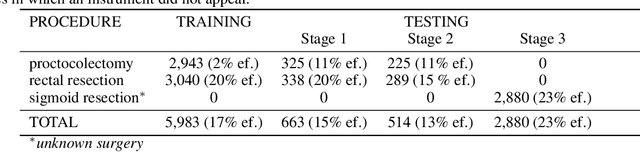

Robust Medical Instrument Segmentation Challenge 2019

Mar 23, 2020

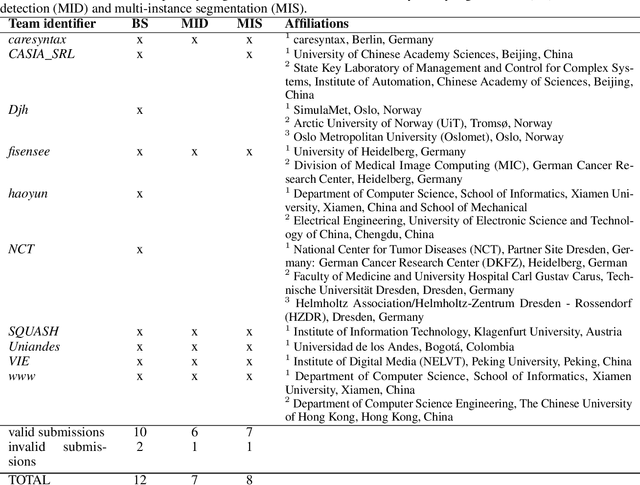

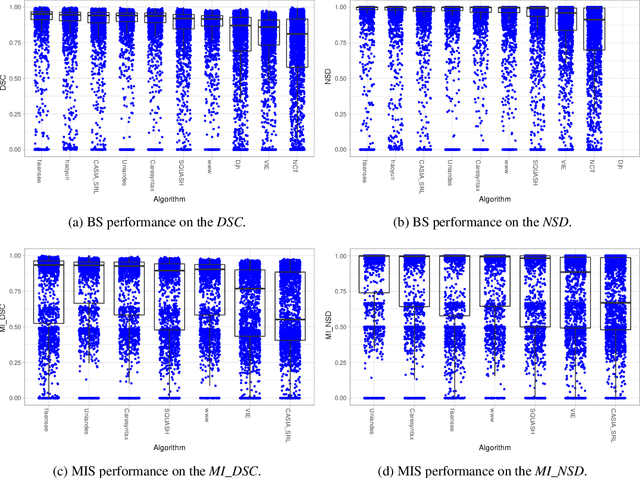

Abstract: Intraoperative tracking of laparoscopic instruments is often a prerequisite for computer and robotic-assisted interventions. While numerous methods for detecting, segmenting and tracking medical instruments based on endoscopic video images have been proposed in the literature, key limitations remain to be addressed: Firstly, robustness, that is, the reliable performance of state-of-the-art methods when run on challenging images (e.g. in the presence of blood, smoke or motion artifacts). Secondly, generalization; algorithms trained for a specific intervention in a specific hospital should generalize to other interventions or institutions. In an effort to promote solutions for these limitations, we organized the Robust Medical Instrument Segmentation (ROBUST-MIS) challenge as an international benchmarking competition with a specific focus on the robustness and generalization capabilities of algorithms. For the first time in the field of endoscopic image processing, our challenge included a task on binary segmentation and also addressed multi-instance detection and segmentation. The challenge was based on a surgical data set comprising 10,040 annotated images acquired from a total of 30 surgical procedures from three different types of surgery. The validation of the competing methods for the three tasks (binary segmentation, multi-instance detection and multi-instance segmentation) was performed in three different stages with an increasing domain gap between the training and the test data. The results confirm the initial hypothesis, namely that algorithm performance degrades with an increasing domain gap. While the average detection and segmentation quality of the best-performing algorithms is high, future research should concentrate on detection and segmentation of small, crossing, moving and transparent instrument(s) (parts).
