Tao Lu

An Unsupervised Attentive-Adversarial Learning Framework for Single Image Deraining

Feb 19, 2022

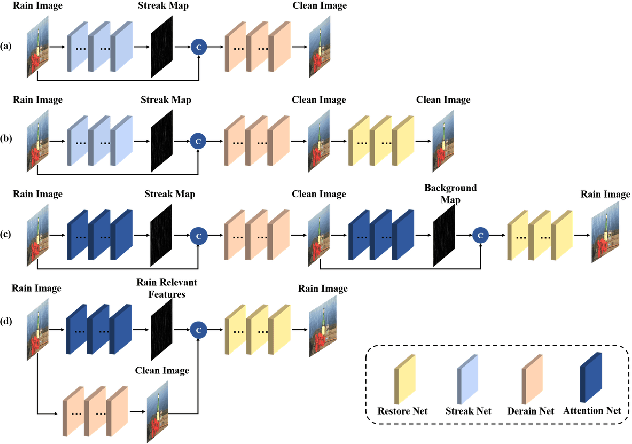

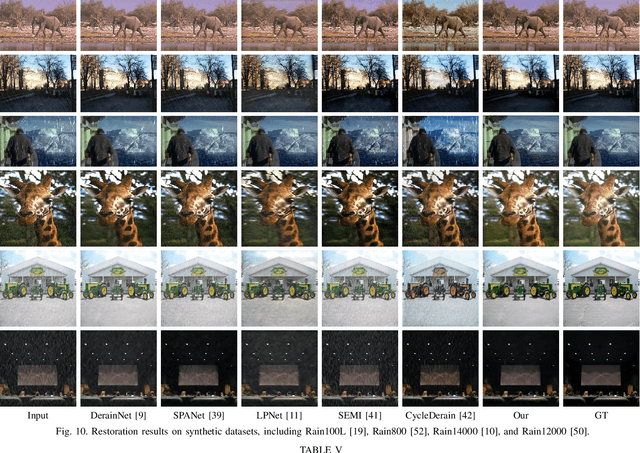

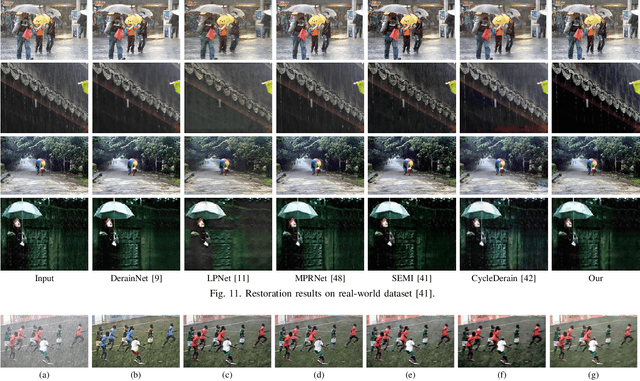

Abstract: Single image deraining has been an important topic in low-level computer vision tasks. The atmospheric veiling effect (which is generated by rain accumulation, similar to fog) usually appears with the rain. Most deep learning-based single image deraining methods focus on rain streak removal and disregard this effect, which leads to low-quality deraining performance. In addition, these methods are trained only on synthetic data, hence they do not take into account real-world rainy images. To address the above issues, we propose a novel unsupervised attentive-adversarial learning framework (UALF) for single image deraining that trains on both synthetic and real rainy images while simultaneously capturing both rain streaks and rain accumulation features. UALF consists of a Rain-fog2Clean (R2C) transformation block and a Clean2Rain-fog (C2R) transformation block. In R2C, to better characterize the rain-fog fusion feature and to achieve high-quality deraining performance, we employ an attention rain-fog feature extraction network (ARFE) to exploit the self-similarity of global and local rain-fog information by learning the spatial feature correlations. Moreover, to improve the transformation ability of C2R, we design a rain-fog feature decoupling and reorganization network (RFDR) by embedding a rainy image degradation model and a mixed discriminator to preserve richer texture details. Extensive experiments on benchmark rain-fog and rain datasets show that UALF outperforms state-of-the-art deraining methods. We also conduct defogging performance evaluation experiments to further demonstrate the effectiveness of UALF.

Holistic Attention-Fusion Adversarial Network for Single Image Defogging

Feb 19, 2022

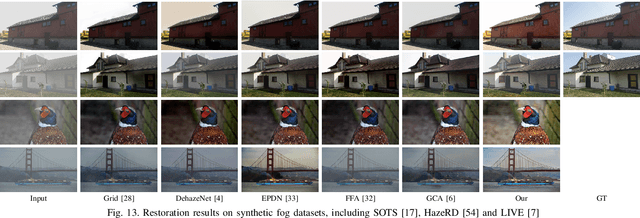

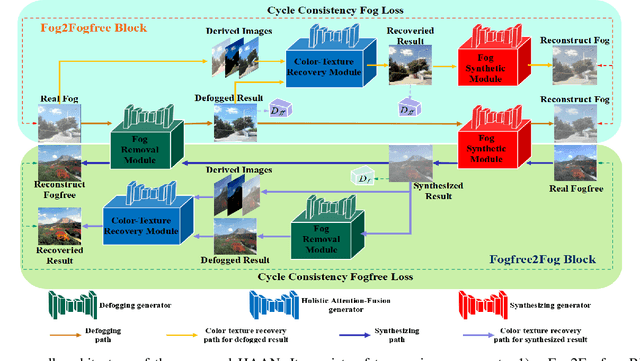

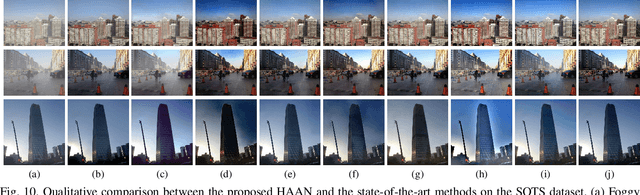

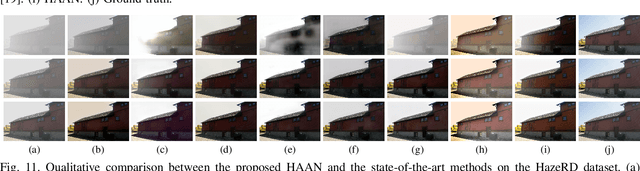

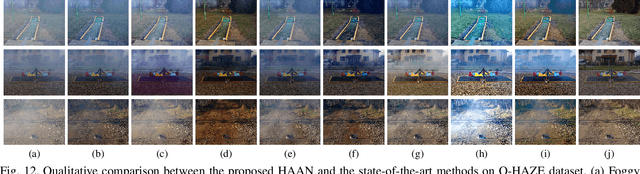

Abstract: Adversarial learning-based image defogging methods have been extensively studied in computer vision due to their remarkable performance. However, most existing methods have limited defogging capabilities for real cases because they are trained on paired clear and synthesized foggy images of the same scenes. In addition, they have limitations in preserving vivid color and rich texture details in defogging. To address these issues, we develop a novel generative adversarial network, called holistic attention-fusion adversarial network (HAAN), for single image defogging. HAAN consists of a Fog2Fogfree block and a Fogfree2Fog block. In each block, there are three learning-based modules, namely, fog removal, color-texture recovery, and fog synthetic, that constrain each other to generate high-quality images. HAAN is designed to exploit the self-similarity of texture and structure information by learning the holistic channel-spatial feature correlations between the foggy image and its several derived images. Moreover, in the fog synthetic module, we utilize the atmospheric scattering model to guide it to improve the generative quality by focusing on an atmospheric light optimization with a novel sky segmentation network. Extensive experiments on both synthetic and real-world datasets show that HAAN outperforms state-of-the-art defogging methods in terms of quantitative accuracy and subjective visual quality.
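The atmospheric scattering model mentioned in the abstract is commonly written as I = J * t + A * (1 - t), where J is the clear image, t the transmission, and A the atmospheric light. The following is a minimal NumPy sketch of that standard formula only, not HAAN's fog synthetic module; the function name `synthesize_fog`, the parameters `airlight` and `beta`, and the depth-based transmission t = exp(-beta * depth) are illustrative assumptions.

```python
import numpy as np

def synthesize_fog(clear, depth, airlight=0.9, beta=1.0):
    """Apply I = J * t + A * (1 - t) with t = exp(-beta * depth).

    clear: (H, W, 3) image in [0, 1]; depth: (H, W) scene depth.
    airlight and beta are hypothetical constants chosen for illustration.
    """
    transmission = np.exp(-beta * depth)[..., None]  # (H, W, 1), broadcast over channels
    return clear * transmission + airlight * (1.0 - transmission)

# Toy input: a uniform dark image with increasing depth per pixel.
clear = np.full((2, 2, 3), 0.2)
depth = np.array([[0.0, 1.0], [2.0, 50.0]])
foggy = synthesize_fog(clear, depth)
```

At zero depth the pixel is unchanged, and at large depth it approaches the atmospheric light, which is why an accurate estimate of A (for example in sky regions) matters for the quality of synthesized fog.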

Passive Indoor Localization with WiFi Fingerprints

Nov 29, 2021

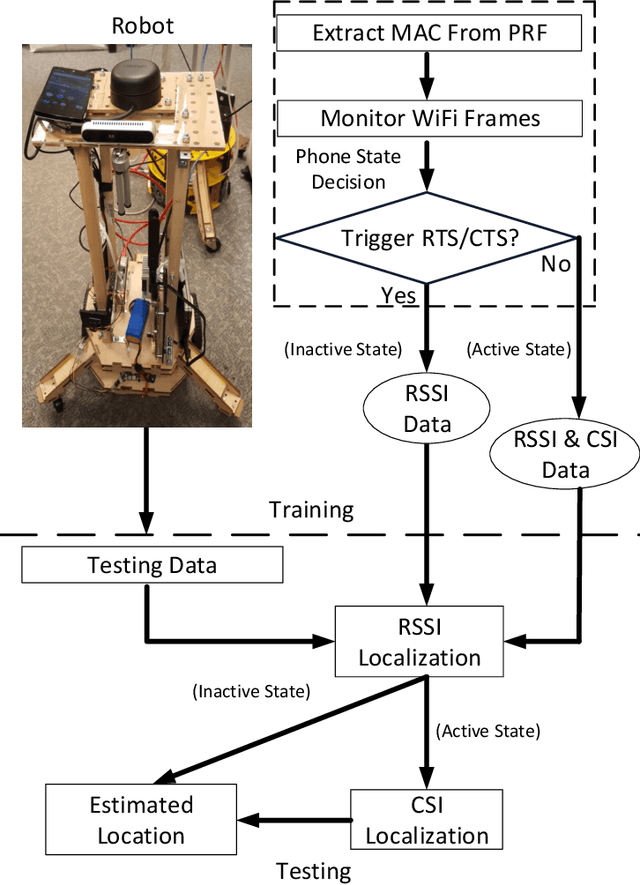

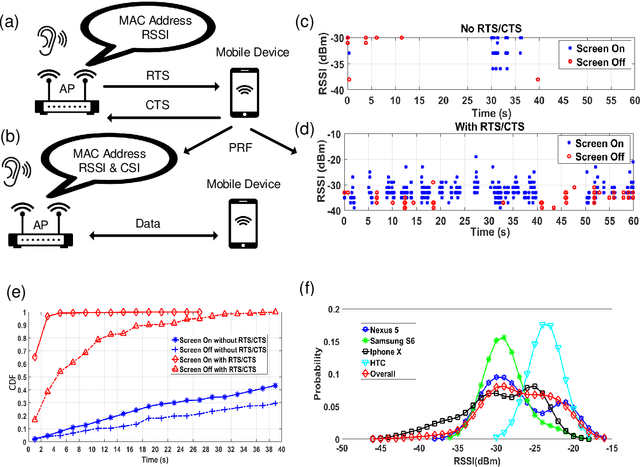

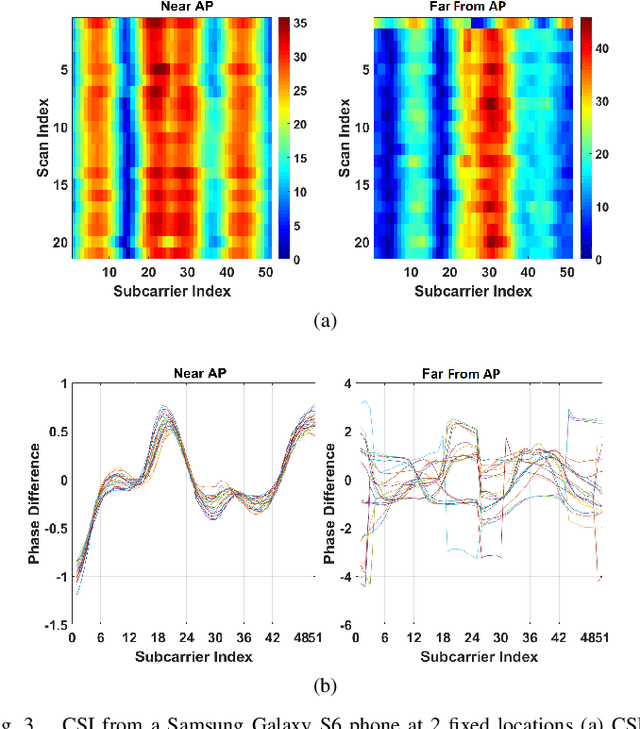

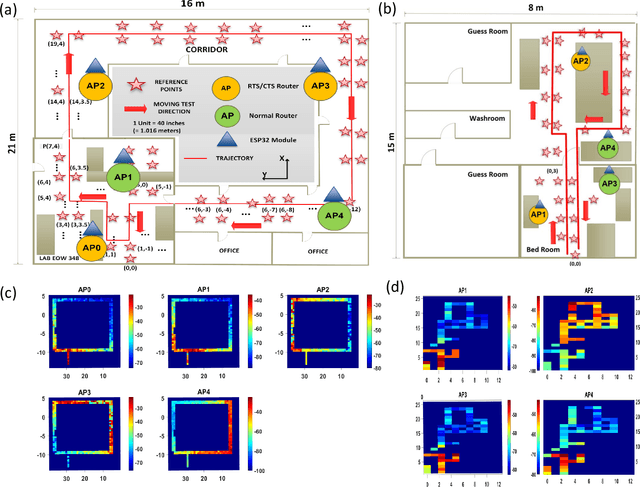

Abstract: This paper proposes passive WiFi indoor localization. Instead of using WiFi signals received by mobile devices as fingerprints, we use signals received by routers to locate the mobile carrier. Consequently, software installation on the mobile device is not required. To resolve the data insufficiency problem, flow control signals such as request to send (RTS) and clear to send (CTS) are utilized. In our model, received signal strength indicator (RSSI) and channel state information (CSI) are used as fingerprints for several algorithms, including deterministic, probabilistic, and neural network localization algorithms. We further investigate the performance of these localization algorithms through extensive on-site experiments with various models of phones at hundreds of testing locations. We demonstrate that our passive scheme achieves an average localization error of 0.8 m when the phone is actively transmitting data frames and 1.5 m when it is not transmitting data frames.
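As an illustration of the deterministic family of fingerprint algorithms, the sketch below uses k-nearest neighbors over RSSI vectors. It is a generic example under stated assumptions, not the paper's method: the function `knn_locate`, the fingerprint database, and all RSSI values and coordinates are hypothetical.

```python
import numpy as np

def knn_locate(fingerprints, positions, observed, k=3):
    """Estimate a position as the mean of the k reference points whose
    stored RSSI fingerprints are closest (Euclidean) to the observation."""
    distances = np.linalg.norm(fingerprints - observed, axis=1)
    nearest = np.argsort(distances)[:k]
    return positions[nearest].mean(axis=0)

# Hypothetical database: RSSI (dBm) seen by three routers at four reference points.
fingerprints = np.array([
    [-40.0, -70.0, -80.0],
    [-45.0, -65.0, -78.0],
    [-70.0, -40.0, -75.0],
    [-80.0, -75.0, -40.0],
])
positions = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 0.0], [5.0, 5.0]])  # meters

observed = np.array([-42.0, -68.0, -79.0])  # new measurement from the routers
estimate = knn_locate(fingerprints, positions, observed, k=2)
```

In a passive setup the observation vector would be assembled from frames captured at the routers rather than from a scan on the phone; the matching step itself is unchanged.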

CamLiFlow: Bidirectional Camera-LiDAR Fusion for Joint Optical Flow and Scene Flow Estimation

Nov 20, 2021

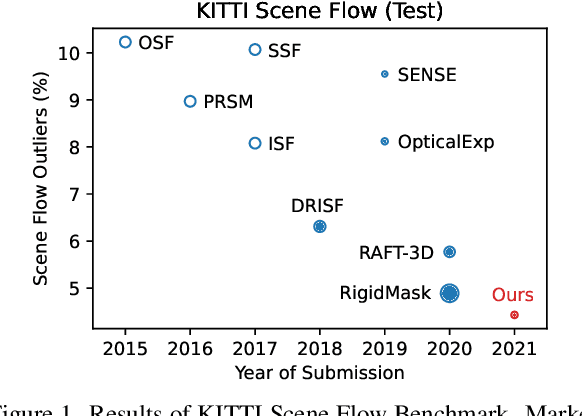

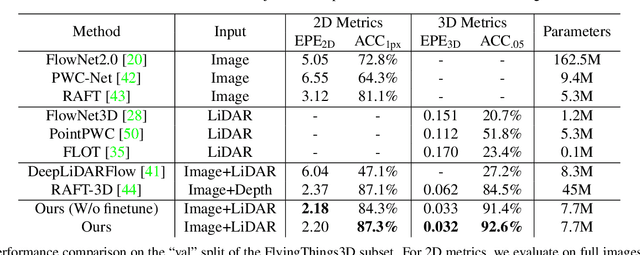

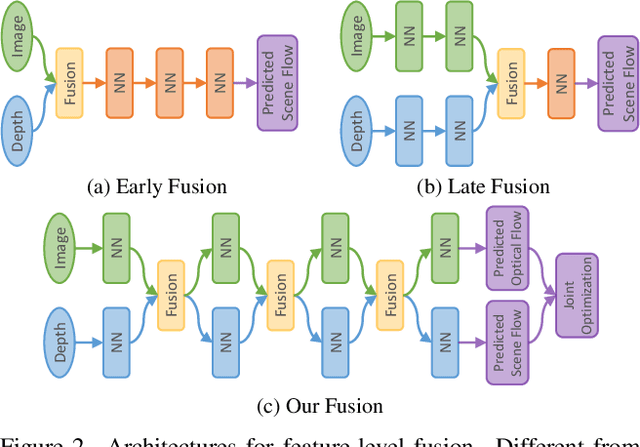

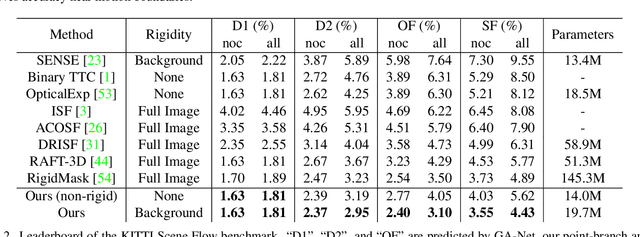

Abstract: In this paper, we study the problem of jointly estimating the optical flow and scene flow from synchronized 2D and 3D data. Previous methods either employ a complex pipeline which splits the joint task into independent stages, or fuse 2D and 3D information in an "early-fusion" or "late-fusion" manner. Such one-size-fits-all approaches suffer from a dilemma of failing to fully utilize the characteristics of each modality or to maximize the inter-modality complementarity. To address the problem, we propose a novel end-to-end framework, called CamLiFlow. It consists of 2D and 3D branches with multiple bidirectional connections between them in specific layers. Different from previous work, we apply a point-based 3D branch to better extract the geometric features and design a symmetric learnable operator to fuse dense image features and sparse point features. We also propose a transformation for point clouds to solve the non-linear issue of 3D-2D projection. Experiments show that CamLiFlow achieves better performance with fewer parameters. Our method ranks 1st on the KITTI Scene Flow benchmark, outperforming the previous art with 1/7 of the parameters. Code will be made available.

TANet: A new Paradigm for Global Face Super-resolution via Transformer-CNN Aggregation Network

Sep 16, 2021

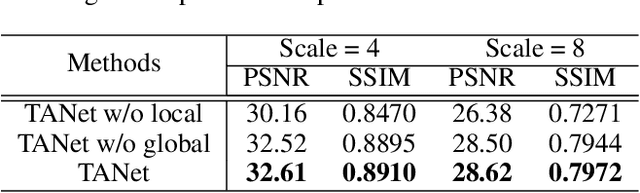

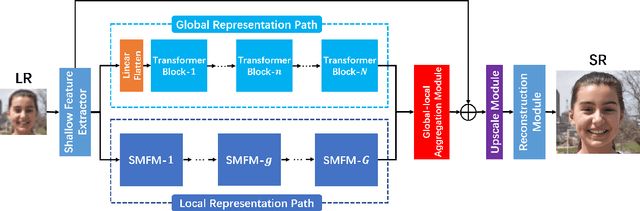

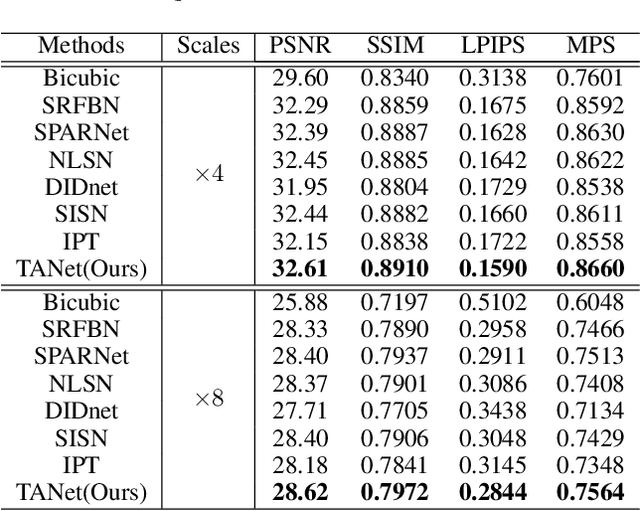

Abstract: Recently, face super-resolution (FSR) methods either feed the whole face image into convolutional neural networks (CNNs) or utilize extra facial priors (e.g., facial parsing maps, facial landmarks) to focus on facial structure, thereby maintaining the consistency of the facial structure while restoring facial details. However, the limited receptive fields of CNNs and inaccurate facial priors reduce the naturalness and fidelity of the reconstructed face. In this paper, we propose a novel paradigm based on the self-attention mechanism (i.e., the core of the Transformer) to fully explore the representation capacity of the facial structure feature. Specifically, we design a Transformer-CNN aggregation network (TANet) consisting of two paths, in which one path uses CNNs responsible for restoring fine-grained facial details while the other utilizes a resource-friendly Transformer to capture global information by exploiting long-distance visual relation modeling. By aggregating the features from the above two paths, the consistency of global facial structure and fidelity of local facial detail restoration are strengthened simultaneously. Experimental results of face reconstruction and recognition verify that the proposed method can significantly outperform the state-of-the-art methods.
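To make the contrast with a CNN's limited receptive field concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention, in which every token (for example, an image patch) attends to every other token. It is the generic mechanism, not TANet's Transformer path; the function name, token count, feature width, and random projection matrices are assumptions for illustration.

```python
import numpy as np

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])       # pairwise similarity, all-to-all
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(16, 8))  # 16 hypothetical patch tokens, 8 features each
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = self_attention(tokens, w_q, w_k, w_v)
```

Each row of `weights` is a distribution over all 16 tokens, so a single layer can relate distant face regions, whereas a convolution only mixes pixels inside its kernel window.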

GLSD: The Global Large-Scale Ship Database and Baseline Evaluations

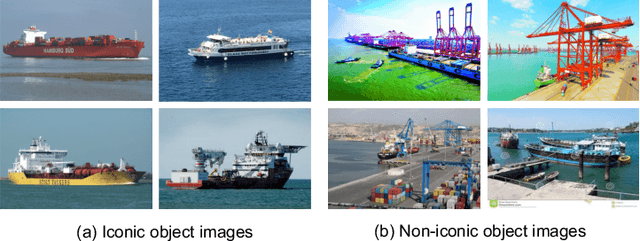

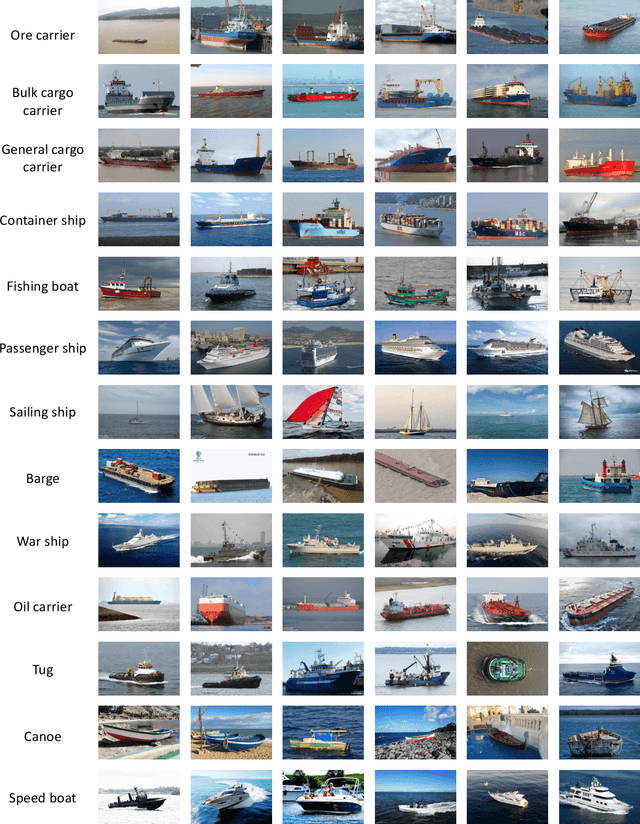

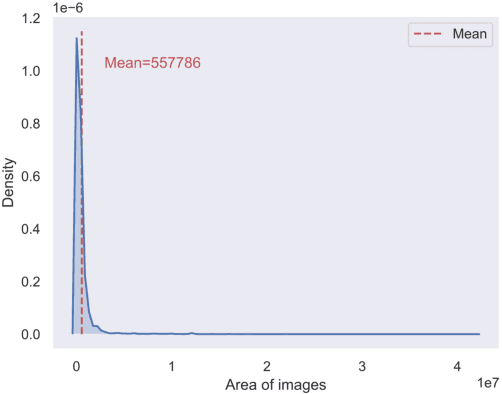

Jun 05, 2021

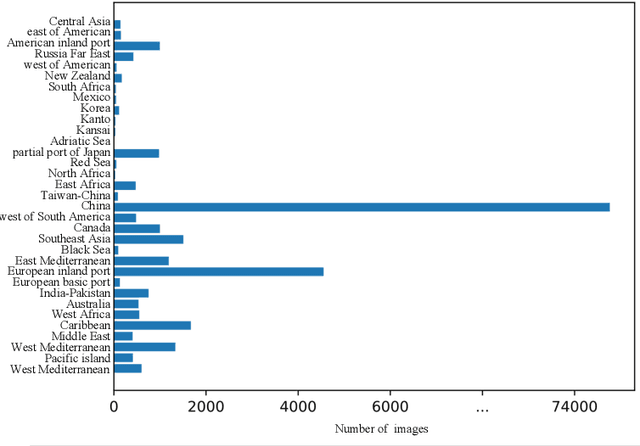

Abstract: In this paper, we introduce a challenging global large-scale ship database (called GLSD), designed specifically for ship detection tasks. The designed GLSD database includes a total of 140,616 annotated instances from 100,729 images. Based on the collected images, we propose 13 categories that widely exist in international routes. These categories include sailing boat, fishing boat, passenger ship, war ship, general cargo ship, container ship, bulk cargo carrier, barge, ore carrier, speed boat, canoe, oil carrier, and tug. The motivations for developing GLSD include the following: 1) providing a refined ship detection database; 2) providing ship detection researchers worldwide with exhaustive label information (bounding box and ship class label) in one uniform global database; and 3) providing a large-scale ship database with geographic information (port and country information) that benefits multi-modal analysis. In addition, we discuss the evaluation protocols given image characteristics in GLSD and analyze the performance of selected state-of-the-art object detection algorithms on GLSD, providing baselines for future studies. More information regarding the designed GLSD can be found at https://github.com/jiaming-wang/GLSD.

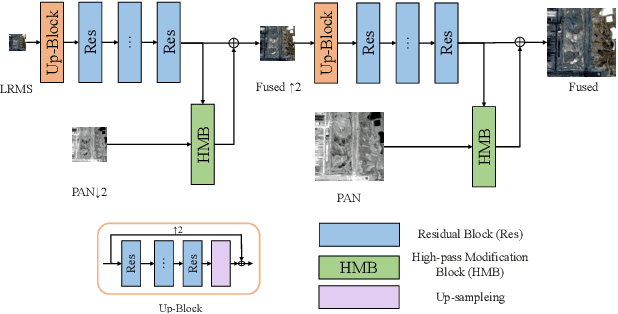

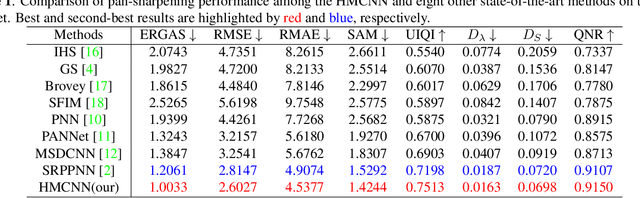

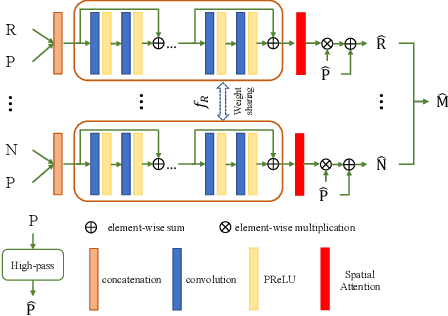

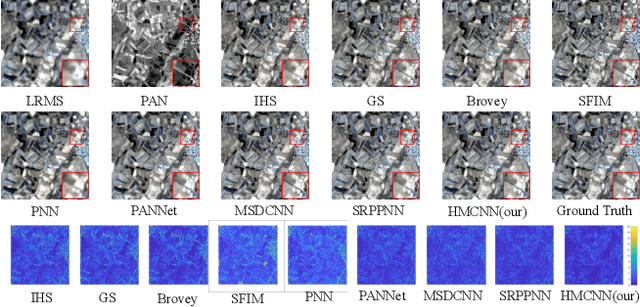

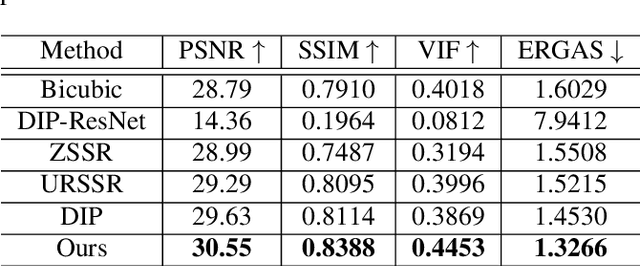

Pan-sharpening via High-pass Modification Convolutional Neural Network

May 24, 2021

Abstract: Most existing deep learning-based pan-sharpening methods have several widely recognized issues, such as spectral distortion and insufficient spatial texture enhancement. To address these issues, we propose a novel pan-sharpening convolutional neural network based on a high-pass modification block. Different from existing methods, the proposed block is designed to learn the high-pass information, thereby enhancing spatial information in each band of the multi-spectral-resolution images. To facilitate the generation of visually appealing pan-sharpened images, we propose a perceptual loss function and further optimize the model based on high-level features in the near-infrared space. Experiments demonstrate the superior performance of the proposed method compared to the state-of-the-art pan-sharpening methods, both quantitatively and qualitatively. The proposed model is open-sourced at https://github.com/jiaming-wang/HMB.
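For readers unfamiliar with the term, "high-pass information" is the detail that remains after the smooth, low-frequency content of an image is removed. The sketch below computes it with a fixed box blur; this is a classical stand-in for illustration, whereas the block in the paper learns the high-pass information. The helper names `box_blur` and `high_pass` and the toy inputs are assumptions.

```python
import numpy as np

def box_blur(image, radius=1):
    """Mean filter with edge replication; a simple low-pass filter."""
    size = 2 * radius + 1
    padded = np.pad(image, radius, mode="edge")
    out = np.zeros_like(image, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (size * size)

def high_pass(image, radius=1):
    """High-pass component = image minus its low-pass (blurred) version."""
    return image - box_blur(image, radius)

flat = np.full((4, 4), 0.5)  # no texture: high-pass response is zero
step = np.zeros((4, 4))
step[:, 2:] = 1.0            # vertical edge between columns 1 and 2
edge_response = high_pass(step)
```

The response is zero in flat regions and nonzero only around the edge, which is the kind of spatial detail a pan-sharpening method would inject from the panchromatic image into each multi-spectral band.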

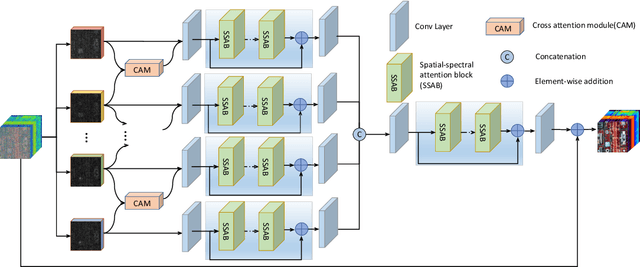

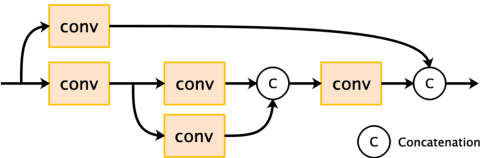

SSCAN: A Spatial-spectral Cross Attention Network for Hyperspectral Image Denoising

May 23, 2021

Abstract: Hyperspectral images (HSIs) have been widely used in a variety of applications thanks to the rich spectral information they are able to provide. Among all HSI processing tasks, HSI denoising is a crucial step. Recently, deep learning-based image denoising methods have made great progress and achieved great performance. However, existing methods tend to ignore the correlations between adjacent spectral bands, leading to problems such as spectral distortion and blurred edges in denoised results. In this study, we propose a novel HSI denoising network, termed SSCAN, that combines group convolutions and attention modules. Specifically, we use a group convolution with a spatial attention module to facilitate feature extraction by directing models' attention to band-wise important features. We propose a spectral-spatial attention block (SSAB) to exploit the spatial and spectral information in hyperspectral images in an effective manner. In addition, we adopt residual learning operations with skip connections to ensure training stability. The experimental results indicate that the proposed SSCAN outperforms several state-of-the-art HSI denoising algorithms.

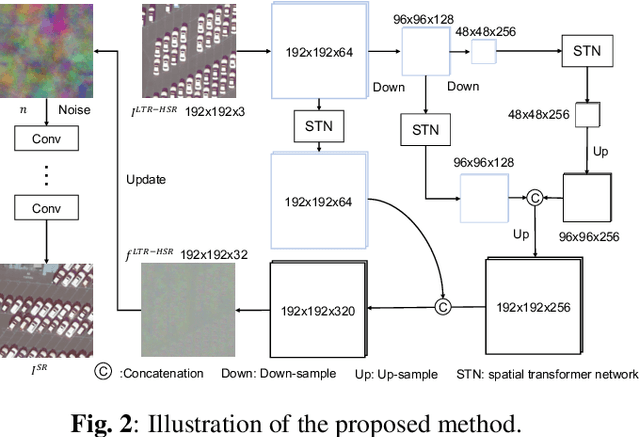

Unsupervised Remote Sensing Super-Resolution via Migration Image Prior

May 23, 2021

Abstract: Recently, satellites with high temporal resolution have attracted wide attention in various practical applications. Due to limitations of bandwidth and hardware cost, however, the spatial resolution of such satellites is considerably low, largely limiting their potential in scenarios that require spatially explicit information. To improve image resolution, numerous approaches based on training with low/high resolution pairs have been proposed to address the super-resolution (SR) task. Despite their success, however, low/high spatial resolution pairs are usually difficult to obtain for satellites with a high temporal resolution, making such SR approaches impractical to use. In this paper, we propose a new unsupervised learning framework, called "MIP", which achieves SR tasks without low/high resolution image pairs. First, random noise maps are fed into a designed generative adversarial network (GAN) for reconstruction. Then, the proposed method converts the reference image to latent space as the migration image prior. Finally, we update the input noise via an implicit method, and further transfer the texture and structured information from the reference image. Extensive experimental results on the Draper dataset show that MIP achieves significant improvements over state-of-the-art methods both quantitatively and qualitatively. The proposed MIP is open-sourced at http://github.com/jiaming-wang/MIP.

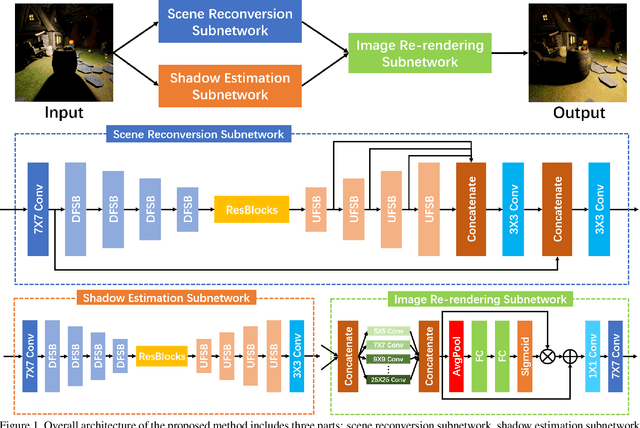

Multi-scale Self-calibrated Network for Image Light Source Transfer

Apr 18, 2021

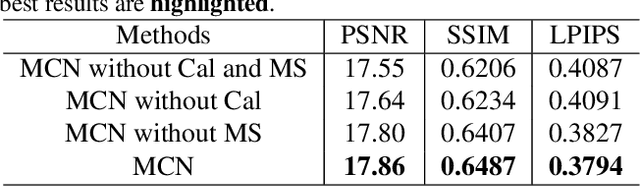

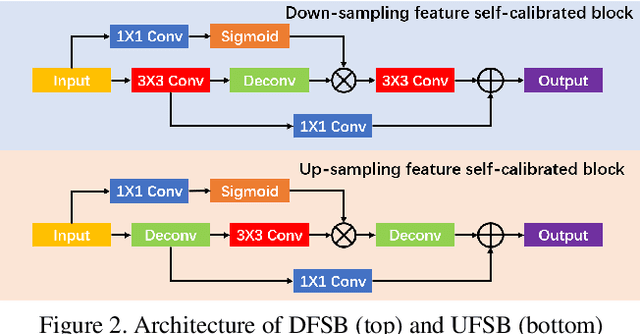

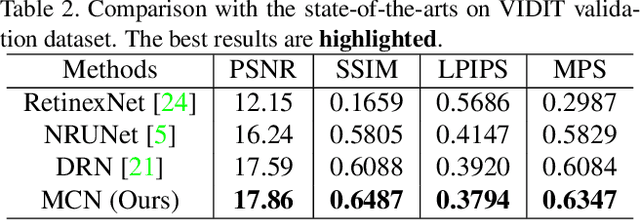

Abstract: Image light source transfer (LLST), as the most challenging task in the domain of image relighting, has attracted extensive attention in recent years. In the latest research, LLST is decomposed into three sub-tasks: scene reconversion, shadow estimation, and image re-rendering, which provides a new paradigm for image relighting. However, many problems in the scene reconversion and shadow estimation tasks, including uncalibrated feature information and poor semantic information, are still unresolved, thereby resulting in insufficient feature representation. In this paper, we propose a novel down-sampling feature self-calibrated block (DFSB) and an up-sampling feature self-calibrated block (UFSB) as the basic blocks of the feature encoder and decoder to calibrate feature representation iteratively, because LLST is similar to the recalibration of an image light source. In addition, we fuse the multi-scale features of the decoder in the scene reconversion task to further explore and exploit more semantic information, thereby providing a more accurate primary scene structure for image re-rendering. Experimental results on the VIDIT dataset show that the proposed approach significantly improves the performance of LLST.
