Suranga Seneviratne

The University of Sydney, Australia

Eliciting Least-to-Most Reasoning for Phishing URL Detection

Jan 28, 2026

Abstract:Phishing continues to be one of the most prevalent attack vectors, making accurate classification of phishing URLs essential. Recently, large language models (LLMs) have demonstrated promising results in phishing URL detection. However, the reasoning capabilities that enable such performance remain underexplored. To this end, in this paper, we propose a Least-to-Most prompting framework for phishing URL detection. In particular, we introduce an "answer sensitivity" mechanism that guides Least-to-Most's iterative approach to enhance reasoning and yield higher prediction accuracy. We evaluate our framework using three URL datasets and four state-of-the-art LLMs, comparing against a one-shot approach and a supervised model. We demonstrate that our framework outperforms the one-shot baseline while achieving performance comparable to that of the supervised model, despite requiring significantly less training data. Furthermore, our in-depth analysis highlights how the iterative reasoning enabled by Least-to-Most, and reinforced by our answer sensitivity mechanism, drives these performance gains. Overall, we show that this simple yet powerful prompting strategy consistently outperforms both one-shot and supervised approaches, despite requiring minimal training or few-shot guidance. Our experimental setup can be found in our GitHub repository github.sydney.edu.au/htri0928/least-to-most-phishing-detection.

Personalizing Federated Learning for Hierarchical Edge Networks with Non-IID Data

Apr 11, 2025

Abstract:Accommodating edge networks between IoT devices and the cloud server in Hierarchical Federated Learning (HFL) enhances communication efficiency without compromising data privacy. However, devices connected to the same edge often share geographic or contextual similarities, leading to varying edge-level data heterogeneity with different subsets of labels per edge, on top of device-level heterogeneity. This hierarchical non-Independent and Identically Distributed (non-IID) nature, which implies that each edge has its own optimization goal, has been overlooked in HFL research. Therefore, existing edge-accommodated HFL demonstrates inconsistent performance across edges in various hierarchical non-IID scenarios. To ensure robust performance with diverse edge-level non-IID data, we propose Personalized Hierarchical Edge-enabled Federated Learning (PHE-FL), which personalizes each edge model to perform well on the unique class distributions specific to each edge. We evaluated PHE-FL across 4 scenarios with varying levels of edge-level non-IIDness, combined with extreme non-IIDness at the IoT device level. To accurately assess the effectiveness of our personalization approach, we deployed test sets on each edge server instead of the cloud server, and used both balanced and imbalanced test sets. Extensive experiments show that PHE-FL achieves up to 83 percent higher accuracy compared to existing federated learning approaches that incorporate edge networks, given the same number of training rounds. Moreover, PHE-FL exhibits improved stability, as evidenced by reduced accuracy fluctuations relative to the state-of-the-art FedAvg with two-level (edge and cloud) aggregation.

A Framework to Assess Multilingual Vulnerabilities of LLMs

Mar 17, 2025

Abstract:Large Language Models (LLMs) are acquiring a wider range of capabilities, including understanding and responding in multiple languages. While they undergo safety training to prevent them from answering illegal questions, imbalances in training data and human evaluation resources can make these models more susceptible to attacks in low-resource languages (LRL). This paper proposes a framework to automatically assess the multilingual vulnerabilities of commonly used LLMs. Using our framework, we evaluated six LLMs across eight languages representing varying levels of resource availability. We validated the assessments generated by our automated framework through human evaluation in two languages, demonstrating that the framework's results align with human judgments in most cases. Our findings reveal vulnerabilities in LRL; however, these may pose minimal risk as they often stem from the model's poor performance, resulting in incoherent responses.

Federated Koopman-Reservoir Learning for Large-Scale Multivariate Time-Series Anomaly Detection

Mar 14, 2025

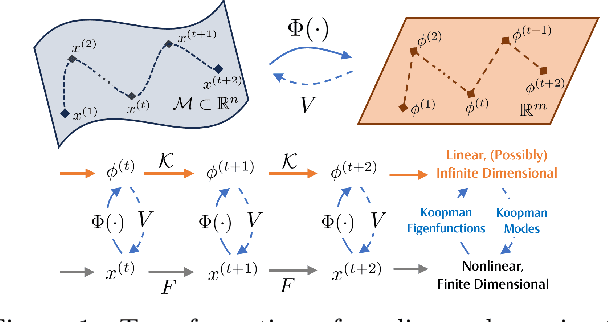

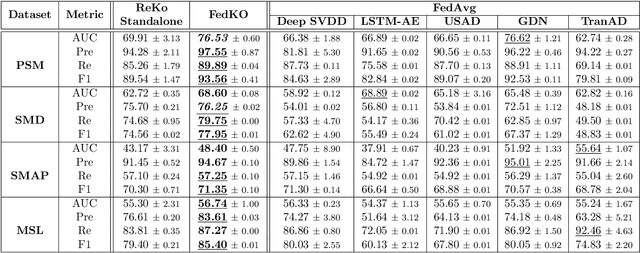

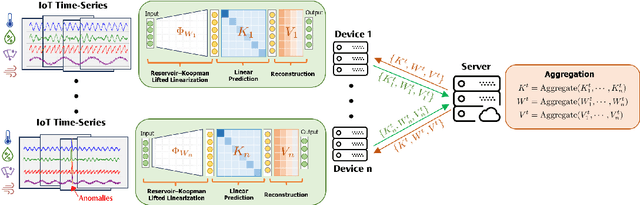

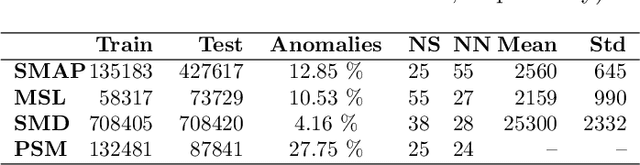

Abstract:The proliferation of edge devices has dramatically increased the generation of multivariate time-series (MVTS) data, essential for applications from healthcare to smart cities. Such data streams, however, are vulnerable to anomalies that signal crucial problems like system failures or security incidents. Traditional MVTS anomaly detection methods, encompassing statistical and centralized machine learning approaches, struggle with the heterogeneity, variability, and privacy concerns of large-scale, distributed environments. In response, we introduce FedKO, a novel unsupervised Federated Learning framework that leverages the linear predictive capabilities of Koopman operator theory along with the dynamic adaptability of Reservoir Computing. This enables effective spatiotemporal processing and privacy preservation for MVTS data. FedKO is formulated as a bi-level optimization problem, utilizing a specific federated algorithm to explore a shared Reservoir-Koopman model across diverse datasets. Such a model is then deployable on edge devices for efficient detection of anomalies in local MVTS streams. Experimental results across various datasets showcase FedKO's superior performance against state-of-the-art methods in MVTS anomaly detection. Moreover, FedKO reduces communication size by up to 8x and memory usage by up to 2x, making it highly suitable for large-scale systems.

An Empirical Study of Code Obfuscation Practices in the Google Play Store

Feb 07, 2025

Abstract:The Android ecosystem is vulnerable to issues such as app repackaging, counterfeiting, and piracy, threatening both developers and users. To mitigate these risks, developers often employ code obfuscation techniques. However, while effective in protecting legitimate applications, obfuscation also hinders security investigations as it is often exploited for malicious purposes. As such, it is important to understand code obfuscation practices in Android apps. In this paper, we analyze over 500,000 Android APKs from Google Play, spanning an eight-year period, to investigate the evolution and prevalence of code obfuscation techniques. First, we propose a set of classifiers to detect obfuscated code, tools, and techniques and then conduct a longitudinal analysis to identify trends. Our results show a 13% increase in obfuscation from 2016 to 2023, with ProGuard and Allatori as the most commonly used tools. We also show that obfuscation is more prevalent in top-ranked apps and gaming genres such as Casino apps. To our knowledge, this is the first large-scale study of obfuscation adoption in the Google Play Store, providing insights for developers and security analysts.

Entailment-Driven Privacy Policy Classification with LLMs

Sep 25, 2024

Abstract:While many online services provide privacy policies for end users to read and understand what personal data are being collected, these documents are often lengthy and complicated. As a result, the vast majority of users do not read them at all, leading to data collection under uninformed consent. Several attempts have been made to make privacy policies more user friendly by summarising them, providing automatic annotations or labels for key sections, or by offering chat interfaces to ask specific questions. With recent advances in Large Language Models (LLMs), there is an opportunity to develop more effective tools to parse privacy policies and help users make informed decisions. In this paper, we propose an entailment-driven LLM-based framework to classify paragraphs of privacy policies into meaningful labels that are easily understood by users. The results demonstrate that our framework outperforms traditional LLM methods, improving the F1 score by 11.2% on average. Additionally, our framework provides inherently explainable and meaningful predictions.

LLMs are One-Shot URL Classifiers and Explainers

Sep 22, 2024

Abstract:Malicious URL classification represents a crucial aspect of cyber security. Although existing work comprises numerous machine learning and deep learning-based URL classification models, most suffer from generalisation and domain-adaptation issues arising from the lack of representative training datasets. Furthermore, these models fail to provide explanations for a given URL classification in natural human language. In this work, we investigate and demonstrate the use of Large Language Models (LLMs) to address these issues. Specifically, we propose an LLM-based one-shot learning framework that uses Chain-of-Thought (CoT) reasoning to predict whether a given URL is benign or phishing. We evaluate our framework using three URL datasets and five state-of-the-art LLMs and show that one-shot LLM prompting indeed provides performance close to that of supervised models, with GPT 4-Turbo being the best model, followed by Claude 3 Opus. We conduct a quantitative analysis of the LLM explanations and show that most of the explanations provided by LLMs align with the post-hoc explanations of the supervised classifiers, and the explanations have high readability, coherency, and informativeness.

Federated PCA on Grassmann Manifold for IoT Anomaly Detection

Jul 10, 2024

Abstract:With the proliferation of the Internet of Things (IoT) and the rising interconnectedness of devices, network security faces significant challenges, especially from anomalous activities. While traditional machine learning-based intrusion detection systems (ML-IDS) effectively employ supervised learning methods, they possess limitations such as the requirement for labeled data and challenges with high dimensionality. Recent unsupervised ML-IDS approaches such as AutoEncoders and Generative Adversarial Networks (GAN) offer alternative solutions but pose challenges in deployment onto resource-constrained IoT devices and in interpretability. To address these concerns, this paper proposes a novel federated unsupervised anomaly detection framework, FedPCA, that leverages Principal Component Analysis (PCA) and the Alternating Direction Method of Multipliers (ADMM) to learn common representations of distributed non-i.i.d. datasets. Building on the FedPCA framework, we propose two algorithms, FEDPE in Euclidean space and FEDPG on Grassmann manifolds. Our approach enables real-time threat detection and mitigation at the device level, enhancing network resilience while ensuring privacy. Moreover, the proposed algorithms are accompanied by theoretical convergence rates even under a subsampling scheme, a novel result. Experimental results on the UNSW-NB15 and TON-IoT datasets show that our proposed methods offer performance in anomaly detection comparable to nonlinear baselines, while providing significant improvements in communication and memory efficiency, underscoring their potential for securing IoT networks.

* Accepted for publication at IEEE/ACM Transactions on Networking

A Survey of Deep Long-Tail Classification Advancements

Apr 24, 2024

Abstract:Many data distributions in the real world are hardly uniform. Instead, skewed and long-tailed distributions of various kinds are commonly observed. This poses an interesting problem for machine learning, where most algorithms assume or work well with uniformly distributed data. The problem is further exacerbated by current state-of-the-art deep learning models requiring large volumes of training data. As such, learning from imbalanced data remains a challenging research problem and a problem that must be solved as we move towards more real-world applications of deep learning. In the context of class imbalance, state-of-the-art (SOTA) accuracies on standard benchmark datasets for classification typically fall below 75%, even for less challenging datasets such as CIFAR100. Nonetheless, there has been progress in this niche area of deep learning. To this end, in this survey, we provide a taxonomy of various methods proposed for addressing the problem of long-tail classification, focusing on works from the last few years and presenting them under a single mathematical framework. We also discuss standard performance metrics, convergence studies, feature distribution and classifier analysis. We also provide a quantitative comparison of the performance of different SOTA methods and conclude the survey by discussing the remaining challenges and future research directions.

Out-of-Distribution Data: An Acquaintance of Adversarial Examples -- A Survey

Apr 08, 2024

Abstract:Deep neural networks (DNNs) deployed in real-world applications can encounter out-of-distribution (OOD) data and adversarial examples. These represent distinct forms of distributional shifts that can significantly impact DNNs' reliability and robustness. Traditionally, research has addressed OOD detection and adversarial robustness as separate challenges. This survey focuses on the intersection of these two areas, examining how the research community has investigated them together. Consequently, we identify two key research directions: robust OOD detection and unified robustness. Robust OOD detection aims to differentiate between in-distribution (ID) data and OOD data, even when they are adversarially manipulated to deceive the OOD detector. Unified robustness seeks a single approach to make DNNs robust against both adversarial attacks and OOD inputs. Accordingly, first, we establish a taxonomy based on the concept of distributional shifts. This framework clarifies how robust OOD detection and unified robustness relate to other research areas addressing distributional shifts, such as OOD detection, open set recognition, and anomaly detection. Subsequently, we review existing work on robust OOD detection and unified robustness. Finally, we highlight the limitations of the existing work and propose promising research directions that explore adversarial and OOD inputs within a unified framework.
