Sonse Shimaoka

NeurIPS 2020 EfficientQA Competition: Systems, Analyses and Lessons Learned

Jan 01, 2021

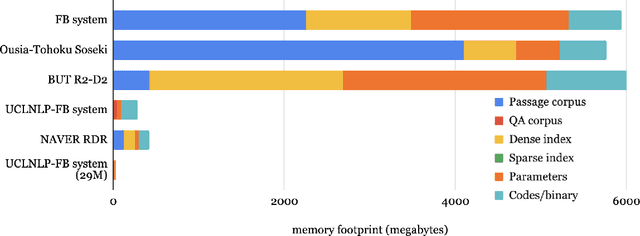

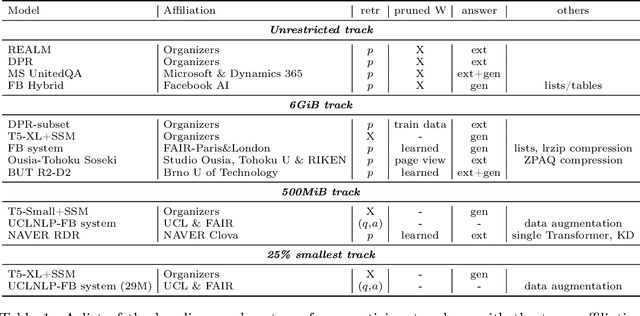

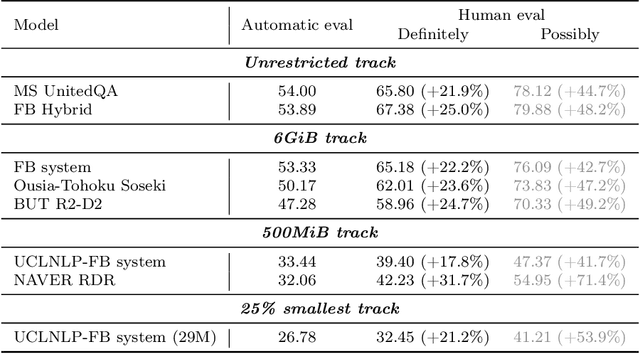

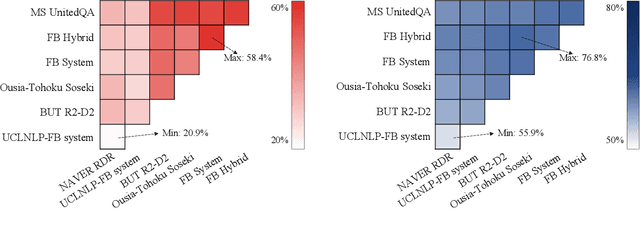

Abstract: We review the EfficientQA competition from NeurIPS 2020. The competition focused on open-domain question answering (QA), where systems take natural language questions as input and return natural language answers. The aim of the competition was to build systems that can predict correct answers while also satisfying strict on-disk memory budgets. These memory budgets were designed to encourage contestants to explore the trade-off between storing large, redundant retrieval corpora and storing the parameters of large learned models. In this report, we describe the motivation and organization of the competition, review the best submissions, and analyze system predictions to inform a discussion of evaluation for open-domain QA.

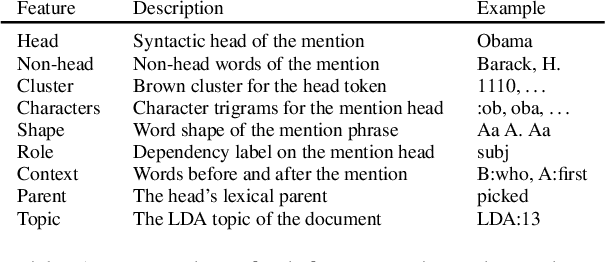

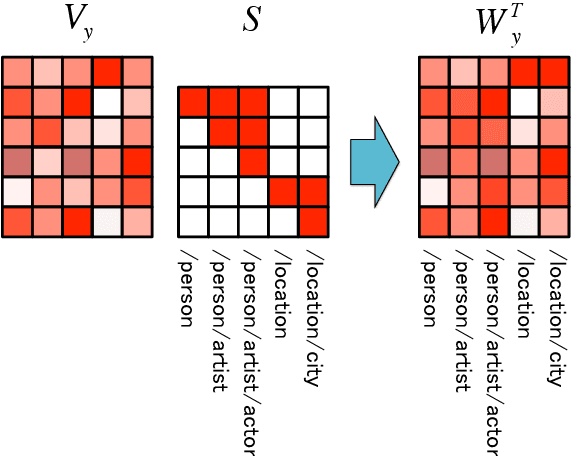

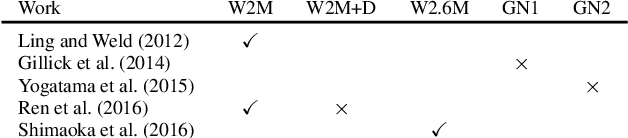

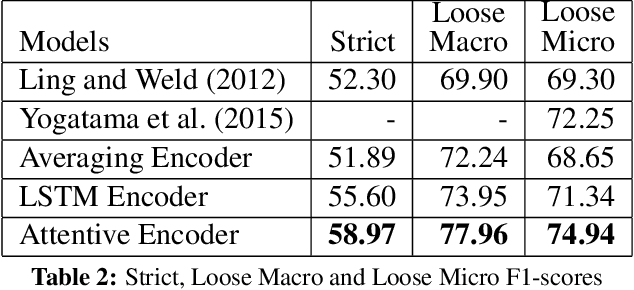

Neural Architectures for Fine-grained Entity Type Classification

Feb 21, 2017

Abstract: In this work, we investigate several neural network architectures for fine-grained entity type classification. In particular, we consider extensions to a recently proposed attentive neural architecture and make three key contributions. Previous work on attentive neural architectures does not consider hand-crafted features; we combine learnt and hand-crafted features and observe that they complement each other. Additionally, through quantitative analysis we establish that the attention mechanism is capable of learning to attend over syntactic heads and the phrase containing the mention, both of which are known to be strong hand-crafted features for our task. We enable parameter sharing through a hierarchical label encoding method that, in low-dimensional projections, shows clear clusters for each type hierarchy. Lastly, despite using the same evaluation dataset, the literature frequently compares models trained using different data. We establish that the choice of training data has a drastic impact on performance, with decreases of as much as 9.85% in loose micro F1 score for a previously proposed method. Despite this, our best model achieves state-of-the-art results with 75.36% loose micro F1 score on the well-established FIGER (GOLD) dataset.

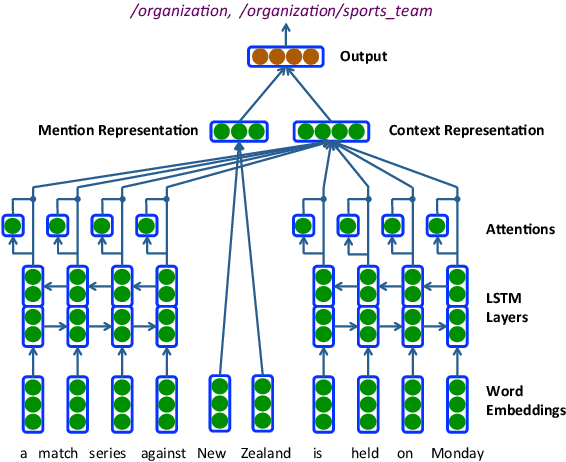

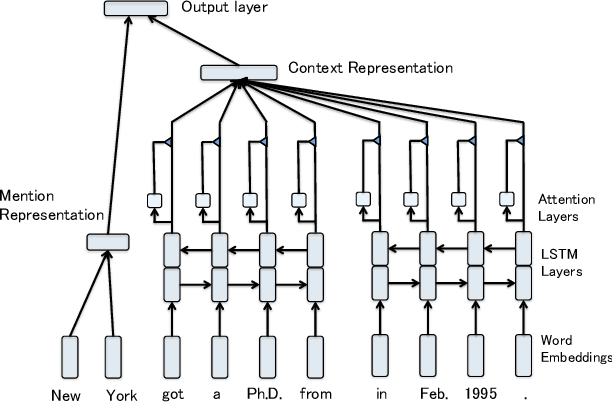

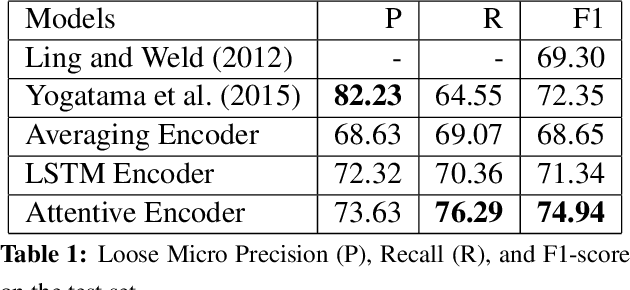

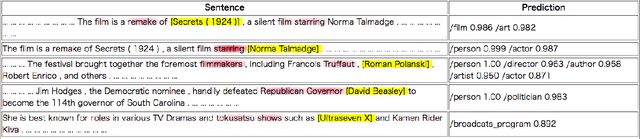

An Attentive Neural Architecture for Fine-grained Entity Type Classification

Apr 19, 2016

Abstract: In this work, we propose a novel attention-based neural network model for the task of fine-grained entity type classification that, unlike previously proposed models, recursively composes representations of entity mention contexts. Our model achieves state-of-the-art performance with 74.94% loose micro F1-score on the well-established FIGER dataset, a relative improvement of 2.59%. We also investigate the behavior of the attention mechanism of our model and observe that it can learn contextual linguistic expressions that indicate the fine-grained category memberships of an entity.
