Shugong Xu

Fellow, IEEE

Towards Dynamic Resource Allocation and Client Scheduling in Hierarchical Federated Learning: A Two-Phase Deep Reinforcement Learning Approach

Jun 21, 2024

Abstract: Federated learning (FL) is a viable technique to train a shared machine learning model without sharing data. Hierarchical FL (HFL) systems have yet to be studied regarding their multiple levels of energy, computation, communication, and client scheduling, especially when clients rely on energy harvesting to power their operations. This paper presents a new two-phase deep deterministic policy gradient (DDPG) framework, referred to as "TP-DDPG", to balance online the learning delay and model accuracy of an FL process in an energy harvesting-powered HFL system. The key idea is that we divide optimization decisions into two groups, and employ DDPG to learn one group in the first phase, while interpreting the other group as part of the environment to provide rewards for training the DDPG in the second phase. Specifically, the DDPG learns the selection of participating clients, their CPU configurations, and their transmission powers. A new straggler-aware client association and bandwidth allocation (SCABA) algorithm efficiently optimizes the other decisions and evaluates the reward for the DDPG. Experiments demonstrate that, with a substantially reduced number of learnable parameters, the TP-DDPG can quickly converge to effective policies that can shorten the training time of HFL by 39.4% compared to its benchmarks, when the required test accuracy of HFL is 0.9.

MM-KWS: Multi-modal Prompts for Multilingual User-defined Keyword Spotting

Jun 11, 2024

Abstract: In this paper, we propose MM-KWS, a novel approach to user-defined keyword spotting leveraging multi-modal enrollments of text and speech templates. Unlike previous methods that focus solely on either text or speech features, MM-KWS extracts phoneme, text, and speech embeddings from both modalities. These embeddings are then compared with the query speech embedding to detect the target keywords. To ensure the applicability of MM-KWS across diverse languages, we utilize a feature extractor incorporating several multilingual pre-trained models. Subsequently, we validate its effectiveness on Mandarin and English tasks. In addition, we have integrated advanced data augmentation tools for hard case mining to enhance MM-KWS in distinguishing confusable words. Experimental results on the LibriPhrase and WenetPhrase datasets demonstrate that MM-KWS outperforms prior methods significantly.
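
As a rough illustration of the "compare enrollment embeddings with the query speech embedding" step, the sketch below scores a query against several enrolled embeddings. The abstract does not say how the comparison is done, so the cosine similarity, the mean fusion, the threshold value, and the names `cosine` and `detect_keyword` are all assumptions made for illustration, not the MM-KWS method itself.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def detect_keyword(query, enrollments, threshold=0.8):
    """Hypothetical detector: average the per-modality similarities and threshold.

    `enrollments` maps a modality name (e.g. "phoneme", "text", "speech")
    to its enrolled embedding. Mean fusion and the threshold are assumptions.
    """
    scores = {name: cosine(query, emb) for name, emb in enrollments.items()}
    fused = sum(scores.values()) / len(scores)
    return fused >= threshold, scores

# Toy 2-D embeddings; real embeddings would come from pre-trained encoders.
hit, per_modality = detect_keyword(
    [1.0, 0.0],
    {"text": [1.0, 0.0], "speech": [0.9, 0.1]},
)
```

In practice the comparison would more likely be a learned matching module; the fixed similarity here only makes the data flow concrete.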

Quality of Experience Oriented Cross-layer Optimization for Real-time XR Video Transmission

Apr 15, 2024

Abstract: Extended reality (XR) is one of the most important applications of beyond 5G and 6G networks. Real-time XR video transmission presents challenges in terms of data rate and delay. In particular, the frame-by-frame transmission mode of XR video makes real-time XR video very sensitive to dynamic network environments. To improve the users' quality of experience (QoE), we design a cross-layer transmission framework for real-time XR video. The proposed framework allows simple information exchange between the base station (BS) and the XR server, which assists in adaptive bitrate and wireless resource scheduling. We utilize the cross-layer information to formulate the problem of maximizing user QoE by finding the optimal scheduling and bitrate adjustment strategies. To address the issue of mismatched time scales between the two strategies, we decouple the original problem into two subproblems and solve them individually using a multi-agent-based approach. Specifically, we propose the multi-step Deep Q-network (MS-DQN) algorithm to obtain a frame-priority-based wireless resource scheduling strategy and then propose the Transformer-based Proximal Policy Optimization (TPPO) algorithm for video bitrate adaptation. The experimental results show that the TPPO+MS-DQN algorithm proposed in this study can improve the QoE by 3.6% to 37.8%. More specifically, the proposed MS-DQN algorithm enhances the transmission quality by 49.9% to 80.2%.

Real-time Extended Reality Video Transmission Optimization Based on Frame-priority Scheduling

Feb 08, 2024

Abstract: Extended reality (XR) is one of the most important applications of 5G. Real-time XR video transmission in 5G networks requires low latency and a high data rate. In this paper, we propose a resource allocation scheme based on frame-priority scheduling to meet these requirements. The optimization problem is modelled as a frame-priority-based radio resource scheduling problem to improve transmission quality. We propose a scheduling framework based on a multi-step Deep Q-network (MS-DQN) and design a neural network model based on a convolutional neural network (CNN). Simulation results show that the scheduling framework based on frame priority and MS-DQN can improve transmission quality by 49.9% to 80.2%.
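
For readers unfamiliar with multi-step Q-learning, the sketch below computes the standard n-step temporal-difference target that multi-step DQN variants generally train against: the discounted sum of the next n rewards plus a discounted bootstrap from the best Q-value n steps ahead. The abstract does not give the paper's exact formulation, so the function name `n_step_target`, the discount factor, and the handling of terminal states are assumptions.

```python
def n_step_target(rewards, bootstrap_q_values, gamma=0.99, done=False):
    """Standard n-step TD target (assumed form, not taken from the paper).

    rewards: the n rewards r_t, ..., r_{t+n-1} observed after the current state.
    bootstrap_q_values: Q(s_{t+n}, a) for every action a, from a target network.
    done: True if the episode ended within the n steps (no bootstrap term).
    """
    # Discounted sum of the n observed rewards.
    target = sum((gamma ** k) * r for k, r in enumerate(rewards))
    # Bootstrap from the greedy action value n steps ahead, unless terminal.
    if not done and bootstrap_q_values:
        target += (gamma ** len(rewards)) * max(bootstrap_q_values)
    return target

# Two-step example with gamma = 0.5: 1 + 0.5 * 1 + 0.25 * 4
example = n_step_target([1.0, 1.0], [2.0, 4.0], gamma=0.5)
```

With n = 1 this reduces to the usual one-step DQN target; larger n propagates reward information faster at the cost of higher variance.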

On the performance of an integrated communication and localization system: an analytical framework

Sep 08, 2023

Abstract: Quantifying the performance bound of an integrated localization and communication (ILAC) system and the trade-off between communication and localization performance is critical. In this letter, we consider an ILAC system that can perform communication and localization via time-domain or frequency-domain resource allocation. We develop an analytical framework to derive the closed-form expression of the capacity loss versus localization Cramér-Rao lower bound (CRB) loss via time-domain and frequency-domain resource allocation. Simulation results validate the analytical model and demonstrate that frequency-domain resource allocation is preferable in scenarios with a smaller number of antennas at the next generation nodeB (gNB) and a larger distance between user equipment (UE) and gNB, while time-domain resource allocation is preferable in scenarios with a larger number of antennas and a smaller distance between UE and the gNB.

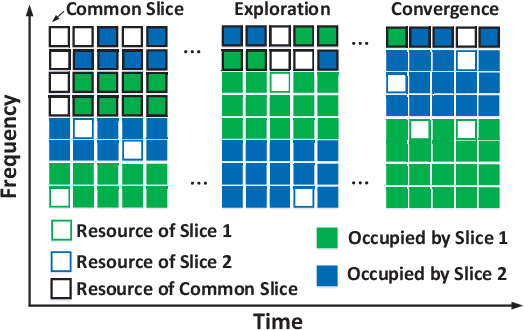

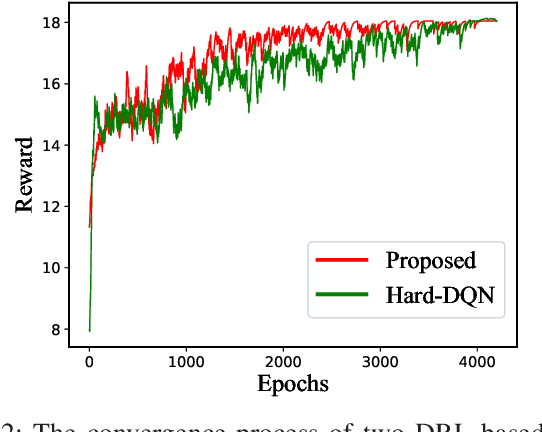

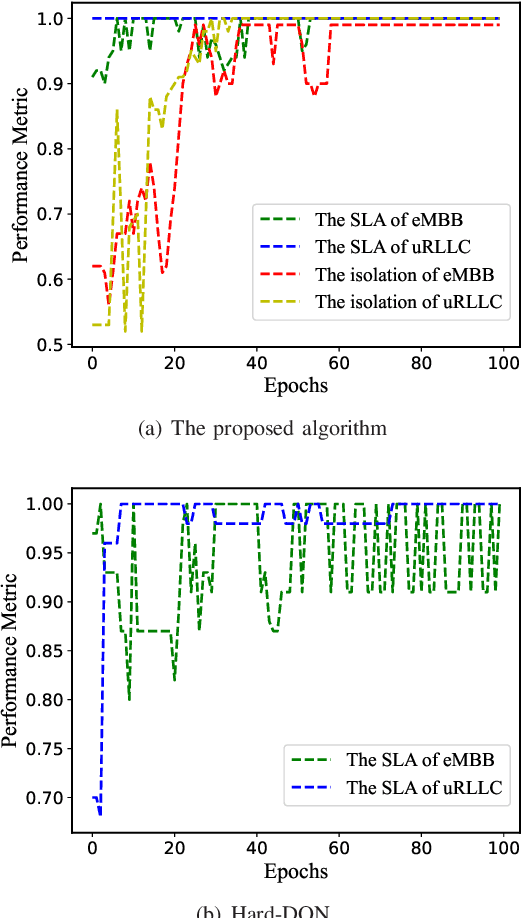

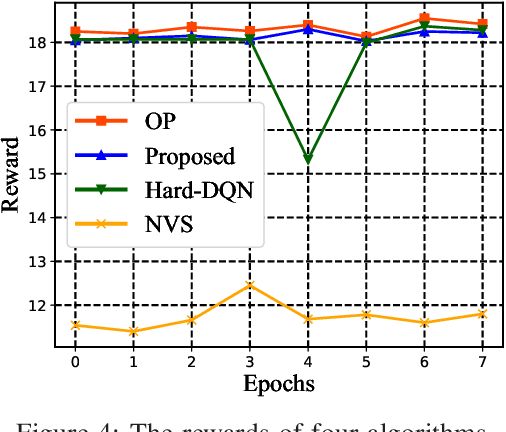

A Hard and Soft Hybrid Slicing Framework for Service Level Agreement Guarantee via Deep Reinforcement Learning

Mar 06, 2022

Abstract: Network slicing is a critical driver for guaranteeing the diverse service level agreements (SLA) in 5G and future networks. Recently, deep reinforcement learning (DRL) has been widely utilized for resource allocation in network slicing. However, existing related works do not consider the performance loss associated with the initial exploration phase of DRL. This paper proposes a new performance-guaranteed slicing strategy with a soft and hard hybrid slicing setting. In particular, a common slice setting is applied to guarantee the slices' SLA while the neural network is being trained. Moreover, as DRL training proceeds, the resources of the common slice are progressively redistributed to the individual slices until the training converges. Furthermore, experimental results confirm the effectiveness of our proposed slicing framework: the slices' SLA can be guaranteed during the training phase, and the proposed algorithm can achieve near-optimal performance in terms of the SLA satisfaction ratio, isolation degree and spectrum maximization after convergence.

TRIG: Transformer-Based Text Recognizer with Initial Embedding Guidance

Nov 16, 2021

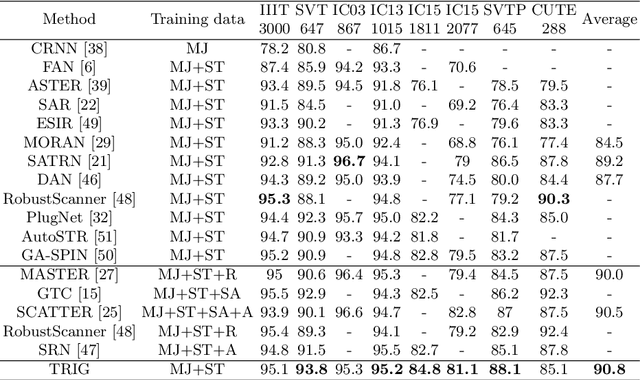

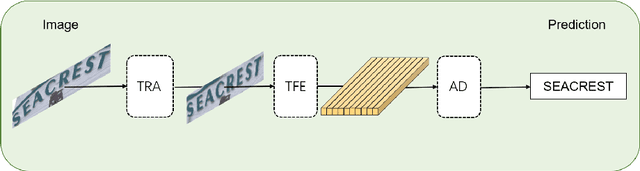

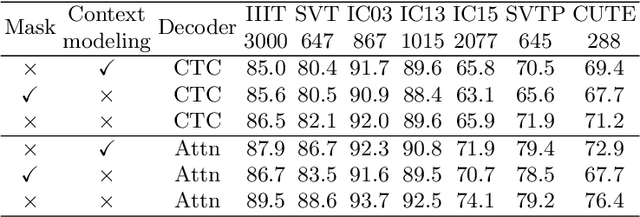

Abstract: Scene text recognition (STR) is an important bridge between images and text, attracting abundant research attention. While convolutional neural networks (CNNs) have achieved remarkable progress in this task, most of the existing works need an extra module (context modeling module) to help the CNN capture global dependencies, to address the inductive bias and strengthen the relationship between text features. Recently, the transformer has been proposed as a promising network for global context modeling via the self-attention mechanism, but one of its main shortcomings, when applied to recognition, is efficiency. We propose a 1-D split to address the challenges of complexity and replace the CNN with the transformer encoder to reduce the need for a context modeling module. Furthermore, recent methods use a frozen initial embedding to guide the decoder to decode the features to text, leading to a loss of accuracy. We propose to use a learnable initial embedding learned from the transformer encoder to make it adaptive to different input images. Overall, we introduce a novel architecture for text recognition, named TRansformer-based text recognizer with Initial embedding Guidance (TRIG), composed of three stages (transformation, feature extraction, and prediction). Extensive experiments show that our approach can achieve state-of-the-art performance on text recognition benchmarks.

Multi-Task and Multi-Modal Learning for RGB Dynamic Gesture Recognition

Oct 29, 2021

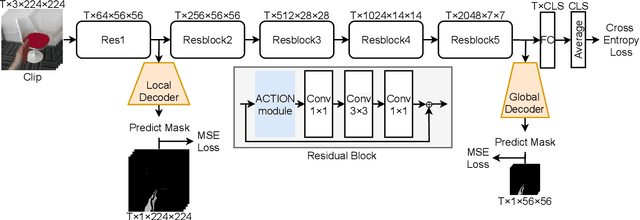

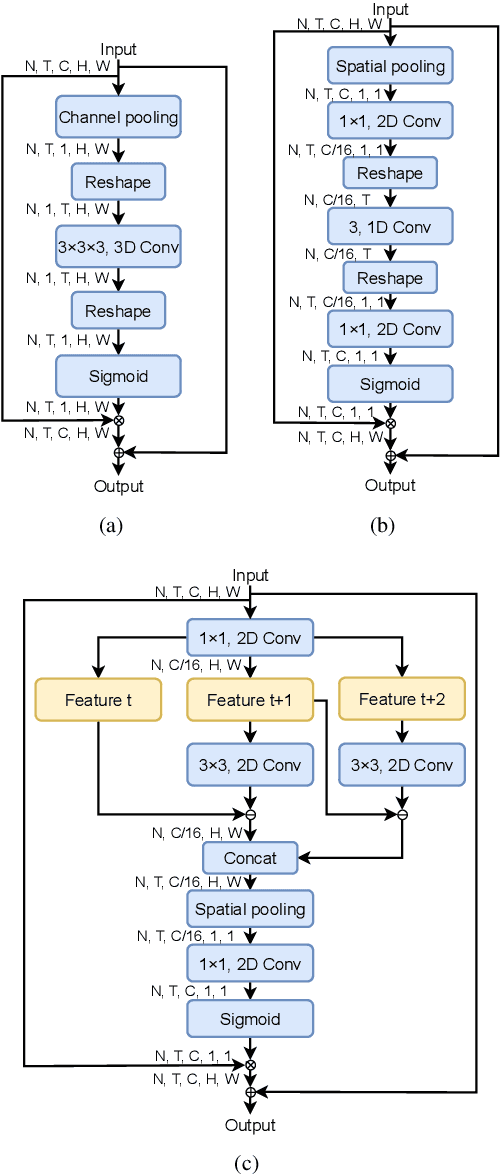

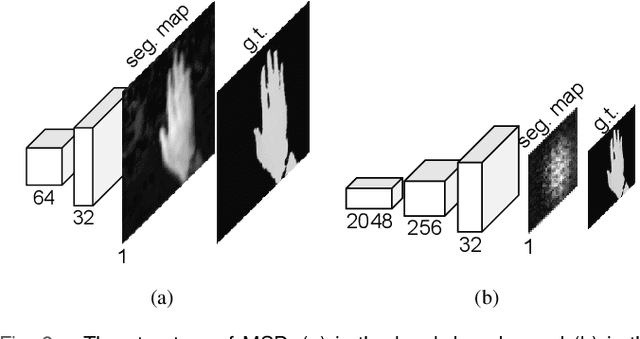

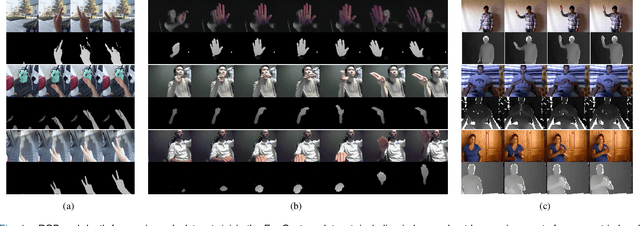

Abstract: Gesture recognition is getting more and more popular due to various application possibilities in human-machine interaction. Existing multi-modal gesture recognition systems take multi-modal data as input to improve accuracy, but such methods require more modality sensors, which greatly limits their application scenarios. Therefore, we propose an end-to-end multi-task learning framework for training 2D convolutional neural networks. The framework can use the depth modality to improve accuracy during training and save costs by using only the RGB modality during inference. Our framework is trained to learn a representation for multi-task learning: gesture segmentation and gesture recognition. The depth modality contains prior information about the location of the gesture. Therefore, it can be used as the supervision for gesture segmentation. A plug-and-play module named Multi-Scale-Decoder (MSD) is designed to realize gesture segmentation; it contains two sub-decoders, which are used in the lower stage and the higher stage, respectively, and help the network pay attention to key target areas, ignore irrelevant information, and extract more discriminative features. Additionally, the MSD module and depth modality are only used in the training stage to improve gesture recognition performance. Only the RGB modality and the network without MSD are required during inference. Experimental results on three public gesture recognition datasets show that our proposed method provides superior performance compared with existing gesture recognition frameworks. Moreover, using the proposed plug-and-play MSD in other 2D CNN-based frameworks also yields an excellent accuracy improvement.

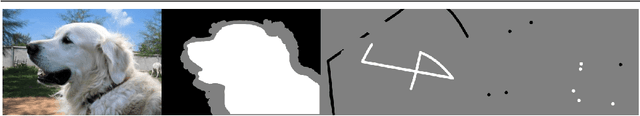

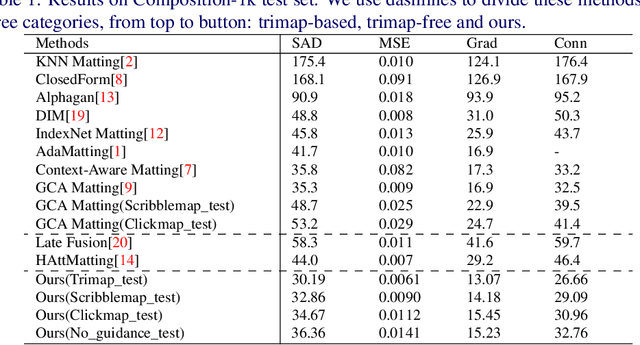

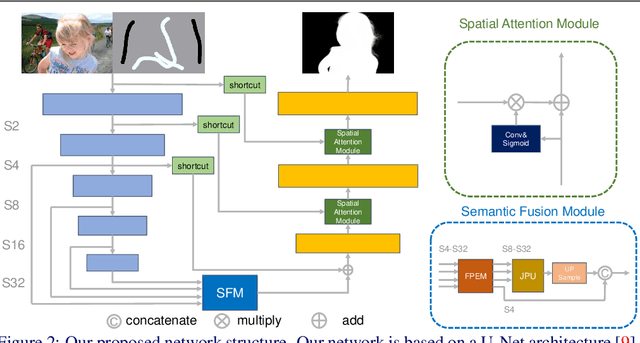

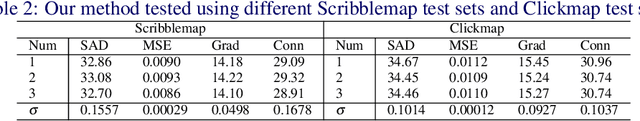

Deep Image Matting with Flexible Guidance Input

Oct 21, 2021

Abstract: Image matting is an important computer vision problem. Many existing matting methods require a hand-made trimap to provide auxiliary information, which is very expensive and limits real-world usage. Recently, some trimap-free methods have been proposed, which completely get rid of any user input. However, their performance lags far behind trimap-based methods due to the lack of guidance information. In this paper, we propose a matting method that uses Flexible Guidance Input as a user hint, which means our method can use a trimap, scribblemap or clickmap as guidance information, or even work without any guidance input. To achieve this, we propose a Progressive Trimap Deformation (PTD) scheme that gradually shrinks the foreground and background areas of the trimap as the training step increases, until the trimap finally becomes a scribblemap. To make our network robust to any user scribble and click, we randomly sample points on the foreground and background and perform curve fitting. Moreover, we propose a Semantic Fusion Module (SFM), which utilizes the Feature Pyramid Enhancement Module (FPEM) and Joint Pyramid Upsampling (JPU) in the matting task for the first time. The experiments show that our method can achieve state-of-the-art results compared with existing trimap-based and trimap-free methods.

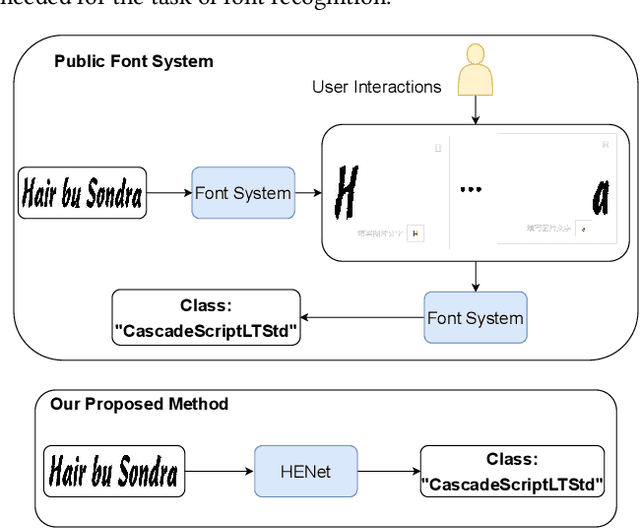

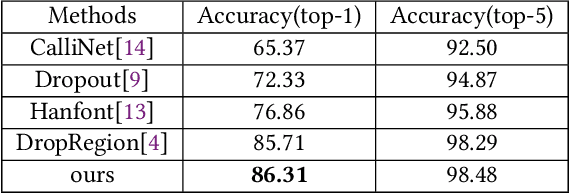

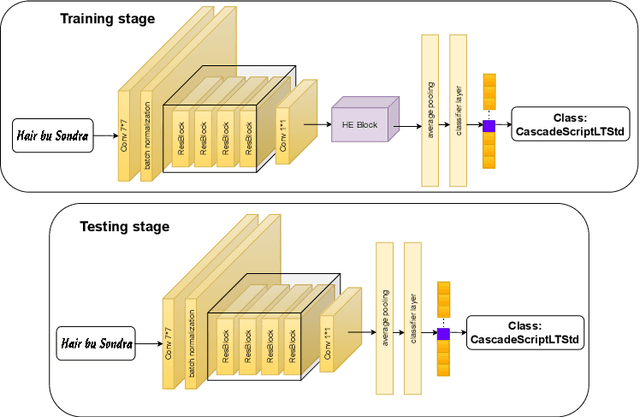

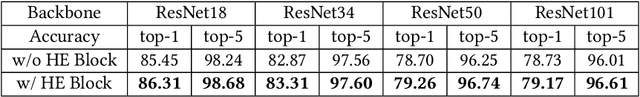

HENet: Forcing a Network to Think More for Font Recognition

Oct 21, 2021

Abstract: Although much progress has been made in text recognition/OCR in recent years, the task of font recognition remains challenging. The main challenge lies in the subtle differences between similar fonts, which are hard to distinguish. This paper proposes a novel font recognizer with a pluggable module for solving the font recognition task. The pluggable module, called HE Block, hides the most discriminative accessible features and forces the network to consider other, more complicated features to solve the hard examples of similar fonts. Compared with the available public font recognition systems, our proposed method does not require any interactions at the inference stage. Extensive experiments demonstrate that HENet achieves encouraging performance, including on the character-level dataset Explor_all and the word-level dataset AdobeVFR.
