Shaoming Xu

Hierarchical Conditional Multi-Task Learning for Streamflow Modeling

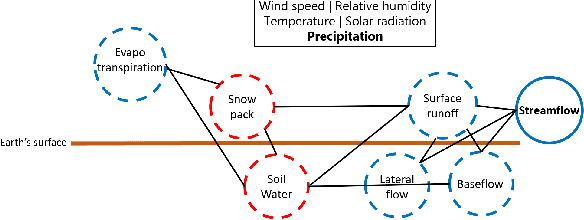

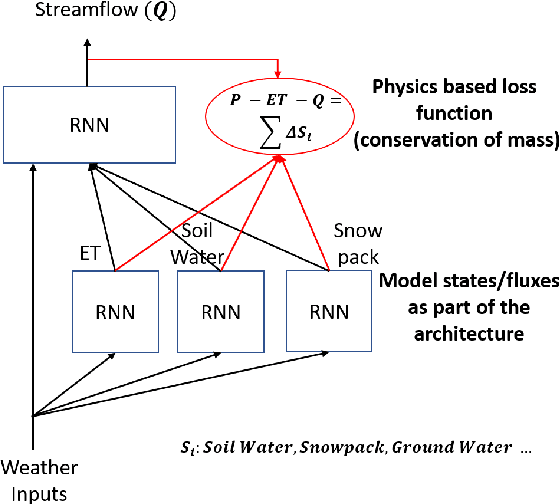

Oct 18, 2024

Abstract: Streamflow, vital for water resource management, is governed by complex hydrological systems involving intermediate processes driven by meteorological forces. While deep learning models have achieved state-of-the-art results in streamflow prediction, their end-to-end single-task learning approach often fails to capture the causal relationships within these systems. To address this, we propose Hierarchical Conditional Multi-Task Learning (HCMTL), a hierarchical approach that jointly models soil water and snowpack processes based on their causal connections to streamflow. HCMTL utilizes task embeddings to connect network modules, enhancing flexibility and expressiveness while capturing unobserved processes beyond soil water and snowpack. It also incorporates the Conditional Mini-Batch strategy to improve long time series modeling. We compare HCMTL with five baselines on a global dataset. HCMTL's superior performance across hundreds of drainage basins over extended periods shows that integrating domain-specific causal knowledge into deep learning enhances both prediction accuracy and interpretability. This is essential for advancing our understanding of complex hydrological systems and supporting efficient water resource management to mitigate natural disasters like droughts and floods.

Kilometer-Scale Convection Allowing Model Emulation using Generative Diffusion Modeling

Aug 20, 2024

Abstract: Storm-scale convection-allowing models (CAMs) are an important tool for predicting the evolution of thunderstorms and mesoscale convective systems that result in damaging extreme weather. By explicitly resolving convective dynamics within the atmosphere, they afford meteorologists the nuance needed to provide hazard outlooks. Deep learning models have thus far not proven skilful at km-scale atmospheric simulation, despite being competitive at coarser resolution with state-of-the-art global, medium-range weather forecasting. We present a generative diffusion model called StormCast, which emulates the high-resolution rapid refresh (HRRR) model, NOAA's state-of-the-art 3 km operational CAM. StormCast autoregressively predicts 99 state variables at km scale using a 1-hour time step, with dense vertical resolution in the atmospheric boundary layer, conditioned on 26 synoptic variables. We present evidence of successfully learnt km-scale dynamics, including competitive 1-6 hour forecast skill for composite radar reflectivity alongside physically realistic convective cluster evolution, moist updrafts, and cold pool morphology. StormCast predictions maintain realistic power spectra for multiple predicted variables across multi-hour forecasts. Together, these results establish the potential for autoregressive ML to emulate CAMs, opening up new km-scale frontiers for regional ML weather prediction and future climate hazard dynamical downscaling.

Message Propagation Through Time: An Algorithm for Sequence Dependency Retention in Time Series Modeling

Sep 28, 2023

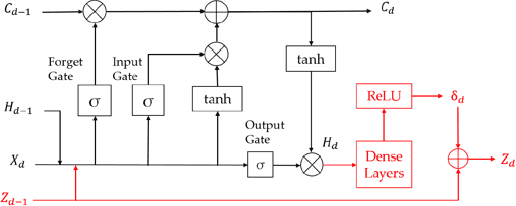

Abstract: Time series modeling, a crucial area in science, often encounters challenges when training Machine Learning (ML) models like Recurrent Neural Networks (RNNs) using the conventional mini-batch training strategy, which assumes independent and identically distributed (IID) samples and initializes RNNs with zero hidden states. The IID assumption ignores temporal dependencies among samples, resulting in poor performance. This paper proposes the Message Propagation Through Time (MPTT) algorithm to effectively incorporate long temporal dependencies while preserving faster training times relative to the stateful solutions. MPTT utilizes two memory modules to asynchronously manage initial hidden states for RNNs, fostering seamless information exchange between samples and allowing diverse mini-batches throughout epochs. MPTT further implements three policies that filter outdated information and preserve essential information in the hidden states, generating informative initial hidden states for RNNs and facilitating robust training. Experimental results demonstrate that MPTT outperforms seven strategies on four climate datasets with varying levels of temporal dependencies.

Mini-Batch Learning Strategies for modeling long term temporal dependencies: A study in environmental applications

Oct 15, 2022

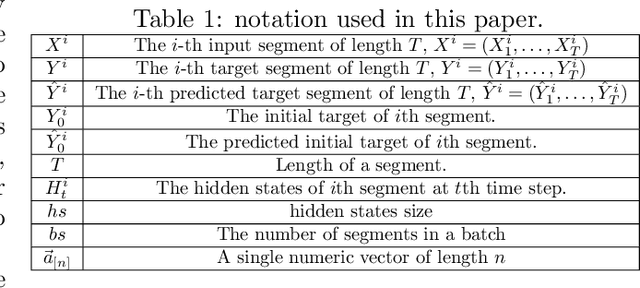

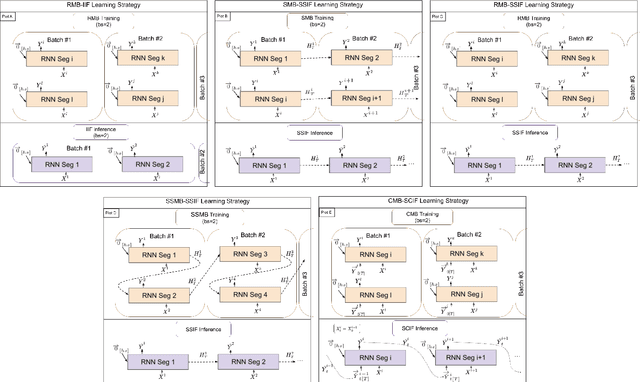

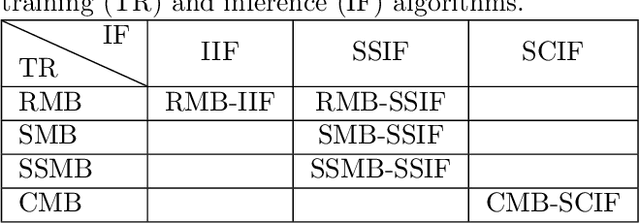

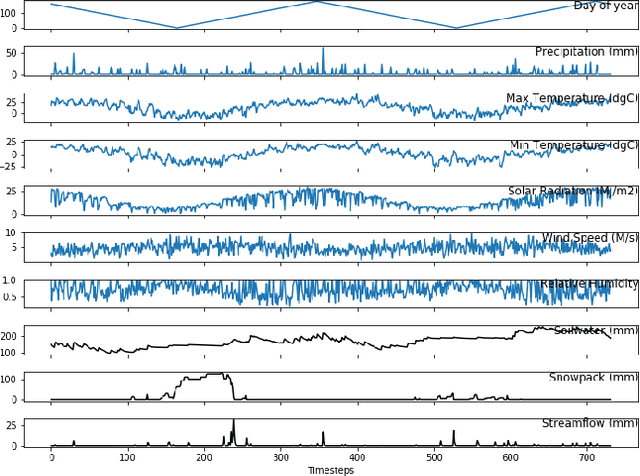

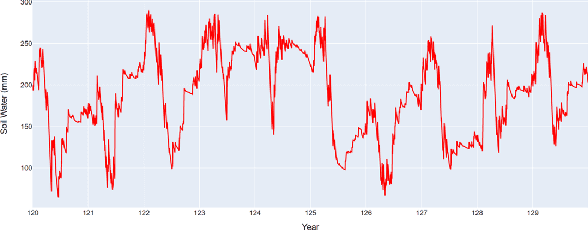

Abstract: In many environmental applications, recurrent neural networks (RNNs) are often used to model physical variables with long temporal dependencies. However, due to mini-batch training, temporal relationships between training segments within the batch (intra-batch) as well as between batches (inter-batch) are not considered, which can lead to limited performance. Stateful RNNs aim to address this issue by passing hidden states between batches. Since Stateful RNNs ignore intra-batch temporal dependency, there exists a trade-off between training stability and capturing temporal dependency. In this paper, we provide a quantitative comparison of different Stateful RNN modeling strategies, and propose two strategies to enforce both intra- and inter-batch temporal dependency. First, we extend Stateful RNNs by defining a batch as a temporally ordered set of training segments, which enables intra-batch sharing of temporal information. While this approach significantly improves performance, it leads to much longer training times due to highly sequential training. To address this issue, we further propose a new strategy which augments a training segment with an initial value of the target variable from the timestep immediately before the start of the training segment. In other words, we provide an initial value of the target variable as additional input so that the network can focus on learning changes relative to that initial value. By using this strategy, samples can be passed in any order (mini-batch training), which significantly reduces the training time while maintaining performance. In demonstrating our approach in hydrological modeling, we observe that the most significant gains in predictive accuracy occur when these methods are applied to state variables whose values change more slowly, such as soil water and snowpack, rather than continuously moving flux variables such as streamflow.
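The second strategy in the abstract above (augmenting each training segment with the target value from the timestep just before it) might look roughly like the following sketch. The function name `make_augmented_segments`, the non-overlapping segmentation, and the choice to repeat the initial value at every timestep as an extra input channel are all assumptions made for illustration; the abstract does not specify how the value is fed to the network.

```python
import numpy as np

def make_augmented_segments(drivers, target, seg_len):
    """Hypothetical helper: split a series into segments and append the
    target value from the step before each segment as an extra input channel.

    drivers: (T, F) array of input variables; target: (T,) array.
    Returns X with shape (n_seg, seg_len, F + 1) and y with shape (n_seg, seg_len).
    """
    drivers = np.asarray(drivers, dtype=float)
    target = np.asarray(target, dtype=float)
    n_steps = drivers.shape[0]
    xs, ys = [], []
    # Start at t=1 so every segment has a preceding target value.
    for start in range(1, n_steps - seg_len + 1, seg_len):
        init = target[start - 1]  # target at the step just before the segment
        # Assumption: broadcast the initial value across the whole segment.
        init_channel = np.full((seg_len, 1), init)
        xs.append(np.hstack([drivers[start:start + seg_len], init_channel]))
        ys.append(target[start:start + seg_len])
    return np.stack(xs), np.stack(ys)

# Toy data: 11 timesteps, 3 driver variables, target equal to the time index.
rng = np.random.default_rng(0)
drivers = rng.normal(size=(11, 3))
target = np.arange(11, dtype=float)
X, y = make_augmented_segments(drivers, target, seg_len=5)
```

Because each segment carries its own starting value, the segments can be shuffled freely into mini-batches without passing hidden states between them, which is the property the abstract highlights.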

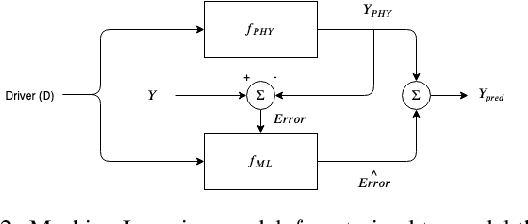

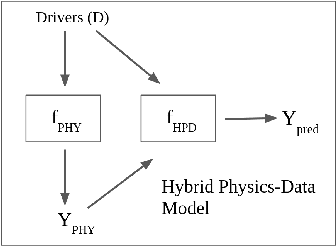

Physics Guided Machine Learning Methods for Hydrology

Dec 02, 2020

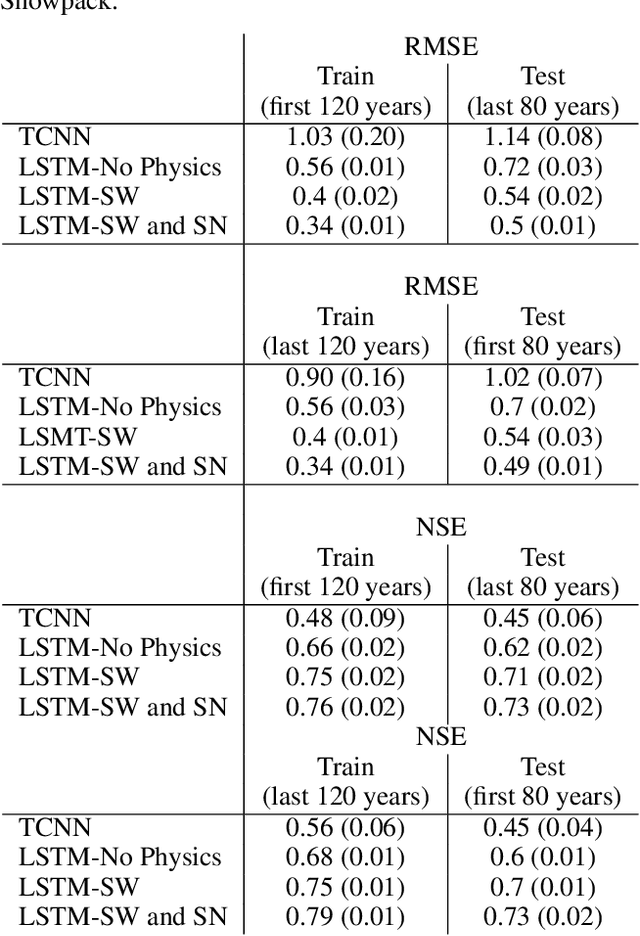

Abstract: Streamflow prediction is one of the key challenges in the field of hydrology due to the complex interplay between multiple non-linear physical mechanisms behind streamflow generation. While physically-based models are rooted in a rich understanding of the physical processes, a significant performance gap still remains, which can potentially be addressed by leveraging recent advances in machine learning. The goal of this work is to incorporate our understanding of physical processes and constraints in hydrology into machine learning algorithms, and thus bridge the performance gap while reducing the need for large amounts of data compared to traditional data-driven approaches. In particular, we propose an LSTM-based deep learning architecture that is coupled with SWAT (Soil and Water Assessment Tool), a hydrology model that is in wide use today. The key idea of the approach is to model auxiliary intermediate processes that connect weather drivers to streamflow, rather than directly mapping runoff from weather variables, which is what a deep learning architecture without physical insight will do. The efficacy of the approach is being analyzed on several small catchments located in the South Branch of the Root River Watershed in southeast Minnesota. Apart from observation data on runoff, the approach also leverages a 200-year synthetic dataset generated by SWAT to improve the performance while reducing convergence time. In the early phases of this study, simpler versions of the physics-guided deep learning architectures are being used to achieve a system understanding of the coupling of physics and machine learning. As more complexity is introduced into the present implementation, the framework will be able to generalize to more sophisticated cases where spatial heterogeneity is present.

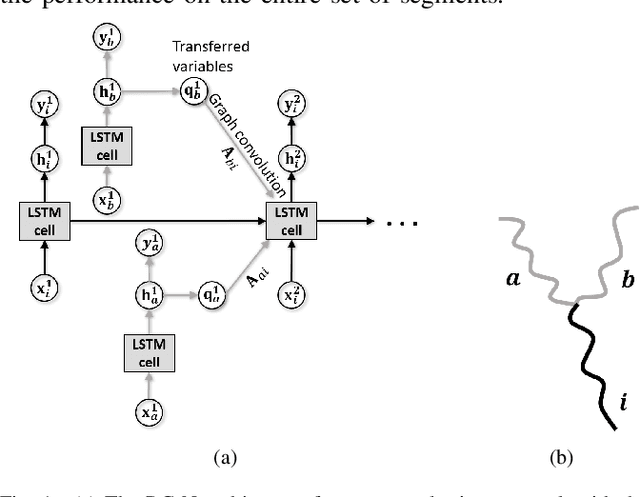

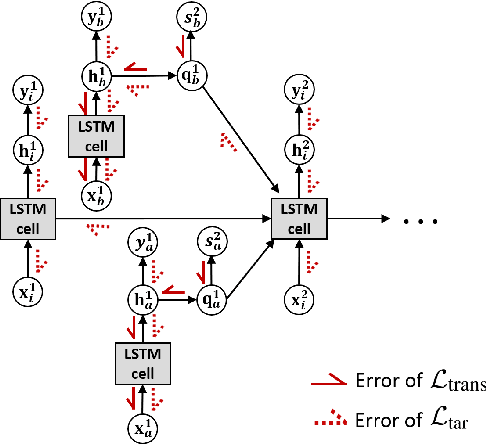

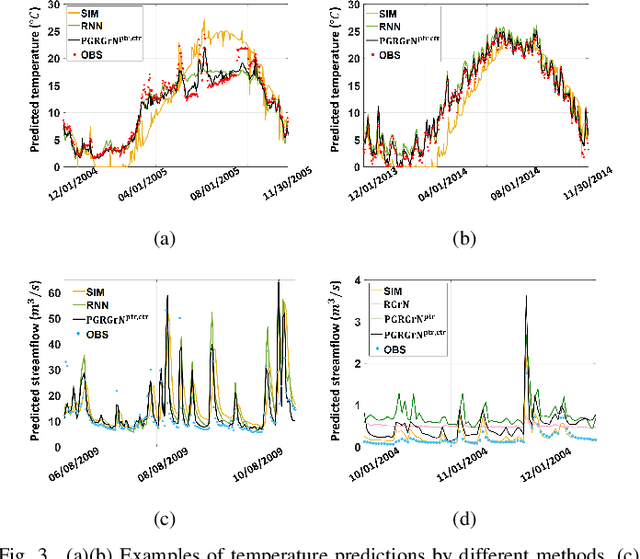

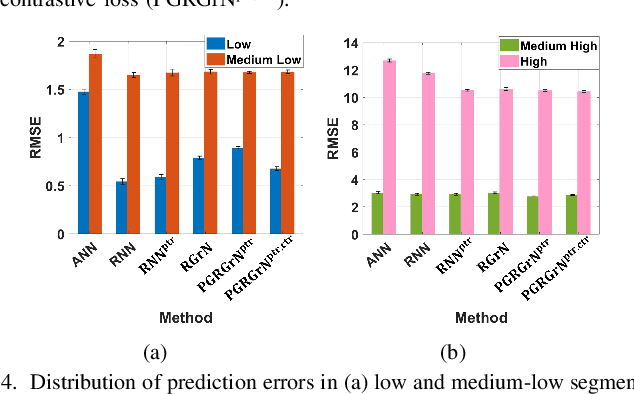

Physics-Guided Recurrent Graph Networks for Predicting Flow and Temperature in River Networks

Sep 26, 2020

Abstract: This paper proposes a physics-guided machine learning approach that combines advanced machine learning models and physics-based models to improve the prediction of water flow and temperature in river networks. We first build a recurrent graph network model to capture the interactions among multiple segments in the river network. Then we present a pre-training technique which transfers knowledge from physics-based models to initialize the machine learning model and learn the physics of streamflow and thermodynamics. We also propose a new loss function that balances the performance over different river segments. We demonstrate the effectiveness of the proposed method in predicting temperature and streamflow in a subset of the Delaware River Basin. In particular, we show that the proposed method brings a 33%/14% improvement over the state-of-the-art physics-based model and 24%/14% over traditional machine learning models (e.g., Long Short-Term Memory Neural Network) in temperature/streamflow prediction using very sparse (0.1%) observation data for training. The proposed method has also been shown to produce better performance when generalized to different seasons or river segments with different streamflow ranges.

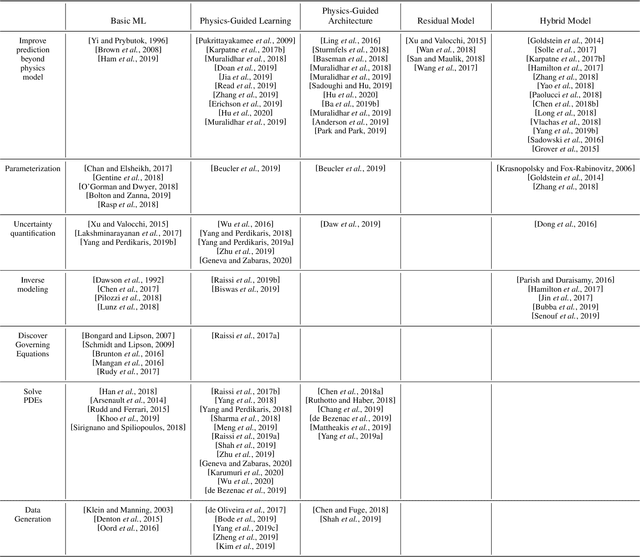

Integrating Physics-Based Modeling with Machine Learning: A Survey

Apr 01, 2020

Abstract: In this manuscript, we provide a structured and comprehensive overview of techniques to integrate machine learning with physics-based modeling. First, we provide a summary of application areas for which these approaches have been applied. Then, we describe classes of methodologies used to construct physics-guided machine learning models and hybrid physics-machine learning frameworks from a machine learning standpoint. With this foundation, we then provide a systematic organization of these existing techniques and discuss ideas for future research.
