Jared Willard

Foundation Models for Environmental Science: A Survey of Emerging Frontiers

Apr 05, 2025

Abstract:Modeling environmental ecosystems is essential for effective resource management, sustainable development, and understanding complex ecological processes. However, traditional data-driven methods face challenges in capturing inherently complex and interconnected processes and are further constrained by limited observational data in many environmental applications. Foundation models, which leverage large-scale pre-training and universal representations of complex and heterogeneous data, offer transformative opportunities for capturing spatiotemporal dynamics and dependencies in environmental processes, and facilitate adaptation to a broad range of applications. This survey presents a comprehensive overview of foundation model applications in environmental science, highlighting advancements in common environmental use cases including forward prediction, data generation, data assimilation, downscaling, inverse modeling, model ensembling, and decision-making across domains. We also detail the process of developing these models, covering data collection, architecture design, training, tuning, and evaluation. Through discussions on these emerging methods as well as their future opportunities, we aim to promote interdisciplinary collaboration that accelerates advancements in machine learning for driving scientific discovery in addressing critical environmental challenges.

Huge Ensembles Part I: Design of Ensemble Weather Forecasts using Spherical Fourier Neural Operators

Aug 06, 2024

Abstract:Studying low-likelihood high-impact extreme weather events in a warming world is a significant and challenging task for current ensemble forecasting systems. While these systems presently use up to 100 members, larger ensembles could enrich the sampling of internal variability. They may capture the long tails associated with climate hazards better than traditional ensemble sizes. Due to computational constraints, it is infeasible to generate huge ensembles (comprised of 1,000-10,000 members) with traditional, physics-based numerical models. In this two-part paper, we replace traditional numerical simulations with machine learning (ML) to generate hindcasts of huge ensembles. In Part I, we construct an ensemble weather forecasting system based on Spherical Fourier Neural Operators (SFNO), and we discuss important design decisions for constructing such an ensemble. The ensemble represents model uncertainty through perturbed-parameter techniques, and it represents initial condition uncertainty through bred vectors, which sample the fastest growing modes of the forecast. Using the European Centre for Medium-Range Weather Forecasts Integrated Forecasting System (IFS) as a baseline, we develop an evaluation pipeline composed of mean, spectral, and extreme diagnostics. Using large-scale, distributed SFNOs with 1.1 billion learned parameters, we achieve calibrated probabilistic forecasts. As the trajectories of the individual members diverge, the ML ensemble mean spectra degrade with lead time, consistent with physical expectations. However, the individual ensemble members' spectra stay constant with lead time. Therefore, these members simulate realistic weather states, and the ML ensemble thus passes a crucial spectral test in the literature. The IFS and ML ensembles have similar Extreme Forecast Indices, and we show that the ML extreme weather forecasts are reliable and discriminating.

Huge Ensembles Part II: Properties of a Huge Ensemble of Hindcasts Generated with Spherical Fourier Neural Operators

Aug 02, 2024

Abstract:In Part I, we created an ensemble based on Spherical Fourier Neural Operators. As initial condition perturbations, we used bred vectors, and as model perturbations, we used multiple checkpoints trained independently from scratch. Based on diagnostics that assess the ensemble's physical fidelity, our ensemble has comparable performance to operational weather forecasting systems. However, it requires several orders of magnitude fewer computational resources. Here in Part II, we generate a huge ensemble (HENS), with 7,424 members initialized each day of summer 2023. We enumerate the technical requirements for running huge ensembles at this scale. HENS precisely samples the tails of the forecast distribution and presents a detailed sampling of internal variability. For extreme climate statistics, HENS samples events 4$\sigma$ away from the ensemble mean. At each grid cell, HENS improves the skill of the most accurate ensemble member and enhances coverage of possible future trajectories. As a weather forecasting model, HENS issues extreme weather forecasts with better uncertainty quantification. It also reduces the probability of outlier events, in which the verification value lies outside the ensemble forecast distribution.
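The outlier events mentioned in the abstract above can be illustrated with a short sketch. It counts how often a verification value falls outside the range spanned by the ensemble members and compares that with the rate expected from a perfectly reliable ensemble, which is 2/(N+1) for N members when members and verification are statistically exchangeable. The function names and the toy data are hypothetical, and this is not the authors' diagnostic code.

```python
from typing import Sequence


def outlier_fraction(
    ensembles: Sequence[Sequence[float]],
    verifications: Sequence[float],
) -> float:
    """Fraction of cases where the verification lies outside the ensemble range."""
    outside = 0
    for members, obs in zip(ensembles, verifications):
        # An outlier event: the observed value is below the smallest member
        # or above the largest member of the ensemble.
        if obs < min(members) or obs > max(members):
            outside += 1
    return outside / len(verifications)


def expected_outlier_probability(n_members: int) -> float:
    """Outlier rate of a perfectly reliable ensemble with n_members members.

    Assumes the verification is exchangeable with the members, so it is
    equally likely to land in any of the n_members + 1 rank bins; two of
    those bins lie outside the ensemble range.
    """
    return 2 / (n_members + 1)


# Toy data (hypothetical): four forecast cases with three members each.
toy_ensembles = [[1.0, 2.0, 3.0]] * 4
toy_verifications = [0.0, 2.0, 4.0, 3.0]
toy_rate = outlier_fraction(toy_ensembles, toy_verifications)
```

Under the same exchangeability assumption, `expected_outlier_probability(7424)` is far smaller than `expected_outlier_probability(100)`, which is one way to see why a much larger ensemble leaves fewer verification values uncovered.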

Mini-Batch Learning Strategies for modeling long term temporal dependencies: A study in environmental applications

Oct 15, 2022

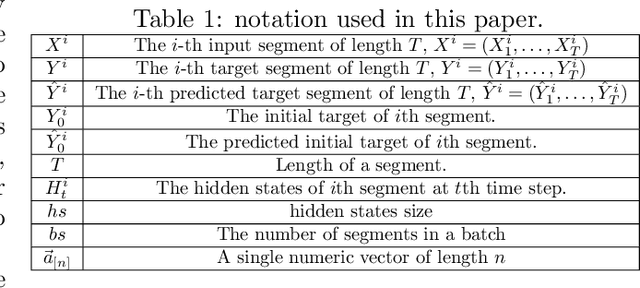

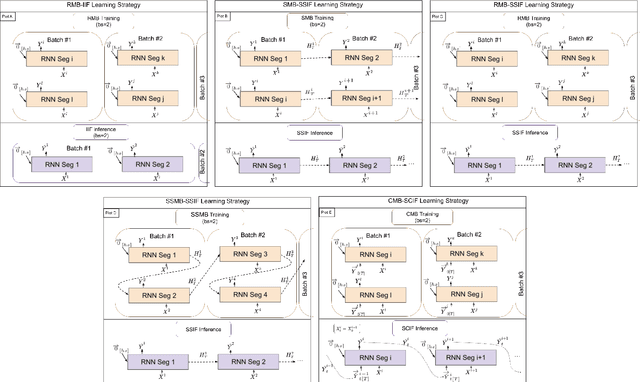

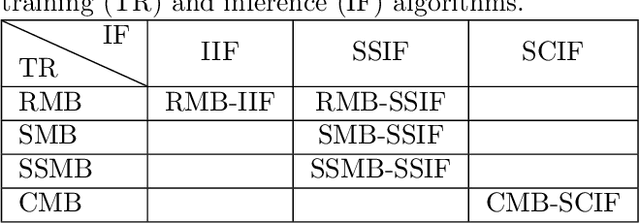

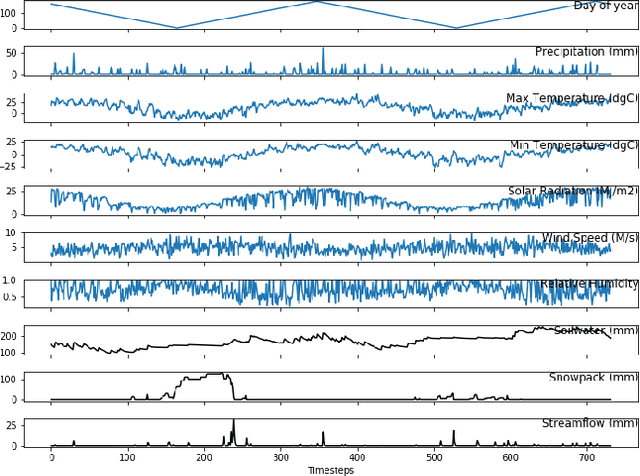

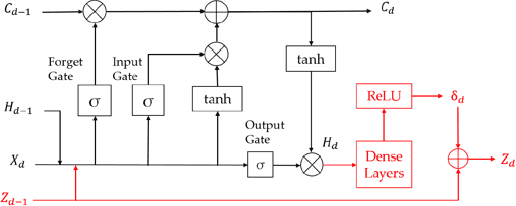

Abstract:In many environmental applications, recurrent neural networks (RNNs) are often used to model physical variables with long temporal dependencies. However, due to mini-batch training, temporal relationships between training segments within the batch (intra-batch) as well as between batches (inter-batch) are not considered, which can lead to limited performance. Stateful RNNs aim to address this issue by passing hidden states between batches. Since Stateful RNNs ignore intra-batch temporal dependency, there exists a trade-off between training stability and capturing temporal dependency. In this paper, we provide a quantitative comparison of different Stateful RNN modeling strategies, and propose two strategies to enforce both intra- and inter-batch temporal dependency. First, we extend Stateful RNNs by defining a batch as a temporally ordered set of training segments, which enables intra-batch sharing of temporal information. While this approach significantly improves the performance, it leads to much longer training times due to highly sequential training. To address this issue, we further propose a new strategy which augments a training segment with an initial value of the target variable from the timestep right before the start of the training segment. In other words, we provide an initial value of the target variable as additional input so that the network can focus on learning changes relative to that initial value. By using this strategy, samples can be passed in any order (mini-batch training), which significantly reduces the training time while maintaining the performance. In demonstrating our approach in hydrological modeling, we observe that the most significant gains in predictive accuracy occur when these methods are applied to state variables whose values change more slowly, such as soil water and snowpack, rather than continuously moving flux variables such as streamflow.
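The second strategy in the abstract above can be sketched as a data-preparation step. The sketch below is a minimal illustration, not the authors' implementation: the function name `make_segments` is hypothetical, and appending the initial target value as an extra input feature at every timestep is an assumption, since the abstract only says the value is provided as additional input.

```python
import random
from typing import List, Sequence, Tuple

Segment = Tuple[List[List[float]], List[float]]


def make_segments(
    drivers: Sequence[Sequence[float]],
    target: Sequence[float],
    seg_len: int,
) -> List[Segment]:
    """Cut a time series into segments augmented with an initial target value.

    Each segment's inputs carry the target value from the timestep right
    before the segment starts (assumption: repeated at every timestep).
    """
    segments: List[Segment] = []
    # Start at t = 1 so a preceding target value always exists.
    for start in range(1, len(target) - seg_len + 1, seg_len):
        init_value = target[start - 1]
        x = [
            list(drivers[t]) + [init_value]
            for t in range(start, start + seg_len)
        ]
        y = list(target[start:start + seg_len])
        segments.append((x, y))
    return segments


# Toy series (hypothetical): one driver feature, nine timesteps.
toy_drivers = [[float(t)] for t in range(9)]
toy_target = [10.0 * t for t in range(9)]
segments = make_segments(toy_drivers, toy_target, seg_len=4)

# Each segment now carries its own starting condition, so the segments can
# be presented to the network in any order, as in ordinary mini-batch training.
shuffled = random.sample(segments, len(segments))
```

Because the starting condition travels with each segment, no hidden state has to be passed between segments, which is what removes the need for temporally ordered batches.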

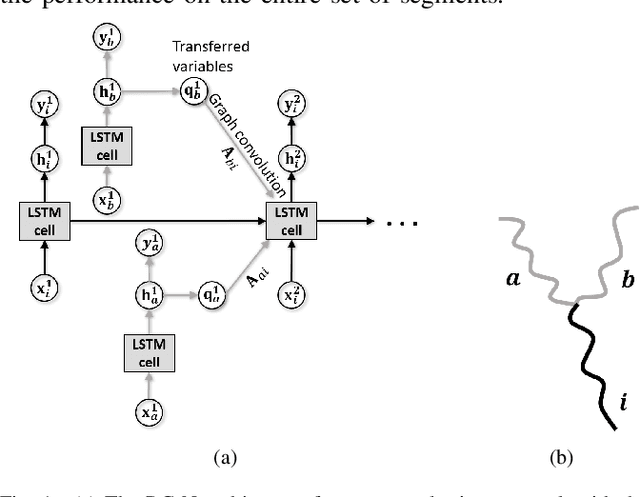

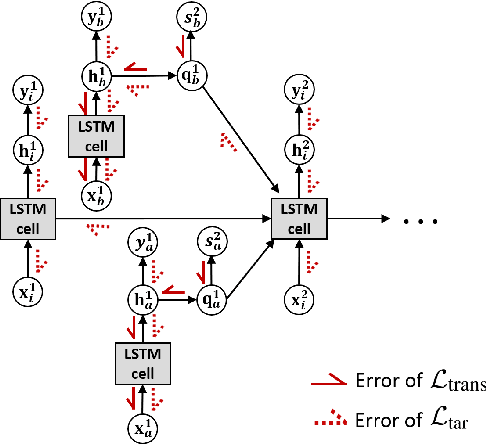

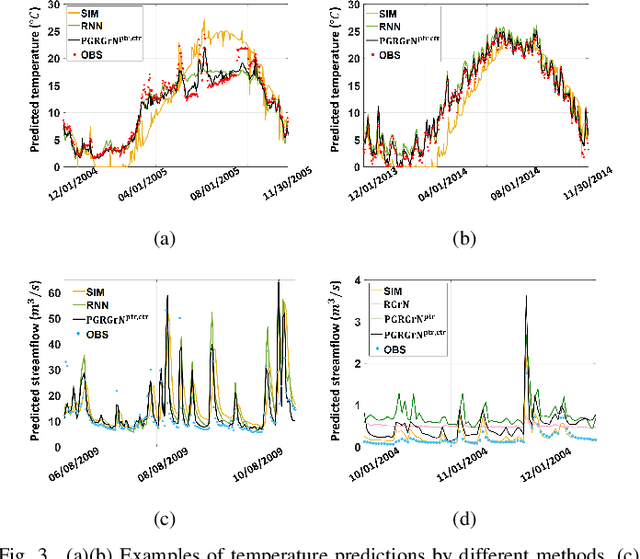

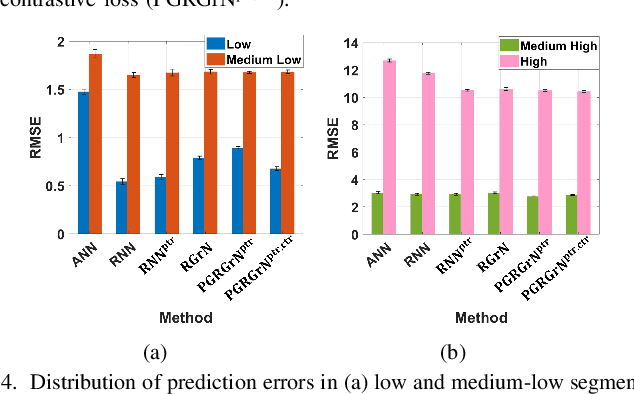

Physics-Guided Recurrent Graph Networks for Predicting Flow and Temperature in River Networks

Sep 26, 2020

Abstract:This paper proposes a physics-guided machine learning approach that combines advanced machine learning models and physics-based models to improve the prediction of water flow and temperature in river networks. We first build a recurrent graph network model to capture the interactions among multiple segments in the river network. Then we present a pre-training technique which transfers knowledge from physics-based models to initialize the machine learning model and learn the physics of streamflow and thermodynamics. We also propose a new loss function that balances the performance over different river segments. We demonstrate the effectiveness of the proposed method in predicting temperature and streamflow in a subset of the Delaware River Basin. In particular, we show that the proposed method brings a 33\%/14\% improvement over the state-of-the-art physics-based model and 24\%/14\% over traditional machine learning models (e.g., Long Short-Term Memory neural networks) in temperature/streamflow prediction using very sparse (0.1\%) observation data for training. The proposed method has also been shown to produce better performance when generalized to different seasons or river segments with different streamflow ranges.

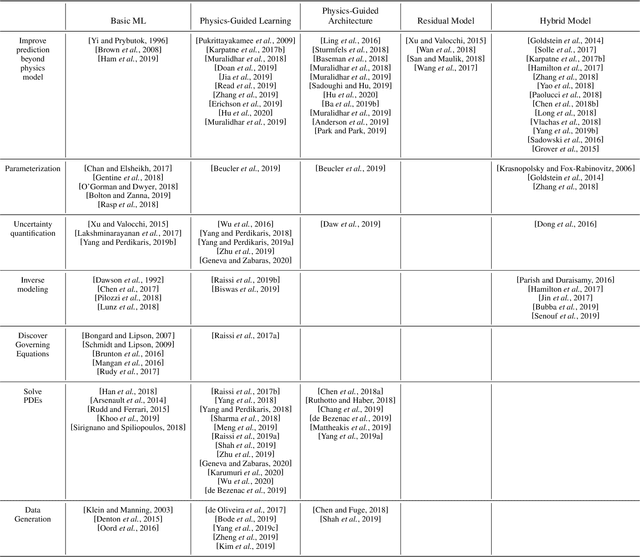

Integrating Physics-Based Modeling with Machine Learning: A Survey

Apr 01, 2020

Abstract:In this manuscript, we provide a structured and comprehensive overview of techniques to integrate machine learning with physics-based modeling. First, we provide a summary of application areas for which these approaches have been applied. Then, we describe classes of methodologies used to construct physics-guided machine learning models and hybrid physics-machine learning frameworks from a machine learning standpoint. With this foundation, we then provide a systematic organization of these existing techniques and discuss ideas for future research.

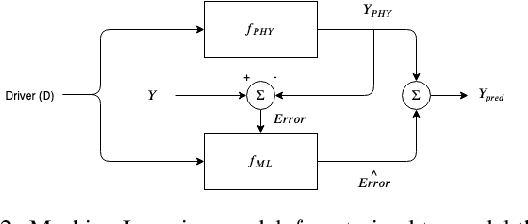

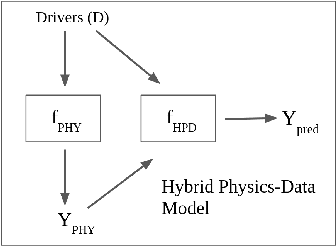

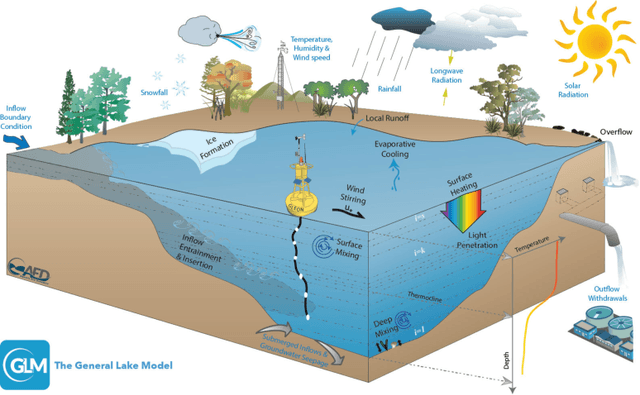

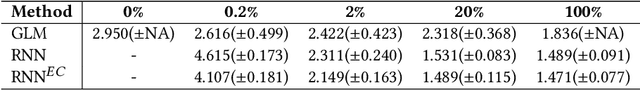

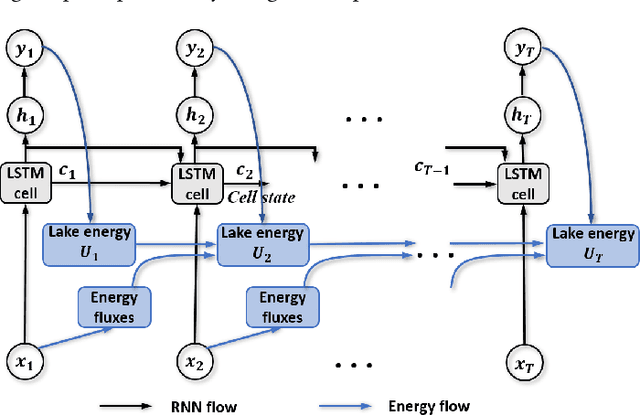

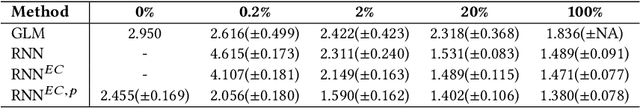

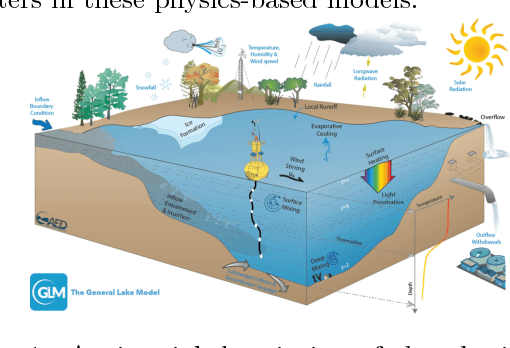

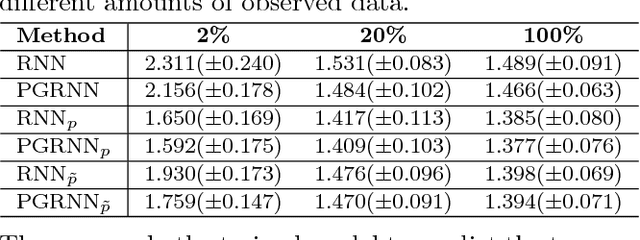

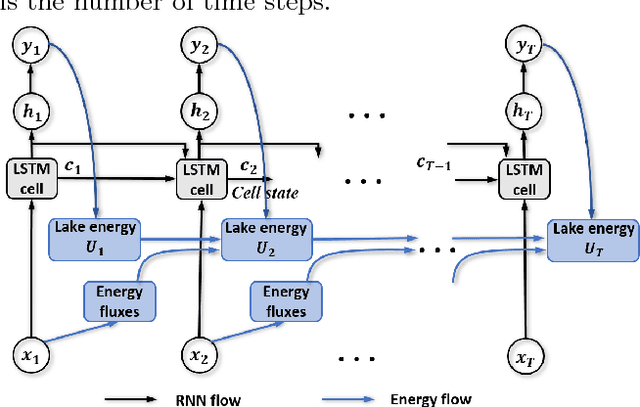

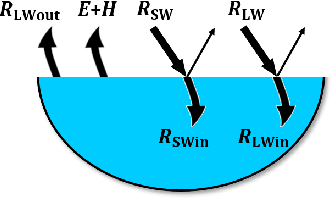

Physics-Guided Machine Learning for Scientific Discovery: An Application in Simulating Lake Temperature Profiles

Jan 28, 2020

Abstract:Physics-based models of dynamical systems are often used to study engineering and environmental systems. Despite their extensive use, these models have several well-known limitations due to simplified representations of the physical processes being modeled or challenges in selecting appropriate parameters. While state-of-the-art machine learning models can sometimes outperform physics-based models given ample training data, they can produce results that are physically inconsistent. This paper proposes a physics-guided recurrent neural network model (PGRNN) that combines RNNs and physics-based models to leverage their complementary strengths and improve the modeling of physical processes. Specifically, we show that a PGRNN can improve prediction accuracy over that of physics-based models, while generating outputs consistent with physical laws. An important aspect of our PGRNN approach lies in its ability to incorporate the knowledge encoded in physics-based models. This allows training the PGRNN model using very few true observed data while also ensuring high prediction accuracy. Although we present and evaluate this methodology in the context of modeling the dynamics of temperature in lakes, it is applicable more widely to a range of scientific and engineering disciplines where physics-based (also known as mechanistic) models are used, e.g., climate science, materials science, computational chemistry, and biomedicine.

Physics Guided RNNs for Modeling Dynamical Systems: A Case Study in Simulating Lake Temperature Profiles

Oct 31, 2018

Abstract:This paper proposes a physics-guided recurrent neural network model (PGRNN) that combines RNNs and physics-based models to leverage their complementary strengths and improve the modeling of physical processes. Specifically, we show that a PGRNN can improve prediction accuracy over that of physical models, while generating outputs consistent with physical laws, and achieving good generalizability. Standard RNNs, even when producing superior prediction accuracy, often produce physically inconsistent results and lack generalizability. We further enhance this approach by using a pre-training method that leverages the simulated data from a physics-based model to address the scarcity of observed data. The PGRNN has the flexibility to incorporate additional physical constraints and we incorporate a density-depth relationship. Both enhancements further improve PGRNN performance. Although we present and evaluate this methodology in the context of modeling the dynamics of temperature in lakes, it is applicable more widely to a range of scientific and engineering disciplines where mechanistic (also known as process-based) models are used, e.g., power engineering, climate science, materials science, computational chemistry, and biomedicine.
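One way to picture the density-depth relationship mentioned in the abstract above is as a penalty on predicted temperature profiles in which water becomes less dense with depth. The sketch below is an illustration under stated assumptions, not the authors' loss: the function names are hypothetical, the temperature-to-density conversion is a commonly used empirical formula for fresh water that the abstract does not state, and the hinge-style penalty is one plausible form of such a constraint.

```python
from typing import Sequence


def water_density(temp_c: float) -> float:
    """Approximate fresh-water density (kg/m^3) at temperature temp_c (deg C).

    Assumption: an empirical formula with maximum density near 4 deg C;
    the source abstract does not specify which conversion is used.
    """
    return 1000.0 * (
        1.0
        - (temp_c + 288.9414) * (temp_c - 3.9863) ** 2
        / (508929.2 * (temp_c + 68.12963))
    )


def density_depth_penalty(temps_by_depth: Sequence[float]) -> float:
    """Mean violation of 'density does not decrease with depth'.

    temps_by_depth lists predicted temperatures from the surface downward.
    Each adjacent pair contributes max(0, density_above - density_below),
    so a physically consistent profile yields a penalty of zero.
    """
    densities = [water_density(t) for t in temps_by_depth]
    violations = [
        max(0.0, upper - lower)
        for upper, lower in zip(densities, densities[1:])
    ]
    return sum(violations) / len(violations)


# Toy profiles (hypothetical), listed from surface to bottom.
stable_profile = [25.0, 20.0, 10.0]    # warm water over cold: consistent
inverted_profile = [10.0, 20.0, 25.0]  # cold water over warm: penalized
```

In a training loop, a term like this would typically be added to the supervised prediction loss with a weighting factor, and it can be evaluated on unlabeled inputs because it needs no observed temperatures.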
