Continuous Coordination As a Realistic Scenario for Lifelong Learning

Mar 04, 2021Hadi Nekoei, Akilesh Badrinaaraayanan, Aaron Courville, Sarath Chandar

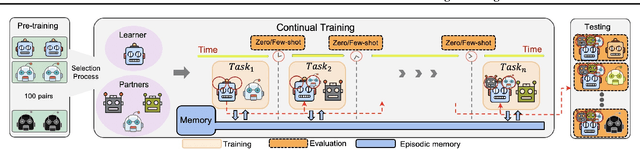

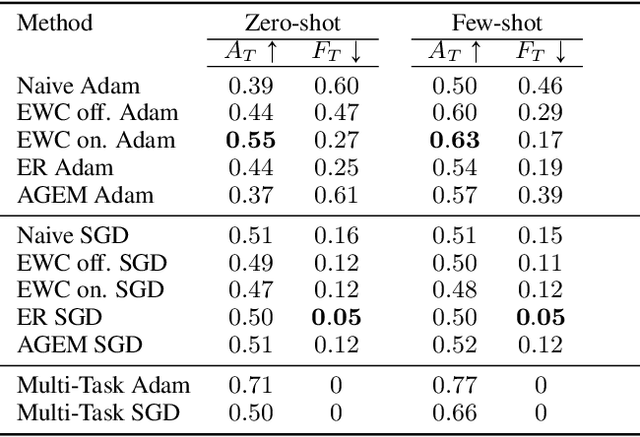

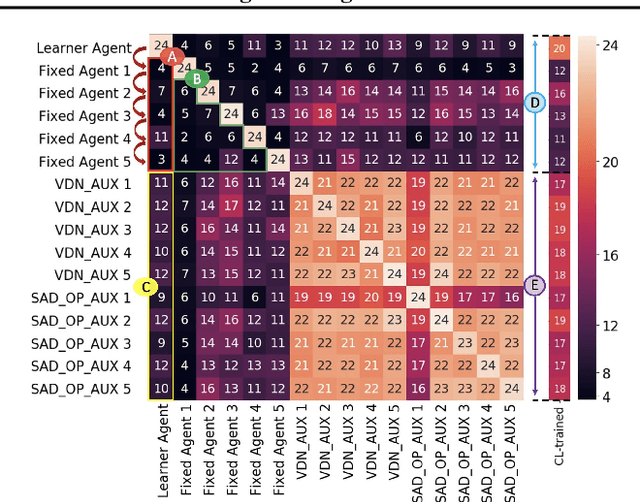

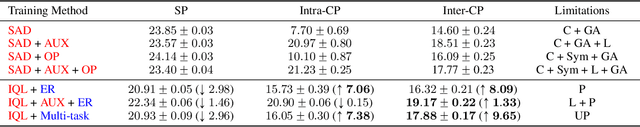

Current deep reinforcement learning (RL) algorithms are still highly task-specific and lack the ability to generalize to new environments. Lifelong learning (LLL), however, aims at solving multiple tasks sequentially by efficiently transferring and using knowledge between tasks. Despite a surge of interest in lifelong RL in recent years, the lack of a realistic testbed makes robust evaluation of LLL algorithms difficult. Multi-agent RL (MARL), on the other hand, can be seen as a natural scenario for lifelong RL due to its inherent non-stationarity, since the agents' policies change over time. In this work, we introduce a multi-agent lifelong learning testbed that supports both zero-shot and few-shot settings. Our setup is based on Hanabi -- a partially-observable, fully cooperative multi-agent game that has been shown to be challenging for zero-shot coordination. Its large strategy space makes it a desirable environment for lifelong RL tasks. We evaluate several recent MARL methods, and benchmark state-of-the-art LLL algorithms in limited memory and computation regimes to shed light on their strengths and weaknesses. This continual learning paradigm also provides us with a pragmatic way of going beyond centralized training which is the most commonly used training protocol in MARL. We empirically show that the agents trained in our setup are able to coordinate well with unseen agents, without any additional assumptions made by previous works.

IIRC: Incremental Implicitly-Refined Classification

Jan 11, 2021Mohamed Abdelsalam, Mojtaba Faramarzi, Shagun Sodhani, Sarath Chandar

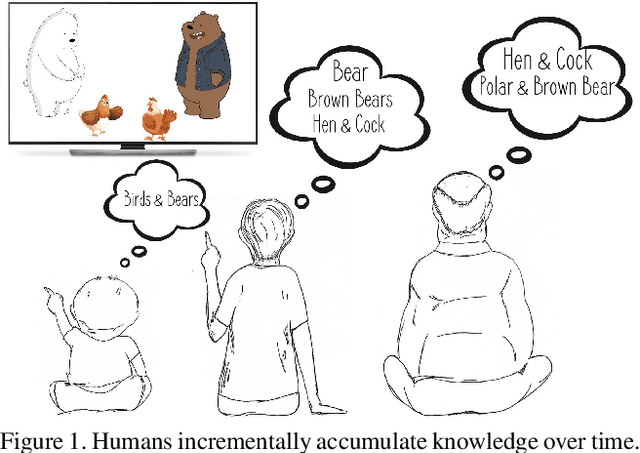

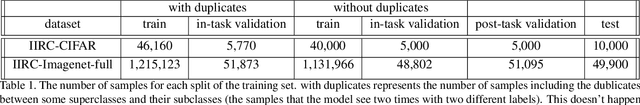

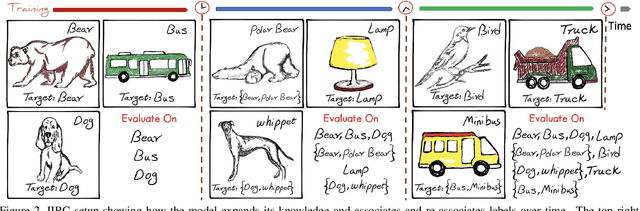

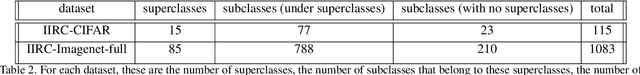

We introduce the "Incremental Implicitly-Refined Classi-fication (IIRC)" setup, an extension to the class incremental learning setup where the incoming batches of classes have two granularity levels. i.e., each sample could have a high-level (coarse) label like "bear" and a low-level (fine) label like "polar bear". Only one label is provided at a time, and the model has to figure out the other label if it has already learnfed it. This setup is more aligned with real-life scenarios, where a learner usually interacts with the same family of entities multiple times, discovers more granularity about them, while still trying not to forget previous knowledge. Moreover, this setup enables evaluating models for some important lifelong learning challenges that cannot be easily addressed under the existing setups. These challenges can be motivated by the example "if a model was trained on the class bear in one task and on polar bear in another task, will it forget the concept of bear, will it rightfully infer that a polar bear is still a bear? and will it wrongfully associate the label of polar bear to other breeds of bear?". We develop a standardized benchmark that enables evaluating models on the IIRC setup. We evaluate several state-of-the-art lifelong learning algorithms and highlight their strengths and limitations. For example, distillation-based methods perform relatively well but are prone to incorrectly predicting too many labels per image. We hope that the proposed setup, along with the benchmark, would provide a meaningful problem setting to the practitioners

Maximum Reward Formulation In Reinforcement Learning

Oct 08, 2020Sai Krishna Gottipati, Yashaswi Pathak, Rohan Nuttall, Sahir, Raviteja Chunduru, Ahmed Touati, Sriram Ganapathi Subramanian, Matthew E. Taylor, Sarath Chandar

Reinforcement learning (RL) algorithms typically deal with maximizing the expected cumulative return (discounted or undiscounted, finite or infinite horizon). However, several crucial applications in the real world, such as drug discovery, do not fit within this framework because an RL agent only needs to identify states (molecules) that achieve the highest reward within a trajectory and does not need to optimize for the expected cumulative return. In this work, we formulate an objective function to maximize the expected maximum reward along a trajectory, derive a novel functional form of the Bellman equation, introduce the corresponding Bellman operators, and provide a proof of convergence. Using this formulation, we achieve state-of-the-art results on the task of molecule generation that mimics a real-world drug discovery pipeline.

MLMLM: Link Prediction with Mean Likelihood Masked Language Model

Sep 15, 2020Louis Clouatre, Philippe Trempe, Amal Zouaq, Sarath Chandar

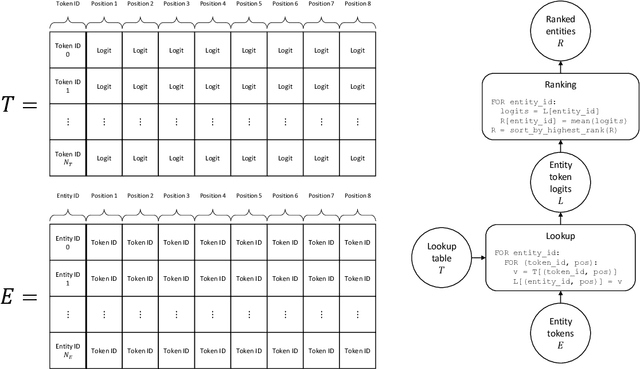

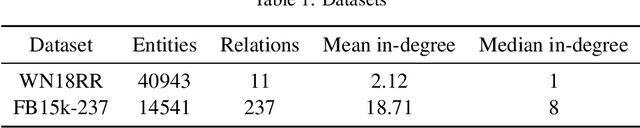

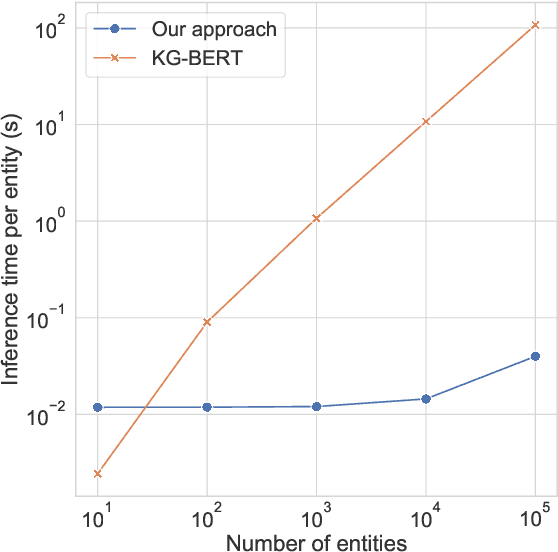

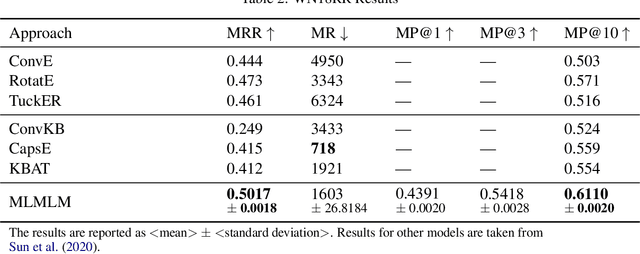

Knowledge Bases (KBs) are easy to query, verifiable, and interpretable. They however scale with man-hours and high-quality data. Masked Language Models (MLMs), such as BERT, scale with computing power as well as unstructured raw text data. The knowledge contained within those models is however not directly interpretable. We propose to perform link prediction with MLMs to address both the KBs scalability issues and the MLMs interpretability issues. To do that we introduce MLMLM, Mean Likelihood Masked Language Model, an approach comparing the mean likelihood of generating the different entities to perform link prediction in a tractable manner. We obtain State of the Art (SotA) results on the WN18RR dataset and the best non-entity-embedding based results on the FB15k-237 dataset. We also obtain convincing results on link prediction on previously unseen entities, making MLMLM a suitable approach to introducing new entities to a KB.

How To Evaluate Your Dialogue System: Probe Tasks as an Alternative for Token-level Evaluation Metrics

Aug 24, 2020Prasanna Parthasarathi, Joelle Pineau, Sarath Chandar

Though generative dialogue modeling is widely seen as a language modeling task, the task demands an agent to have a complex natural language understanding of its input text to carry a meaningful interaction with an user. The automatic metrics used evaluate the quality of the generated text as a proxy to the holistic interaction of the agent. Such metrics were earlier shown to not correlate with the human judgement. In this work, we observe that human evaluation of dialogue agents can be inconclusive due to the lack of sufficient information for appropriate evaluation. The automatic metrics are deterministic yet shallow and human evaluation can be relevant yet inconclusive. To bridge this gap in evaluation, we propose designing a set of probing tasks to evaluate dialogue models. The hand-crafted tasks are aimed at quantitatively evaluating a generative dialogue model's understanding beyond the token-level evaluation on the generated text. The probing tasks are deterministic like automatic metrics and requires human judgement in their designing; benefiting from the best of both worlds. With experiments on probe tasks we observe that, unlike RNN based architectures, transformer model may not be learning to comprehend the input text despite its generated text having higher overlap with the target text.

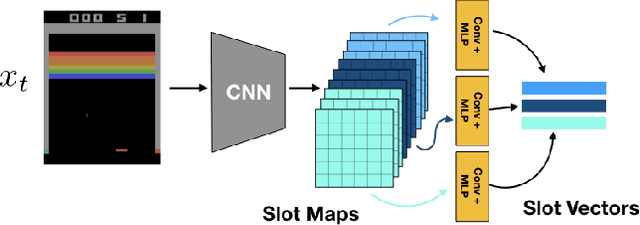

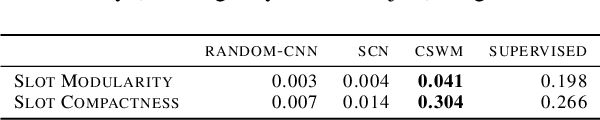

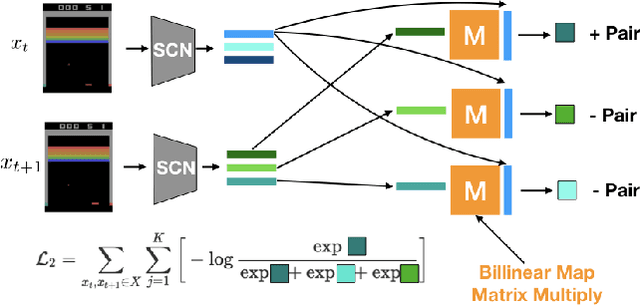

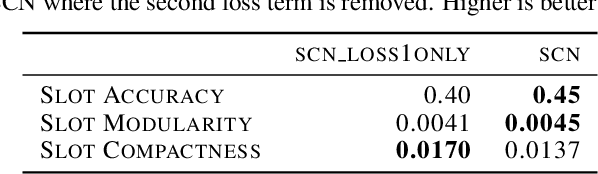

Slot Contrastive Networks: A Contrastive Approach for Representing Objects

Jul 18, 2020Evan Racah, Sarath Chandar

Unsupervised extraction of objects from low-level visual data is an important goal for further progress in machine learning. Existing approaches for representing objects without labels use structured generative models with static images. These methods focus a large amount of their capacity on reconstructing unimportant background pixels, missing low contrast or small objects. Conversely, we present a new method that avoids losses in pixel space and over-reliance on the limited signal a static image provides. Our approach takes advantage of objects' motion by learning a discriminative, time-contrastive loss in the space of slot representations, attempting to force each slot to not only capture entities that move, but capture distinct objects from the other slots. Moreover, we introduce a new quantitative evaluation metric to measure how "diverse" a set of slot vectors are, and use it to evaluate our model on 20 Atari games.

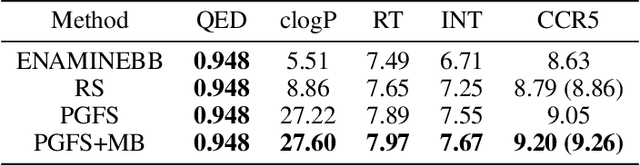

The LoCA Regret: A Consistent Metric to Evaluate Model-Based Behavior in Reinforcement Learning

Jul 07, 2020Harm van Seijen, Hadi Nekoei, Evan Racah, Sarath Chandar

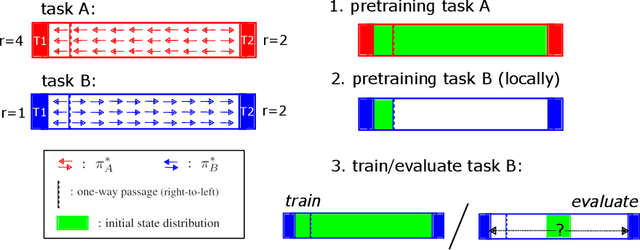

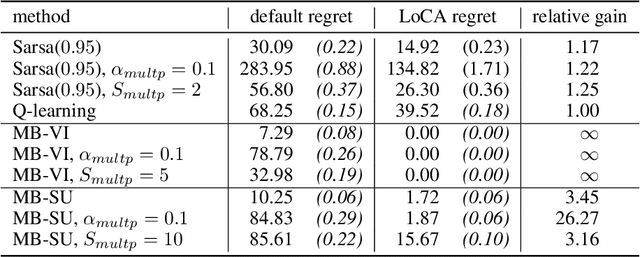

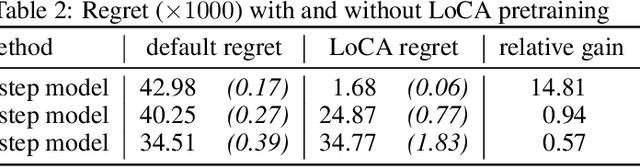

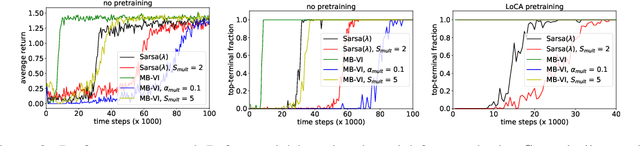

Deep model-based Reinforcement Learning (RL) has the potential to substantially improve the sample-efficiency of deep RL. While various challenges have long held it back, a number of papers have recently come out reporting success with deep model-based methods. This is a great development, but the lack of a consistent metric to evaluate such methods makes it difficult to compare various approaches. For example, the common single-task sample-efficiency metric conflates improvements due to model-based learning with various other aspects, such as representation learning, making it difficult to assess true progress on model-based RL. To address this, we introduce an experimental setup to evaluate model-based behavior of RL methods, inspired by work from neuroscience on detecting model-based behavior in humans and animals. Our metric based on this setup, the Local Change Adaptation (LoCA) regret, measures how quickly an RL method adapts to a local change in the environment. Our metric can identify model-based behavior, even if the method uses a poor representation and provides insight in how close a method's behavior is from optimal model-based behavior. We use our setup to evaluate the model-based behavior of MuZero on a variation of the classic Mountain Car task.

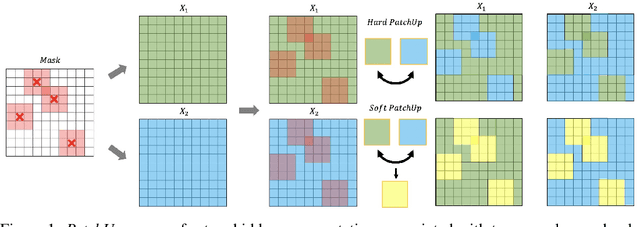

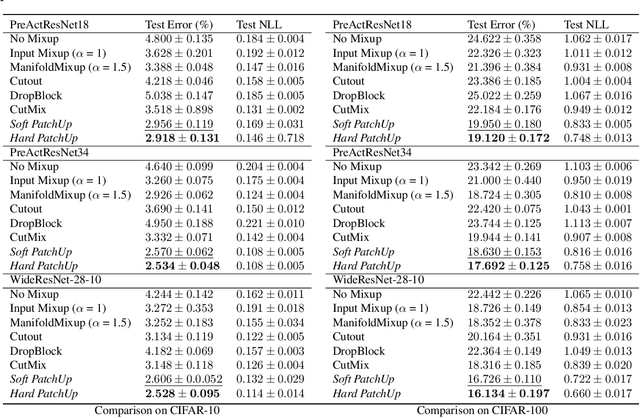

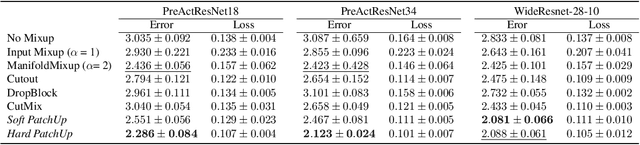

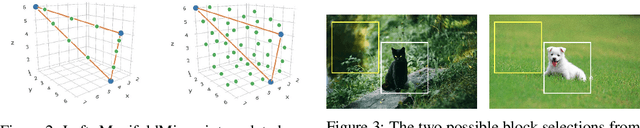

PatchUp: A Regularization Technique for Convolutional Neural Networks

Jun 14, 2020Mojtaba Faramarzi, Mohammad Amini, Akilesh Badrinaaraayanan, Vikas Verma, Sarath Chandar

Large capacity deep learning models are often prone to a high generalization gap when trained with a limited amount of labeled training data. A recent class of methods to address this problem uses various ways to construct a new training sample by mixing a pair (or more) of training samples. We propose PatchUp, a hidden state block-level regularization technique for Convolutional Neural Networks (CNNs), that is applied on selected contiguous blocks of feature maps from a random pair of samples. Our approach improves the robustness of CNN models against the manifold intrusion problem that may occur in other state-of-the-art mixing approaches like Mixup and CutMix. Moreover, since we are mixing the contiguous block of features in the hidden space, which has more dimensions than the input space, we obtain more diverse samples for training towards different dimensions. Our experiments on CIFAR-10, CIFAR-100, and SVHN datasets with PreactResnet18, PreactResnet34, and WideResnet-28-10 models show that PatchUp improves upon, or equals, the performance of current state-of-the-art regularizers for CNNs. We also show that PatchUp can provide better generalization to affine transformations of samples and is more robust against adversarial attacks.

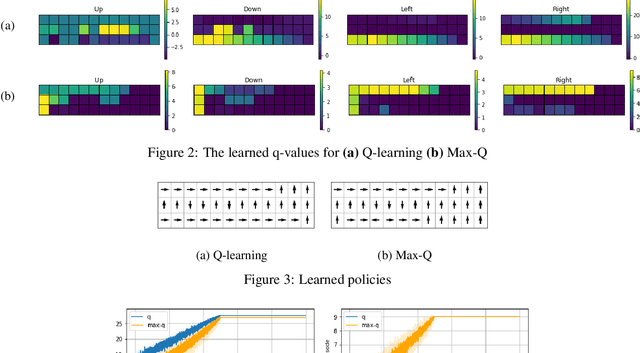

Learning To Navigate The Synthetically Accessible Chemical Space Using Reinforcement Learning

May 20, 2020Sai Krishna Gottipati, Boris Sattarov, Sufeng Niu, Yashaswi Pathak, Haoran Wei, Shengchao Liu, Karam M. J. Thomas, Simon Blackburn, Connor W. Coley, Jian Tang, Sarath Chandar, Yoshua Bengio

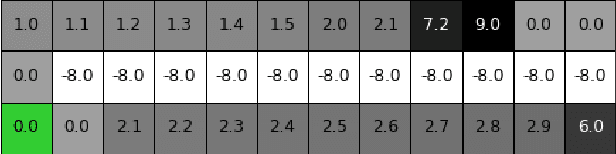

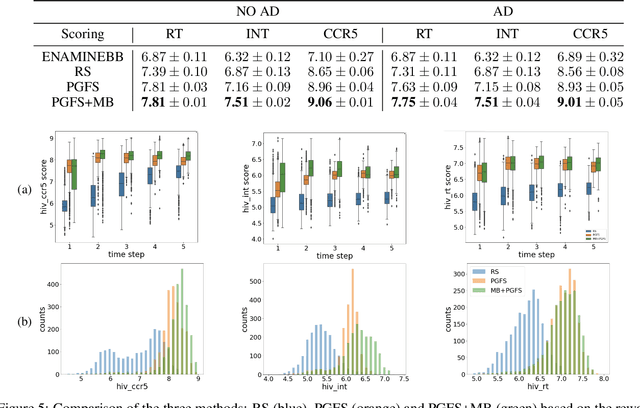

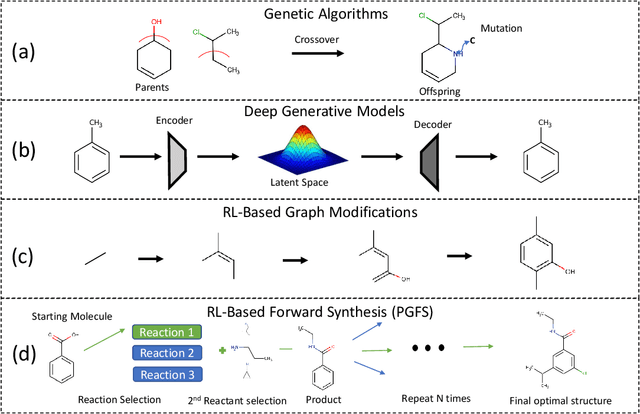

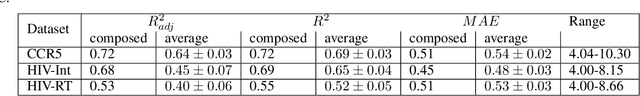

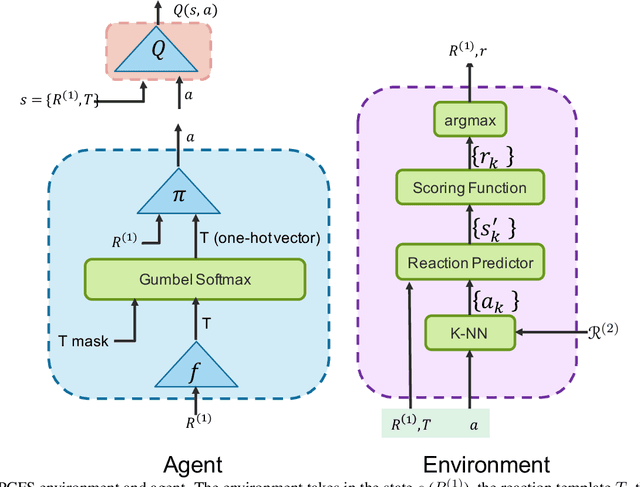

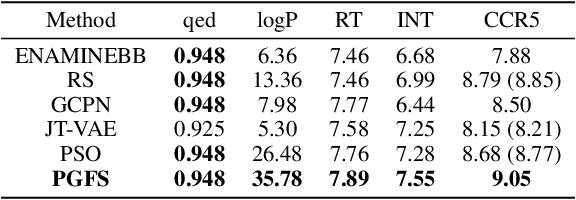

Over the last decade, there has been significant progress in the field of machine learning for de novo drug design, particularly in deep generative models. However, current generative approaches exhibit a significant challenge as they do not ensure that the proposed molecular structures can be feasibly synthesized nor do they provide the synthesis routes of the proposed small molecules, thereby seriously limiting their practical applicability. In this work, we propose a novel forward synthesis framework powered by reinforcement learning (RL) for de novo drug design, Policy Gradient for Forward Synthesis (PGFS), that addresses this challenge by embedding the concept of synthetic accessibility directly into the de novo drug design system. In this setup, the agent learns to navigate through the immense synthetically accessible chemical space by subjecting commercially available small molecule building blocks to valid chemical reactions at every time step of the iterative virtual multi-step synthesis process. The proposed environment for drug discovery provides a highly challenging test-bed for RL algorithms owing to the large state space and high-dimensional continuous action space with hierarchical actions. PGFS achieves state-of-the-art performance in generating structures with high QED and penalized clogP. Moreover, we validate PGFS in an in-silico proof-of-concept associated with three HIV targets. Finally, we describe how the end-to-end training conceptualized in this study represents an important paradigm in radically expanding the synthesizable chemical space and automating the drug discovery process.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge