Richard Voyles

Coaching a Robotic Sonographer: Learning Robotic Ultrasound with Sparse Expert's Feedback

Sep 03, 2024

Abstract: Ultrasound is widely employed for clinical intervention and diagnosis because it offers non-invasive, radiation-free, real-time imaging. However, the accessibility of this dexterous procedure is limited by the substantial training and expertise required of operators. Robotic ultrasound (RUS) offers a viable solution to this limitation; nonetheless, achieving human-level proficiency remains challenging. Learning from demonstrations (LfD) methods have been explored in RUS; these learn a policy prior from a dataset of offline demonstrations to encode the mental model of the expert sonographer. However, active engagement of experts during the training of RUS, i.e., coaching, has not been explored thus far. Coaching is known to enhance efficiency and performance in human training. This paper proposes a coaching framework for RUS to amplify its performance. The framework combines deep reinforcement learning (DRL), which serves as self-supervised practice, with sparse expert feedback through coaching. The DRL employs an off-policy Soft Actor-Critic (SAC) network, with a reward based on image quality rating. The coaching by experts is modeled as a Partially Observable Markov Decision Process (POMDP), which updates the policy parameters based on the correction by the expert. The validation study on phantoms showed that coaching increases the learning rate by $25\%$ and the number of high-quality image acquisitions by $74.5\%$.

UltraGelBot: Autonomous Gel Dispenser for Robotic Ultrasound

Jun 28, 2024

Abstract: Telerobotic and autonomous Robotic Ultrasound Systems (RUS) help reduce operator dependence in free-hand ultrasound examinations. However, state-of-the-art RUSs still rely on a human operator to apply the ultrasound gel. The lack of standardization in this process often leads to poor imaging of the scanned region because of air gaps between the probe and the human body. In this paper, we develop an end-of-arm tool for RUS, referred to as UltraGelBot, that can autonomously detect and dispense the gel. It uses a deep learning model to detect the gel in images acquired with an on-board camera. A motorized mechanism then uses this feedback to dispense the gel. Phantom experiments revealed that UltraGelBot increases the acquired image quality by $18.6\%$ and reduces the procedure time by $37.2\%$.

Deep Kernel and Image Quality Estimators for Optimizing Robotic Ultrasound Controller using Bayesian Optimization

Oct 11, 2023

Abstract: Ultrasound is a commonly used medical imaging modality that requires expert sonographers to manually maneuver the ultrasound probe based on the acquired image. Autonomous Robotic Ultrasound (A-RUS) is an appealing alternative to this manual procedure that can reduce sonographers' workload. The key challenge in A-RUS is optimizing the ultrasound image quality for the region of interest across different patients. This requires knowledge of anatomy, recognition of error sources, and precise probe position, orientation, and pressure. Sample efficiency is important when optimizing these parameters of the robotized probe controller. Bayesian Optimization (BO), a sample-efficient optimization framework, has recently been applied to optimize the 2D motion of the probe. Nevertheless, the sample efficiency needs further improvement for high-dimensional control of the probe. We aim to overcome this problem by using a neural network to learn a low-dimensional kernel in BO, termed the Deep Kernel (DK). The neural network of the DK is trained using probe and image data acquired during the procedure. Two image quality estimators are proposed that use a deep convolutional neural network and provide real-time feedback to the BO. We validated our framework using these two feedback functions on three urinary bladder phantoms. We obtained an increase of over 50% in sample efficiency for 6D control of the robotized probe. Furthermore, our results indicate that this performance enhancement in BO is independent of the specific training dataset, demonstrating inter-patient adaptability.
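The deep-kernel idea in this abstract can be illustrated with a minimal sketch: a network maps the 6D probe pose to a low-dimensional latent space, and a standard kernel is evaluated there. Everything below is an assumption for illustration only: the layer sizes, the tanh activation, the RBF base kernel, and the names `make_feature_map` and `deep_kernel` are hypothetical, and the weights are random rather than trained on probe and image data as the paper describes.

```python
import math
import random


def make_feature_map(in_dim=6, hidden=8, out_dim=2, seed=0):
    """Build a tiny two-layer network g: R^in_dim -> R^out_dim.

    Assumption: weights are random and untrained; in the paper the
    network is trained on probe and image data.
    """
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 1 / math.sqrt(in_dim)) for _ in range(in_dim)]
          for _ in range(hidden)]
    w2 = [[rng.gauss(0, 1 / math.sqrt(hidden)) for _ in range(hidden)]
          for _ in range(out_dim)]

    def g(x):
        # Hidden layer with tanh activation, then a linear output layer.
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
        return [sum(w * hi for w, hi in zip(row, h)) for row in w2]

    return g


def deep_kernel(x1, x2, g, length=1.0):
    """RBF kernel evaluated on the latent features g(x1) and g(x2)."""
    z1, z2 = g(x1), g(x2)
    sq_dist = sum((a - b) ** 2 for a, b in zip(z1, z2))
    return math.exp(-0.5 * sq_dist / length ** 2)


# Usage: similarity between two hypothetical 6D probe poses
# (position, orientation, and pressure-related components).
g = make_feature_map()
pose_a = [0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
pose_b = [0.5, -0.2, 0.3, 0.0, 0.1, 0.9]
similarity = deep_kernel(pose_a, pose_b, g)
```

Because the base kernel only sees the 2D latent vector, the Gaussian process in BO works in a lower-dimensional space than the 6D control input, which is the intuition behind the sample-efficiency gain the abstract reports.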

Expert-Agnostic Ultrasound Image Quality Assessment using Deep Variational Clustering

Jul 06, 2023

Abstract: Ultrasound imaging is a commonly used modality for several diagnostic and therapeutic procedures. However, diagnosis by ultrasound relies heavily on the quality of images assessed manually by sonographers, which diminishes the objectivity of the diagnosis and makes it operator-dependent. Supervised learning-based methods for automated quality assessment require manually annotated datasets, which are highly labour-intensive to acquire. These ultrasound images are low in quality and suffer from noisy annotations caused by inter-observer perceptual variations, which hampers learning efficiency. We propose an UnSupervised UltraSound image Quality assessment Network, US2QNet, that eliminates the burden and uncertainty of manual annotations. US2QNet uses a variational autoencoder embedded with three modules (pre-processing, clustering, and post-processing) to jointly enhance, extract, cluster, and visualize the quality feature representation of ultrasound images. The pre-processing module filters the images to direct the network's attention towards salient quality features rather than noise. The post-processing module visualizes the clusters of feature representations in 2D space. We validated the proposed framework for quality assessment of urinary bladder ultrasound images. The proposed framework achieved 78% accuracy and superior performance to state-of-the-art clustering methods.

Robotic Sonographer: Autonomous Robotic Ultrasound using Domain Expertise in Bayesian Optimization

Jul 05, 2023

Abstract: Ultrasound is a vital imaging modality utilized for a variety of diagnostic and interventional procedures. However, an expert sonographer is required to make accurate maneuvers of the probe over the human body while making sense of the ultrasound images for diagnostic purposes. This procedure requires a substantial amount of training and up to a few years of experience. In this paper, we propose an autonomous robotic ultrasound system that uses Bayesian Optimization (BO) in combination with domain expertise to predict and effectively scan the regions where diagnostic-quality ultrasound images can be acquired. The quality map, which is a distribution of image quality over a scanning region, is estimated using a Gaussian process in BO. This relies on a prior quality map modeled using an expert's demonstration of high-quality probing maneuvers. The ultrasound image quality feedback provided to BO is estimated using a deep convolutional neural network model. This model was previously trained on a database of images labelled for diagnostic quality by expert radiologists. Experiments on three different urinary bladder phantoms validated that the proposed autonomous ultrasound system can acquire ultrasound images for diagnostic purposes with a probing position and force accuracy of 98.7% and 97.8%, respectively.
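As a rough illustration of how a Gaussian process with an expert-derived prior can drive the search for high-quality probe positions, the sketch below runs BO over a single probe coordinate. It is a toy under stated assumptions, not the paper's system: the upper-confidence-bound acquisition rule, the RBF kernel and its length scale, and the functions `toy_quality` and `expert_prior` are all hypothetical stand-ins (the paper obtains quality feedback from a trained convolutional network and the prior from expert demonstrations).

```python
import math


def rbf(a, b, length=0.15):
    """Squared-exponential kernel between two scalar probe positions."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def posterior(x, X, y, prior_mean, noise=1e-4):
    """GP posterior mean and variance of the quality map at position x."""
    if not X:
        return prior_mean(x), 1.0
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    k_star = [rbf(x, a) for a in X]
    # Model the residual between observations and the expert prior.
    resid = [yi - prior_mean(xi) for xi, yi in zip(X, y)]
    alpha = solve(K, resid)
    v = solve(K, k_star)
    mean = prior_mean(x) + sum(k * a for k, a in zip(k_star, alpha))
    var = max(1.0 - sum(k * vi for k, vi in zip(k_star, v)), 0.0)
    return mean, var


def optimize(quality, prior_mean, grid, n_iter=15, beta=2.0):
    """Pick probe positions by maximizing an upper confidence bound (assumed rule)."""
    X, y = [], []
    for _ in range(n_iter):
        def ucb(x):
            m, v = posterior(x, X, y, prior_mean)
            return m + beta * math.sqrt(v)
        x_next = max(grid, key=ucb)
        X.append(x_next)
        y.append(quality(x_next))
    best = max(range(len(X)), key=lambda i: y[i])
    return X[best], y[best]


def toy_quality(x):
    """Hypothetical image-quality score, peaked at x = 0.62."""
    return math.exp(-((x - 0.62) / 0.1) ** 2)


def expert_prior(x):
    """Hypothetical prior quality map, centred slightly off the true peak."""
    return 0.5 * math.exp(-((x - 0.55) / 0.2) ** 2)


grid = [i / 50 for i in range(51)]
best_x, best_q = optimize(toy_quality, expert_prior, grid)
```

With the prior as the GP mean, the first query lands where the expert expected good images, and later queries trade off exploring uncertain regions against refining around the best scores seen so far.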

From the DESK to the Battlefield -- A Robotics Exploratory Study

Nov 30, 2020

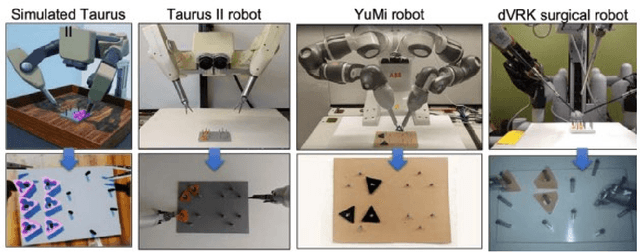

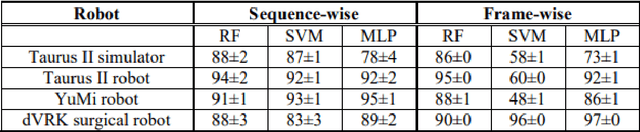

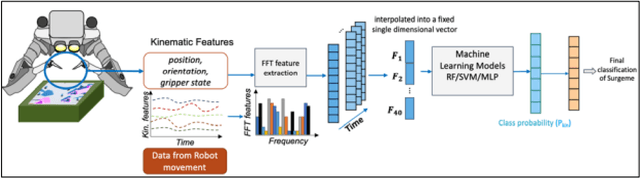

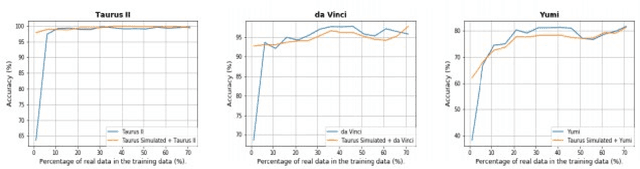

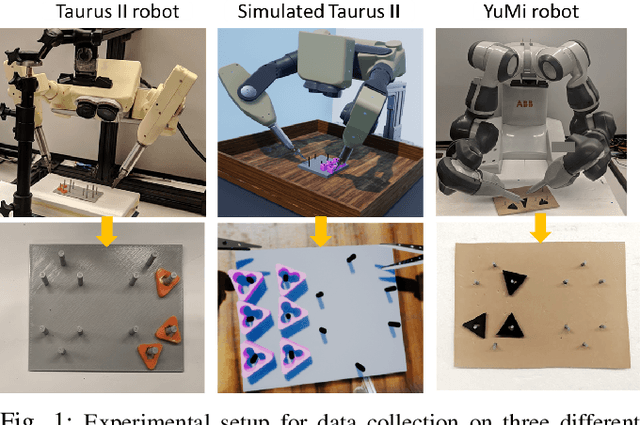

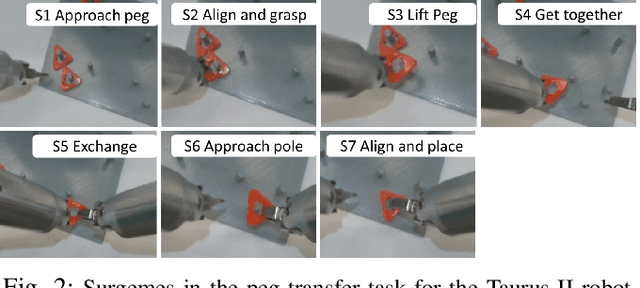

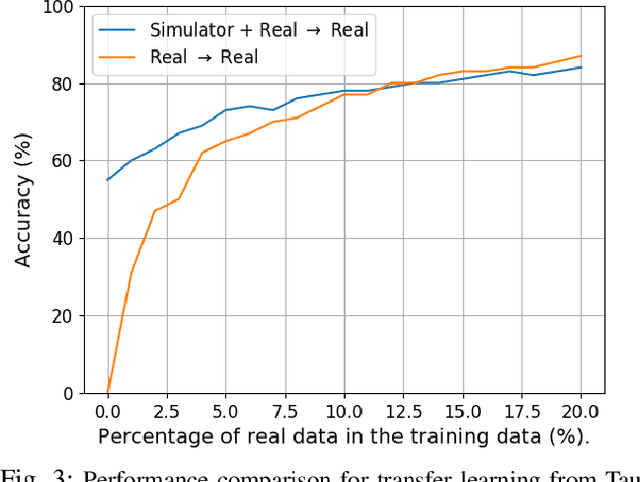

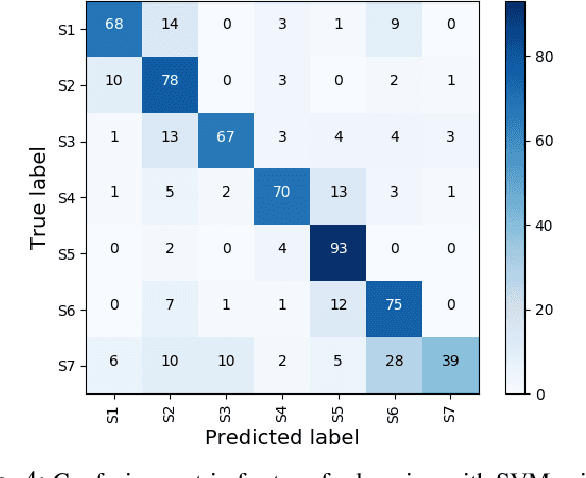

Abstract: Short response time is critical for future military medical operations in austere settings or remote areas. Effective patient care at the point of injury can greatly benefit from the integration of semi-autonomous robotic systems. To achieve autonomy, robots would require massive libraries of maneuvers. While this is possible in controlled settings, obtaining surgical data in austere settings can be difficult. Hence, in this paper, we present the Dexterous Surgical Skill (DESK) database for knowledge transfer between robots. The peg transfer task was selected as it is one of the 6 main tasks of laparoscopic training. We also provide an ML framework to evaluate novel transfer learning methodologies on this database. The collected DESK dataset comprises a set of surgical robotic skills using four robotic platforms: Taurus II, simulated Taurus II, YuMi, and the da Vinci Research Kit. We then explored two learning scenarios: no-transfer and domain-transfer. In the no-transfer scenario, the training and testing data were obtained from the same domain, whereas in the domain-transfer scenario, the training data is a blend of simulated and real robot data that is tested on a real robot. Using simulation data enhances the performance of the real robot where limited or no real data is available. The transfer model showed an accuracy of 81% for the YuMi robot when the ratio of real-to-simulated data was 22%-78%. For the Taurus II and da Vinci robots, the model showed an accuracy of 97.5% and 93%, respectively, when trained only with simulation data. Results indicate that simulation can be used to augment training data to enhance the performance of models in real scenarios. This shows the potential for future use of surgical data from the operating room in deployable surgical robots in remote areas.

* First 3 authors share equal contribution
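The domain-transfer scenario above trains on a blend of real and simulated samples at a fixed ratio (for example, 22% real and 78% simulated for YuMi). A minimal sketch of building such a blend follows; the function name `blend_training_set`, the tuple-based sample format, and the pool sizes are hypothetical and not taken from the DESK tooling.

```python
import random


def blend_training_set(real, simulated, real_fraction, total, seed=0):
    """Draw a shuffled training set with a fixed real-to-simulated ratio.

    real_fraction is the share of real-robot samples, e.g. 0.22.
    """
    rng = random.Random(seed)
    n_real = round(total * real_fraction)
    n_sim = total - n_real
    if n_real > len(real) or n_sim > len(simulated):
        raise ValueError("not enough samples to reach the requested blend")
    mixed = rng.sample(real, n_real) + rng.sample(simulated, n_sim)
    rng.shuffle(mixed)
    return mixed


# Usage with placeholder samples tagged by their domain.
real_pool = [("real", i) for i in range(50)]
sim_pool = [("sim", i) for i in range(200)]
train = blend_training_set(real_pool, sim_pool, real_fraction=0.22, total=100)
```

Setting `real_fraction=0.0` gives the simulation-only training condition mentioned for the Taurus II and da Vinci results.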

Robotic Materials

Mar 25, 2019

Abstract: The Computing Community Consortium (CCC) sponsored a workshop on "Robotic Materials" in Washington, DC, that was held from April 23-24, 2018. This workshop was the second in a series of interdisciplinary workshops aimed at transforming our notion of materials to become "robotic", that is, to have the ability to sense and impact their environment. Results of the first workshop, held from March 10-12, 2017, at the University of Colorado, have been summarized in a visioning paper (Correll, 2017) and have identified the key technological challenges of "Robotic Materials", namely the ability to create smart functionality with a minimum of additional wiring by relying on wireless power and communication. The goal of this second workshop was to turn these findings into recommendations for government action. Computation will become an important part of future material systems and will allow materials to analyze, change, store, and communicate state in ways that are not possible using mechanical or chemical processes alone. What "computation" is, and what its possibilities are, is unclear to most material scientists, while computer scientists are largely unaware of recent advances in so-called active and smart materials. This gap is currently shrinking, with computer scientists embracing neural networks and material scientists actively researching novel substrates such as memristors and other neuromorphic computing devices. Further pursuing these ideas will require an emphasis on interdisciplinary collaboration between chemists, engineers, and computer scientists, possibly elevating humankind to a new material age that is as disruptive as the leap from the stone age to the plastic age.

DESK: A Robotic Activity Dataset for Dexterous Surgical Skills Transfer to Medical Robots

Mar 03, 2019

Abstract: Datasets are an essential component for training effective machine learning models. In particular, surgical robotic datasets have been key to many advances in semi-autonomous surgeries, skill assessment, and training. Simulated surgical environments can enhance the data collection process by making it faster, simpler, and cheaper than with real systems. In addition, combining data from multiple robotic domains can provide rich and diverse training data for transfer learning algorithms. In this paper, we present the DESK (Dexterous Surgical Skill) dataset. It comprises a set of surgical robotic skills collected during a surgical training task using three robotic platforms: the Taurus II robot, the simulated Taurus II robot, and the YuMi robot. This dataset was used to test the idea of transferring knowledge across different domains (e.g., from the Taurus to the YuMi robot) for a surgical gesture classification task with seven gestures. We explored three scenarios: 1) no transfer, 2) transfer from the simulated Taurus to the real Taurus, and 3) transfer from the simulated Taurus to the YuMi robot. We conducted extensive experiments with three supervised learning models and provided baselines in each of these scenarios. Results show that using simulation data during training enhances the performance on the real robot where limited real data is available. In particular, we obtained an accuracy of 55% on the real Taurus data using a model that is trained only on the simulator data. Furthermore, we achieved an accuracy improvement of 34% when 3% of the real data is added into the training process.
