Glebys Gonzalez

Pose Imitation Constraints for Collaborative Robots

Oct 12, 2020

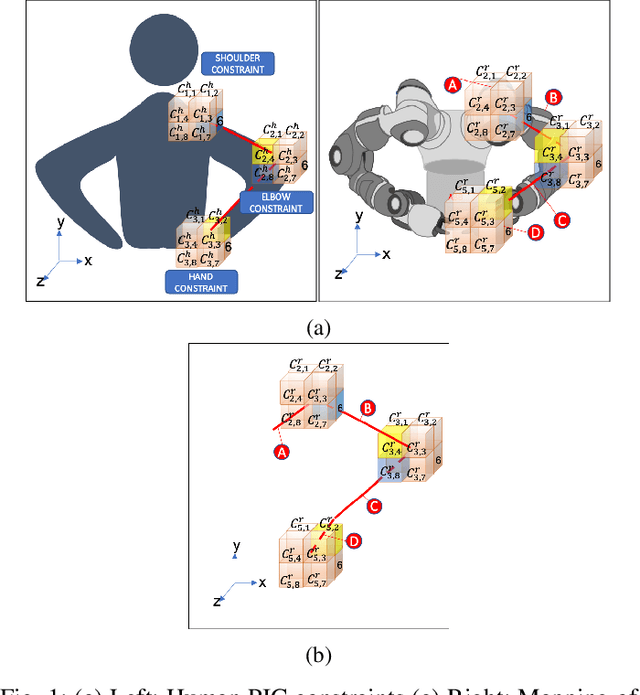

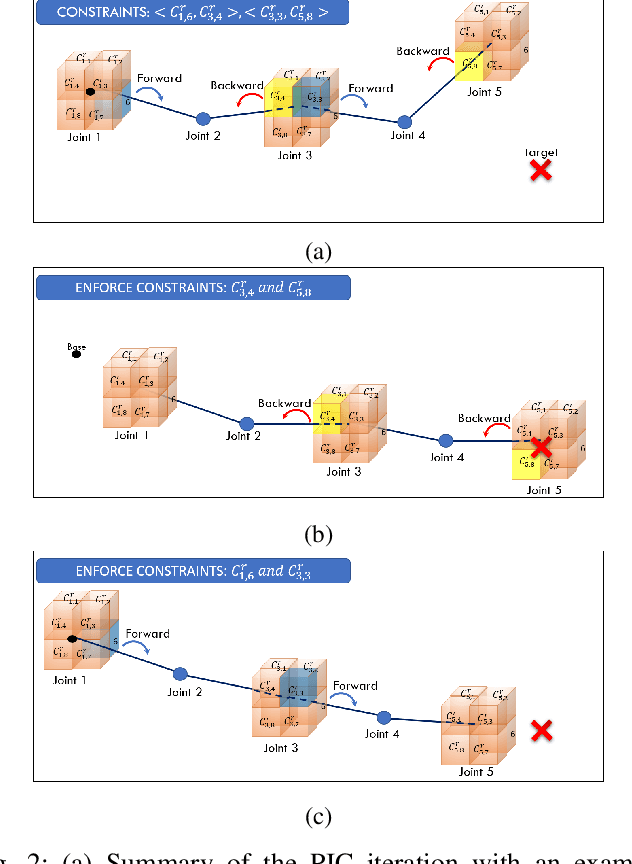

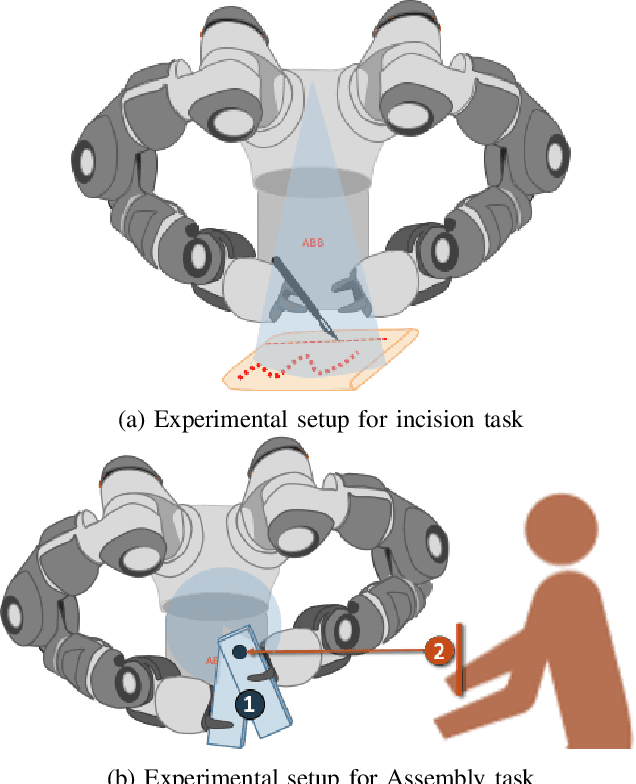

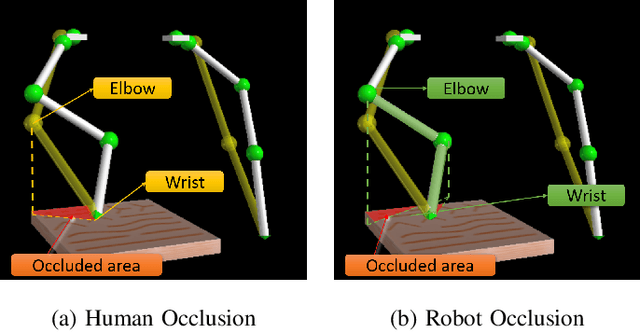

Abstract: Achieving human-like motion in robots has been a fundamental goal in many areas of robotics research. Inverse kinematic (IK) solvers have been explored as a way to give kinematic structures anthropomorphic movements. In particular, numeric solvers based on geometry, such as FABRIK, have shown potential for producing human-like motion at a low computational cost. Nevertheless, these methods have shown limitations when solving for robot kinematic constraints. This work proposes a framework inspired by FABRIK for real-time human pose imitation. The goal is to mitigate the problems of the original algorithm while retaining its human-like fluidity and low cost. We first propose a human constraint model for pose imitation. Then, we present a pose imitation algorithm (PIC) and its soft version (PICs), which can successfully imitate human poses using the proposed constraint system. PIC was tested on two collaborative robots (Baxter and YuMi). Fifty human demonstrations were collected for a bi-manual assembly task and an incision task. Then, two performance metrics were obtained for both robots: pose accuracy with respect to the human and the percentage of environment occlusion/obstruction. The performance of PIC and PICs was compared against the numerical solver baseline (FABRIK). The proposed algorithms achieve a higher pose accuracy than FABRIK for both tasks (25%-FABRIK, 53%-PICs, 58%-PICs). In addition, PIC and its soft version achieve a lower percentage of occlusion during incision (10%-FABRIK, 4%-PICs, 9%-PICs). These results indicate that the PIC method can reproduce human poses and achieve key desired effects of human imitation.
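For readers unfamiliar with the FABRIK baseline named in the abstract, the following is a minimal sketch of the standard unconstrained FABRIK iteration for a single serial chain. It is not the PIC or PICs algorithm and does not model any robot joint limits. The function names `fabrik` and `_step`, the tolerance, and the iteration cap are illustrative assumptions, and the sketch assumes no two consecutive joints ever coincide.

```python
import math


def _step(anchor, point, length):
    """Return the point `length` away from `anchor` along the line toward `point`."""
    d = [p - a for a, p in zip(anchor, point)]
    norm = math.sqrt(sum(c * c for c in d))  # assumed non-zero
    return [a + c / norm * length for a, c in zip(anchor, d)]


def fabrik(joints, target, tol=1e-3, max_iter=100):
    """Unconstrained FABRIK for one chain; returns the new joint positions.

    joints: list of joint positions, root first, end effector last.
    target: desired end-effector position.
    tol, max_iter: illustrative stopping parameters (assumptions).
    """
    joints = [list(map(float, j)) for j in joints]
    target = list(map(float, target))
    lengths = [math.dist(joints[i], joints[i + 1]) for i in range(len(joints) - 1)]
    root = list(joints[0])

    # Target out of reach: stretch the chain in a straight line toward it.
    if math.dist(root, target) > sum(lengths):
        for i in range(1, len(joints)):
            joints[i] = _step(joints[i - 1], target, lengths[i - 1])
        return joints

    for _ in range(max_iter):
        if math.dist(joints[-1], target) < tol:
            break
        # Forward reaching: pin the end effector to the target, walk to the root.
        joints[-1] = list(target)
        for i in range(len(joints) - 2, -1, -1):
            joints[i] = _step(joints[i + 1], joints[i], lengths[i])
        # Backward reaching: pin the root back in place, walk to the end effector.
        joints[0] = list(root)
        for i in range(1, len(joints)):
            joints[i] = _step(joints[i - 1], joints[i], lengths[i - 1])
    return joints


# Three unit-length links starting along the x-axis, reaching for (1, 2).
result = fabrik([(0, 0), (1, 0), (2, 0), (3, 0)], (1, 2))
```

Each pass only repositions points along straight lines, which is why the method is cheap. It also has no notion of joint limits, which is the kind of limitation with robot kinematic constraints that the abstract points to.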

DESK: A Robotic Activity Dataset for Dexterous Surgical Skills Transfer to Medical Robots

Mar 03, 2019

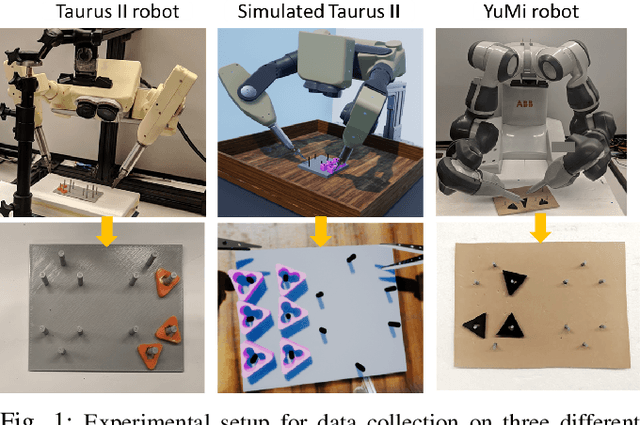

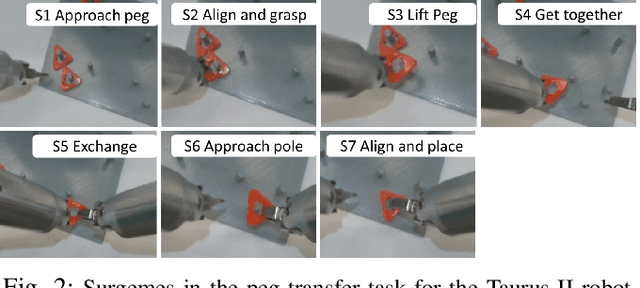

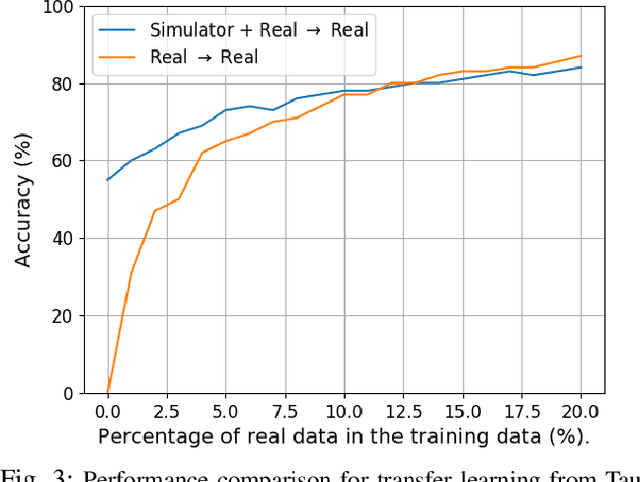

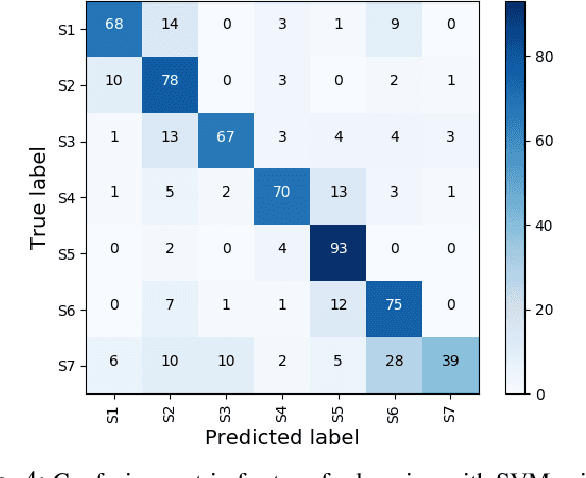

Abstract: Datasets are an essential component for training effective machine learning models. In particular, surgical robotic datasets have been key to many advances in semi-autonomous surgeries, skill assessment, and training. Simulated surgical environments can enhance the data collection process by making it faster, simpler, and cheaper than with real systems. In addition, combining data from multiple robotic domains can provide rich and diverse training data for transfer learning algorithms. In this paper, we present the DESK (Dexterous Surgical Skill) dataset. It comprises a set of surgical robotic skills collected during a surgical training task using three robotic platforms: the Taurus II robot, the simulated Taurus II robot, and the YuMi robot. This dataset was used to test the idea of transferring knowledge across different domains (e.g., from the Taurus to the YuMi robot) for a surgical gesture classification task with seven gestures. We explored three different scenarios: 1) no transfer, 2) transfer from the simulated Taurus to the real Taurus, and 3) transfer from the simulated Taurus to the YuMi robot. We conducted extensive experiments with three supervised learning models and provided baselines in each of these scenarios. Results show that using simulation data during training enhances performance on the real robot when limited real data is available. In particular, we obtained an accuracy of 55% on the real Taurus data using a model trained only on the simulator data. Furthermore, we achieved an accuracy improvement of 34% when 3% of the real data was added to the training process.
