Qingli Li

S$^3$R: Self-supervised Spectral Regression for Hyperspectral Histopathology Image Classification

Sep 19, 2022

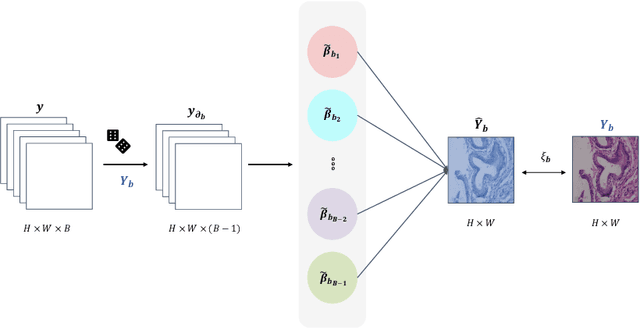

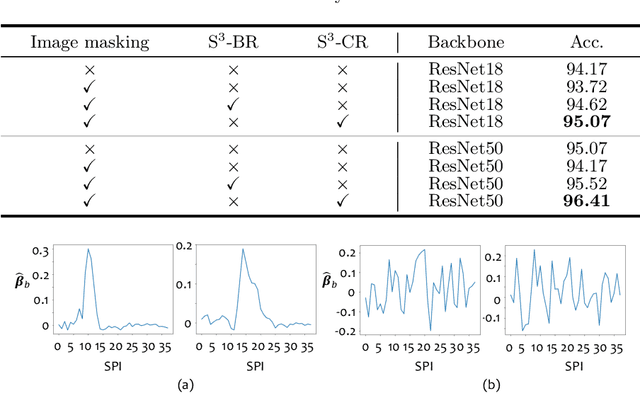

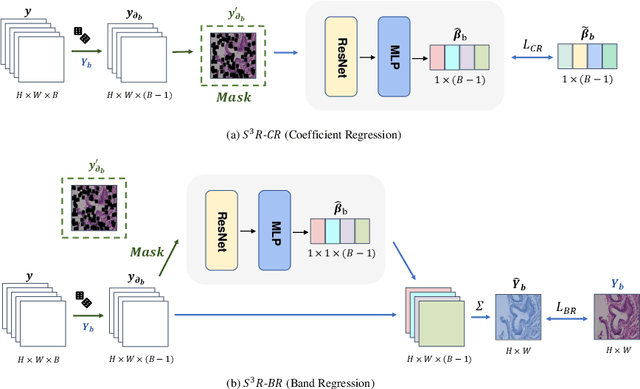

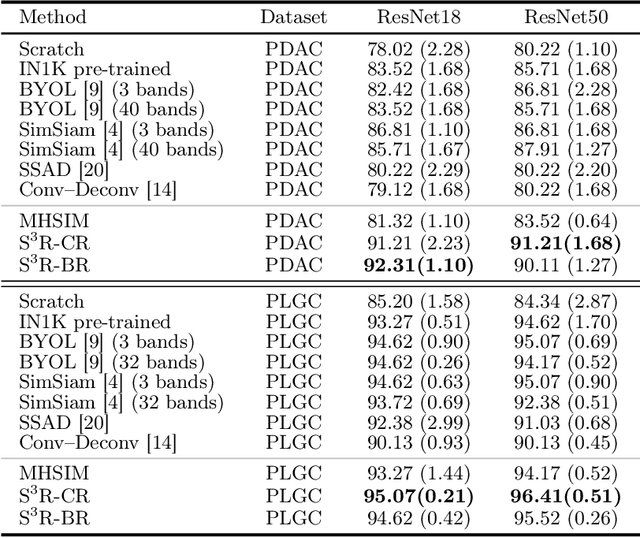

Abstract: Thanks to their rich and detailed spectral information, hyperspectral images (HSIs) offer great potential for a wide variety of medical applications such as computational pathology. However, the lack of adequate annotated data and the high spatiospectral dimensionality of HSIs usually make classification networks prone to overfitting. Learning a general representation that can be transferred to downstream tasks is therefore imperative. To our knowledge, no appropriate self-supervised pre-training method has been designed for histopathology HSIs. In this paper, we introduce an efficient and effective Self-supervised Spectral Regression (S$^3$R) method, which exploits the low-rank characteristic of the spectral domain of HSIs. More concretely, we propose to learn a set of linear coefficients that can be used to represent a masked-out band by the remaining bands. The band is then restored by using the learned coefficients to reweight the remaining bands. Two pretext tasks are designed: (1) S$^3$R-CR, which regresses the linear coefficients, so that the pre-trained model understands the inherent structures of HSIs and the pathological characteristics of different morphologies; (2) S$^3$R-BR, which regresses the missing band, making the model learn the holistic semantics of HSIs. Compared to prior art, i.e., contrastive learning methods, which focus on natural images, S$^3$R converges at least 3 times faster and achieves significant improvements of up to 14% in accuracy when transferring to HSI classification tasks.
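The low-rank premise behind the band regression can be illustrated with a small NumPy sketch. It is not the S$^3$R network: instead of a learned model, it uses closed-form least squares as a stand-in, and the function name `regress_band` and the synthetic cube are assumptions made for illustration only.

```python
import numpy as np

def regress_band(cube, target):
    """Express band `target` of an (H, W, B) cube as a linear mix of the other bands.

    Closed-form least squares is used here as a stand-in for learned coefficients.
    """
    h, w, b = cube.shape
    flat = cube.reshape(-1, b)                  # one row per pixel
    others = np.delete(flat, target, axis=1)    # remaining B-1 bands
    y = flat[:, target]                         # the masked-out band
    coeffs, *_ = np.linalg.lstsq(others, y, rcond=None)
    restored = (others @ coeffs).reshape(h, w)  # reweight the remaining bands
    return coeffs, restored

rng = np.random.default_rng(0)
# Synthetic low-rank cube (assumption): 3 spectra mixed over 16x16 pixels, 8 bands.
abundances = rng.random((16, 16, 3))
spectra = rng.random((3, 8))
cube = abundances @ spectra

coeffs, restored = regress_band(cube, target=5)
max_error = float(np.abs(restored - cube[:, :, 5]).max())
```

Because the synthetic cube has rank 3, the masked band lies in the span of the remaining bands and the reconstruction error is at the level of floating-point noise. Real HSIs are only approximately low rank, so some residual would be expected.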

Cross Attention Based Style Distribution for Controllable Person Image Synthesis

Aug 01, 2022

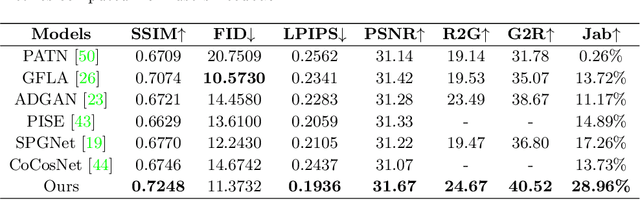

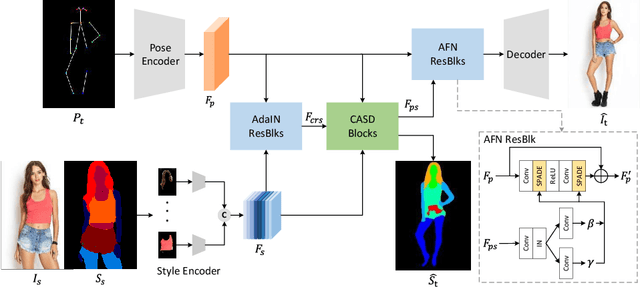

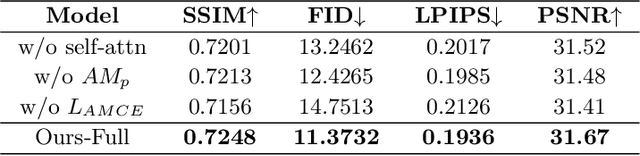

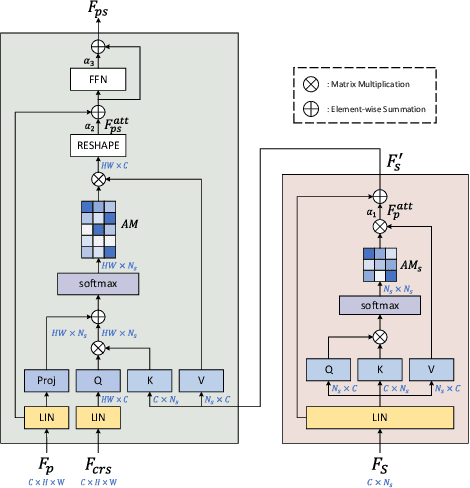

Abstract: The controllable person image synthesis task enables a wide range of applications through explicit control over body pose and appearance. In this paper, we propose a cross attention based style distribution module that computes cross attention between the source semantic styles and the target pose for pose transfer. The module intentionally selects the style represented by each semantic and distributes them according to the target pose. The attention matrix in cross attention expresses the dynamic similarities between the target pose and the source styles for all semantics. Therefore, it can be utilized to route the color and texture from the source image, and is further constrained by the target parsing map to achieve a clearer objective. At the same time, to encode the source appearance accurately, self attention among the different semantic styles is also added. The effectiveness of our model is validated quantitatively and qualitatively on pose transfer and virtual try-on tasks.
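The routing role of the attention matrix can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: there are no learned projections, the target parsing map constraint is omitted, and the names `distribute_styles`, `pose_feats` and `style_codes` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def distribute_styles(pose_feats, style_codes):
    """Cross attention with target pose features as queries.

    pose_feats:  (N, D) one feature per target location
    style_codes: (S, D) one style vector per source semantic (keys and values)
    """
    d = pose_feats.shape[1]
    attn = softmax(pose_feats @ style_codes.T / np.sqrt(d), axis=1)  # (N, S)
    routed = attn @ style_codes                                      # (N, D)
    return attn, routed

rng = np.random.default_rng(0)
pose_feats = rng.standard_normal((6, 4))
style_codes = rng.standard_normal((3, 4))
attn, routed = distribute_styles(pose_feats, style_codes)
```

Each row of `attn` is a probability distribution over the source semantics, so every target location receives a convex combination of the source styles.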

QS-Attn: Query-Selected Attention for Contrastive Learning in I2I Translation

Mar 16, 2022

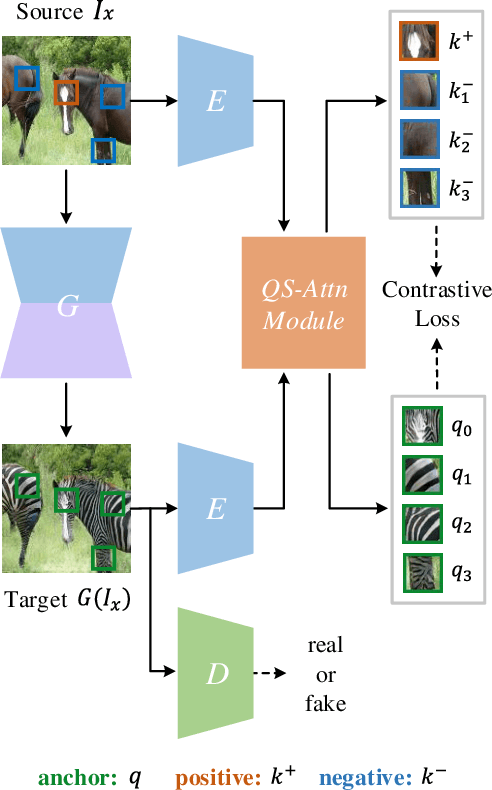

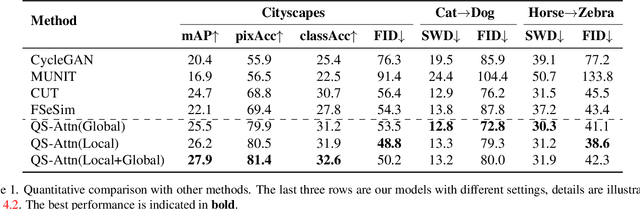

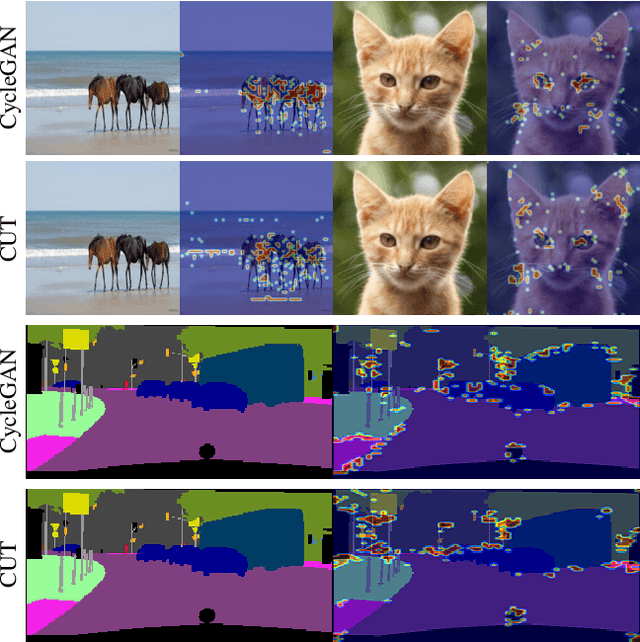

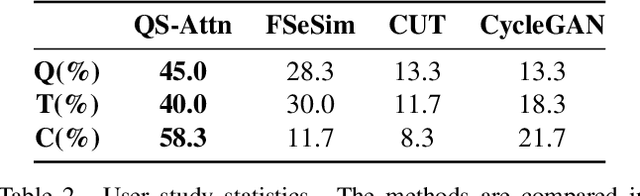

Abstract: Unpaired image-to-image (I2I) translation often requires maximizing the mutual information between the source and the translated images across different domains, which is critical for the generator to keep the source content and avoid unnecessary modifications. Self-supervised contrastive learning has already been successfully applied to I2I translation. By constraining features from the same location to be closer than those from different ones, it implicitly ensures that the result takes its content from the source. However, previous work uses features from random locations to impose the constraint, which may not be appropriate since some locations contain less information about the source domain. Moreover, the feature itself does not reflect its relation with others. This paper deals with these problems by intentionally selecting significant anchor points for contrastive learning. We design a query-selected attention (QS-Attn) module, which compares feature distances in the source domain, giving an attention matrix with a probability distribution in each row. Then we select queries according to their measurement of significance, computed from the distribution. The selected ones are regarded as anchors for the contrastive loss. At the same time, the reduced attention matrix is employed to route features in both domains, so that source relations are maintained in the synthesis. We validate our proposed method on three different I2I datasets, showing that it increases the image quality without adding learnable parameters.
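The query selection step can be sketched as follows. The abstract only says that significance is computed from each row's distribution; using row entropy (lower entropy meaning a more focused, more significant query) is an assumption here, as are the dot-product similarity and the name `select_queries`.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def select_queries(feats, k):
    """Pick the k most focused rows of a source-domain attention matrix.

    feats: (N, D) source features, one per spatial location.
    Significance is taken to be low row entropy (an assumption).
    """
    attn = softmax(feats @ feats.T, axis=1)               # (N, N), rows sum to 1
    entropy = -(attn * np.log(attn + 1e-12)).sum(axis=1)  # (N,)
    idx = np.argsort(entropy)[:k]                         # lowest entropy first
    return idx, attn[idx], entropy

rng = np.random.default_rng(0)
feats = rng.standard_normal((10, 4))
idx, reduced, entropy = select_queries(feats, k=3)
routed = reduced @ feats   # the reduced matrix routes features for the anchors
```

The same `reduced` matrix could be applied to features of the translated image, which is how a shared routing would carry source relations into both domains. No learnable parameters are involved in this sketch.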

Style Transformer for Image Inversion and Editing

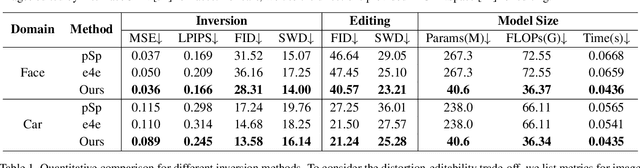

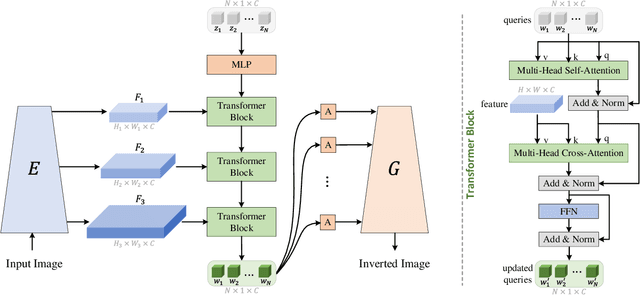

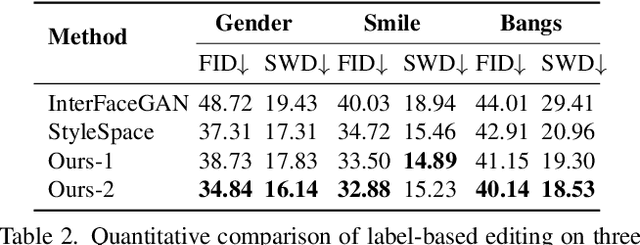

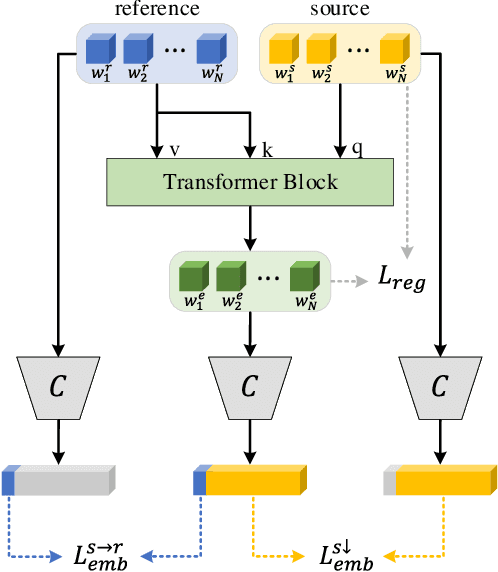

Mar 15, 2022

Abstract: Existing GAN inversion methods fail to provide latent codes for reliable reconstruction and flexible editing simultaneously. This paper presents a transformer-based image inversion and editing model for pretrained StyleGAN, which not only yields less distortion but also offers high quality and flexibility for editing. The proposed model employs a CNN encoder to provide multi-scale image features as keys and values. Meanwhile, it treats the style codes to be determined for different layers of the generator as queries. It first initializes query tokens as learnable parameters and maps them into W+ space. Then multi-stage alternating self- and cross-attention is applied, updating the queries with the purpose of inverting the input by the generator. Moreover, based on the inverted code, we investigate reference- and label-based attribute editing through a pretrained latent classifier, and achieve flexible image-to-image translation with high-quality results. Extensive experiments are carried out, showing better performance on both inversion and editing tasks within StyleGAN.

Deep Residual Fourier Transformation for Single Image Deblurring

Nov 23, 2021

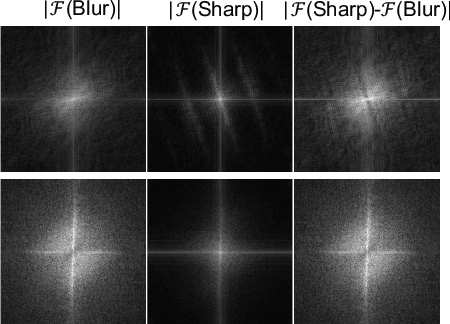

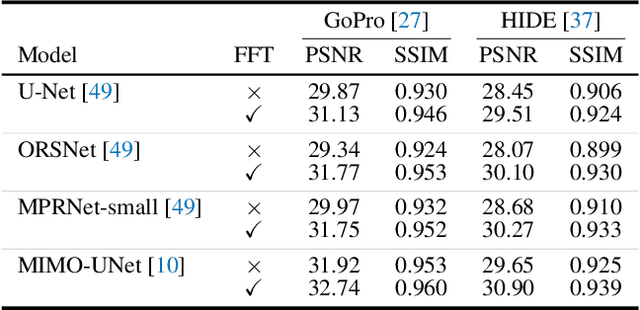

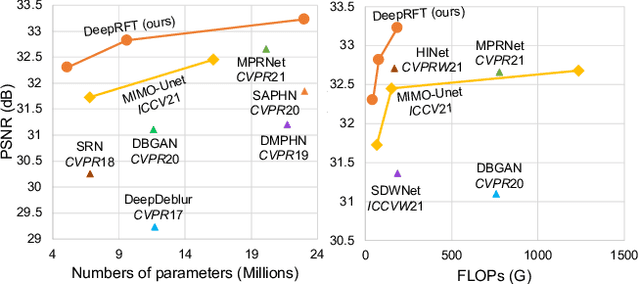

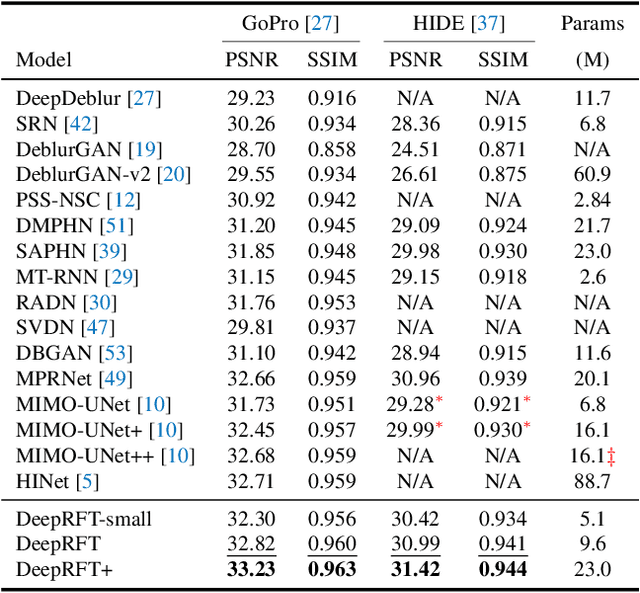

Abstract: It has been common practice to adopt the ResBlock, which learns the difference between blurry and sharp image pairs, in end-to-end image deblurring architectures. Reconstructing a sharp image from its blurry counterpart requires changes to both low- and high-frequency information. Although the conventional ResBlock may be good at capturing the high-frequency components of images, it tends to overlook the low-frequency information. Moreover, the ResBlock usually fails to adequately model long-distance information, which is non-trivial in reconstructing a sharp image from its blurry counterpart. In this paper, we present a Residual Fast Fourier Transform with Convolution Block (Res FFT-Conv Block), capable of capturing both long-term and short-term interactions while integrating both low- and high-frequency residual information. The Res FFT-Conv Block is a conceptually simple, computationally efficient, plug-and-play block, leading to remarkable performance gains in different architectures. With the Res FFT-Conv Block, we further propose a Deep Residual Fourier Transformation (DeepRFT) framework, based upon MIMO-UNet, achieving state-of-the-art image deblurring performance on the GoPro, HIDE, RealBlur and DPDD datasets. Experiments show that DeepRFT can boost image deblurring performance significantly (e.g., with a 1.09 dB improvement in PSNR on the GoPro dataset compared with MIMO-UNet), and DeepRFT+ even reaches 33.23 dB in PSNR on the GoPro dataset.
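A frequency-domain residual branch of the kind described can be sketched in NumPy. This is a simplified illustration, not the published block: the spatial convolution branch is omitted, the 1x1 convolutions are plain matrices (`w1`, `w2`) applied to stacked real and imaginary parts, and all names are hypothetical.

```python
import numpy as np

def fft_residual_branch(x, w1, w2):
    """Pointwise (1x1) mixing in the Fourier domain for a (C, H, W) feature map.

    w1 and w2 are (2C, 2C) matrices acting on stacked real/imaginary channels.
    """
    c, h, w = x.shape
    spec = np.fft.rfft2(x, axes=(-2, -1))               # (C, H, W//2+1), complex
    z = np.concatenate([spec.real, spec.imag], axis=0)  # (2C, H, W//2+1), real
    z = np.einsum("oc,chw->ohw", w1, z)                 # 1x1 conv
    z = np.maximum(z, 0.0)                              # ReLU
    z = np.einsum("oc,chw->ohw", w2, z)                 # 1x1 conv
    spec_out = z[:c] + 1j * z[c:]                       # back to complex
    return np.fft.irfft2(spec_out, s=(h, w), axes=(-2, -1))

def res_fft_block(x, w1, w2):
    # Identity path plus the frequency branch (spatial conv branch omitted).
    return x + fft_residual_branch(x, w1, w2)

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 8))
w_zero = np.zeros((4, 4))
w_rand = rng.standard_normal((4, 4)) * 0.1
out_identity = res_fft_block(x, w_zero, w_zero)
out_mixed = res_fft_block(x, w_rand, w_rand)
```

Since every Fourier coefficient depends on all pixels, even a pointwise operation in this domain has a global receptive field, which is one way to read the claim about long-term interactions.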

Bridging the Gap between Label- and Reference-based Synthesis in Multi-attribute Image-to-Image Translation

Oct 11, 2021

Abstract: An image-to-image translation (I2IT) model takes a target label or a reference image as input and changes a source image into the specified target domain. The two types of synthesis, label-based and reference-based, differ substantially. In particular, label-based synthesis reflects the common characteristics of the target domain, while reference-based synthesis shows a specific style similar to the reference. This paper intends to bridge the gap between them in the task of multi-attribute I2IT. We design label- and reference-based encoding modules (LEM and REM) to compare the domain differences. They first transfer the source image and target label (or reference) into a common embedding space, by providing the opposite directions through the attribute difference vector. Then the two embeddings are simply fused together to form the latent code S_rand (or S_ref), reflecting the domain style differences, which is injected into each layer of the generator by SPADE. To link LEM and REM, so that the two types of results benefit each other, we encourage the two latent codes to be close, and set up cycle consistency between the forward and backward translations on them. Moreover, the interpolation between S_rand and S_ref is also used to synthesize an extra image. Experiments show that label- and reference-based synthesis indeed promote each other, so that we obtain diverse results from LEM and high-quality results with a style similar to that of the reference.

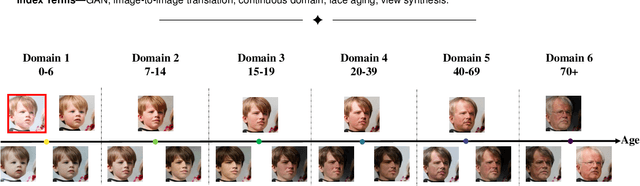

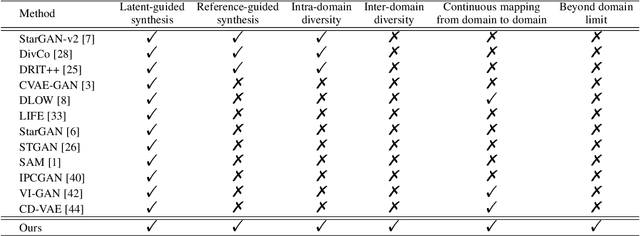

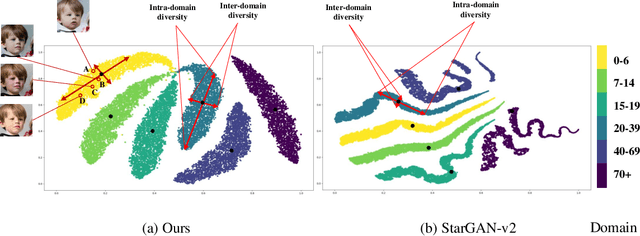

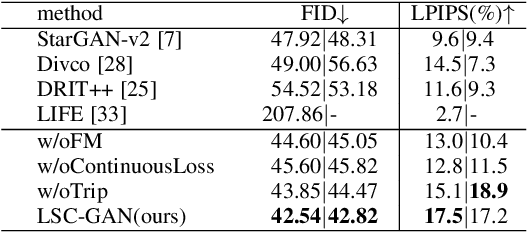

LSC-GAN: Latent Style Code Modeling for Continuous Image-to-image Translation

Oct 11, 2021

Abstract: Image-to-image (I2I) translation is usually carried out among discrete domains. However, image domains, often corresponding to a physical value, are usually continuous. In other words, images gradually change with the value, and there exists no obvious gap between different domains. This paper intends to build a model for I2I translation among continuously varying domains. We first divide the whole domain coverage into discrete intervals, and explicitly model the latent style code for the center of each interval. To deal with continuous translation, we design editing modules that change the latent style code along two directions. These editing modules help to constrain the codes for domain centers during training, so that the model can better understand the relation among them. To obtain diverse results, the latent style code is further diversified with either random noise or features from the reference image, giving an individual style code to the decoder for label-based or reference-based synthesis. Extensive experiments on age and viewing angle translation show that the proposed method can achieve high-quality results, and that it is also flexible for users.

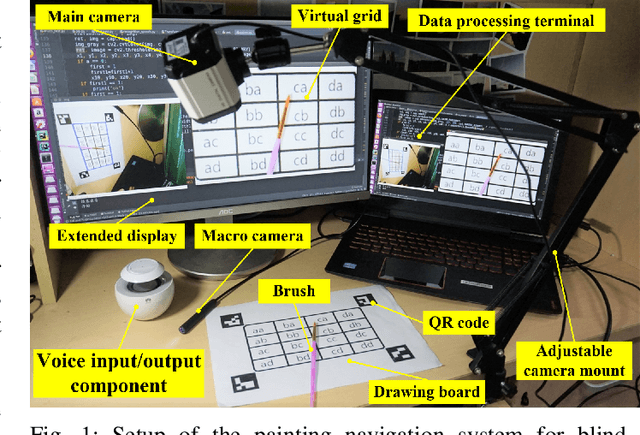

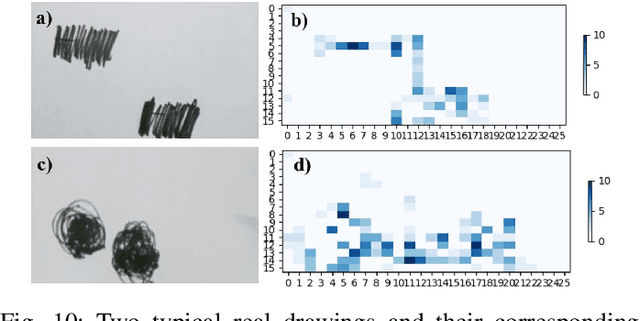

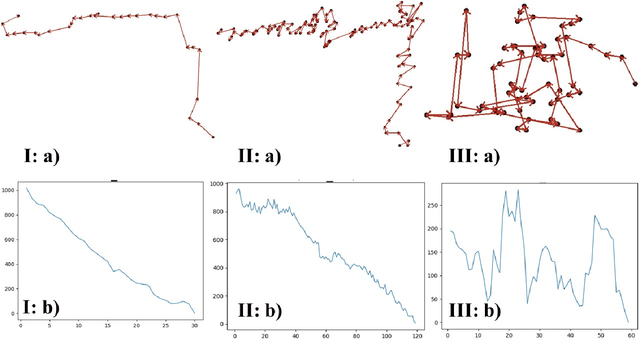

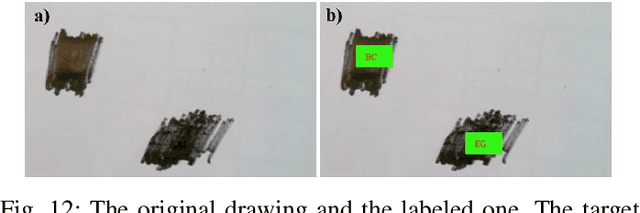

Angel's Girl for Blind Painters: an Efficient Painting Navigation System Validated by Multimodal Evaluation Approach

Jul 27, 2021

Abstract: For people who ardently love painting but unfortunately have visual impairments, holding a paintbrush to create a work is a very difficult task. People in this special group are eager to pick up the paintbrush, like Leonardo da Vinci, to create and make full use of their own talents. Therefore, to maximally bridge this gap, we propose a painting navigation system to assist blind people in painting and artistic creation. The proposed system is composed of a cognitive system and a guidance system. The system adopts drawing board positioning based on QR codes, brush navigation based on target detection, and real-time brush positioning. Meanwhile, this paper uses voice-based human-computer interaction and a simple but efficient position information coding rule. In addition, we design a criterion to efficiently judge whether the brush reaches the target or not. According to the experimental results, the thermal curves extracted from the faces of testers show that it is relatively well accepted by blindfolded and even blind testers. With a prompt frequency of 1s, the painting navigation system performs best, with a completion degree of 89% (SD 8.37%) and an overflow degree of 347% (SD 162.14%). Meanwhile, the excellent and good types of brush tip trajectory account for 74%, and the relative movement distance is 4.21 (SD 2.51). This work demonstrates that it is practicable for blind people to feel the world through the brush in their hands. In the future, we plan to deploy Angle's Eyes on the phone to make it more portable. The demo video of the proposed painting navigation system is available at: https://doi.org/10.6084/m9.figshare.9760004.v1.

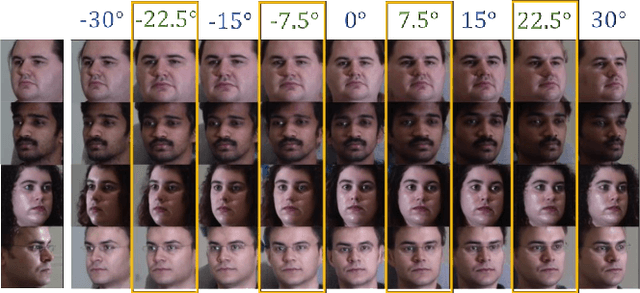

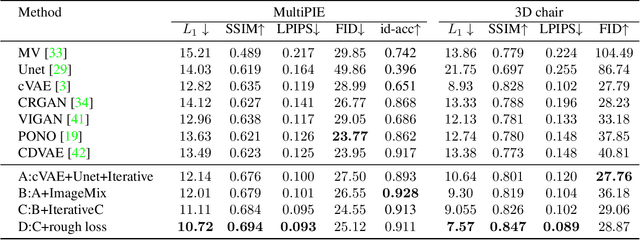

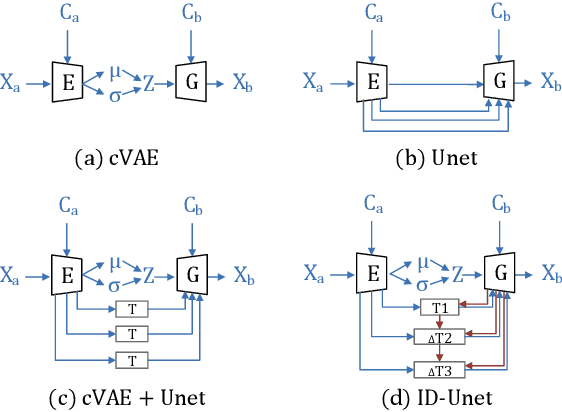

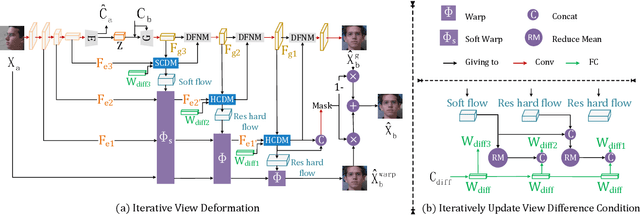

ID-Unet: Iterative Soft and Hard Deformation for View Synthesis

Mar 18, 2021

Abstract: View synthesis is usually done by an autoencoder, in which the encoder maps a source view image into a latent content code, and the decoder transforms it into a target view image according to the condition. However, the source contents are often not well kept in this setting, which leads to unnecessary changes during the view translation. Although adding skip connections, as in Unet, alleviates the problem, it often causes failures in view conformity. This paper proposes a new architecture that performs the source-to-target deformation in an iterative way. Instead of simply incorporating the features from multiple layers of the encoder, we design soft and hard deformation modules, which warp the encoder features to the target view at different resolutions and give the results to the decoder to complement the details. In particular, the current warping flow is not only used to align the feature of the same resolution, but also serves as an approximation to coarsely deform the high-resolution feature. Then the residual flow is estimated and applied at the high resolution, so that the deformation is built up in a coarse-to-fine fashion. To better constrain the model, we synthesize a rough target view image based on the intermediate flows and their warped features. Extensive ablation studies and the final results on two different data sets show the effectiveness of the proposed model.
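The coarse-to-fine build-up of the flow can be sketched as below. This covers only a flow-based (hard) warp and makes several assumptions not stated in the abstract: a factor-of-two resolution step, nearest-neighbor sampling instead of a differentiable bilinear warp, and the function names themselves.

```python
import numpy as np

def upsample_flow(flow):
    """Upsample a (2, H, W) pixel-unit flow to (2, 2H, 2W); displacements double."""
    return 2.0 * flow.repeat(2, axis=1).repeat(2, axis=2)

def refine_flow(coarse_flow, residual_flow):
    """Coarse flow gives the approximation; the residual corrects it at high resolution."""
    return upsample_flow(coarse_flow) + residual_flow

def warp_nearest(feat, flow):
    """Warp a (C, H, W) feature map: output[y, x] samples feat at (y + dy, x + dx).

    flow[0] holds x displacements, flow[1] holds y displacements.
    Samples falling outside the map are clamped to the border.
    """
    c, h, w = feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_y = np.clip(np.rint(ys + flow[1]).astype(int), 0, h - 1)
    src_x = np.clip(np.rint(xs + flow[0]).astype(int), 0, w - 1)
    return feat[:, src_y, src_x]

feat = np.arange(16.0).reshape(1, 4, 4)
coarse = np.ones((2, 2, 2))        # one pixel right and down at low resolution
residual = np.zeros((2, 4, 4))     # no correction in this toy case
fine = refine_flow(coarse, residual)
warped = warp_nearest(feat, fine)
```

In a learned model the residual would be predicted from the coarsely warped high-resolution feature rather than set to zero.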

SpecTr: Spectral Transformer for Hyperspectral Pathology Image Segmentation

Mar 05, 2021

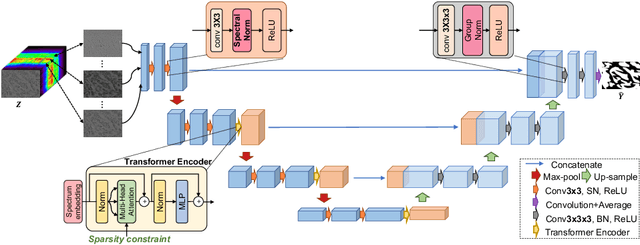

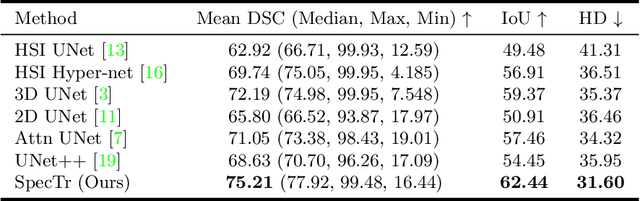

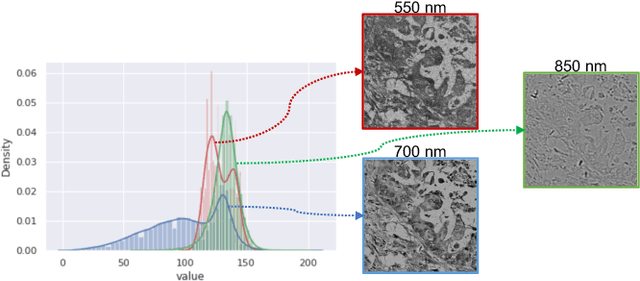

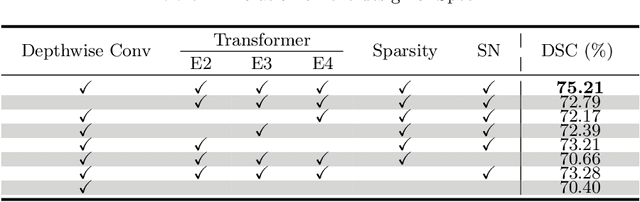

Abstract: Hyperspectral imaging (HSI) unlocks huge potential for a wide variety of applications that rely on high-precision pathology image segmentation, such as computational pathology and precision medicine. Since hyperspectral pathology images benefit from rich and detailed spectral information even beyond the visible spectrum, the key to achieving high-precision hyperspectral pathology image segmentation is to adequately model the context along high-dimensional spectral bands. Inspired by the strong context modeling ability of transformers, we formulate, for the first time, contextual feature learning across spectral bands for hyperspectral pathology image segmentation as a sequence-to-sequence prediction procedure by transformers. To assist the spectral context learning procedure, we introduce two important strategies: (1) a sparsity scheme enforces the learned contextual relationship to be sparse, so as to eliminate the distraction from redundant bands; (2) a spectral normalization, a separate group normalization for each spectral band, mitigates the nuisance caused by heterogeneous underlying distributions of bands. We name our method Spectral Transformer (SpecTr), which enjoys two benefits: (1) it has a strong ability to model long-range dependency among spectral bands, and (2) it jointly explores the spatial-spectral features of HSI. Experiments show that SpecTr outperforms other competing methods on a hyperspectral pathology image segmentation benchmark without the need for pre-training. Code is available at https://github.com/hfut-xc-yun/SpecTr.
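The idea of a separate group normalization for each spectral band can be sketched in NumPy. The tensor layout `(C, S, H, W)`, the omission of learnable scale and shift parameters, and the name `spectral_group_norm` are assumptions for illustration; the linked repository is the reference for the actual implementation.

```python
import numpy as np

def spectral_group_norm(x, num_groups, eps=1e-5):
    """Group-normalize each spectral band independently.

    x: (C, S, H, W) with C channels and S spectral bands.
    Statistics are computed per (channel group, band), never across bands.
    """
    c, s, h, w = x.shape
    g = x.reshape(num_groups, c // num_groups, s, h, w)
    mean = g.mean(axis=(1, 3, 4), keepdims=True)   # one mean per group and band
    var = g.var(axis=(1, 3, 4), keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(c, s, h, w)

rng = np.random.default_rng(0)
# Bands with very different scales and offsets (synthetic, for illustration).
scales = np.array([1.0, 10.0, 100.0]).reshape(1, 3, 1, 1)
offsets = np.array([0.0, 5.0, -50.0]).reshape(1, 3, 1, 1)
x = rng.standard_normal((4, 3, 5, 5)) * scales + offsets

y = spectral_group_norm(x, num_groups=2)
per_band = y.reshape(2, 2, 3, 5, 5)
band_means = per_band.mean(axis=(1, 3, 4))
band_stds = per_band.std(axis=(1, 3, 4))
```

After normalization every band has roughly zero mean and unit variance within each channel group, so bands with heterogeneous distributions no longer dominate one another.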
