Nihar B. Shah

Prior and Prejudice: The Novice Reviewers' Bias against Resubmissions in Conference Peer Review

Nov 30, 2020

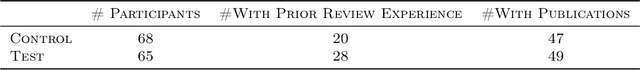

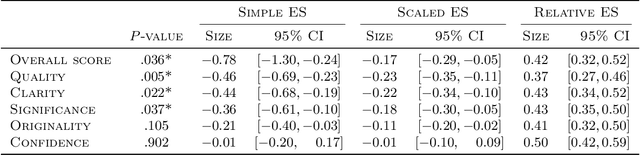

Abstract: Modern machine learning and computer science conferences are experiencing a surge in the number of submissions that challenges the quality of peer review as the number of competent reviewers is growing at a much slower rate. To curb this trend and reduce the burden on reviewers, several conferences have started encouraging or even requiring authors to declare the previous submission history of their papers. Such initiatives have been met with skepticism among authors, who raise the concern about a potential bias in reviewers' recommendations induced by this information. In this work, we investigate whether reviewers exhibit a bias caused by the knowledge that the submission under review was previously rejected at a similar venue, focusing on a population of novice reviewers who constitute a large fraction of the reviewer pool in leading machine learning and computer science conferences. We design and conduct a randomized controlled trial closely replicating the relevant components of the peer-review pipeline with $133$ reviewers (master's, junior PhD students, and recent graduates of top US universities) writing reviews for $19$ papers. The analysis reveals that reviewers indeed become negatively biased when they receive a signal about a paper being a resubmission, giving an almost 1 point lower overall score on a 10-point Likert item ($\Delta = -0.78, \ 95\% \ \text{CI} = [-1.30, -0.24]$) than reviewers who do not receive such a signal. Looking at specific criteria scores (originality, quality, clarity and significance), we observe that novice reviewers tend to underrate quality the most.

Uncovering Latent Biases in Text: Method and Application to Peer Review

Oct 29, 2020

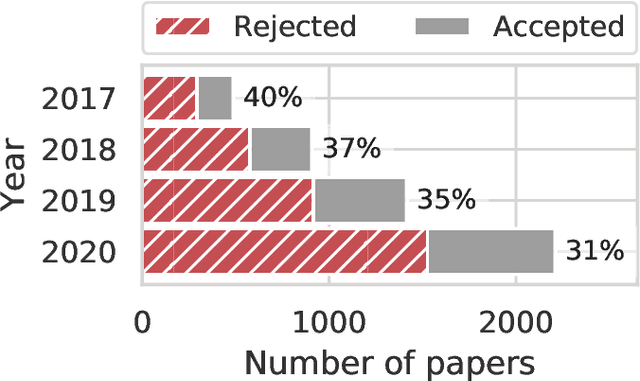

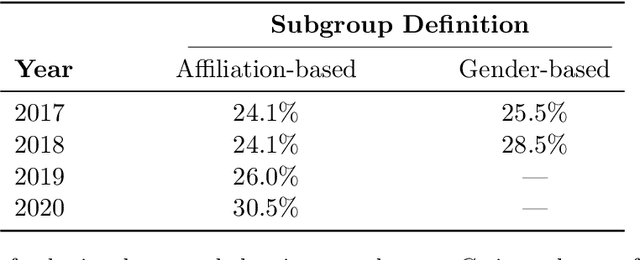

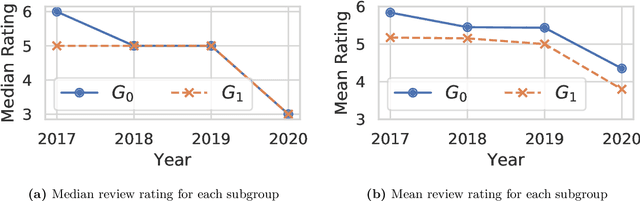

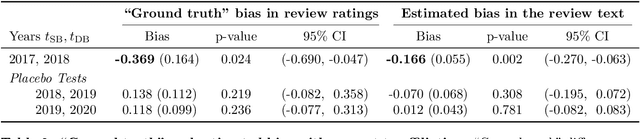

Abstract: Quantifying systematic disparities in numerical quantities such as employment rates and wages between population subgroups provides compelling evidence for the existence of societal biases. However, biases in the text written for members of different subgroups (such as in recommendation letters for male and non-male candidates), though widely reported anecdotally, remain challenging to quantify. In this work, we introduce a novel framework to quantify bias in text caused by the visibility of subgroup membership indicators. We develop a nonparametric estimation and inference procedure to estimate this bias. We then formalize an identification strategy to causally link the estimated bias to the visibility of subgroup membership indicators, provided observations from time periods both before and after an identity-hiding policy change. We identify an application wherein "ground truth" bias can be inferred to evaluate our framework, instead of relying on synthetic or secondary data. Specifically, we apply our framework to quantify biases in the text of peer reviews from a reputed machine learning conference before and after the conference adopted a double-blind reviewing policy. We show evidence of biases in the review ratings that serves as "ground truth", and show that our proposed framework accurately detects these biases from the review text without having access to the review ratings.

Catch Me if I Can: Detecting Strategic Behaviour in Peer Assessment

Oct 08, 2020

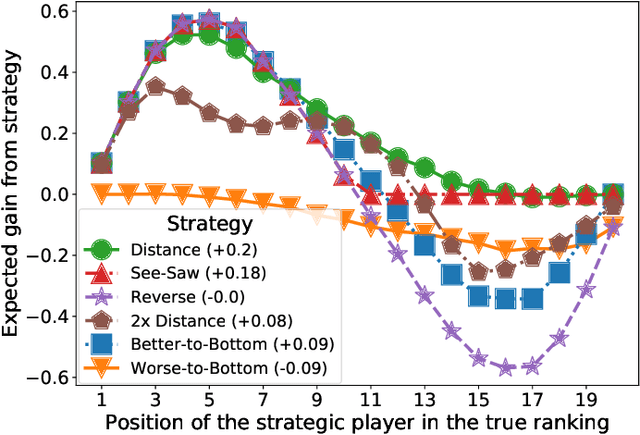

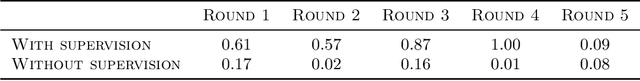

Abstract: We consider the issue of strategic behaviour in various peer-assessment tasks, including peer grading of exams or homeworks and peer review in hiring or promotions. When a peer-assessment task is competitive (e.g., when students are graded on a curve), agents may be incentivized to misreport evaluations in order to improve their own final standing. Our focus is on designing methods for detection of such manipulations. Specifically, we consider a setting in which agents evaluate a subset of their peers and output rankings that are later aggregated to form a final ordering. In this paper, we investigate a statistical framework for this problem and design a principled test for detecting strategic behaviour. We prove that our test has strong false alarm guarantees and evaluate its detection ability in practical settings. For this, we design and execute an experiment that elicits strategic behaviour from subjects and release a dataset of patterns of strategic behaviour that may be of independent interest. We then use the collected data to conduct a series of real and semi-synthetic evaluations that demonstrate a strong detection power of our test.

Mitigating Manipulation in Peer Review via Randomized Reviewer Assignments

Jun 29, 2020

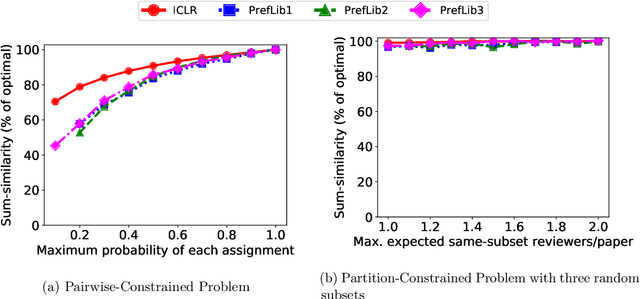

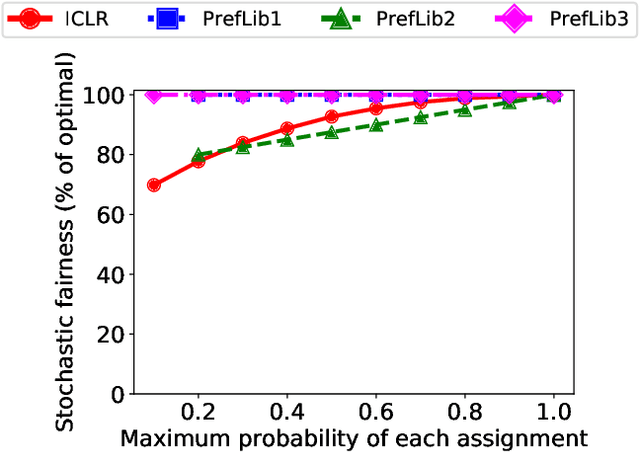

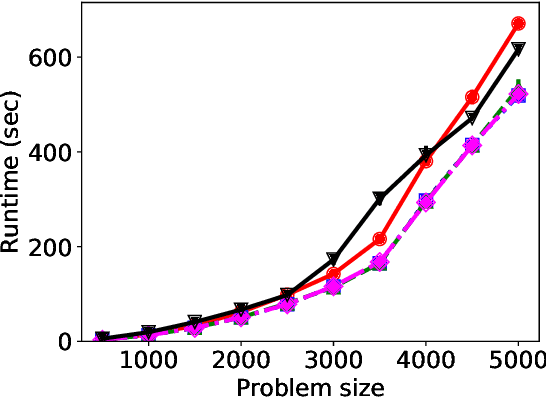

Abstract: We consider three important challenges in conference peer review: (i) reviewers maliciously attempting to get assigned to certain papers to provide positive reviews, possibly as part of quid-pro-quo arrangements with the authors; (ii) "torpedo reviewing," where reviewers deliberately attempt to get assigned to certain papers that they dislike in order to reject them; (iii) reviewer de-anonymization on release of the similarities and the reviewer-assignment code. On the conceptual front, we identify connections between these three problems and present a framework that brings all these challenges under a common umbrella. We then present a (randomized) algorithm for reviewer assignment that can optimally solve the reviewer-assignment problem under any given constraints on the probability of assignment for any reviewer-paper pair. We further consider the problem of restricting the joint probability that certain suspect pairs of reviewers are assigned to certain papers, and show that this problem is NP-hard for arbitrary constraints on these joint probabilities but efficiently solvable for a practical special case. Finally, we experimentally evaluate our algorithms on datasets from past conferences, where we observe that they can limit the chance that any malicious reviewer gets assigned to their desired paper to 50% while producing assignments with over 90% of the total optimal similarity. Our algorithms still achieve this similarity while also preventing reviewers with close associations from being assigned to the same paper.
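One way to read the capped-probability assignment described above is as a linear program over marginal assignment probabilities. This is a sketch only: the symbols $S_{pr}$ (similarity between paper $p$ and reviewer $r$), $x_{pr}$ (marginal probability that $r$ is assigned to $p$), $Q_{pr}$ (cap on that probability), $k$ (reviewers per paper), and $\ell$ (maximum papers per reviewer) are notation assumed here and do not appear in the abstract.

$$
\begin{aligned}
\max_{x} \quad & \sum_{p}\sum_{r} S_{pr}\, x_{pr} \\
\text{s.t.} \quad & \sum_{r} x_{pr} = k \quad \text{for every paper } p, \\
& \sum_{p} x_{pr} \le \ell \quad \text{for every reviewer } r, \\
& 0 \le x_{pr} \le Q_{pr} \quad \text{for every pair } (p, r).
\end{aligned}
$$

Under this reading, setting every $Q_{pr} = 0.5$ would correspond to the 50% limit mentioned in the experiments. A separate sampling step would still be needed to draw an actual assignment whose marginals match $x$.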

On the Privacy-Utility Tradeoff in Peer-Review Data Analysis

Jun 29, 2020

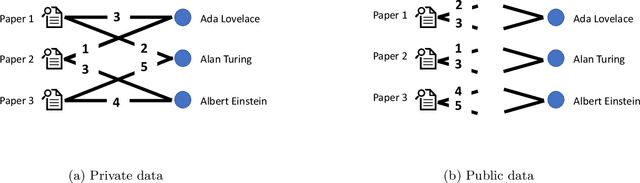

Abstract: A major impediment to research on improving peer review is the unavailability of peer-review data, since any release of such data must grapple with the sensitivity of the peer review data in terms of protecting identities of reviewers from authors. We posit the need to develop techniques to release peer-review data in a privacy-preserving manner. Identifying this problem, in this paper we propose a framework for privacy-preserving release of certain conference peer-review data (distributions of ratings, miscalibration, and subjectivity) with an emphasis on the accuracy (or utility) of the released data. The crux of the framework lies in recognizing that a part of the data pertaining to the reviews is already available in public, and we use this information to post-process the data released by any privacy mechanism in a manner that improves the accuracy (utility) of the data while retaining the privacy guarantees. Our framework works with any privacy-preserving mechanism that operates via releasing perturbed data. We present several positive and negative theoretical results, including a polynomial-time algorithm for improving on the privacy-utility tradeoff.

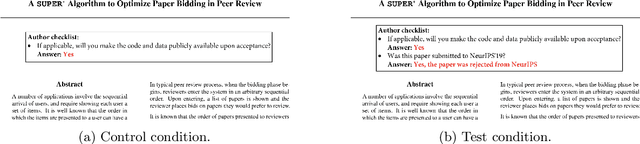

A $\texttt{SUPER}^{\ast}$ Algorithm to Optimize Paper Bidding in Peer Review

Jun 27, 2020

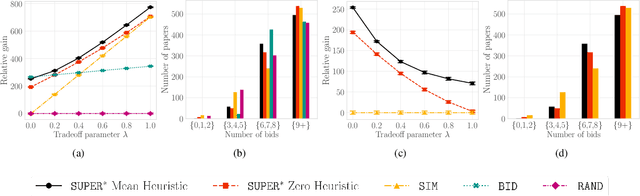

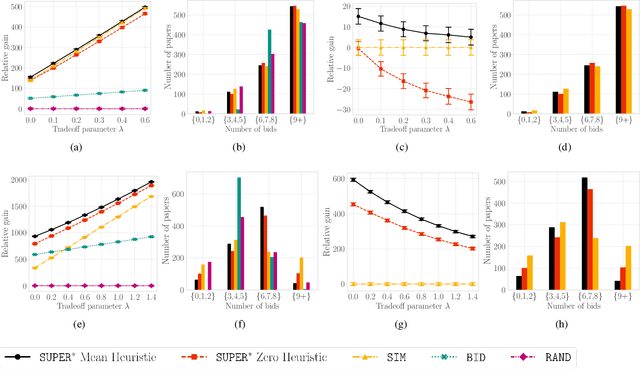

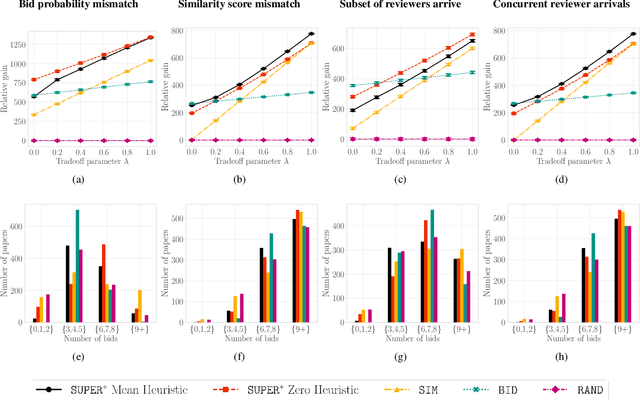

Abstract: A number of applications involve sequential arrival of users, and require showing each user an ordering of items. A prime example (which forms the focus of this paper) is the bidding process in conference peer review where reviewers enter the system sequentially, each reviewer needs to be shown the list of submitted papers, and the reviewer then "bids" to review some papers. The order of the papers shown has a significant impact on the bids due to primacy effects. In deciding on the ordering of papers to show, there are two competing goals: (i) obtaining sufficiently many bids for each paper, and (ii) satisfying reviewers by showing them relevant items. In this paper, we begin by developing a framework to study this problem in a principled manner. We present an algorithm called $\texttt{SUPER}^{\ast}$, inspired by the A$^{\ast}$ algorithm, for this goal. Theoretically, we show a local optimality guarantee of our algorithm and prove that popular baselines are considerably suboptimal. Moreover, under a community model for the similarities, we prove that $\texttt{SUPER}^{\ast}$ is near-optimal whereas the popular baselines are considerably suboptimal. In experiments on real data from ICLR 2018 and synthetic data, we find that $\texttt{SUPER}^{\ast}$ considerably outperforms baselines deployed in existing systems, consistently reducing the number of papers with fewer than requisite bids by 50-75% or more, and is also robust to various real world complexities.

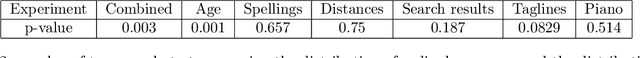

Two-Sample Testing on Ranked Preference Data and the Role of Modeling Assumptions

Jun 21, 2020

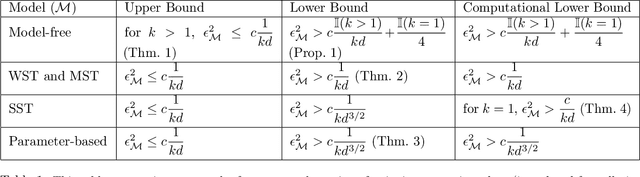

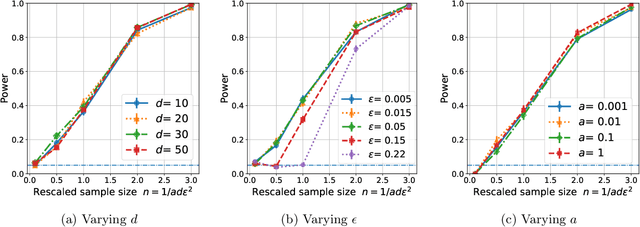

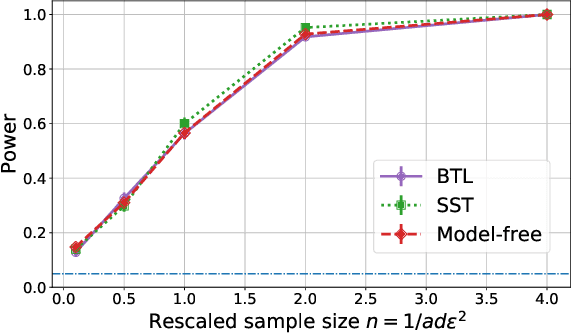

Abstract: A number of applications require two-sample testing on ranked preference data. For instance, in crowdsourcing, there is a long-standing question of whether pairwise comparison data provided by people is distributed similarly to ratings-converted-to-comparisons. Other examples include sports data analysis and peer grading. In this paper, we design two-sample tests for pairwise comparison data and ranking data. For our two-sample test for pairwise comparison data, we establish an upper bound on the sample complexity required to correctly distinguish between the distributions of the two sets of samples. Our test requires essentially no assumptions on the distributions. We then prove complementary lower bounds showing that our results are tight (in the minimax sense) up to constant factors. We investigate the role of modeling assumptions by proving lower bounds for a range of pairwise comparison models (WST, MST, SST, parameter-based such as BTL and Thurstone). We also provide testing algorithms and associated sample complexity bounds for the problem of two-sample testing with partial (or total) ranking data. Furthermore, we empirically evaluate our results via extensive simulations as well as two real-world datasets consisting of pairwise comparisons. By applying our two-sample test on real-world pairwise comparison data, we conclude that ratings and rankings provided by people are indeed distributed differently. On the other hand, our test recognizes no significant difference in the relative performance of European football teams across two seasons. Finally, we apply our two-sample test on a real-world partial and total ranking dataset and find a statistically significant difference in Sushi preferences across demographic divisions based on gender, age and region of residence.

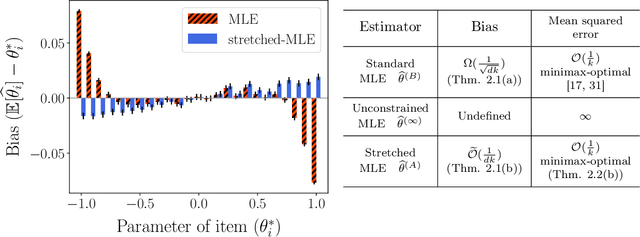

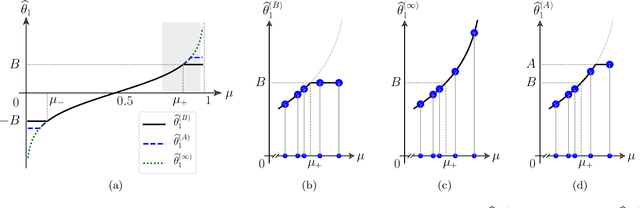

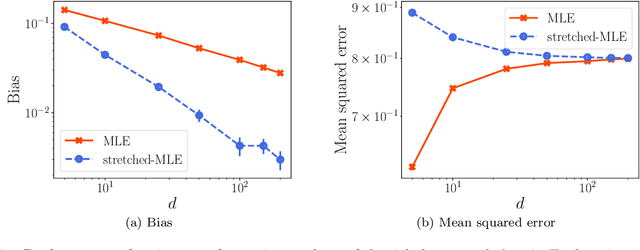

Stretching the Effectiveness of MLE from Accuracy to Bias for Pairwise Comparisons

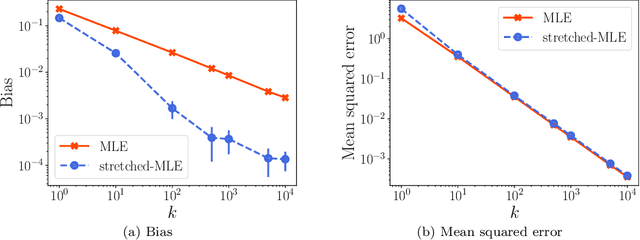

Jun 10, 2019

Abstract: A number of applications (e.g., AI bot tournaments, sports, peer grading, crowdsourcing) use pairwise comparison data and the Bradley-Terry-Luce (BTL) model to evaluate a given collection of items (e.g., bots, teams, students, search results). Past work has shown that under the BTL model, the widely-used maximum-likelihood estimator (MLE) is minimax-optimal in estimating the item parameters, in terms of the mean squared error. However, another important desideratum for designing estimators is fairness. In this work, we consider fairness modeled by the notion of bias in statistics. We show that the MLE incurs a suboptimal rate in terms of bias. We then propose a simple modification to the MLE, which "stretches" the bounding box of the maximum-likelihood optimizer by a small constant factor from the underlying ground truth domain. We show that this simple modification leads to an improved rate in bias, while maintaining minimax-optimality in the mean squared error. In this manner, our proposed class of estimators provably improves fairness represented by bias without loss in accuracy.
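For reference, the BTL model gives each item $i$ a parameter $\theta_i$ and sets the probability that item $i$ beats item $j$ to a logistic function of the difference $\theta_i - \theta_j$. The box-constrained and stretched estimators below are a sketch of the idea in the abstract, not the paper's exact formulation: the bound $B$, the stretch factor $c$, and the log-likelihood $\ell(\theta)$ are notation assumed here, and any identifiability constraint on $\theta$ is omitted.

$$
\Pr(i \text{ beats } j) = \frac{e^{\theta_i}}{e^{\theta_i} + e^{\theta_j}}, \qquad
\hat{\theta}_{\text{MLE}} \in \arg\max_{\|\theta\|_\infty \le B} \ell(\theta), \qquad
\hat{\theta}_{\text{stretched}} \in \arg\max_{\|\theta\|_\infty \le cB} \ell(\theta), \quad c > 1.
$$

In this sketch the true parameters are assumed to lie in the box $\|\theta\|_\infty \le B$, and the stretched estimator optimizes the same likelihood over a slightly larger box.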

Your 2 is My 1, Your 3 is My 9: Handling Arbitrary Miscalibrations in Ratings

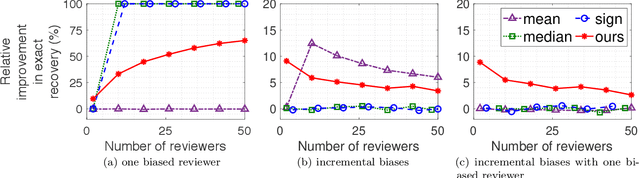

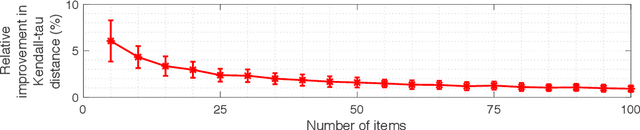

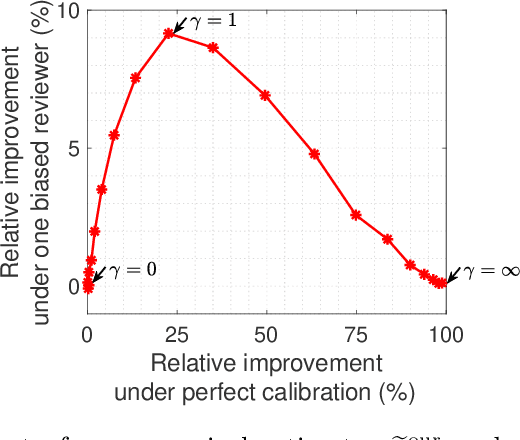

Sep 13, 2018

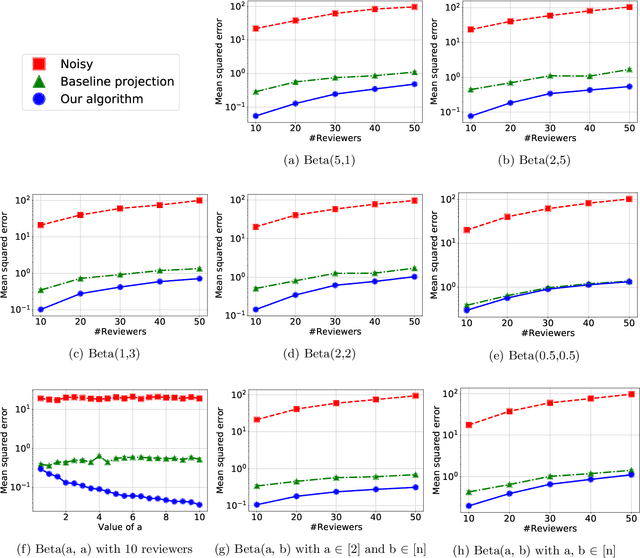

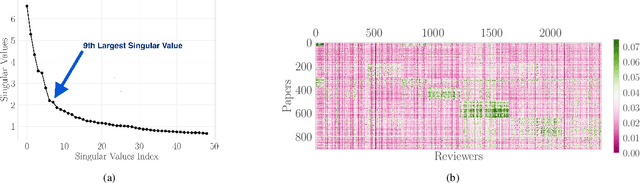

Abstract: Cardinal scores (numeric ratings) collected from people are well known to suffer from miscalibrations. A popular approach to address this issue is to assume simplistic models of miscalibration (such as linear biases) to de-bias the scores. This approach, however, often fares poorly because people's miscalibrations are typically far more complex and not well understood. In the absence of simplifying assumptions on the miscalibration, it is widely believed by the crowdsourcing community that the only useful information in the cardinal scores is the induced ranking. In this paper, inspired by the framework of Stein's shrinkage, empirical Bayes, and the classic two-envelope problem, we contest this widespread belief. Specifically, we consider cardinal scores with arbitrary (or even adversarially chosen) miscalibrations which are only required to be consistent with the induced ranking. We design estimators which, despite making no assumptions on the miscalibration, strictly and uniformly outperform all possible estimators that rely on only the ranking. Our estimators are flexible in that they can be used as a plug-in for a variety of applications, and we provide a proof-of-concept for A/B testing and ranking. Our results thus provide novel insights in the eternal debate between cardinal and ordinal data.

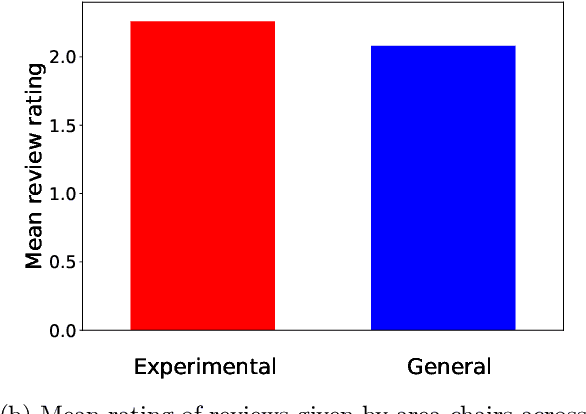

Choosing How to Choose Papers

Aug 27, 2018

Abstract: It is common to see a handful of reviewers reject a highly novel paper, because they view, say, extensive experiments as far more important than novelty, whereas the community as a whole would have embraced the paper. More generally, the disparate mapping of criteria scores to final recommendations by different reviewers is a major source of inconsistency in peer review. In this paper we present a framework, based on $L(p,q)$-norm empirical risk minimization, for learning the community's aggregate mapping. We draw on computational social choice to identify desirable values of $p$ and $q$; specifically, we characterize $p=q=1$ as the only choice that satisfies three natural axiomatic properties. Finally, we implement and apply our approach to reviews from IJCAI 2017.
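As a sketch of what an $L(p,q)$-norm objective can look like, suppose reviewer $i$ supplies criteria-score vectors $x_{ij}$ with overall recommendations $y_{ij}$, and $f$ is a candidate aggregate mapping. This notation is assumed here for illustration and is not given in the abstract.

$$
L_{p,q}(f) = \left( \sum_{i} \left( \sum_{j} \left| y_{ij} - f(x_{ij}) \right|^{p} \right)^{q/p} \right)^{1/q}
$$

With $p = q = 1$ this reduces to the total absolute error over all reviews, $\sum_{i}\sum_{j} \left| y_{ij} - f(x_{ij}) \right|$.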
