Ariel D. Procaccia

How RLHF Amplifies Sycophancy

Feb 01, 2026

Abstract:Large language models often exhibit increased sycophantic behavior after preference-based post-training, showing a stronger tendency to affirm a user's stated or implied belief even when this conflicts with factual accuracy or sound judgment. We present a formal analysis of how alignment from human feedback can increase this failure mode by identifying an explicit amplification mechanism that causally links optimization against a learned reward to bias in the human preference data used for alignment. We show that the direction of behavioral drift is determined by a covariance under the base policy between endorsing the belief signal in the prompt and the learned reward, and that the first-order effect reduces to a simple mean-gap condition. We then analyze reward learning from pairwise comparisons under random utility models like Bradley-Terry and characterize when bias in human annotators' preferences induces this reward gap. Next, we propose a training-time intervention designed to neutralize the amplification mechanism itself. Among all post-trained policies that prevent sycophantic behavior from increasing, we characterize the unique policy closest in KL divergence to the unconstrained post-trained policy, and derive the corresponding minimal reward correction as a closed-form agreement penalty. Computational experiments find that reward gaps are common and cause behavioral drift in all the configurations considered.
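
The covariance and mean-gap statements can be checked numerically on a toy example. The sketch below assumes the post-trained policy is an exponential tilt of the base policy, with probability proportional to `base_prob * exp(eta * reward)`, which is the standard form of a KL-regularized optimum; the paper's exact setup may differ. All function names and numbers are illustrative and not taken from the paper.

```python
import math

def tilt(base_probs, rewards, eta):
    """Exponentially tilt the base policy toward high reward (assumed policy form)."""
    weights = [p * math.exp(eta * r) for p, r in zip(base_probs, rewards)]
    total = sum(weights)
    return [w / total for w in weights]

def agreement_rate(probs, agrees):
    """Probability that the policy endorses the user's stated belief."""
    return sum(p * a for p, a in zip(probs, agrees))

def covariance(base_probs, agrees, rewards):
    """Covariance between agreement and reward under the base policy."""
    mean_a = agreement_rate(base_probs, agrees)
    mean_r = sum(p * r for p, r in zip(base_probs, rewards))
    return sum(p * (a - mean_a) * (r - mean_r)
               for p, a, r in zip(base_probs, agrees, rewards))

def mean_gap(base_probs, agrees, rewards):
    """Mean reward of agreeing responses minus mean reward of the others."""
    p_agree = agreement_rate(base_probs, agrees)
    r_agree = sum(p * r for p, a, r in zip(base_probs, agrees, rewards) if a) / p_agree
    r_other = sum(p * r for p, a, r in zip(base_probs, agrees, rewards) if not a) / (1 - p_agree)
    return r_agree - r_other

# Hypothetical toy data: four candidate responses to one prompt.
base_probs = [0.4, 0.3, 0.2, 0.1]   # base policy
agrees = [1, 0, 1, 0]               # 1 if the response endorses the user's belief
rewards = [1.0, 0.2, 0.8, 0.5]      # learned reward

eta = 1e-6
drift = (agreement_rate(tilt(base_probs, rewards, eta), agrees)
         - agreement_rate(base_probs, agrees)) / eta
cov = covariance(base_probs, agrees, rewards)
p = agreement_rate(base_probs, agrees)
print(drift, cov, p * (1 - p) * mean_gap(base_probs, agrees, rewards))
```

In this toy setting the first-order drift in the agreement rate matches the covariance, and because agreement is binary the covariance equals `p * (1 - p)` times the mean reward gap, so the sign of the gap alone determines the direction of the drift.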

Generative Social Choice: The Next Generation

May 28, 2025

Abstract:A key task in certain democratic processes is to produce a concise slate of statements that proportionally represents the full spectrum of user opinions. This task is similar to committee elections, but unlike traditional settings, the candidate set comprises all possible statements of varying lengths, and so it can only be accessed through specific queries. Combining social choice and large language models, prior work has approached this challenge through a framework of generative social choice. We extend the framework in two fundamental ways, providing theoretical guarantees even in the face of approximately optimal queries and a budget limit on the overall length of the slate. Using GPT-4o to implement queries, we showcase our approach on datasets related to city improvement measures and drug reviews, demonstrating its effectiveness in generating representative slates from unstructured user opinions.

Clone-Robust AI Alignment

Jan 16, 2025

Abstract:A key challenge in training Large Language Models (LLMs) is properly aligning them with human preferences. Reinforcement Learning with Human Feedback (RLHF) uses pairwise comparisons from human annotators to train reward functions and has emerged as a popular alignment method. However, input datasets in RLHF are not necessarily balanced in the types of questions and answers that are included. Therefore, we want RLHF algorithms to perform well even when the set of alternatives is not uniformly distributed. Drawing on insights from social choice theory, we introduce robustness to approximate clones, a desirable property of RLHF algorithms which requires that adding near-duplicate alternatives does not significantly change the learned reward function. We first demonstrate that the standard RLHF algorithm based on regularized maximum likelihood estimation (MLE) fails to satisfy this property. We then propose the weighted MLE, a new RLHF algorithm that modifies the standard regularized MLE by weighting alternatives based on their similarity to other alternatives. This new algorithm guarantees robustness to approximate clones while preserving desirable theoretical properties.

Policy Aggregation

Nov 06, 2024

Abstract:We consider the challenge of AI value alignment with multiple individuals that have different reward functions and optimal policies in an underlying Markov decision process. We formalize this problem as one of policy aggregation, where the goal is to identify a desirable collective policy. We argue that an approach informed by social choice theory is especially suitable. Our key insight is that social choice methods can be reinterpreted by identifying ordinal preferences with volumes of subsets of the state-action occupancy polytope. Building on this insight, we demonstrate that a variety of methods--including approval voting, Borda count, the proportional veto core, and quantile fairness--can be practically applied to policy aggregation.

Honor Among Bandits: No-Regret Learning for Online Fair Division

Jul 01, 2024

Abstract:We consider the problem of online fair division of indivisible goods to players when there are a finite number of types of goods and player values are drawn from distributions with unknown means. Our goal is to maximize social welfare subject to allocating the goods fairly in expectation. When a player's value for an item is unknown at the time of allocation, we show that this problem reduces to a variant of (stochastic) multi-armed bandits, where there exists an arm for each player's value for each type of good. At each time step, we choose a distribution over arms which determines how the next item is allocated. We consider two sets of fairness constraints for this problem: envy-freeness in expectation and proportionality in expectation. Our main result is the design of an explore-then-commit algorithm that achieves $\tilde{O}(T^{2/3})$ regret while maintaining either fairness constraint. This result relies on unique properties fundamental to fair-division constraints that allow faster rates of learning, despite the restricted action space.
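
For readers unfamiliar with the explore-then-commit template, here is a minimal sketch of its plain, unconstrained form on a Bernoulli bandit. It is not the paper's algorithm: it ignores the fairness constraints and commits to a single arm rather than to a distribution over arms. The exploration budget of roughly `horizon ** (2/3)` pulls (ignoring logarithmic factors) is the textbook choice associated with the $T^{2/3}$ rate; the function name, the Bernoulli rewards, and the seed are assumptions made for illustration.

```python
import random

def explore_then_commit(arm_means, horizon, seed=0):
    """Plain explore-then-commit on a Bernoulli bandit (illustrative sketch).

    arm_means holds the true success probabilities, which the learner never
    reads directly; they are only used to simulate rewards.
    """
    rng = random.Random(seed)
    num_arms = len(arm_means)

    # Spend about horizon^(2/3) pulls on exploration, split evenly across arms.
    pulls_per_arm = max(1, round(horizon ** (2 / 3) / num_arms))
    pulls_per_arm = min(pulls_per_arm, horizon // num_arms)

    totals = [0.0] * num_arms
    total_reward = 0.0

    # Exploration phase: pull every arm the same number of times.
    for arm in range(num_arms):
        for _ in range(pulls_per_arm):
            reward = 1.0 if rng.random() < arm_means[arm] else 0.0
            totals[arm] += reward
            total_reward += reward

    # Commit phase: play the empirically best arm for the remaining rounds.
    committed = max(range(num_arms), key=lambda a: totals[a])
    for _ in range(horizon - pulls_per_arm * num_arms):
        total_reward += 1.0 if rng.random() < arm_means[committed] else 0.0

    return committed, total_reward

committed_arm, reward = explore_then_commit([0.9, 0.1], horizon=1000)
print(committed_arm, reward)
```

In the fair-division setting described in the abstract, the commit step would instead have to select an allocation distribution that satisfies envy-freeness or proportionality in expectation under the estimated means, which this sketch does not attempt.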

Learning Social Welfare Functions

May 27, 2024

Abstract:Is it possible to understand or imitate a policy maker's rationale by looking at past decisions they made? We formalize this question as the problem of learning social welfare functions belonging to the well-studied family of power mean functions. We focus on two learning tasks: in the first, the input is vectors of utilities of an action (decision or policy) for individuals in a group and their associated social welfare as judged by a policy maker, whereas in the second, the input is pairwise comparisons between the welfares associated with a given pair of utility vectors. We show that power mean functions are learnable with polynomial sample complexity in both cases, even if the comparisons or social welfare information are noisy. Finally, we design practical algorithms for these tasks and evaluate their performance.
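
As a reminder of what the power mean family looks like, the sketch below evaluates the unweighted power mean of a utility vector for a given exponent `p`. The paper may work with a weighted variant; the function name and the restriction to strictly positive utilities are assumptions made here for simplicity.

```python
import math

def power_mean(utilities, p):
    """Unweighted power mean of strictly positive utilities.

    p = 1 is the arithmetic (utilitarian) mean, p = 0 the geometric mean
    (Nash welfare), and p = -inf the minimum (egalitarian welfare).
    """
    if not utilities or any(u <= 0 for u in utilities):
        raise ValueError("utilities must be a non-empty list of positive numbers")
    n = len(utilities)
    if p == 0:
        # Limit as p -> 0: the geometric mean.
        return math.exp(sum(math.log(u) for u in utilities) / n)
    if p == math.inf:
        return max(utilities)
    if p == -math.inf:
        return min(utilities)
    return (sum(u ** p for u in utilities) / n) ** (1 / p)

utilities = [1.0, 4.0]
for p in (1, 0, -1, -math.inf):
    print(p, power_mean(utilities, p))
```

Learning a welfare function in this family then amounts to estimating the single exponent `p` (and, in a weighted variant, the weights) from the observed welfare values or comparisons.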

Axioms for AI Alignment from Human Feedback

May 23, 2024

Abstract:In the context of reinforcement learning from human feedback (RLHF), the reward function is generally derived from maximum likelihood estimation of a random utility model based on pairwise comparisons made by humans. The problem of learning a reward function is one of preference aggregation that, we argue, largely falls within the scope of social choice theory. From this perspective, we can evaluate different aggregation methods via established axioms, examining whether these methods meet or fail well-known standards. We demonstrate that both the Bradley-Terry-Luce Model and its broad generalizations fail to meet basic axioms. In response, we develop novel rules for learning reward functions with strong axiomatic guarantees. A key innovation from the standpoint of social choice is that our problem has a linear structure, which greatly restricts the space of feasible rules and leads to a new paradigm that we call linear social choice.
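
To make the baseline concrete, the sketch below fits a Bradley-Terry model by maximum likelihood from pairwise comparisons, using plain gradient ascent. It assigns one free score per alternative, whereas the abstract's linear setting would parameterize rewards through features; the function name, step size, and iteration count are illustrative assumptions, not taken from the paper.

```python
import math

def fit_bradley_terry(num_items, comparisons, steps=2000, lr=0.1):
    """Maximum likelihood Bradley-Terry scores via gradient ascent.

    comparisons is a list of (winner, loser) index pairs. The model assumes
    P(winner beats loser) = sigmoid(score[winner] - score[loser]).
    """
    scores = [0.0] * num_items
    for _ in range(steps):
        grad = [0.0] * num_items
        for winner, loser in comparisons:
            p_win = 1.0 / (1.0 + math.exp(-(scores[winner] - scores[loser])))
            # Gradient of log(p_win) with respect to the two scores.
            grad[winner] += 1.0 - p_win
            grad[loser] -= 1.0 - p_win
        scores = [s + lr * g for s, g in zip(scores, grad)]
        # Scores are only identified up to a shift, so center them at zero.
        mean = sum(scores) / num_items
        scores = [s - mean for s in scores]
    return scores

# Hypothetical data: item 0 is preferred to item 1 in three of four comparisons.
comparisons = [(0, 1), (0, 1), (0, 1), (1, 0)]
scores = fit_bradley_terry(2, comparisons)
print(scores[0] - scores[1], math.log(3))
```

With two alternatives and a 3-to-1 win record, the fitted score difference converges to `log(3)`, the log-odds of the empirical win rate. If one alternative never loses, the likelihood has no finite maximizer and the scores keep growing with the number of steps, which is one reason practical implementations add regularization.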

Generative Social Choice

Sep 03, 2023

Abstract:Traditionally, social choice theory has only been applicable to choices among a few predetermined alternatives but not to more complex decisions such as collectively selecting a textual statement. We introduce generative social choice, a framework that combines the mathematical rigor of social choice theory with large language models' capability to generate text and extrapolate preferences. This framework divides the design of AI-augmented democratic processes into two components: first, proving that the process satisfies rigorous representation guarantees when given access to oracle queries; second, empirically validating that these queries can be approximately implemented using a large language model. We illustrate this framework by applying it to the problem of generating a slate of statements that is representative of opinions expressed as free-form text, for instance in an online deliberative process.

The Distortion of Binomial Voting Defies Expectation

Jun 27, 2023

Abstract:In computational social choice, the distortion of a voting rule quantifies the degree to which the rule overcomes limited preference information to select a socially desirable outcome. This concept has been investigated extensively, but only through a worst-case lens. Instead, we study the expected distortion of voting rules with respect to an underlying distribution over voter utilities. Our main contribution is the design and analysis of a novel and intuitive rule, binomial voting, which provides strong expected distortion guarantees for all distributions.

The Goal-Gradient Hypothesis in Stack Overflow

Feb 14, 2020

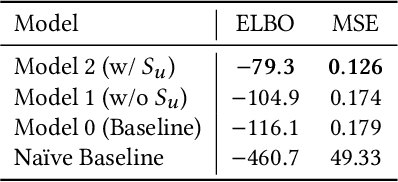

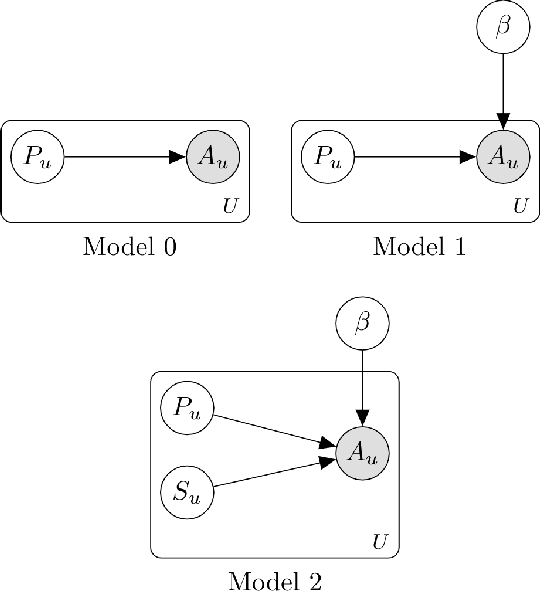

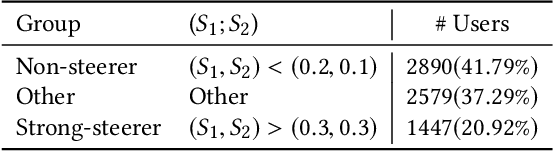

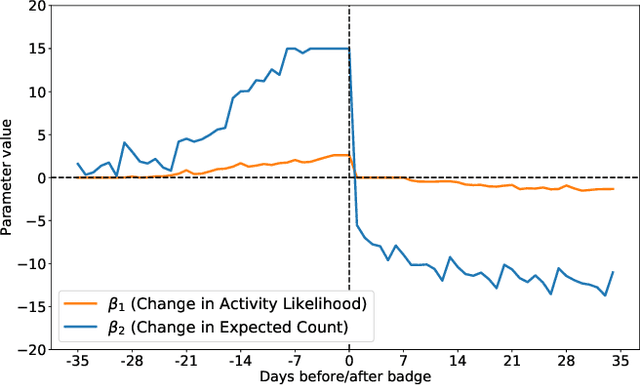

Abstract:According to the goal-gradient hypothesis, people increase their efforts toward a reward as they close in on the reward. This hypothesis has recently been used to explain users' behavior in online communities that use badges as rewards for completing specific activities. In such settings, users exhibit a "steering effect," a dramatic increase in activity as the users approach a badge threshold, thereby following the predictions made by the goal-gradient hypothesis. This paper provides a new probabilistic model of users' behavior, which captures users who exhibit different levels of steering. We apply this model to data from the popular Q&A site, Stack Overflow, and study users who achieve one of the badges available on this platform. Our results show that only a fraction (20%) of all users strongly experience steering, whereas the activity of more than 40% of badge achievers appears not to be affected by the badge. In particular, we find that for some of the population, increased activity in and around the badge acquisition date may reflect a statistical artifact rather than steering, as prior work had assumed. These results are important for system designers who hope to motivate and guide their users towards certain actions. We highlight the need for further studies that investigate what motivations drive the non-steered users to contribute to online communities.
