Sivaraman Balakrishnan

Carnegie Mellon University

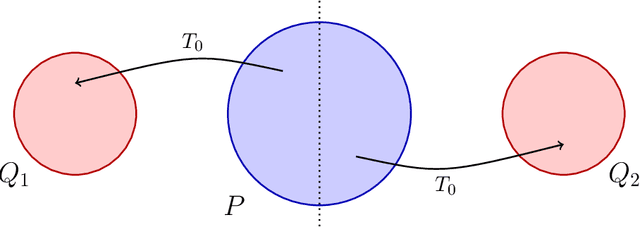

On Nonasymptotic Confidence Intervals for Treatment Effects in Randomized Experiments

Jan 16, 2026

Abstract: We study nonasymptotic (finite-sample) confidence intervals for treatment effects in randomized experiments. In the existing literature, the effective sample sizes of nonasymptotic confidence intervals tend to be looser than the corresponding central-limit-theorem-based confidence intervals by a factor depending on the square root of the propensity score. We show that this performance gap can be closed, designing nonasymptotic confidence intervals that have the same effective sample size as their asymptotic counterparts. Our approach involves systematic exploitation of negative dependence or variance adaptivity (or both). We also show that the nonasymptotic rates that we achieve are unimprovable in an information-theoretic sense.

Panprediction: Optimal Predictions for Any Downstream Task and Loss

Oct 31, 2025

Abstract: Supervised learning is classically formulated as training a model to minimize a fixed loss function over a fixed distribution, or task. However, an emerging paradigm instead views model training as extracting enough information from data so that the model can be used to minimize many losses on many downstream tasks. We formalize a mathematical framework for this paradigm, which we call panprediction, and study its statistical complexity. Formally, panprediction generalizes omniprediction and sits upstream from multi-group learning, which respectively focus on predictions that generalize to many downstream losses or many downstream tasks, but not both. Concretely, we design algorithms that learn deterministic and randomized panpredictors with $\tilde{O}(1/\varepsilon^3)$ and $\tilde{O}(1/\varepsilon^2)$ samples, respectively. Our results demonstrate that under mild assumptions, simultaneously minimizing infinitely many losses on infinitely many tasks can be as statistically easy as minimizing one loss on one task. Along the way, we improve the best known sample complexity guarantee of deterministic omniprediction by a factor of $1/\varepsilon$, and match all other known sample complexity guarantees of omniprediction and multi-group learning. Our key technical ingredient is a nearly lossless reduction from panprediction to a statistically efficient notion of calibration, called step calibration.

Statistical Inference for Optimal Transport Maps: Recent Advances and Perspectives

Jun 23, 2025

Abstract: In many applications of optimal transport (OT), the object of primary interest is the optimal transport map. This map rearranges mass from one probability distribution to another in the most efficient way possible by minimizing a specified cost. In this paper we review recent advances in estimating and developing limit theorems for the OT map, using samples from the underlying distributions. We also review parallel lines of work that establish similar results for special cases and variants of the basic OT setup. We conclude with a discussion of key directions for future research with the goal of providing practitioners with reliable inferential tools.

Stability Bounds for Smooth Optimal Transport Maps and their Statistical Implications

Feb 17, 2025

Abstract: We study estimators of the optimal transport (OT) map between two probability distributions. We focus on plugin estimators derived from the OT map between estimates of the underlying distributions. We develop novel stability bounds for OT maps which generalize those in past work, and allow us to reduce the problem of optimally estimating the transport map to that of optimally estimating densities in the Wasserstein distance. In contrast, past work provided a partial connection between these problems and relied on regularity theory for the Monge-Ampère equation to bridge the gap, a step which required unnatural assumptions to obtain sharp guarantees. We also provide some new insights into the connections between stability bounds which arise in the analysis of plugin estimators and growth bounds for the semi-dual functional which arise in the analysis of Brenier potential-based estimators of the transport map. We illustrate the applicability of our new stability bounds by revisiting the smooth setting studied by Manole et al., analyzing two of their estimators under more general conditions. Critically, our bounds do not require smoothness or boundedness assumptions on the underlying measures. As an illustrative application, we develop and analyze a novel tuning parameter-free estimator for the OT map between two strongly log-concave distributions.

Double Cross-fit Doubly Robust Estimators: Beyond Series Regression

Mar 22, 2024

Abstract: Doubly robust estimators with cross-fitting have gained popularity in causal inference due to their favorable structure-agnostic error guarantees. However, when additional structure, such as Hölder smoothness, is available, more accurate "double cross-fit doubly robust" (DCDR) estimators can be constructed by splitting the training data and undersmoothing nuisance function estimators on independent samples. We study a DCDR estimator of the Expected Conditional Covariance, a functional of interest in causal inference and conditional independence testing, and derive a series of increasingly powerful results with progressively stronger assumptions. We first provide a structure-agnostic error analysis for the DCDR estimator with no assumptions on the nuisance functions or their estimators. Then, assuming the nuisance functions are Hölder smooth, but without assuming knowledge of the true smoothness level or the covariate density, we establish that DCDR estimators with several linear smoothers are semiparametric efficient under minimal conditions and achieve fast convergence rates in the non-$\sqrt{n}$ regime. When the covariate density and smoothnesses are known, we propose a minimax rate-optimal DCDR estimator based on undersmoothed kernel regression. Moreover, we show that an undersmoothed DCDR estimator satisfies a slower-than-$\sqrt{n}$ central limit theorem, and that inference is possible even in the non-$\sqrt{n}$ regime. Finally, we support our theoretical results with simulations, providing intuition for double cross-fitting and undersmoothing, demonstrating where our estimator achieves semiparametric efficiency while the usual "single cross-fit" estimator fails, and illustrating asymptotic normality for the undersmoothed DCDR estimator.

Semi-Supervised U-statistics

Mar 09, 2024

Abstract: Semi-supervised datasets are ubiquitous across diverse domains where obtaining fully labeled data is costly or time-consuming. The prevalence of such datasets has consistently driven the demand for new tools and methods that exploit the potential of unlabeled data. Responding to this demand, we introduce semi-supervised U-statistics enhanced by the abundance of unlabeled data, and investigate their statistical properties. We show that the proposed approach is asymptotically Normal and exhibits notable efficiency gains over classical U-statistics by effectively integrating various powerful prediction tools into the framework. To understand the fundamental difficulty of the problem, we derive minimax lower bounds in semi-supervised settings and showcase that our procedure is semi-parametrically efficient under regularity conditions. Moreover, tailored to bivariate kernels, we propose a refined approach that outperforms the classical U-statistic across all degeneracy regimes, and demonstrate its optimality properties. Simulation studies are conducted to corroborate our findings and to further demonstrate our framework.

Complementary Benefits of Contrastive Learning and Self-Training Under Distribution Shift

Dec 06, 2023

Abstract: Self-training and contrastive learning have emerged as leading techniques for incorporating unlabeled data, both under distribution shift (unsupervised domain adaptation) and when it is absent (semi-supervised learning). However, despite the popularity and compatibility of these techniques, their efficacy in combination remains unexplored. In this paper, we undertake a systematic empirical investigation of this combination, finding that (i) in domain adaptation settings, self-training and contrastive learning offer significant complementary gains; and (ii) in semi-supervised learning settings, surprisingly, the benefits are not synergistic. Across eight distribution shift datasets (e.g., BREEDs, WILDS), we demonstrate that the combined method obtains 3-8% higher accuracy than either approach independently. We then theoretically analyze these techniques in a simplified model of distribution shift, demonstrating scenarios under which the features produced by contrastive learning can yield a good initialization for self-training to further amplify gains and achieve optimal performance, even when either method alone would fail.

Online Label Shift: Optimal Dynamic Regret meets Practical Algorithms

May 31, 2023

Abstract: This paper focuses on supervised and unsupervised online label shift, where the class marginals $Q(y)$ vary but the class-conditionals $Q(x|y)$ remain invariant. In the unsupervised setting, our goal is to adapt a learner, trained on some offline labeled data, to changing label distributions given unlabeled online data. In the supervised setting, we must both learn a classifier and adapt to the dynamically evolving class marginals given only labeled online data. We develop novel algorithms that reduce the adaptation problem to online regression and guarantee optimal dynamic regret without any prior knowledge of the extent of drift in the label distribution. Our solution is based on bootstrapping the estimates of online regression oracles that track the drifting proportions. Experiments across numerous simulated and real-world online label shift scenarios demonstrate the superior performance of our proposed approaches, often achieving 1-3% improvement in accuracy while being sample and computationally efficient. Code is publicly available at https://github.com/acmi-lab/OnlineLabelShift.

The Fundamental Limits of Structure-Agnostic Functional Estimation

May 06, 2023

Abstract: Many recent developments in causal inference, and functional estimation problems more generally, have been motivated by the fact that classical one-step (first-order) debiasing methods, or their more recent sample-split double machine-learning avatars, can outperform plugin estimators under surprisingly weak conditions. These first-order corrections improve on plugin estimators in a black-box fashion, and consequently are often used in conjunction with powerful off-the-shelf estimation methods. These first-order methods are, however, provably suboptimal in a minimax sense for functional estimation when the nuisance functions live in Hölder-type function spaces. This suboptimality of first-order debiasing has motivated the development of "higher-order" debiasing methods. The resulting estimators are, in some cases, provably optimal over Hölder-type spaces, but both the estimators which are minimax-optimal and their analyses are crucially tied to properties of the underlying function space. In this paper we investigate the fundamental limits of structure-agnostic functional estimation, where relatively weak conditions are placed on the underlying nuisance functions. We show that there is a strong sense in which existing first-order methods are optimal. We achieve this goal by providing a formalization of the problem of functional estimation with black-box nuisance function estimates, and deriving minimax lower bounds for this problem. Our results highlight some clear tradeoffs in functional estimation: if we wish to remain agnostic to the underlying nuisance function spaces, impose only high-level rate conditions, and maintain compatibility with black-box nuisance estimators, then first-order methods are optimal. When we have an understanding of the structure of the underlying nuisance functions, then carefully constructed higher-order estimators can outperform first-order estimators.

RLSbench: Domain Adaptation Under Relaxed Label Shift

Feb 06, 2023

Abstract: Despite the emergence of principled methods for domain adaptation under label shift, the sensitivity of these methods to minor shifts in the class conditional distributions remains precariously underexplored. Meanwhile, popular deep domain adaptation heuristics tend to falter when faced with shifts in label proportions. While several papers attempt to adapt these heuristics to accommodate shifts in label proportions, inconsistencies in evaluation criteria, datasets, and baselines make it hard to assess the state of the art. In this paper, we introduce RLSbench, a large-scale relaxed label shift benchmark, consisting of >500 distribution shift pairs that draw on 14 datasets across vision, tabular, and language modalities and compose them with varying label proportions. First, we evaluate 13 popular domain adaptation methods, demonstrating more widespread failures under label proportion shifts than were previously known. Next, we develop an effective two-step meta-algorithm that is compatible with most deep domain adaptation heuristics: (i) pseudo-balance the data at each epoch; and (ii) adjust the final classifier with (an estimate of) the target label distribution. The meta-algorithm improves existing domain adaptation heuristics, often by 2-10% accuracy points under extreme label proportion shifts, and has little (i.e., <0.5%) effect when label proportions do not shift. We hope that these findings and the availability of RLSbench will encourage researchers to rigorously evaluate proposed methods in relaxed label shift settings. Code is publicly available at https://github.com/acmi-lab/RLSbench.
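
Step (ii) of the meta-algorithm above, adjusting a classifier with an estimate of the target label distribution, can be illustrated with the standard prior-shift correction: multiply each predicted class probability by the ratio of target to source class priors, then renormalize. The following is a minimal sketch under that assumption. The function name `adjust_for_label_shift` and the toy numbers are hypothetical and are not taken from the RLSbench code.

```python
import numpy as np

def adjust_for_label_shift(source_probs, source_prior, target_prior):
    """Re-weight class probabilities from a source-trained classifier.

    Assumes pure label shift: p_target(y | x) is proportional to
    p_source(y | x) * target_prior[y] / source_prior[y].
    """
    source_probs = np.asarray(source_probs, dtype=float)
    # Per-class importance weights (hypothetical helper, not RLSbench's API).
    weights = np.asarray(target_prior, dtype=float) / np.asarray(source_prior, dtype=float)
    unnormalized = source_probs * weights  # broadcasts over rows (one row per example)
    return unnormalized / unnormalized.sum(axis=1, keepdims=True)

# Toy example: two classes, balanced source prior, skewed target prior.
probs = np.array([[0.5, 0.5],
                  [0.9, 0.1]])
adjusted = adjust_for_label_shift(probs, source_prior=[0.5, 0.5], target_prior=[0.2, 0.8])
```

In this toy case, an example the source classifier finds ambiguous is pulled toward the target prior, while a confident prediction keeps its top class but with reduced margin. In practice the target prior is itself estimated from unlabeled target data, which this sketch does not cover.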
