Mitigating Shortcuts in Language Models with Soft Label Encoding

Sep 17, 2023 · Zirui He, Huiqi Deng, Haiyan Zhao, Ninghao Liu, Mengnan Du

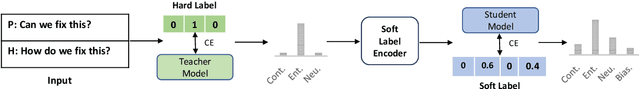

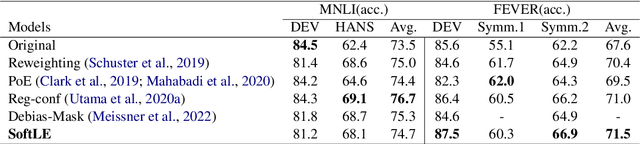

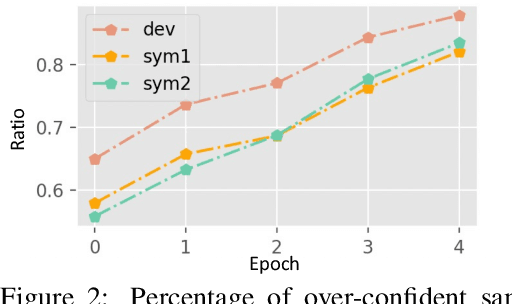

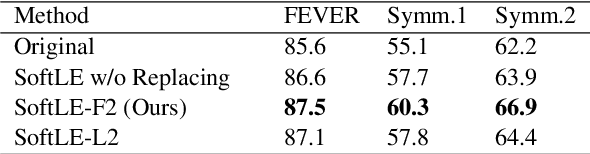

Recent research has shown that large language models rely on spurious correlations in the data for natural language understanding (NLU) tasks. In this work, we aim to answer the following research question: Can we reduce spurious correlations by modifying the ground truth labels of the training data? Specifically, we propose a simple yet effective debiasing framework, named Soft Label Encoding (SoftLE). We first train a teacher model with hard labels to determine each sample's degree of relying on shortcuts. We then add one dummy class to encode the shortcut degree, which is used to smooth other dimensions in the ground truth label to generate soft labels. This new ground truth label is used to train a more robust student model. Extensive experiments on two NLU benchmark tasks demonstrate that SoftLE significantly improves out-of-distribution generalization while maintaining satisfactory in-distribution accuracy.
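
As a rough illustration of the label construction described above, the sketch below appends one dummy class to a one-hot label and moves probability mass from the true class to the dummy class according to a per-sample shortcut degree. The function name `soft_label`, the assumption that the shortcut degree lies in [0, 1], and the exact smoothing rule are illustrative assumptions rather than the paper's formulation; in the paper the degree is derived from a trained teacher model, which is not reproduced here.

```python
def soft_label(true_class: int, num_classes: int, shortcut_degree: float) -> list:
    """Build a (num_classes + 1)-dimensional soft label.

    The last slot is the dummy class. Assumption: shortcut_degree is a
    value in [0, 1] supplied by some teacher model (hypothetical here).
    """
    if not 0 <= true_class < num_classes:
        raise ValueError("true_class must be in [0, num_classes)")
    if not 0.0 <= shortcut_degree <= 1.0:
        raise ValueError("shortcut_degree must be in [0, 1]")
    label = [0.0] * (num_classes + 1)
    # Smooth the ground-truth dimension by the shortcut degree.
    label[true_class] = 1.0 - shortcut_degree
    # The dummy class absorbs the mass removed from the true class.
    label[num_classes] = shortcut_degree
    return label


# A 3-class sample that a (hypothetical) teacher scored as 20% shortcut-driven.
example = soft_label(true_class=1, num_classes=3, shortcut_degree=0.2)
```

Under this reading, a sample with shortcut degree 0 keeps its ordinary one-hot label, while a heavily shortcut-driven sample contributes less supervision to its true class when the student is trained.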

Explainability for Large Language Models: A Survey

Sep 17, 2023 · Haiyan Zhao, Hanjie Chen, Fan Yang, Ninghao Liu, Huiqi Deng, Hengyi Cai, Shuaiqiang Wang, Dawei Yin, Mengnan Du

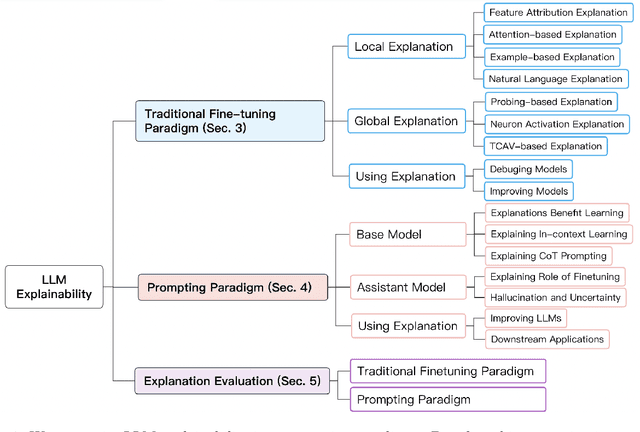

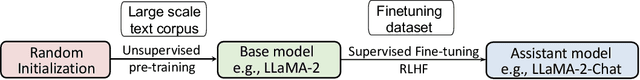

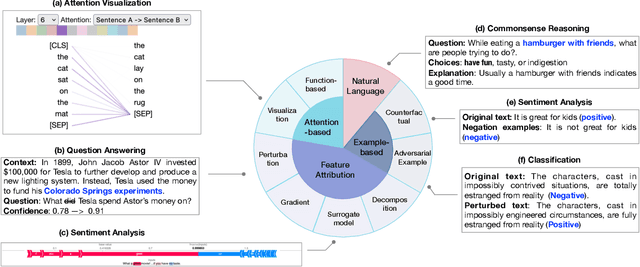

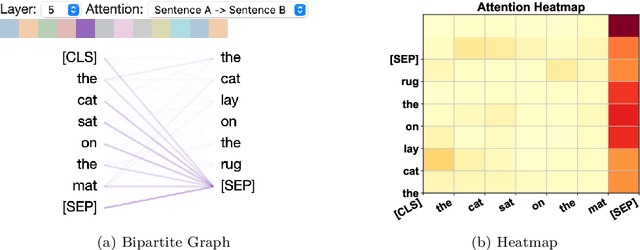

Large language models (LLMs) have demonstrated impressive capabilities in natural language processing. However, their internal mechanisms are still unclear, and this lack of transparency poses unwanted risks for downstream applications. Therefore, understanding and explaining these models is crucial for elucidating their behaviors, limitations, and social impacts. In this paper, we introduce a taxonomy of explainability techniques and provide a structured overview of methods for explaining Transformer-based language models. We categorize techniques based on the training paradigms of LLMs: the traditional fine-tuning-based paradigm and the prompting-based paradigm. For each paradigm, we summarize the goals and dominant approaches for generating local explanations of individual predictions and global explanations of overall model knowledge. We also discuss metrics for evaluating generated explanations and how explanations can be leveraged to debug models and improve performance. Lastly, we examine key challenges and emerging opportunities for explanation techniques in the era of LLMs in comparison to conventional machine learning models.

Adaptive Priority Reweighing for Generalizing Fairness Improvement

Sep 15, 2023 · Zhihao Hu, Yiran Xu, Mengnan Du, Jindong Gu, Xinmei Tian, Fengxiang He

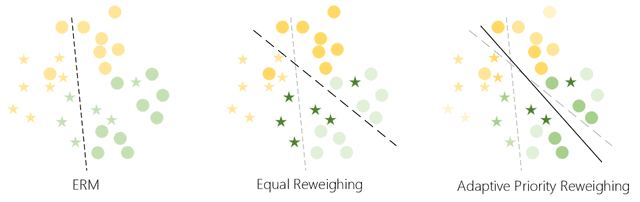

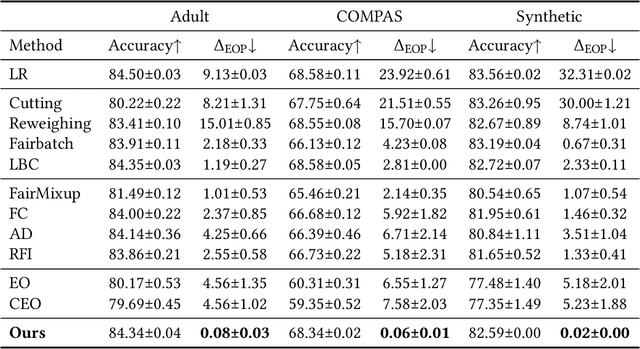

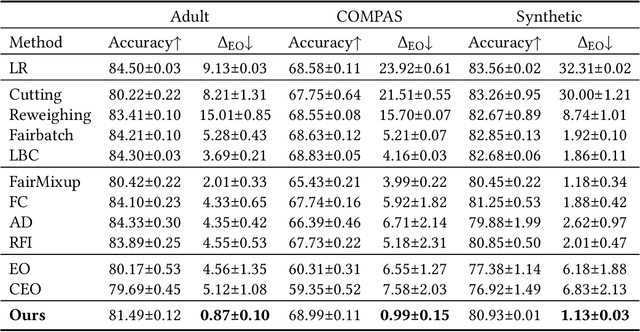

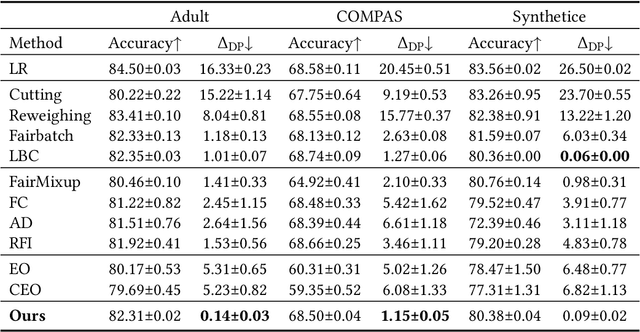

With the increasing penetration of machine learning applications in critical decision-making areas, calls for algorithmic fairness are more prominent. Although various methods have been proposed to improve algorithmic fairness through learning with fairness constraints, their performance does not generalize well to the test set. A fair algorithm with strong performance and better generalizability is needed. This paper proposes a novel adaptive reweighing method to eliminate the impact of distribution shifts between training and test data on model generalizability. Most previous reweighing methods assign a unified weight to each (sub)group. In contrast, our method granularly models the distance from the sample predictions to the decision boundary. Our adaptive reweighing method prioritizes samples closer to the decision boundary and assigns them a higher weight to improve the generalizability of fair classifiers. Extensive experiments are performed to validate the generalizability of our adaptive priority reweighing method for accuracy and fairness measures (i.e., equal opportunity, equalized odds, and demographic parity) in tabular benchmarks. We also highlight the performance of our method in improving the fairness of language and vision models. The code is available at https://github.com/che2198/APW.
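
The prioritization idea in the abstract can be sketched as follows: samples whose predicted probability lies closer to the decision boundary receive a larger weight. This is only a minimal sketch under stated assumptions: a binary classifier with its boundary at p = 0.5, an exponential decay with a hypothetical rate `alpha`, and normalization to a mean weight of 1. The function name `boundary_priority_weights` is invented for illustration, and the sketch omits the per-group fairness handling that the actual method (see the linked repository) would need.

```python
import math


def boundary_priority_weights(probs, alpha=2.0):
    """Weight samples by how close their predicted probability is to 0.5.

    Assumptions (not from the paper): binary classification, decision
    boundary at p = 0.5, exponential decay with rate `alpha`.
    Returned weights are normalized so that their mean is 1.
    """
    if not probs:
        return []
    # Smaller distance to the boundary gives a larger raw weight.
    raw = [math.exp(-alpha * abs(p - 0.5)) for p in probs]
    mean_raw = sum(raw) / len(raw)
    return [r / mean_raw for r in raw]


# Made-up predicted probabilities for four samples.
weights = boundary_priority_weights([0.52, 0.95, 0.10, 0.45])
```

In this toy input, the first sample (p = 0.52) sits nearest the boundary and gets the largest weight, while the second (p = 0.95) is the most confidently classified and gets the smallest.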

A Survey on Fairness in Large Language Models

Aug 20, 2023 · Yingji Li, Mengnan Du, Rui Song, Xin Wang, Ying Wang

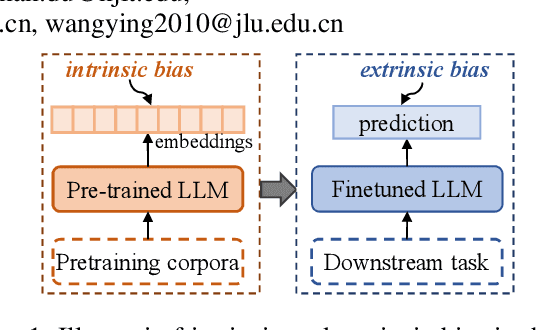

Large language models (LLMs) have shown strong performance and promising development prospects and are widely deployed in the real world. However, LLMs can capture social biases from unprocessed training data and propagate the biases to downstream tasks. Unfair LLM systems have undesirable social impacts and potential harms. In this paper, we provide a comprehensive review of related research on fairness in LLMs. First, for medium-scale LLMs, we introduce evaluation metrics and debiasing methods from the perspectives of intrinsic bias and extrinsic bias, respectively. Then, for large-scale LLMs, we introduce recent fairness research, including fairness evaluation, reasons for bias, and debiasing methods. Finally, we discuss and provide insight into the challenges and future directions for the development of fairness in LLMs.

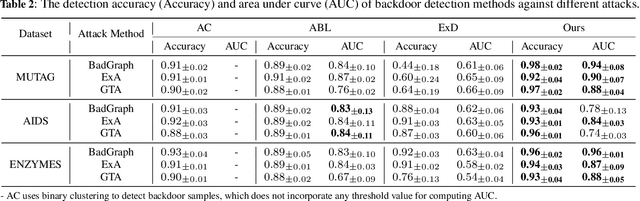

XGBD: Explanation-Guided Graph Backdoor Detection

Aug 08, 2023 · Zihan Guan, Mengnan Du, Ninghao Liu

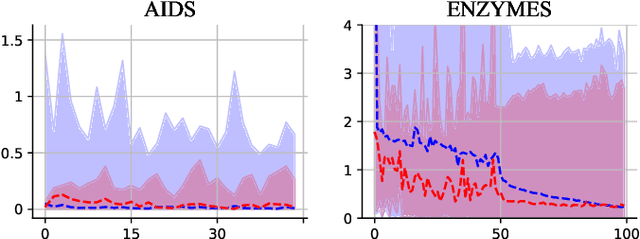

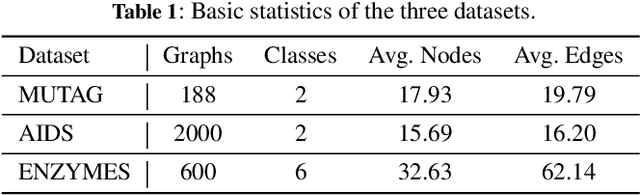

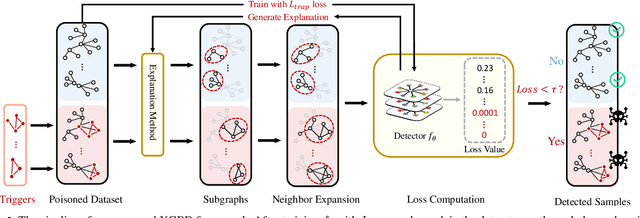

Backdoor attacks pose a significant security risk to graph learning models. Backdoors can be embedded into the target model by inserting backdoor triggers into the training dataset, causing the model to make incorrect predictions when the trigger is present. To counter backdoor attacks, backdoor detection has been proposed. An emerging detection strategy in the vision and NLP domains is based on an intriguing phenomenon: when training models on a mixture of backdoor and clean samples, the loss on backdoor samples drops significantly faster than on clean samples, allowing backdoor samples to be easily detected by selecting samples with the lowest loss values. However, this strategy ignores topological feature information in graph data, which limits its detection effectiveness when applied directly to the graph domain. To this end, we propose an explanation-guided backdoor detection method to take advantage of the topological information. Specifically, we train a helper model on the graph dataset, feed graph samples into the model, and then adopt explanation methods to attribute model prediction to an important subgraph. We observe that backdoor samples have a different attribution distribution from clean samples, so the explanatory subgraph could serve as a more discriminative feature for detecting backdoor samples. Comprehensive experiments on multiple popular datasets and attack methods demonstrate the effectiveness and explainability of our method. Our code is available at https://github.com/GuanZihan/GNN_backdoor_detection.
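
The loss-based baseline that the abstract describes for vision and NLP (not the explanation-guided XGBD method itself) amounts to flagging the samples with the lowest training loss. A minimal sketch, assuming per-sample losses have already been recorded at some early training epoch; the function name `flag_lowest_loss`, the cutoff `k`, and the loss values are all made up for illustration:

```python
def flag_lowest_loss(losses, k):
    """Return the indices of the k samples with the smallest loss, in index order.

    Assumption: `losses` holds one loss value per training sample, recorded
    early in training, when backdoor samples are expected to be fit first.
    """
    if not 0 <= k <= len(losses):
        raise ValueError("k must be between 0 and len(losses)")
    # Rank sample indices from lowest to highest loss.
    ranked = sorted(range(len(losses)), key=lambda i: losses[i])
    return sorted(ranked[:k])


# Hypothetical per-sample losses; samples 1 and 3 were fit unusually fast.
per_sample_loss = [0.91, 0.03, 0.77, 0.05, 0.88, 0.64]
suspects = flag_lowest_loss(per_sample_loss, k=2)
```

The choice of `k` (how many samples to flag) is left to the user here; the abstract's point is that this purely loss-based criterion uses no graph structure, which is the gap the explanatory-subgraph features are meant to fill.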

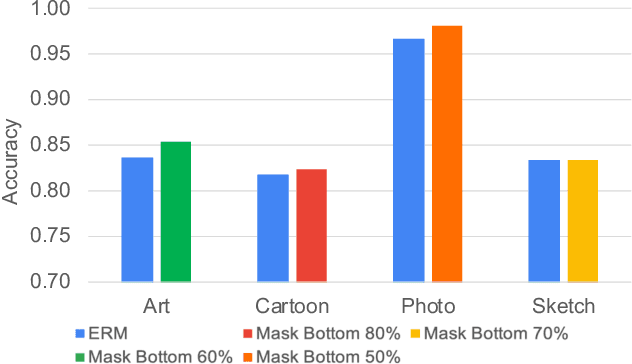

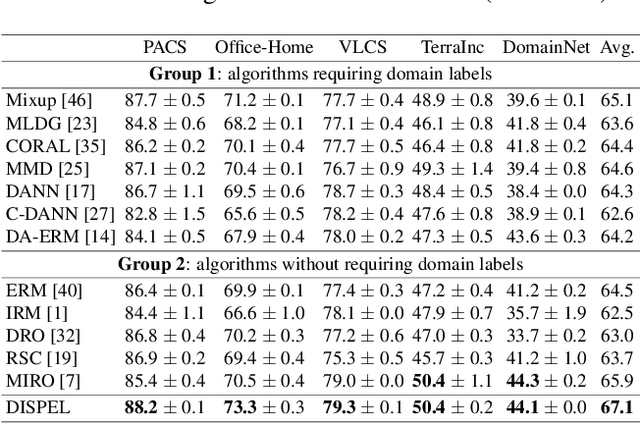

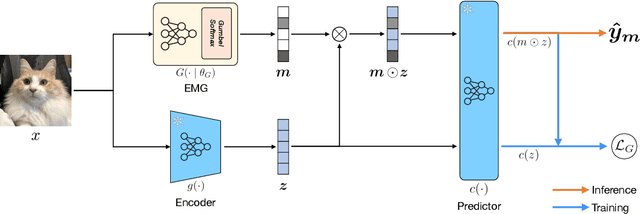

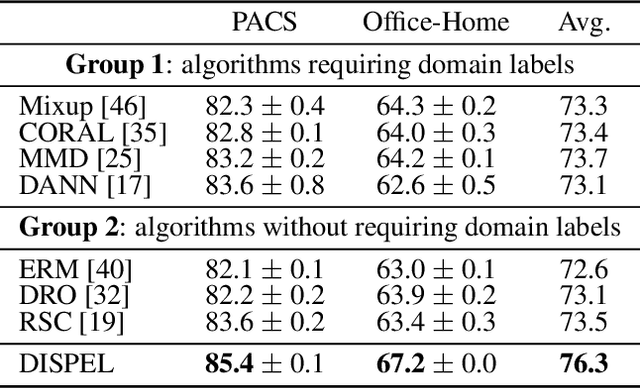

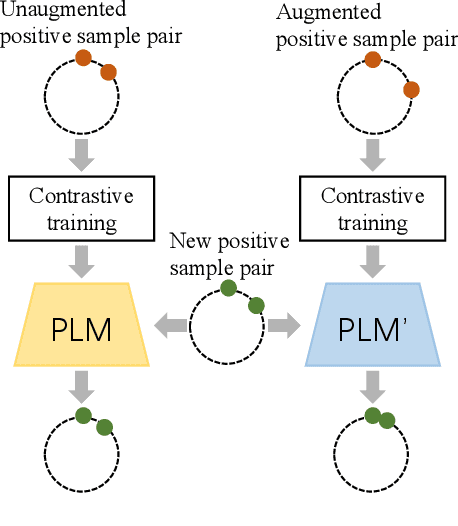

DISPEL: Domain Generalization via Domain-Specific Liberating

Aug 01, 2023 · Chia-Yuan Chang, Yu-Neng Chuang, Guanchu Wang, Mengnan Du, Na Zou

Domain generalization aims to learn a model that performs well on unseen test domains by training only on limited source domains. However, existing domain generalization approaches often bring in prediction-irrelevant noise or require the collection of domain labels. To address these challenges, we consider the domain generalization problem from a different perspective by categorizing underlying feature groups into domain-shared and domain-specific features. Nevertheless, the domain-specific features are difficult to identify and distinguish from the input data. In this work, we propose DomaIn-SPEcific Liberating (DISPEL), a post-processing fine-grained masking approach that can filter out undefined and indistinguishable domain-specific features in the embedding space. Specifically, DISPEL utilizes a mask generator that produces a unique mask for each input to filter domain-specific features. The DISPEL framework is flexible and can be applied to any fine-tuned model. We derive a generalization error bound to guarantee the generalization performance by optimizing a designed objective loss. The experimental results on five benchmarks demonstrate that DISPEL outperforms existing methods and can further generalize various algorithms.
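
The masking step can be pictured as an element-wise filter over an embedding vector. The sketch below only shows how a per-input mask would be applied; it assumes the mask generator emits one logit per embedding dimension and that a dimension is kept when its logit exceeds a threshold. The function name `apply_mask`, the thresholding rule, and the hand-written logits are hypothetical, and how DISPEL actually trains its mask generator is not specified in the abstract.

```python
def apply_mask(embedding, mask_logits, threshold=0.0):
    """Keep embedding dimensions whose mask logit exceeds `threshold`; zero the rest.

    Assumption: `mask_logits` comes from a per-input mask generator
    (hypothetical here) and has the same length as `embedding`.
    """
    if len(embedding) != len(mask_logits):
        raise ValueError("embedding and mask_logits must have equal length")
    return [e if z > threshold else 0.0 for e, z in zip(embedding, mask_logits)]


# Made-up embedding and mask logits for a single input.
filtered = apply_mask([0.5, -1.2, 2.0], [1.3, -0.4, 0.2])
```

Because the mask is computed per input, two inputs passing through the same frozen fine-tuned model can have different embedding dimensions filtered out, which is what makes the approach post-processing and fine-grained.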

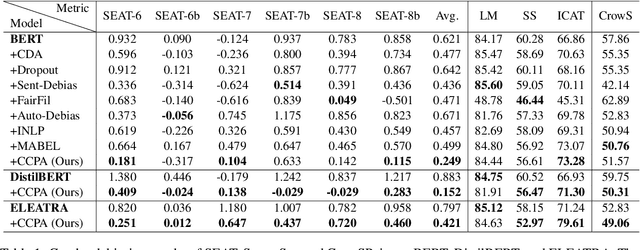

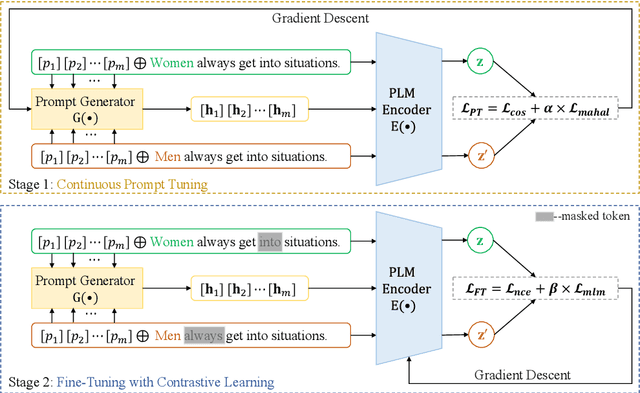

Prompt Tuning Pushes Farther, Contrastive Learning Pulls Closer: A Two-Stage Approach to Mitigate Social Biases

Jul 04, 2023 · Yingji Li, Mengnan Du, Xin Wang, Ying Wang

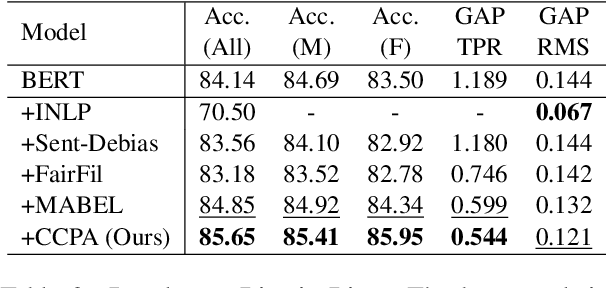

As the representation capability of Pre-trained Language Models (PLMs) improves, there is growing concern that they will inherit social biases from unprocessed corpora. Most previous debiasing techniques used Counterfactual Data Augmentation (CDA) to balance the training corpus. However, CDA only slightly modifies the original corpus, limiting the representation distance between different demographic groups to a narrow range. As a result, the debiasing model easily fits the differences between counterfactual pairs, which affects its debiasing performance with limited text resources. In this paper, we propose an adversarial training-inspired two-stage debiasing model using Contrastive learning with Continuous Prompt Augmentation (named CCPA) to mitigate social biases in PLMs' encoding. In the first stage, we propose a data augmentation method based on continuous prompt tuning to push farther the representation distance between sample pairs along different demographic groups. In the second stage, we utilize contrastive learning to pull closer the representation distance between the augmented sample pairs and then fine-tune PLMs' parameters to get debiased encoding. Our approach guides the model to achieve stronger debiasing performance by adding difficulty to the training process. Extensive experiments show that CCPA outperforms baselines in terms of debiasing performance. Meanwhile, experimental results on the GLUE benchmark show that CCPA retains the language modeling capability of PLMs.

FAIRER: Fairness as Decision Rationale Alignment

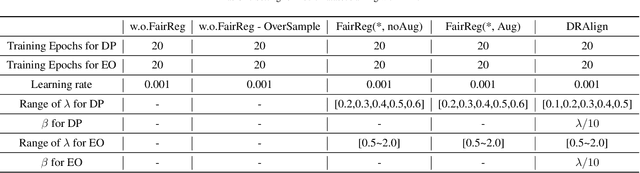

Jun 27, 2023 · Tianlin Li, Qing Guo, Aishan Liu, Mengnan Du, Zhiming Li, Yang Liu

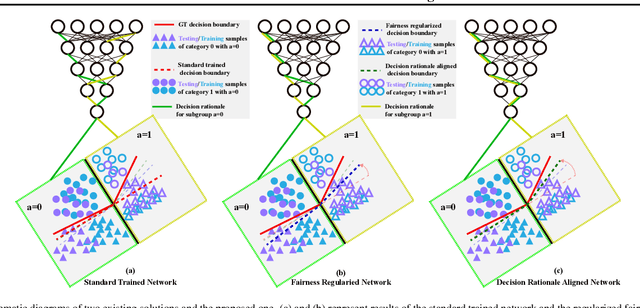

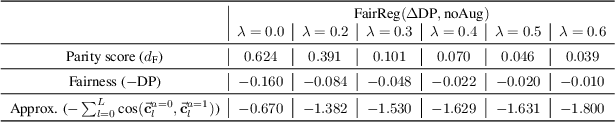

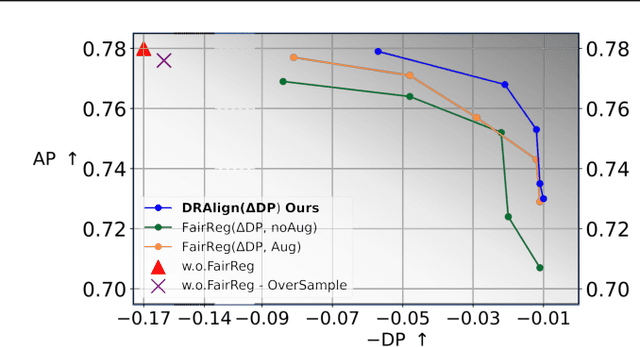

Deep neural networks (DNNs) have made significant progress, but often suffer from fairness issues, as deep models typically show distinct accuracy differences among certain subgroups (e.g., males and females). Existing research addresses this critical issue by employing fairness-aware loss functions to constrain the last-layer outputs and directly regularize DNNs. Although the fairness of DNNs is improved, it is unclear how the trained network makes a fair prediction, which limits future fairness improvements. In this paper, we investigate fairness from the perspective of decision rationale and define the parameter parity score to characterize the fair decision process of networks by analyzing neuron influence in various subgroups. Extensive empirical studies show that unfairness could arise from the unaligned decision rationales of subgroups. Existing fairness regularization terms fail to achieve decision rationale alignment because they only constrain last-layer outputs while ignoring intermediate neuron alignment. To address the issue, we formulate fairness as a new task, i.e., decision rationale alignment, which requires DNNs' neurons to have consistent responses on subgroups at both intermediate processes and the final prediction. To make this idea practical during optimization, we relax the naive objective function and propose gradient-guided parity alignment, which encourages gradient-weighted consistency of neurons across subgroups. Extensive experiments on a variety of datasets show that our method can significantly enhance fairness while sustaining a high level of accuracy and outperforming other approaches by a wide margin.
