Mazda Farshad

A Multi-View Pipeline and Benchmark Dataset for 3D Hand Pose Estimation in Surgery

Jan 22, 2026

Abstract:Purpose: Accurate 3D hand pose estimation supports surgical applications such as skill assessment, robot-assisted interventions, and geometry-aware workflow analysis. However, surgical environments pose severe challenges, including intense and localized lighting, frequent occlusions by instruments or staff, and uniform hand appearance due to gloves, combined with a scarcity of annotated datasets for reliable model training. Method: We propose a robust multi-view pipeline for 3D hand pose estimation in surgical contexts that requires no domain-specific fine-tuning and relies solely on off-the-shelf pretrained models. The pipeline integrates reliable person detection, whole-body pose estimation, and state-of-the-art 2D hand keypoint prediction on tracked hand crops, followed by a constrained 3D optimization. In addition, we introduce a novel surgical benchmark dataset comprising over 68,000 frames and 3,000 manually annotated 2D hand poses with triangulated 3D ground truth, recorded in a replica operating room under varying levels of scene complexity. Results: Quantitative experiments demonstrate that our method consistently outperforms baselines, achieving a 31% reduction in 2D mean joint error and a 76% reduction in 3D mean per-joint position error. Conclusion: Our work establishes a strong baseline for 3D hand pose estimation in surgery, providing both a training-free pipeline and a comprehensive annotated dataset to facilitate future research in surgical computer vision.
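
The 3D metric named in this abstract, mean per-joint position error, is the average Euclidean distance between predicted and ground-truth joints. The following is a minimal sketch of that standard definition, not the authors' evaluation code; the function names `mpjpe` and `relative_reduction` and the toy coordinates are illustrative assumptions.

```python
import math


def mpjpe(pred, gt):
    """Mean per-joint position error: average Euclidean distance over joints."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and equally long")
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


def relative_reduction(baseline_error, new_error):
    """Fractional error reduction relative to a baseline (0.76 means 76%)."""
    return (baseline_error - new_error) / baseline_error


# Toy example with two joints (coordinates are made up, units arbitrary).
gt = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
pred = [(3.0, 4.0, 0.0), (10.0, 0.0, 1.0)]
error = mpjpe(pred, gt)  # joint distances are 5 and 1, so the mean is 3
```

A reported reduction such as 76% corresponds to `relative_reduction(baseline, ours) == 0.76` for the respective error values, which the abstract does not list.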

IXGS-Intraoperative 3D Reconstruction from Sparse, Arbitrarily Posed Real X-rays

Apr 20, 2025

Abstract:Spine surgery is a high-risk intervention demanding precise execution, often supported by image-based navigation systems. Recently, supervised learning approaches have gained attention for reconstructing 3D spinal anatomy from sparse fluoroscopic data, significantly reducing reliance on radiation-intensive 3D imaging systems. However, these methods typically require large amounts of annotated training data and may struggle to generalize across varying patient anatomies or imaging conditions. Instance-learning approaches like Gaussian splatting could offer an alternative by avoiding extensive annotation requirements. While Gaussian splatting has shown promise for novel view synthesis, its application to sparse, arbitrarily posed real intraoperative X-rays has remained largely unexplored. This work addresses this limitation by extending the $R^2$-Gaussian splatting framework to reconstruct anatomically consistent 3D volumes under these challenging conditions. We introduce an anatomy-guided radiographic standardization step using style transfer, improving visual consistency across views, and enhancing reconstruction quality. Notably, our framework requires no pretraining, making it inherently adaptable to new patients and anatomies. We evaluated our approach using an ex-vivo dataset. Expert surgical evaluation confirmed the clinical utility of the 3D reconstructions for navigation, especially when using 20 to 30 views, and highlighted the standardization's benefit for anatomical clarity. Benchmarking via quantitative 2D metrics (PSNR/SSIM) confirmed performance trade-offs compared to idealized settings, but also validated the improvement gained from standardization over raw inputs. This work demonstrates the feasibility of instance-based volumetric reconstruction from arbitrary sparse-view X-rays, advancing intraoperative 3D imaging for surgical navigation.
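
PSNR, one of the 2D metrics mentioned in this abstract, compares a rendered view against a reference image through the mean squared error. The following is a minimal sketch of the textbook definition, assuming images are flattened to lists of intensities in [0, 1]; the function name `psnr` and the sample pixel values are illustrative and not taken from the paper.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("images must be non-empty and equally sized")
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Four made-up pixel intensities; two of them differ by 0.1.
reference = [0.0, 0.5, 1.0, 0.5]
rendered = [0.1, 0.5, 0.9, 0.5]
score = psnr(reference, rendered)  # MSE is 0.005, giving roughly 23 dB
```

Higher values indicate a closer match; identical images yield infinity under this definition.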

Creating a Digital Twin of Spinal Surgery: A Proof of Concept

Mar 25, 2024

Abstract:Surgery digitalization is the process of creating a virtual replica of real-world surgery, also referred to as a surgical digital twin (SDT). It has significant applications in various fields such as education and training, surgical planning, and automation of surgical tasks. Given their detailed representations of surgical procedures, SDTs are an ideal foundation for machine learning methods, enabling automatic generation of training data. In robotic surgery, SDTs can provide realistic virtual environments in which robots may learn through trial and error. In this paper, we present a proof of concept (PoC) for surgery digitalization that is applied to an ex-vivo spinal surgery performed in realistic conditions. The proposed digitalization focuses on the acquisition and modelling of the geometry and appearance of the entire surgical scene. We employ five RGB-D cameras for dynamic 3D reconstruction of the surgeon, a high-end camera for 3D reconstruction of the anatomy, an infrared stereo camera for surgical instrument tracking, and a laser scanner for 3D reconstruction of the operating room and data fusion. We justify the proposed methodology, discuss the challenges faced and further extensions of our prototype. While our PoC partially relies on manual data curation, its high quality and great potential motivate the development of automated methods for the creation of SDTs. The quality of our SDT can be assessed in a rendered video available at https://youtu.be/LqVaWGgaTMY .

Domain adaptation strategies for 3D reconstruction of the lumbar spine using real fluoroscopy data

Jan 29, 2024

Abstract:This study tackles key obstacles in adopting surgical navigation in orthopedic surgeries, including time, cost, radiation, and workflow integration challenges. Recently, our work X23D showed an approach for generating 3D anatomical models of the spine from only a few intraoperative fluoroscopic images. This negates the need for conventional registration-based surgical navigation by creating a direct intraoperative 3D reconstruction of the anatomy. Despite these strides, the practical application of X23D has been limited by a domain gap between synthetic training data and real intraoperative images. In response, we devised a novel data collection protocol for a paired dataset consisting of synthetic and real fluoroscopic images from the same perspectives. Utilizing this dataset, we refined our deep learning model via transfer learning, effectively bridging the domain gap between synthetic and real X-ray data. A novel style transfer mechanism also allows us to convert real X-rays to mirror the synthetic domain, enabling our in-silico-trained X23D model to achieve high accuracy in real-world settings. Our results demonstrated that the refined model can rapidly generate accurate 3D reconstructions of the entire lumbar spine from as few as three intraoperative fluoroscopic shots. It achieved an 84% F1 score, matching the accuracy of our previous synthetic data-based research. Additionally, with a computational time of only 81.1 ms, our approach provides real-time capabilities essential for surgery integration. Through examining ideal imaging setups and view angle dependencies, we've further confirmed our system's practicality and dependability in clinical settings. Our research marks a significant step forward in intraoperative 3D reconstruction, offering enhancements to surgical planning, navigation, and robotics.
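
The F1 score reported in this abstract is the harmonic mean of precision and recall. The abstract does not say over which elements it is computed, so the sketch below assumes, purely for illustration, that a reconstruction is represented as a set of occupied voxel indices; the function name `f1_score` and the voxel sets are hypothetical.

```python
def f1_score(predicted, reference):
    """Harmonic mean of precision and recall over two sets of elements."""
    predicted, reference = set(predicted), set(reference)
    true_positives = len(predicted & reference)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(reference)
    return 2 * precision * recall / (precision + recall)


# Hypothetical occupied voxels of a predicted and a reference volume.
predicted_voxels = {(0, 0, 0), (0, 0, 1), (0, 1, 0)}
reference_voxels = {(0, 0, 0), (0, 0, 1), (1, 1, 1), (1, 0, 0)}
f1 = f1_score(predicted_voxels, reference_voxels)  # precision 2/3, recall 1/2
```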

Automatic registration with continuous pose updates for marker-less surgical navigation in spine surgery

Aug 05, 2023

Abstract:Established surgical navigation systems for pedicle screw placement have been proven to be accurate, but still reveal limitations in registration or surgical guidance. Registration of preoperative data to the intraoperative anatomy remains a time-consuming, error-prone task that includes exposure to harmful radiation. Surgical guidance through conventional displays has well-known drawbacks, as information cannot be presented in-situ and from the surgeon's perspective. Consequently, radiation-free and more automatic registration methods with subsequent surgeon-centric navigation feedback are desirable. In this work, we present an approach that automatically solves the registration problem for lumbar spinal fusion surgery in a radiation-free manner. A deep neural network was trained to segment the lumbar spine and simultaneously predict its orientation, yielding an initial pose for preoperative models, which then is refined for each vertebra individually and updated in real-time with GPU acceleration while handling surgeon occlusions. An intuitive surgical guidance is provided thanks to the integration into an augmented reality based navigation system. The registration method was verified on a public dataset with a mean of 96% successful registrations, a target registration error of 2.73 mm, a screw trajectory error of 1.79° and a screw entry point error of 2.43 mm. Additionally, the whole pipeline was validated in an ex-vivo surgery, yielding a 100% screw accuracy and a registration accuracy of 1.20 mm. Our results meet clinical demands and emphasize the potential of RGB-D data for fully automatic registration approaches in combination with augmented reality guidance.

Safe Deep RL for Intraoperative Planning of Pedicle Screw Placement

May 10, 2023

Abstract:Spinal fusion surgery requires highly accurate implantation of pedicle screw implants, which must be conducted in critical proximity to vital structures with a limited view of anatomy. Robotic surgery systems have been proposed to improve placement accuracy; however, state-of-the-art systems suffer from the limitations of open-loop approaches, as they follow traditional concepts of preoperative planning and intraoperative registration, without real-time recalculation of the surgical plan. In this paper, we propose an intraoperative planning approach for robotic spine surgery that leverages real-time observation for drill path planning based on Safe Deep Reinforcement Learning (DRL). The main contributions of our method are (1) the capability to guarantee safe actions by introducing an uncertainty-aware distance-based safety filter; and (2) the ability to compensate for incomplete intraoperative anatomical information, by encoding a-priori knowledge about anatomical structures with a network pre-trained on high-fidelity anatomical models. Planning quality was assessed by quantitative comparison with the gold standard (GS) drill planning. In experiments with 5 models derived from real magnetic resonance imaging (MRI) data, our approach was capable of achieving 90% bone penetration with respect to the GS while satisfying safety requirements, even under observation and motion uncertainty. To the best of our knowledge, our approach is the first safe DRL approach focusing on orthopedic surgeries.
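
The abstract does not describe how the uncertainty-aware distance-based safety filter is built. One common way to realize such a filter, shown here only as an assumed illustration and not as the authors' method, is to shrink each action's estimated distance to a critical structure by a multiple of its uncertainty and reject the action if the result falls below a margin. All names (`Candidate`, `is_safe`, `filter_action`), the margin, the factor `k`, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str           # action proposed by the policy
    distance_mm: float  # estimated distance to the nearest critical structure
    sigma_mm: float     # uncertainty (standard deviation) of that estimate


def is_safe(candidate, margin_mm=2.0, k=2.0):
    """Accept only if the pessimistic distance still clears the margin."""
    return candidate.distance_mm - k * candidate.sigma_mm >= margin_mm


def filter_action(ranked_candidates, fallback="stop"):
    """Return the policy's highest-ranked safe action, else a fallback."""
    for candidate in ranked_candidates:
        if is_safe(candidate):
            return candidate.name
    return fallback


# Candidates in the order preferred by a (hypothetical) policy.
ranked = [
    Candidate("advance_fast", distance_mm=3.0, sigma_mm=1.0),  # 3 - 2 = 1 < 2
    Candidate("advance_slow", distance_mm=5.0, sigma_mm=1.0),  # 5 - 2 = 3 >= 2
]
chosen = filter_action(ranked)
```

In this sketch the filter overrides the policy's first choice and passes the second; if no candidate clears the margin, the fallback action is returned.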

Next-generation Surgical Navigation: Multi-view Marker-less 6DoF Pose Estimation of Surgical Instruments

May 05, 2023

Abstract:State-of-the-art research of traditional computer vision is increasingly leveraged in the surgical domain. A particular focus in computer-assisted surgery is to replace marker-based tracking systems for instrument localization with pure image-based 6DoF pose estimation. However, the state of the art has not yet met the accuracy required for surgical navigation. In this context, we propose a high-fidelity marker-less optical tracking system for surgical instrument localization. We developed a multi-view camera setup consisting of static and mobile cameras and collected a large-scale RGB-D video dataset with dedicated synchronization and data fusion methods. Different state-of-the-art pose estimation methods were integrated into a deep learning pipeline and evaluated on multiple camera configurations. Furthermore, the performance impacts of different input modalities and camera positions, as well as training on purely synthetic data, were compared. The best model achieved an average position and orientation error of 1.3 mm and 1.0° for a surgical drill as well as 3.8 mm and 5.2° for a screwdriver. These results significantly outperform related methods in the literature and are close to clinical-grade accuracy, demonstrating that marker-less tracking of surgical instruments is becoming a feasible alternative to existing marker-based systems.

Automatic breach detection during spine pedicle drilling based on vibroacoustic sensing

Mar 27, 2023

Abstract:Pedicle drilling is a complex and critical spinal surgery task. Detecting breach or penetration of the surgical tool to the cortical wall during pilot-hole drilling is essential to avoid damage to vital anatomical structures adjacent to the pedicle, such as the spinal cord, blood vessels, and nerves. Currently, the guidance of pedicle drilling is done using image-guided methods that are radiation intensive and limited to the preoperative information. This work proposes a new radiation-free breach detection algorithm leveraging a non-visual sensor setup in combination with a deep learning approach. Multiple vibroacoustic sensors, such as a contact microphone, a free-field microphone, a tri-axial accelerometer, a uni-axial accelerometer, and an optical tracking system were integrated into the setup. Data were collected on four cadaveric human spines, ranging from L5 to T10. An experienced spine surgeon drilled the pedicles relying on optical navigation. A new automatic labeling method based on the tracking data was introduced. Labeled data were subsequently fed to the network as mel-spectrograms, classifying the data into breach and non-breach. Different sensor types, sensor positioning, and their combinations were evaluated. The best results in breach recall for individual sensors could be achieved using contact microphones attached to the dorsal skin (85.8%) and uni-axial accelerometers clamped to the spinous process of the drilled vertebra (81.0%). The best-performing data fusion model combined the latter two sensors with a breach recall of 98%. The proposed method shows the great potential of non-visual sensor fusion for avoiding screw misplacement and accidental bone breaches during pedicle drilling and could be extended to further surgical applications.

Improved Techniques for the Conditional Generative Augmentation of Clinical Audio Data

Nov 05, 2022

Abstract:Data augmentation is a valuable tool for the design of deep learning systems to overcome data limitations and stabilize the training process. Especially in the medical domain, where the collection of large-scale data sets is challenging and expensive due to limited access to patient data, relevant environments, as well as strict regulations, community-curated large-scale public datasets, pretrained models, and advanced data augmentation methods are the main factors for developing reliable systems to improve patient care. However, for the development of medical acoustic sensing systems, an emerging field of research, the community lacks large-scale publicly available data sets and pretrained models. To address the problem of limited data, we propose a conditional generative adversarial neural network-based augmentation method which is able to synthesize mel spectrograms from a learned data distribution of a source data set. In contrast to previously proposed fully convolutional models, the proposed model implements residual Squeeze and Excitation modules in the generator architecture. We show that our method outperforms all classical audio augmentation techniques and previously published generative methods in terms of generated sample quality and a performance improvement of 2.84% of Macro F1-Score for a classifier trained on the augmented data set, an enhancement of 1.14% in relation to previous work. By analyzing the correlation of intermediate feature spaces, we show that the residual Squeeze and Excitation modules help the model to reduce redundancy in the latent features. Therefore, the proposed model advances the state-of-the-art in the augmentation of clinical audio data and improves the data bottleneck for the design of clinical acoustic sensing systems.

Sonification as a Reliable Alternative to Conventional Visual Surgical Navigation

Jun 30, 2022

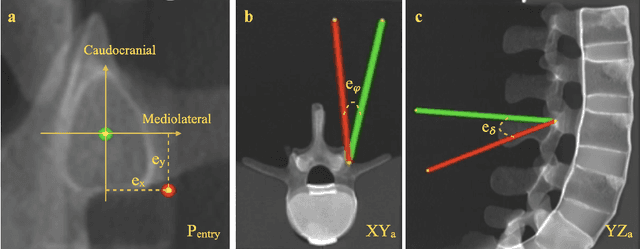

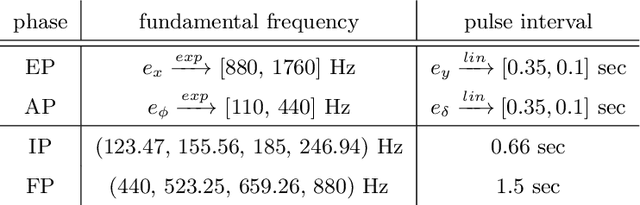

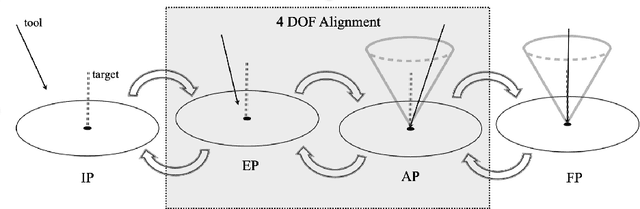

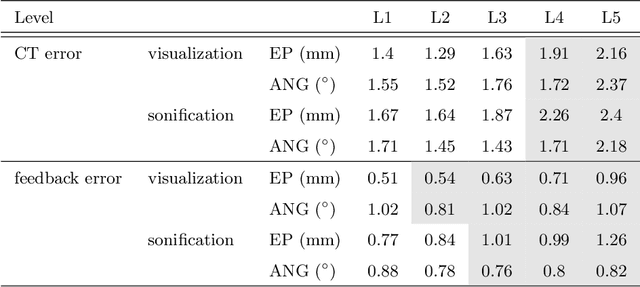

Abstract:Despite the undeniable advantages of image-guided surgical assistance systems in terms of accuracy, such systems have not yet fully met surgeons' needs or expectations regarding usability, time efficiency, and their integration into the surgical workflow. On the other hand, perceptual studies have shown that presenting independent but causally correlated information via multimodal feedback involving different sensory modalities can improve task performance. This article investigates an alternative method for computer-assisted surgical navigation, introduces a novel sonification methodology for navigated pedicle screw placement, and discusses advanced solutions based on multisensory feedback. The proposed method comprises a novel sonification solution for alignment tasks in four degrees of freedom based on frequency modulation (FM) synthesis. We compared the resulting accuracy and execution time of the proposed sonification method with visual navigation, which is currently considered the state of the art. We conducted a phantom study in which 17 surgeons executed the pedicle screw placement task in the lumbar spine, guided by either the proposed sonification-based or the traditional visual navigation method. The results demonstrated that the proposed method is as accurate as the state of the art while decreasing the surgeon's need to focus on visual navigation displays instead of the natural focus on surgical tools and targeted anatomy during task execution.
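
Frequency modulation synthesis, which this abstract names as the basis of the sonification, generates a tone as sin(2π·f_c·t + I·sin(2π·f_m·t)), where the modulation index I controls how rich the timbre is. The sketch below shows that formula only; the mapping from alignment error to modulation index, the function names, and all frequencies are assumptions made for illustration and are not the mapping used in the study.

```python
import math


def fm_sample(t, carrier_hz, modulator_hz, index):
    """One sample of an FM tone at time t (seconds)."""
    phase = 2 * math.pi * carrier_hz * t
    modulation = index * math.sin(2 * math.pi * modulator_hz * t)
    return math.sin(phase + modulation)


def error_to_index(error, max_error, max_index=5.0):
    """Hypothetical mapping: a larger alignment error gives a harsher timbre."""
    clamped = min(max(error, 0.0), max_error)
    return max_index * clamped / max_error


def fm_tone(error, max_error=10.0, num_samples=441, sample_rate=44100,
            carrier_hz=440.0, modulator_hz=110.0):
    """Render a short FM tone whose modulation index encodes the error."""
    index = error_to_index(error, max_error)
    return [fm_sample(i / sample_rate, carrier_hz, modulator_hz, index)
            for i in range(num_samples)]


aligned = fm_tone(error=0.0)     # index 0: a pure sine at the carrier
misaligned = fm_tone(error=8.0)  # index 4: sidebands make the tone rougher
```

Under this assumed mapping, a perfectly aligned tool produces a clean sine tone, and the sound grows audibly rougher as the error in one degree of freedom increases.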
