Linkang Du

VICTOR: Dataset Copyright Auditing in Video Recognition Systems

Dec 16, 2025

Abstract:Video recognition systems are increasingly deployed in daily life, in applications such as content recommendation and security monitoring. To enhance video recognition development, many institutions have released high-quality public datasets with open-source licenses for training advanced models. At the same time, these datasets are susceptible to misuse and infringement. Dataset copyright auditing is an effective solution to identify such unauthorized use. However, existing dataset copyright solutions primarily focus on the image domain; the complex nature of video data leaves dataset copyright auditing in the video domain unexplored. Specifically, video data introduces an additional temporal dimension, which poses significant challenges to the effectiveness and stealthiness of existing methods. In this paper, we propose VICTOR, the first dataset copyright auditing approach for video recognition systems. We develop a general and stealthy sample modification strategy that enhances the output discrepancy of the target model. By modifying only a small proportion of samples (e.g., 1%), VICTOR amplifies the impact of published modified samples on the prediction behavior of the target models. Then, the difference in the model's behavior for published modified and unpublished original samples can serve as a key basis for dataset auditing. Extensive experiments on multiple models and datasets highlight the superiority of VICTOR. Finally, we show that VICTOR is robust in the presence of several perturbation mechanisms applied to the training videos or the target models.
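The VICTOR abstract bases the auditing decision on the difference in the target model's behavior between published modified samples and unpublished original samples. The following is a minimal sketch of one way such a comparison could be made, assuming per-sample confidence scores have already been collected from the target model. The function names, the score inputs, the significance level, and the choice of a one-sided permutation test are all illustrative assumptions, not VICTOR's actual procedure.

```python
import random
from statistics import mean


def permutation_p_value(published_scores, unpublished_scores,
                        n_permutations=2000, seed=0):
    """One-sided permutation test (hypothetical helper).

    Tests whether the mean score on published samples is higher than
    the mean score on unpublished samples.
    """
    rng = random.Random(seed)
    observed = mean(published_scores) - mean(unpublished_scores)
    pooled = list(published_scores) + list(unpublished_scores)
    n_pub = len(published_scores)
    hits = 0
    for _ in range(n_permutations):
        # Randomly reassign scores to the two groups.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_pub]) - mean(pooled[n_pub:])
        if diff >= observed:
            hits += 1
    # Add-one smoothing keeps the estimated p-value strictly positive.
    return (hits + 1) / (n_permutations + 1)


def audit(published_scores, unpublished_scores, alpha=0.05):
    """Flag the model if the score gap is significant (assumed decision rule)."""
    return permutation_p_value(published_scores, unpublished_scores) < alpha
```

A call such as `audit(scores_on_published, scores_on_unpublished)` returns `True` only when the gap between the two groups is unlikely under random relabeling; identical score lists are not flagged.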

Enabling Regulatory Multi-Agent Collaboration: Architecture, Challenges, and Solutions

Sep 11, 2025

Abstract:Large language model (LLM)-empowered autonomous agents are transforming both digital and physical environments by enabling adaptive, multi-agent collaboration. While these agents offer significant opportunities across domains such as finance, healthcare, and smart manufacturing, their unpredictable behaviors and heterogeneous capabilities pose substantial governance and accountability challenges. In this paper, we propose a blockchain-enabled layered architecture for regulatory agent collaboration, comprising an agent layer, a blockchain data layer, and a regulatory application layer. Within this framework, we design three key modules: (i) an agent behavior tracing and arbitration module for automated accountability, (ii) a dynamic reputation evaluation module for trust assessment in collaborative scenarios, and (iii) a malicious behavior forecasting module for early detection of adversarial activities. Our approach establishes a systematic foundation for trustworthy, resilient, and scalable regulatory mechanisms in large-scale agent ecosystems. Finally, we discuss future research directions for blockchain-enabled regulatory frameworks in multi-agent systems.

ArtistAuditor: Auditing Artist Style Pirate in Text-to-Image Generation Models

Apr 17, 2025

Abstract:Text-to-image models based on diffusion processes, such as DALL-E, Stable Diffusion, and Midjourney, are capable of transforming texts into detailed images and have widespread applications in art and design. As such, amateur users can easily imitate professional-level paintings by collecting an artist's work and fine-tuning the model, leading to concerns about copyright infringement of artworks. To tackle these issues, previous studies either add visually imperceptible perturbations to the artwork to change its underlying style (perturbation-based methods) or embed watermarks in the artwork that remain detectable after training (watermark-based methods). However, when the artwork or the model has already been published online, i.e., modifying the original artwork or retraining the model is not feasible, these strategies might not be viable. To this end, we propose a novel method for data-use auditing in text-to-image generation models. The general idea of ArtistAuditor is to identify whether a suspicious model has been fine-tuned using the artworks of specific artists by analyzing the features related to the style. Concretely, ArtistAuditor employs a style extractor to obtain multi-granularity style representations and treats artworks as samples of an artist's style. Then, ArtistAuditor queries a trained discriminator to obtain the auditing decisions. The experimental results on six combinations of models and datasets show that ArtistAuditor can achieve high AUC values (> 0.937). By studying ArtistAuditor's transferability and core modules, we provide valuable insights into the practical implementation. Finally, we demonstrate the effectiveness of ArtistAuditor in real-world cases on the online platform Scenario. ArtistAuditor is open-sourced at https://github.com/Jozenn/ArtistAuditor.

UNIDOOR: A Universal Framework for Action-Level Backdoor Attacks in Deep Reinforcement Learning

Jan 26, 2025

Abstract:Deep reinforcement learning (DRL) is widely applied to safety-critical decision-making scenarios. However, DRL is vulnerable to backdoor attacks, especially action-level backdoors, which pose significant threats through precise manipulation and flexible activation, risking outcomes like vehicle collisions or drone crashes. The key distinction of action-level backdoors lies in the utilization of the backdoor reward function to associate triggers with target actions. Nevertheless, existing studies typically rely on backdoor reward functions with fixed values or conditional flipping, which lack universality across diverse DRL tasks and backdoor designs, resulting in fluctuations or even failure in practice. This paper proposes the first universal action-level backdoor attack framework, called UNIDOOR, which enables adaptive exploration of backdoor reward functions through performance monitoring, eliminating the reliance on expert knowledge and grid search. We highlight that action tampering serves as a crucial component of action-level backdoor attacks in continuous action scenarios, as it addresses attack failures caused by low-frequency target actions. Extensive evaluations demonstrate that UNIDOOR significantly enhances the attack performance of action-level backdoors, showcasing its universality across diverse attack scenarios, including single/multiple agents, single/multiple backdoors, discrete/continuous action spaces, and sparse/dense reward signals. Furthermore, visualization results encompassing state distribution, neuron activation, and animations demonstrate the stealthiness of UNIDOOR. The source code of UNIDOOR can be found at https://github.com/maoubo/UNIDOOR.

Movable Antennas Enabled ISAC Systems: Fundamentals, Opportunities, and Future Directions

Dec 30, 2024

Abstract:The movable antenna (MA)-enabled integrated sensing and communication (ISAC) system attracts widespread attention as an innovative framework. The ISAC system integrates sensing and communication functions, achieving resource sharing across various domains, significantly enhancing communication and sensing performance, and promoting the intelligent interconnection of everything. Meanwhile, MA utilizes the spatial variations of wireless channels by dynamically adjusting the positions of MA elements at the transmitter and receiver to improve the channel and further enhance the performance of the ISAC systems. In this paper, we first outline the fundamental principles of MA and introduce the application scenarios of MA-enabled ISAC systems. Then, we summarize the advantages of MA-enabled ISAC systems in enhancing spectral efficiency, achieving flexible and precise beamforming, and making the signal coverage range adjustable. Besides, a specific case is studied to show the performance gains in terms of transmit power that MA brings to ISAC systems. Finally, we discuss the challenges of MA-enabled ISAC and future research directions, aiming to provide insights for future research on MA-enabled ISAC systems.

SoK: Dataset Copyright Auditing in Machine Learning Systems

Oct 22, 2024

Abstract:As the implementation of machine learning (ML) systems becomes more widespread, especially with the introduction of larger ML models, we perceive a surging demand for massive data. However, this demand inevitably causes infringement and misuse problems with the data, such as using unauthorized online artworks or face images to train ML models. To address this problem, many efforts have been made to audit the copyright of the model training dataset. However, existing solutions vary in auditing assumptions and capabilities, making it difficult to compare their strengths and weaknesses. In addition, robustness evaluations usually consider only part of the ML pipeline and hardly reflect the performance of algorithms in real-world ML applications. Thus, it is essential to take a practical deployment perspective on the current dataset copyright auditing tools, examining their effectiveness and limitations. Concretely, we categorize dataset copyright auditing research into two prominent strands: intrusive methods and non-intrusive methods, depending on whether they require modifications to the original dataset. Then, we break down the intrusive methods into different watermark injection options and examine the non-intrusive methods using various fingerprints. To summarize our results, we offer detailed reference tables, highlight key points, and pinpoint unresolved issues in the current literature. By combining the pipeline in ML systems and analyzing previous studies, we highlight several future directions to make auditing tools more suitable for real-world copyright protection requirements.

Large Model Agents: State-of-the-Art, Cooperation Paradigms, Security and Privacy, and Future Trends

Sep 22, 2024

Abstract:Large Model (LM) agents, powered by large foundation models such as GPT-4 and DALL-E 2, represent a significant step towards achieving Artificial General Intelligence (AGI). LM agents exhibit key characteristics of autonomy, embodiment, and connectivity, allowing them to operate across physical, virtual, and mixed-reality environments while interacting seamlessly with humans, other agents, and their surroundings. This paper provides a comprehensive survey of the state-of-the-art in LM agents, focusing on the architecture, cooperation paradigms, security, privacy, and future prospects. Specifically, we first explore the foundational principles of LM agents, including general architecture, key components, enabling technologies, and modern applications. Then, we discuss practical collaboration paradigms from data, computation, and knowledge perspectives towards connected intelligence of LM agents. Furthermore, we systematically analyze the security vulnerabilities and privacy breaches associated with LM agents, particularly in multi-agent settings. We also explore their underlying mechanisms and review existing and potential countermeasures. Finally, we outline future research directions for building robust and secure LM agent ecosystems.

SUB-PLAY: Adversarial Policies against Partially Observed Multi-Agent Reinforcement Learning Systems

Feb 06, 2024

Abstract:Recent advances in multi-agent reinforcement learning (MARL) have opened up vast application prospects, including swarm control of drones, collaborative manipulation by robotic arms, and multi-target encirclement. However, potential security threats during MARL deployment need more attention and thorough investigation. Recent research reveals that an attacker can rapidly exploit the victim's vulnerabilities and generate adversarial policies, leading to the victim's failure in specific tasks, for example, reducing the winning rate of a superhuman-level Go AI to around 20%. These studies predominantly focus on two-player competitive environments and assume that attackers possess complete global state observation. In this study, we unveil, for the first time, the capability of attackers to generate adversarial policies even when restricted to partial observations of the victims in multi-agent competitive environments. Specifically, we propose a novel black-box attack (SUB-PLAY), which incorporates the concept of constructing multiple subgames to mitigate the impact of partial observability and suggests the sharing of transitions among subpolicies to improve the exploitative ability of attackers. Extensive evaluations demonstrate the effectiveness of SUB-PLAY under three typical partial observability limitations. Visualization results indicate that adversarial policies induce significantly different activations of the victims' policy networks. Furthermore, we evaluate three potential defenses aimed at exploring ways to mitigate security threats posed by adversarial policies, providing constructive recommendations for deploying MARL in competitive environments.

ORL-AUDITOR: Dataset Auditing in Offline Deep Reinforcement Learning

Sep 06, 2023

Abstract:Data is a critical asset in AI, as high-quality datasets can significantly improve the performance of machine learning models. In safety-critical domains such as autonomous vehicles, offline deep reinforcement learning (offline DRL) is frequently used to train models on pre-collected datasets, as opposed to training these models by interacting with the real-world environment as in online DRL. To support the development of these models, many institutions make datasets publicly available with open-source licenses, but these datasets are at risk of potential misuse or infringement. Injecting watermarks into the dataset may protect the intellectual property of the data, but it cannot handle datasets that have already been published and are infeasible to alter afterward. Other existing solutions, such as dataset inference and membership inference, do not work well in the offline DRL scenario due to the diverse model behavior characteristics and offline setting constraints. In this paper, we advocate a new paradigm by leveraging the fact that cumulative rewards can act as a unique identifier that distinguishes DRL models trained on a specific dataset. To this end, we propose ORL-AUDITOR, which is the first trajectory-level dataset auditing mechanism for offline RL scenarios. Our experiments on multiple offline DRL models and tasks reveal the efficacy of ORL-AUDITOR, with auditing accuracy over 95% and false positive rates less than 2.88%. We also provide valuable insights into the practical implementation of ORL-AUDITOR by studying various parameter settings. Furthermore, we demonstrate the auditing capability of ORL-AUDITOR on open-source datasets from Google and DeepMind, highlighting its effectiveness in auditing published datasets. ORL-AUDITOR is open-sourced at https://github.com/link-zju/ORL-Auditor.

Privacy-preserving Distributed Machine Learning via Local Randomization and ADMM Perturbation

Sep 09, 2019

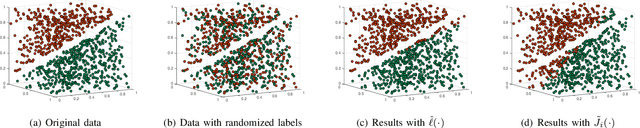

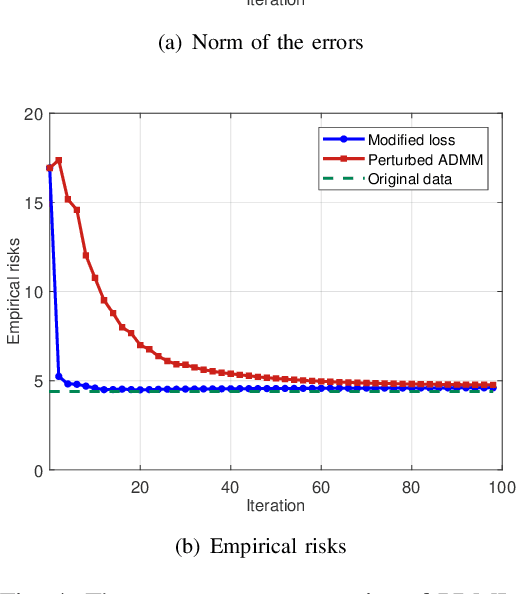

Abstract:With the proliferation of training data, distributed machine learning (DML) is becoming more competent for large-scale learning tasks. However, privacy concerns have to be given priority in DML, since training data may contain sensitive information of users. In this paper, we propose a privacy-preserving ADMM-based DML framework with two novel features: First, we remove the assumption commonly made in the literature that the users trust the server collecting their data. Second, the framework provides heterogeneous privacy for users depending on the sensitivity levels of their data and the trust degrees of the servers. The challenging issue is to keep the accumulation of privacy losses over ADMM iterations minimal. In the proposed framework, a local randomization approach, which is differentially private, is adopted to provide users with a self-controlled privacy guarantee for the most sensitive information. Further, the ADMM algorithm is perturbed through a combined noise-adding method, which simultaneously preserves privacy for users' less sensitive information and strengthens the privacy protection of the most sensitive information. We provide detailed analyses of the performance of the trained model according to its generalization error. Finally, we conduct extensive experiments using real-world datasets to validate the theoretical results and evaluate the classification performance of the proposed framework.
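The abstract above mentions a differentially private local randomization step with a user-controlled privacy level but does not say which mechanism is used. As a rough illustration only, the sketch below applies the standard Laplace mechanism to a single bounded value on the user's side before it leaves the device. The function names, the clipping bounds, and the choice of the Laplace mechanism are assumptions made for this example, not the paper's method.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # is distributed as Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def randomize_locally(value, lower, upper, epsilon, rng):
    """Hypothetical local randomizer for one bounded scalar.

    Clips the value to [lower, upper], then adds Laplace noise with
    scale (upper - lower) / epsilon, which gives epsilon-local
    differential privacy for that scalar.
    """
    clipped = min(max(value, lower), upper)
    sensitivity = upper - lower
    return clipped + laplace_noise(sensitivity / epsilon, rng)
```

Each user can pick their own `epsilon`: a smaller value adds more noise and gives stronger privacy, which is one simple way to picture a self-controlled guarantee.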
