Li Mao

LimiX: Unleashing Structured-Data Modeling Capability for Generalist Intelligence

Sep 03, 2025

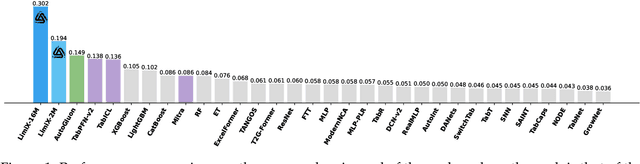

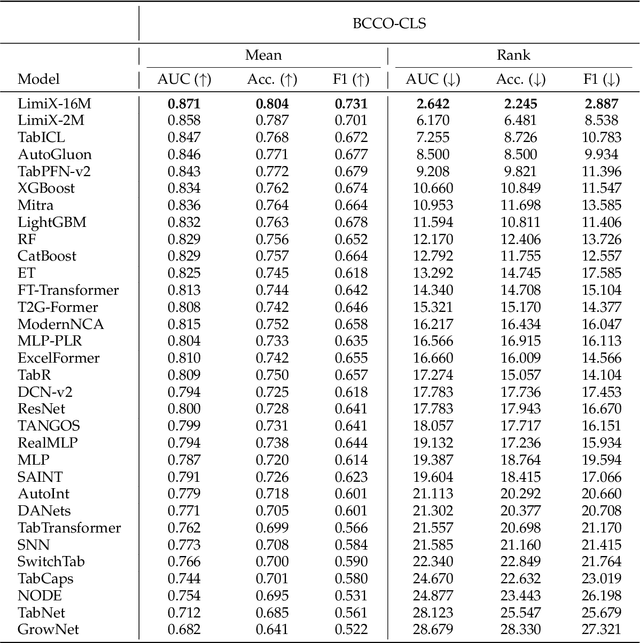

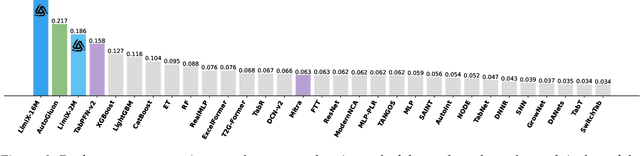

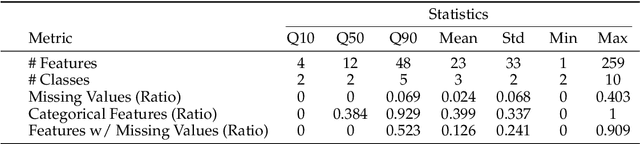

Abstract: We argue that progress toward general intelligence requires complementary foundation models grounded in language, the physical world, and structured data. This report presents LimiX, the first installment of our large structured-data models (LDMs). LimiX treats structured data as a joint distribution over variables and missingness, and can thus address a wide range of tabular tasks through query-based conditional prediction with a single model. LimiX is pretrained using masked joint-distribution modeling with an episodic, context-conditional objective, in which the model makes predictions for query subsets conditioned on dataset-specific contexts, supporting rapid, training-free adaptation at inference. We evaluate LimiX across 10 large structured-data benchmarks covering broad regimes of sample size, feature dimensionality, class number, categorical-to-numerical feature ratio, missingness, and sample-to-feature ratio. With a single model and a unified interface, LimiX consistently surpasses strong baselines including gradient-boosting trees, deep tabular networks, recent tabular foundation models, and automated ensembles, as shown in Figure 1 and Figure 2. The superiority holds across a wide range of tasks, such as classification, regression, missing value imputation, and data generation, often by substantial margins, while avoiding task-specific architectures or bespoke training per task. All LimiX models are publicly accessible under Apache 2.0.
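To make the episodic, context-conditional setup more concrete, the following is a minimal sketch of how one training episode might be assembled from a table. It is not LimiX's actual pipeline: the function `make_episode`, the row split, the uniform random cell masking, and the use of NaN as the mask marker are all assumptions made for illustration.

```python
import numpy as np

def make_episode(table, n_context, mask_rate, rng):
    """Split rows into context and query sets, then hide random query cells.

    Hypothetical helper for illustration only; the abstract does not
    specify how contexts, queries, or masks are sampled.
    """
    idx = rng.permutation(table.shape[0])
    context = table[idx[:n_context]]              # fully observed rows
    query = table[idx[n_context:]].astype(float)  # rows to predict on
    mask = rng.random(query.shape) < mask_rate    # True = cell is hidden
    targets = query[mask]                         # values the model must recover
    query_in = query.copy()
    query_in[mask] = np.nan                       # assumed mask marker
    return context, query_in, mask, targets

rng = np.random.default_rng(0)
table = rng.normal(size=(8, 4))                   # toy table: 8 rows, 4 columns
context, query_in, mask, targets = make_episode(table, 5, 0.5, rng)
```

Under this framing, a model would receive `context` and `query_in` and be scored on how well it recovers `targets`. Masking only a label column would correspond to classification or regression, while masking arbitrary cells would correspond to imputation.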

Crime Forecasting: A Spatio-temporal Analysis with Deep Learning Models

Feb 11, 2025

Abstract: This study uses deep-learning models to predict crime counts in city partitions on specific days. Such forecasts help police enhance surveillance, gather intelligence, and proactively prevent crimes. We formulate crime count prediction as a spatiotemporal sequence problem, where both the input data and the prediction targets are spatiotemporal sequences. To improve the accuracy of crime forecasting, we introduce a new model that combines Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks. We conducted a comparative analysis to assess the effects of various data sequences, including raw and binned data, on the prediction errors of four deep learning forecasting models. Directly inputting raw crime data into the forecasting model causes high prediction errors, making the model unsuitable for real-world use. The findings indicate that the proposed CNN-LSTM model achieves optimal performance when crime data is categorized into 10 or 5 groups. Data binning can enhance forecasting model performance, but poorly defined intervals may reduce map granularity. Compared to dividing into 5 bins, binning into 10 intervals strikes an optimal balance, preserving data characteristics and surpassing raw data in predictive modelling efficacy.
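The binning step described above can be illustrated with a short sketch. The abstract does not say how the intervals are defined, so equal-width bins are assumed here; the function `bin_counts` and the toy 3x3 grid of per-partition counts are hypothetical.

```python
import numpy as np

def bin_counts(counts, n_bins):
    """Map raw counts to equal-width bin indices in [0, n_bins - 1].

    Equal-width intervals are an assumption; the study may define
    its intervals differently.
    """
    counts = np.asarray(counts, dtype=float)
    lo, hi = counts.min(), counts.max()
    if hi == lo:
        return np.zeros(counts.shape, dtype=int)
    edges = np.linspace(lo, hi, n_bins + 1)
    # Use interior edges only so the maximum value lands in the last bin.
    return np.digitize(counts, edges[1:-1])

# Toy crime counts for a 3x3 grid of city partitions on one day.
grid = np.array([[0, 3, 12],
                 [7, 25, 50],
                 [1, 0, 18]])
binned10 = bin_counts(grid, 10)
binned5 = bin_counts(grid, 5)
```

With 5 bins, the counts 0, 3, and 7 all collapse into the same group, whereas 10 bins keep 7 separate from 0 and 3. This is one way to picture the loss of map granularity that coarser intervals can cause.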
