Karen Leung

Refining Obstacle Perception Safety Zones via Maneuver-Based Decomposition

Aug 11, 2023

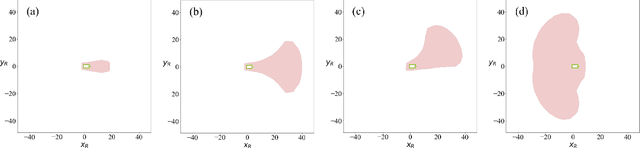

Abstract:A critical task for developing safe autonomous driving stacks is to determine whether an obstacle is safety-critical, i.e., poses an imminent threat to the autonomous vehicle. Our previous work showed that Hamilton-Jacobi reachability theory can be applied to compute interaction-dynamics-aware perception safety zones that better inform an ego vehicle's perception module which obstacles are considered safety-critical. For completeness, these zones are typically larger than absolutely necessary, forcing the perception module to pay attention to a larger collection of objects for the sake of conservatism. As an improvement, we propose a maneuver-based decomposition of our safety zones that leverages information about the ego maneuver to reduce the zone volume. In particular, we propose a "temporal convolution" operation that produces safety zones for specific ego maneuvers, thus limiting the ego's behavior to reduce the size of the safety zones. We show with numerical experiments that maneuver-based zones are significantly smaller (up to 76% size reduction) than the baseline while maintaining completeness.

Task-Aware Risk Estimation of Perception Failures for Autonomous Vehicles

May 03, 2023

Abstract:Safety and performance are key enablers for autonomous driving: on the one hand we want our autonomous vehicles (AVs) to be safe, while at the same time their performance (e.g., comfort or progression) is key to adoption. To effectively walk the tightrope between safety and performance, AVs need to be risk-averse, but not entirely risk-avoidant. To facilitate safe-yet-performant driving, in this paper, we develop a task-aware risk estimator that assesses the risk a perception failure poses to the AV's motion plan. If the failure has no bearing on the safety of the AV's motion plan, then regardless of how egregious the perception failure is, our task-aware risk estimator considers the failure to have a low risk; on the other hand, if a seemingly benign perception failure severely impacts the motion plan, then our estimator considers it to have a high risk. We use this estimator to decide whether a safety maneuver needs to be triggered. To estimate the task-aware risk, first, we leverage the perception failure, detected by a perception monitor, to synthesize an alternative plausible model for the vehicle's surroundings. The risk due to the perception failure is then formalized as the "relative" risk to the AV's motion plan between the perceived and the alternative plausible scenario. We employ a statistical tool called a copula, which models tail dependencies between distributions, to estimate this risk. The theoretical properties of the copula allow us to compute probably approximately correct (PAC) estimates of the risk. We evaluate our task-aware risk estimator using NuPlan and compare it with established baselines, showing that the proposed risk estimator achieves the best F1-score (doubling the score of the best baseline) and exhibits a good balance between recall and precision, i.e., a good balance of safety and performance.

HALO: Hazard-Aware Landing Optimization for Autonomous Systems

Apr 04, 2023

Abstract:With autonomous aerial vehicles enacting safety-critical missions, such as the Mars Science Laboratory Curiosity rover's landing on Mars, the task of automatically identifying and reasoning about potentially hazardous landing sites is paramount. This paper presents a coupled perception-planning solution which addresses the hazard detection, optimal landing trajectory generation, and contingency planning challenges encountered when landing in uncertain environments. Specifically, we develop and combine two novel algorithms, Hazard-Aware Landing Site Selection (HALSS) and Adaptive Deferred-Decision Trajectory Optimization (Adaptive-DDTO), to address the perception and planning challenges, respectively. The HALSS framework processes point cloud information to identify feasible safe landing zones, while Adaptive-DDTO is a multi-target contingency planner that adaptively replans as new perception information is received. We demonstrate the efficacy of our approach using a simulated Martian environment and show that our coupled perception-planning method achieves greater landing success whilst being more fuel efficient compared to a nonadaptive DDTO approach.

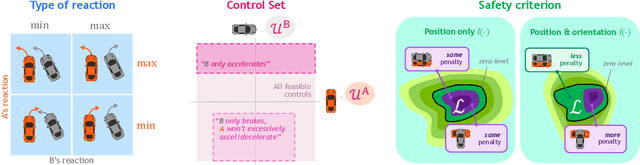

Learning Responsibility Allocations for Safe Human-Robot Interaction with Applications to Autonomous Driving

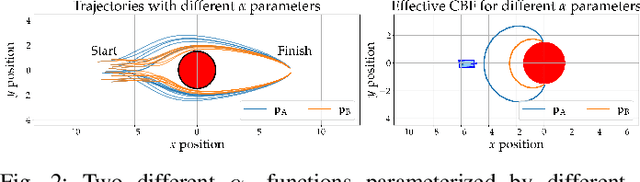

Mar 06, 2023

Abstract:Drivers have a responsibility to exercise reasonable care to avoid collision with other road users. This assumed responsibility allows interacting agents to maintain safety without explicit coordination. Thus, to enable safe autonomous vehicle (AV) interactions, AVs must understand what their responsibilities are to maintain safety and how they affect the safety of nearby agents. In this work we seek to understand how responsibility is shared in multi-agent settings where an autonomous agent is interacting with human counterparts. We introduce Responsibility-Aware Control Barrier Functions (RA-CBFs) and present a method to learn responsibility allocations from data. By combining safety-critical control and learning-based techniques, RA-CBFs allow us to account for scene-dependent responsibility allocations and synthesize safe and efficient driving behaviors without making worst-case assumptions that typically result in overly-conservative behaviors. We test our framework using real-world driving data and demonstrate its efficacy as a tool for both safe control and forensic analysis of unsafe driving.

Receding Horizon Planning with Rule Hierarchies for Autonomous Vehicles

Dec 06, 2022

Abstract:Autonomous vehicles must often contend with conflicting planning requirements, e.g., safety and comfort could be at odds with each other if avoiding a collision calls for slamming the brakes. To resolve such conflicts, assigning importance ranking to rules (i.e., imposing a rule hierarchy) has been proposed, which, in turn, induces rankings on trajectories based on the importance of the rules they satisfy. On one hand, imposing rule hierarchies can enhance interpretability, but introduces combinatorial complexity to planning; on the other hand, differentiable reward structures can be leveraged by modern gradient-based optimization tools, but are less interpretable and unintuitive to tune. In this paper, we present an approach to equivalently express rule hierarchies as differentiable reward structures amenable to modern gradient-based optimizers, thereby achieving the best of both worlds. We achieve this by formulating rank-preserving reward functions that are monotonic in the rank of the trajectories induced by the rule hierarchy; i.e., higher ranked trajectories receive higher reward. Equipped with a rule hierarchy and its corresponding rank-preserving reward function, we develop a two-stage planner that can efficiently resolve conflicting planning requirements. We demonstrate that our approach can generate motion plans at ~7-10 Hz for various challenging road navigation and intersection negotiation scenarios.
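As a rough illustration of what "rank-preserving" means, the sketch below scores a trajectory from a list of rule-satisfaction flags ordered from most to least important. It assumes a strict lexicographic ranking and uses power-of-two weights so that satisfying a more important rule always outweighs every less important rule combined. The function name, the boolean encoding, and the weighting are assumptions made for illustration; the abstract describes differentiable reward functions, whose exact form is not given here.

```python
from itertools import product


def rank_preserving_reward(satisfied):
    """Score a trajectory from rule-satisfaction flags.

    `satisfied` is ordered from the most important rule to the least
    important one. Rule i gets weight 2**(n - 1 - i), which exceeds the
    sum of all lower-priority weights, so the score is monotonic in the
    lexicographic rank of the flags (an illustrative assumption).
    """
    n = len(satisfied)
    return sum(2 ** (n - 1 - i) for i, ok in enumerate(satisfied) if ok)


# Enumerate every outcome for three rules, best lexicographic rank first.
outcomes = sorted(product([True, False], repeat=3), reverse=True)
rewards = [rank_preserving_reward(o) for o in outcomes]

for outcome, reward in zip(outcomes, rewards):
    print(outcome, reward)
```

Under this encoding, a trajectory that satisfies only the top rule still scores higher than one that satisfies every rule except the top one, which is the ordering a rule hierarchy is meant to enforce.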

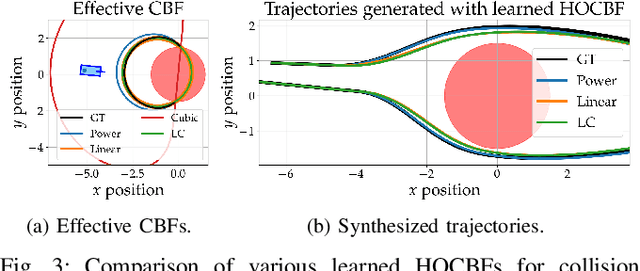

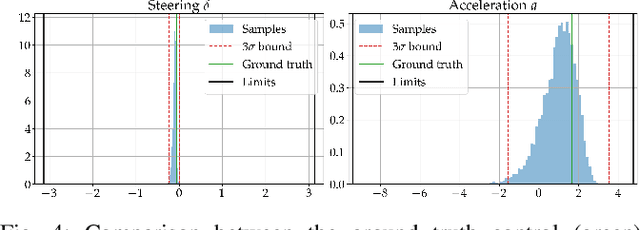

Learning Autonomous Vehicle Safety Concepts from Demonstrations

Oct 06, 2022

Abstract:Evaluating the safety of an autonomous vehicle (AV) depends on the behavior of surrounding agents which can be heavily influenced by factors such as environmental context and informally-defined driving etiquette. A key challenge is in determining a minimum set of assumptions on what constitutes reasonably foreseeable behaviors of other road users for the development of AV safety models and techniques. In this paper, we propose a data-driven AV safety design methodology that first learns "reasonable" behavioral assumptions from data, and then synthesizes an AV safety concept using these learned behavioral assumptions. We borrow techniques from control theory, namely high order control barrier functions and Hamilton-Jacobi reachability, to provide inductive bias to aid interpretability, verifiability, and tractability of our approach. In our experiments, we learn an AV safety concept using demonstrations collected from a highway traffic-weaving scenario, compare our learned concept to existing baselines, and showcase its efficacy in evaluating real-world driving logs.

Task-Relevant Failure Detection for Trajectory Predictors in Autonomous Vehicles

Jul 25, 2022

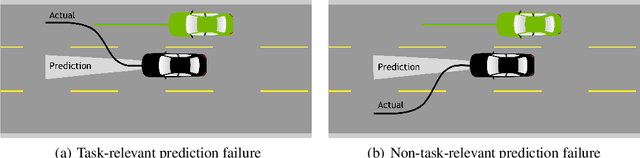

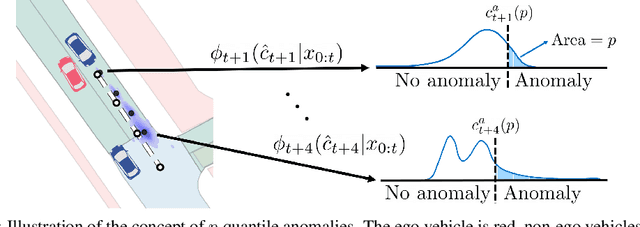

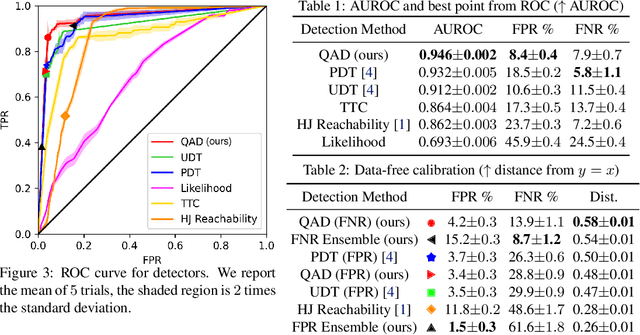

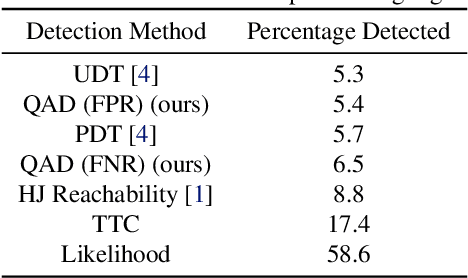

Abstract:In modern autonomy stacks, prediction modules are paramount to planning motions in the presence of other mobile agents. However, failures in prediction modules can mislead the downstream planner into making unsafe decisions. Indeed, the high uncertainty inherent to the task of trajectory forecasting ensures that such mispredictions occur frequently. Motivated by the need to improve safety of autonomous vehicles without compromising on their performance, we develop a probabilistic run-time monitor that detects when a "harmful" prediction failure occurs, i.e., a task-relevant failure detector. We achieve this by propagating trajectory prediction errors to the planning cost to reason about their impact on the AV. Furthermore, our detector comes equipped with performance measures on the false-positive and the false-negative rate and allows for data-free calibration. In our experiments, we compared our detector with various others and found that our detector has the highest area under the receiver operating characteristic curve.
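For readers unfamiliar with the metric named at the end of this abstract, the area under the receiver operating characteristic curve can be computed as the probability that a randomly chosen true failure receives a higher detector score than a randomly chosen non-failure, with ties counted as one half. The sketch below is a minimal pure-Python version of that pairwise definition; the function name and the toy scores are hypothetical and are not taken from the paper's experiments.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    `scores` are detector outputs (higher means "more likely a failure")
    and `labels` are truthy for actual failures. Ties count as 0.5.
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (made up): two failures and two non-failures.
toy_scores = [0.9, 0.2, 0.8, 0.1]
toy_labels = [1, 1, 0, 0]
toy_auc = roc_auc(toy_scores, toy_labels)
print(toy_auc)
```

The pairwise form is quadratic in the number of samples, which is fine for small evaluations; a sort-based computation would be the usual choice for large ones.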

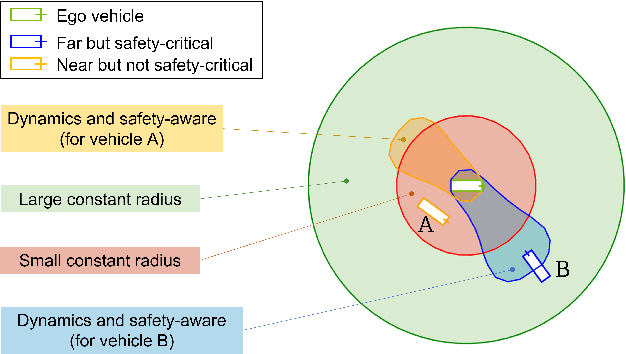

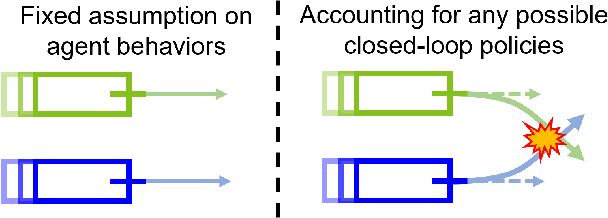

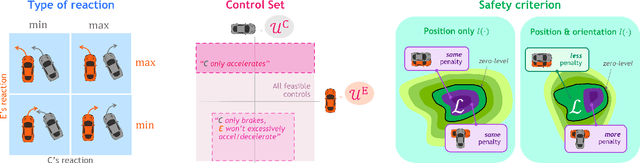

Interaction-Dynamics-Aware Perception Zones for Obstacle Detection Safety Evaluation

Jun 24, 2022

Abstract:To enable safe autonomous vehicle (AV) operations, it is critical that an AV's obstacle detection module can reliably detect obstacles that pose a safety threat (i.e., are safety-critical). It is therefore desirable that the evaluation metric for the perception system captures the safety-criticality of objects. Unfortunately, existing perception evaluation metrics tend to make strong assumptions about the objects and ignore the dynamic interactions between agents, and thus do not accurately capture the safety risks in reality. To address these shortcomings, we introduce an interaction-dynamics-aware obstacle detection evaluation metric by accounting for closed-loop dynamic interactions between an ego vehicle and obstacles in the scene. By borrowing existing theory from optimal control, namely Hamilton-Jacobi reachability, we present a computationally tractable method for constructing a "safety zone": a region in state space that defines where safety-critical obstacles lie for the purpose of defining safety metrics. Our proposed safety zone is mathematically complete, and can be easily computed to reflect a variety of safety requirements. Using an off-the-shelf detection algorithm from the nuScenes detection challenge leaderboard, we demonstrate that our approach is computationally lightweight, and can better capture safety-critical perception errors than a baseline approach.

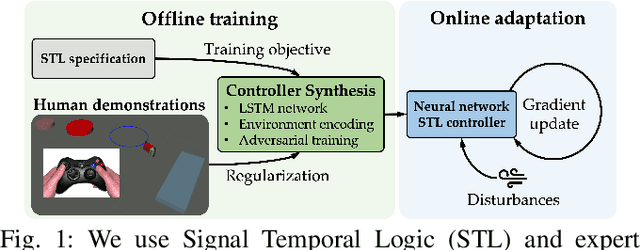

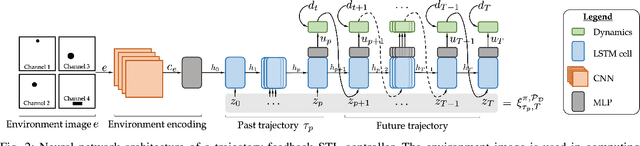

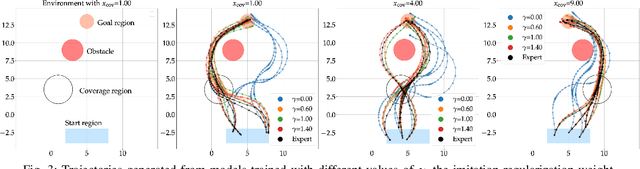

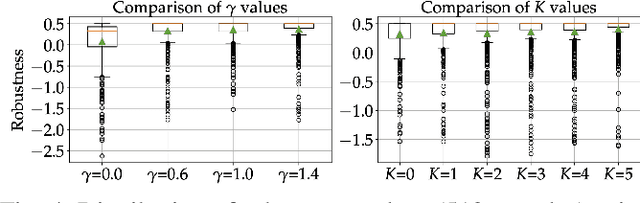

Semi-Supervised Trajectory-Feedback Controller Synthesis for Signal Temporal Logic Specifications

Feb 04, 2022

Abstract:There are spatio-temporal rules that dictate how robots should operate in complex environments, e.g., road rules govern how (self-driving) vehicles should behave on the road. However, seamlessly incorporating such rules into a robot control policy remains challenging especially for real-time applications. In this work, given a desired spatio-temporal specification expressed in the Signal Temporal Logic (STL) language, we propose a semi-supervised controller synthesis technique that is attuned to human-like behaviors while satisfying desired STL specifications. Offline, we synthesize a trajectory-feedback neural network controller via an adversarial training scheme that summarizes past spatio-temporal behaviors when computing controls, and then online, we perform gradient steps to improve specification satisfaction. Central to the offline phase is an imitation-based regularization component that fosters better policy exploration and helps induce naturalistic human behaviors. Our experiments demonstrate that having imitation-based regularization leads to higher qualitative and quantitative performance compared to optimizing an STL objective only as done in prior work. We demonstrate the efficacy of our approach with an illustrative case study and show that our proposed controller outperforms a state-of-the-art shooting method in both performance and computation time.
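To make "specification satisfaction" concrete, STL formulas are commonly scored with a quantitative robustness value: positive when the signal satisfies the formula, negative when it violates it, with magnitude indicating the margin. The sketch below evaluates the standard robustness of two simple formulas over a finite, discretely sampled signal. The helper names and the speed trace are hypothetical, and the sketch covers only formula evaluation, not the controller synthesis described in the abstract.

```python
def rho_always_below(signal, c):
    """Robustness of 'always (x < c)': the worst-case margin c - x."""
    return min(c - x for x in signal)


def rho_eventually_above(signal, c):
    """Robustness of 'eventually (x > c)': the best-case margin x - c."""
    return max(x - c for x in signal)


def rho_and(r1, r2):
    """Robustness of a conjunction is the minimum of its operands."""
    return min(r1, r2)


# Hypothetical speed trace (m/s), sampled at uniform time steps.
speed = [10.0, 12.0, 14.0, 13.0]

stay_under_limit = rho_always_below(speed, 15.0)
reach_cruise = rho_eventually_above(speed, 13.5)
both = rho_and(stay_under_limit, reach_cruise)
print(stay_under_limit, reach_cruise, both)
```

Because `min` and `max` are only piecewise differentiable, gradient-based approaches often replace them with smooth approximations; whether and how that is done in this work is not stated in the abstract.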

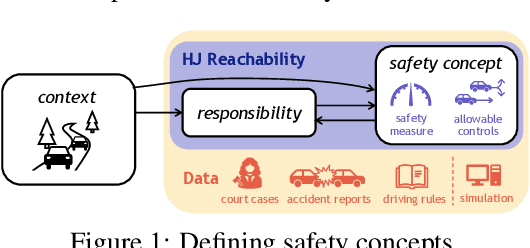

Towards the Unification and Data-Driven Synthesis of Autonomous Vehicle Safety Concepts

Jul 30, 2021

Abstract:As safety-critical autonomous vehicles (AVs) will soon become pervasive in our society, a number of safety concepts for trusted AV deployment have been recently proposed throughout industry and academia. Yet, agreeing upon an "appropriate" safety concept is still an elusive task. In this paper, we advocate for the use of Hamilton-Jacobi (HJ) reachability as a unifying mathematical framework for comparing existing safety concepts, and propose ways to expand its modeling premises in a data-driven fashion. Specifically, we show that (i) existing predominant safety concepts can be embedded in the HJ reachability framework, thereby enabling a common language for comparing and contrasting modeling assumptions, and (ii) HJ reachability can serve as an inductive bias to effectively reason, in a data-driven context, about two critical, yet often overlooked aspects of safety: responsibility and context-dependency.
