Junwen Chen

When Rules Fall Short: Agent-Driven Discovery of Emerging Content Issues in Short Video Platforms

Jan 14, 2026

Abstract:Trends on short-video platforms evolve at a rapid pace, with new content issues emerging every day that fall outside the coverage of existing annotation policies. However, traditional human-driven discovery of emerging issues is too slow, which leads to delayed updates of annotation policies and poses a major challenge for effective content governance. In this work, we propose an automatic issue discovery method based on multimodal LLM agents. Our approach automatically recalls short videos containing potential new issues and applies a two-stage clustering strategy to group them, with each cluster corresponding to a newly discovered issue. The agent then generates updated annotation policies from these clusters, thereby extending coverage to these emerging issues. Our agent has been deployed in the real system. Both offline and online experiments demonstrate that this agent-based method significantly improves the effectiveness of emerging-issue discovery (with an F1 score improvement of over 20%) and enhances the performance of subsequent issue governance (reducing the view count of problematic videos by approximately 15%). More importantly, compared to manual issue discovery, it greatly reduces time costs and substantially accelerates the iteration of annotation policies.

PosBridge: Multi-View Positional Embedding Transplant for Identity-Aware Image Editing

Aug 24, 2025

Abstract:Localized subject-driven image editing aims to seamlessly integrate user-specified objects into target scenes. As generative models continue to scale, training becomes increasingly costly in terms of memory and computation, highlighting the need for training-free and scalable editing frameworks. To this end, we propose PosBridge, an efficient and flexible framework for inserting custom objects. A key component of our method is positional embedding transplant, which guides the diffusion model to faithfully replicate the structural characteristics of reference objects. Meanwhile, we introduce the Corner Centered Layout, which concatenates reference images and the background image as input to the FLUX.1-Fill model. During progressive denoising, positional embedding transplant is applied to guide the noise distribution in the target region toward that of the reference object. In this way, Corner Centered Layout effectively directs the FLUX.1-Fill model to synthesize identity-consistent content at the desired location. Extensive experiments demonstrate that PosBridge outperforms mainstream baselines in structural consistency, appearance fidelity, and computational efficiency, showcasing its practical value and potential for broad adoption.

MMMG: A Massive, Multidisciplinary, Multi-Tier Generation Benchmark for Text-to-Image Reasoning

Jun 12, 2025

Abstract:In this paper, we introduce knowledge image generation as a new task, alongside the Massive Multi-Discipline Multi-Tier Knowledge-Image Generation Benchmark (MMMG) to probe the reasoning capability of image generation models. Knowledge images have been central to human civilization and to the mechanisms of human learning--a fact underscored by dual-coding theory and the picture-superiority effect. Generating such images is challenging, demanding multimodal reasoning that fuses world knowledge with pixel-level grounding into clear explanatory visuals. To enable comprehensive evaluation, MMMG offers 4,456 expert-validated (knowledge) image-prompt pairs spanning 10 disciplines, 6 educational levels, and diverse knowledge formats such as charts, diagrams, and mind maps. To eliminate confounding complexity during evaluation, we adopt a unified Knowledge Graph (KG) representation. Each KG explicitly delineates a target image's core entities and their dependencies. We further introduce MMMG-Score to evaluate generated knowledge images. This metric combines factual fidelity, measured by graph-edit distance between KGs, with visual clarity assessment. Comprehensive evaluations of 16 state-of-the-art text-to-image generation models expose serious reasoning deficits--low entity fidelity, weak relations, and clutter--with GPT-4o achieving an MMMG-Score of only 50.20, underscoring the benchmark's difficulty. To spur further progress, we release FLUX-Reason (MMMG-Score of 34.45), an effective and open baseline that combines a reasoning LLM with diffusion models and is trained on 16,000 curated knowledge image-prompt pairs.
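
As a rough illustration of comparing a generated image's knowledge graph against a reference one, the sketch below counts insert and delete edits over entities and relations. It is a deliberate simplification, not the MMMG-Score: it assumes entities are matched by exact label and ignores substitutions and the visual clarity term. The function name `kg_edit_count` and the example graphs are hypothetical.

```python
def kg_edit_count(reference, generated):
    """Count insert/delete edits between two labeled knowledge graphs.

    Each graph is a (nodes, edges) pair: nodes is a set of entity labels,
    edges is a set of (head, relation, tail) triples. This is a simplified
    stand-in for graph-edit distance (assumption: exact label matching,
    unit cost per edit, no substitutions).
    """
    ref_nodes, ref_edges = reference
    gen_nodes, gen_edges = generated
    # Symmetric difference = items missing from one graph or the other.
    node_edits = len(ref_nodes ^ gen_nodes)
    edge_edits = len(ref_edges ^ gen_edges)
    return node_edits + edge_edits


# Hypothetical reference KG and a generated KG that omits one entity
# and the relation attached to it.
reference_kg = (
    {"sun", "earth", "moon"},
    {("earth", "orbits", "sun"), ("moon", "orbits", "earth")},
)
generated_kg = (
    {"sun", "earth"},
    {("earth", "orbits", "sun")},
)
print(kg_edit_count(reference_kg, generated_kg))
```

A lower count means the generated graph is closer to the reference; identical graphs give zero.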

PrismLayers: Open Data for High-Quality Multi-Layer Transparent Image Generative Models

May 28, 2025

Abstract:Generating high-quality, multi-layer transparent images from text prompts can unlock a new level of creative control, allowing users to edit each layer as effortlessly as editing text outputs from LLMs. However, the development of multi-layer generative models lags behind that of conventional text-to-image models due to the absence of a large, high-quality corpus of multi-layer transparent data. In this paper, we address this fundamental challenge by: (i) releasing the first open, ultra-high-fidelity PrismLayers (PrismLayersPro) dataset of 200K (20K) multi-layer transparent images with accurate alpha mattes, (ii) introducing a training-free synthesis pipeline that generates such data on demand using off-the-shelf diffusion models, and (iii) delivering a strong, open-source multi-layer generation model, ART+, which matches the aesthetics of modern text-to-image generation models. The key technical contributions include: LayerFLUX, which excels at generating high-quality single transparent layers with accurate alpha mattes, and MultiLayerFLUX, which composes multiple LayerFLUX outputs into complete images, guided by human-annotated semantic layout. To ensure higher quality, we apply a rigorous filtering stage to remove artifacts and semantic mismatches, followed by human selection. Fine-tuning the state-of-the-art ART model on our synthetic PrismLayersPro yields ART+, which outperforms the original ART in 60% of head-to-head user study comparisons and even matches the visual quality of images generated by the FLUX.1-[dev] model. We anticipate that our work will establish a solid dataset foundation for the multi-layer transparent image generation task, enabling research and applications that require precise, editable, and visually compelling layered imagery.
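
To make concrete what flattening transparent layers with alpha mattes into one image involves, here is a minimal sketch of the standard Porter-Duff "over" operator applied back to front. It is generic alpha compositing, not the MultiLayerFLUX pipeline; the helper names `over` and `flatten` and the tiny one-pixel layers are assumptions for illustration.

```python
def over(top, bottom):
    """Composite one straight-alpha RGBA pixel over another (Porter-Duff 'over').

    Pixels are (r, g, b, a) tuples with every channel in [0, 1].
    """
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        # Both pixels fully transparent: color is undefined, return clear.
        return (0.0, 0.0, 0.0, 0.0)

    def blend(t, b):
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def flatten(layers):
    """Flatten same-sized RGBA layers, listed back to front, into one image."""
    height, width = len(layers[0]), len(layers[0][0])
    canvas = [[(0.0, 0.0, 0.0, 0.0)] * width for _ in range(height)]
    for layer in layers:
        canvas = [
            [over(layer[y][x], canvas[y][x]) for x in range(width)]
            for y in range(height)
        ]
    return canvas


# Hypothetical 1x1 layers: opaque blue background, half-transparent red on top.
background = [[(0.0, 0.0, 1.0, 1.0)]]
foreground = [[(1.0, 0.0, 0.0, 0.5)]]
composite = flatten([background, foreground])
print(composite[0][0])  # (0.5, 0.0, 0.5, 1.0)
```

Because each layer keeps its own alpha channel, any single layer can be edited or replaced and the image re-flattened without touching the others.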

Transformation vs Tradition: Artificial General Intelligence (AGI) for Arts and Humanities

Oct 30, 2023

Abstract:Recent advances in artificial general intelligence (AGI), particularly large language models and creative image generation systems, have demonstrated impressive capabilities on diverse tasks spanning the arts and humanities. However, the swift evolution of AGI has also raised critical questions about its responsible deployment in these culturally significant domains traditionally seen as profoundly human. This paper provides a comprehensive analysis of the applications and implications of AGI for text, graphics, audio, and video pertaining to arts and the humanities. We survey cutting-edge systems and their usage in areas ranging from poetry to history, marketing to film, and communication to classical art. We outline substantial concerns pertaining to factuality, toxicity, biases, and public safety in AGI systems, and propose mitigation strategies. The paper argues for multi-stakeholder collaboration to ensure AGI promotes creativity, knowledge, and cultural values without undermining truth or human dignity. Our timely contribution summarizes a rapidly developing field, highlighting promising directions while advocating for responsible progress centering on human flourishing. The analysis lays the groundwork for further research on aligning AGI's technological capacities with enduring social goods.

ATM: Action Temporality Modeling for Video Question Answering

Sep 05, 2023

Abstract:Despite significant progress in video question answering (VideoQA), existing methods fall short of questions that require causal/temporal reasoning across frames. This can be attributed to imprecise motion representations. We introduce Action Temporality Modeling (ATM) for temporality reasoning via three-fold uniqueness: (1) rethinking the optical flow and realizing that optical flow is effective in capturing the long horizon temporality reasoning; (2) training the visual-text embedding by contrastive learning in an action-centric manner, leading to better action representations in both vision and text modalities; and (3) preventing the model from answering the question given the shuffled video in the fine-tuning stage, to avoid spurious correlation between appearance and motion and hence ensure faithful temporality reasoning. In the experiments, we show that ATM outperforms previous approaches in terms of the accuracy on multiple VideoQAs and exhibits better true temporality reasoning ability.

Defending Adversarial Patches via Joint Region Localizing and Inpainting

Jul 26, 2023

Abstract:Deep neural networks are successfully used in various applications, but remain vulnerable to adversarial examples. With the development of adversarial patches, the feasibility of attacks in physical scenes increases, and defenses against patch attacks are urgently needed. However, defending against such adversarial patch attacks is still an unsolved problem. In this paper, we analyse the properties of adversarial patches, and find that: on the one hand, adversarial patches will lead to appearance or contextual inconsistency in the target objects; on the other hand, the patch region will show abnormal changes on the high-level feature maps of the objects extracted by a backbone network. Considering the above two points, we propose a novel defense method based on a "localizing and inpainting" mechanism to pre-process the input examples. Specifically, we design a unified framework, where the "localizing" sub-network utilizes a two-branch structure to represent the above two aspects to accurately detect the adversarial patch region in the image. The "inpainting" sub-network utilizes the surrounding contextual cues to recover the original content covered by the adversarial patch. The quality of inpainted images is also evaluated by measuring the appearance consistency and the effects of adversarial attacks. These two sub-networks are then jointly trained in an iterative optimization manner. In this way, the "localizing" and "inpainting" modules can interact closely with each other, and thus learn a better solution. A series of experiments on traffic sign classification and detection tasks are conducted to defend against various adversarial patch attacks.

Focusing on what to decode and what to train: Efficient Training with HOI Split Decoders and Specific Target Guided DeNoising

Jul 05, 2023

Abstract:Recent one-stage transformer-based methods achieve notable gains in the Human-object Interaction Detection (HOI) task by leveraging the detection of DETR. However, the current methods redirect the detection target of the object decoder, and the box target is not explicitly separated from the query embeddings, which leads to long and hard training. Furthermore, matching the predicted HOI instances with the ground-truth is more challenging than object detection, so simply adapting training strategies from object detection makes the training more difficult. To clear the ambiguity between human and object detection and share the prediction burden, we propose a novel one-stage framework (SOV), which consists of a subject decoder, an object decoder, and a verb decoder. Moreover, we propose a novel Specific Target Guided (STG) DeNoising strategy, which leverages learnable object and verb label embeddings to guide the training and accelerates the training convergence. In addition, for the inference part, the label-specific information is directly fed into the decoders by initializing the query embeddings from the learnable label embeddings. Without additional features or prior language knowledge, our method (SOV-STG) achieves higher accuracy than the state-of-the-art method in one-third of training epochs. The code is available at https://github.com/cjw2021/SOV-STG.

GateHUB: Gated History Unit with Background Suppression for Online Action Detection

Jun 09, 2022

Abstract:Online action detection is the task of predicting the action as soon as it happens in a streaming video. A major challenge is that the model does not have access to the future and has to solely rely on the history, i.e., the frames observed so far, to make predictions. It is therefore important to accentuate parts of the history that are more informative to the prediction of the current frame. We present GateHUB, Gated History Unit with Background Suppression, that comprises a novel position-guided gated cross-attention mechanism to enhance or suppress parts of the history as per how informative they are for current frame prediction. GateHUB further proposes Future-augmented History (FaH) to make history features more informative by using subsequently observed frames when available. In a single unified framework, GateHUB integrates the transformer's ability of long-range temporal modeling and the recurrent model's capacity to selectively encode relevant information. GateHUB also introduces a background suppression objective to further mitigate false positive background frames that closely resemble the action frames. Extensive validation on three benchmark datasets, THUMOS, TVSeries, and HDD, demonstrates that GateHUB significantly outperforms all existing methods and is also more efficient than the existing best work. Furthermore, a flow-free version of GateHUB is able to achieve higher or close accuracy at 2.8x higher frame rate compared to all existing methods that require both RGB and optical flow information for prediction.

Transformer-based Cross-Modal Recipe Embeddings with Large Batch Training

May 10, 2022

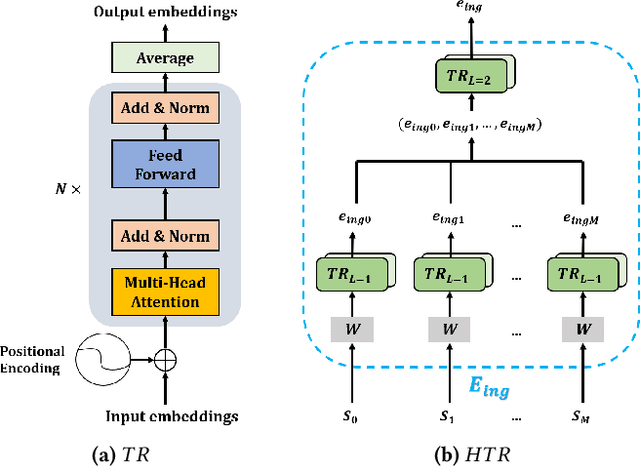

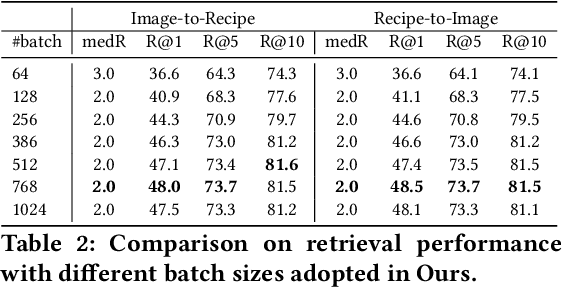

Abstract:In this paper, we present a cross-modal recipe retrieval framework, Transformer-based Network for Large Batch Training (TNLBT), which is inspired by ACME (Adversarial Cross-Modal Embedding) and H-T (Hierarchical Transformer). TNLBT aims to accomplish retrieval tasks while generating images from recipe embeddings. We apply the Hierarchical Transformer-based recipe text encoder, the Vision Transformer (ViT)-based recipe image encoder, and an adversarial network architecture to enable better cross-modal embedding learning for recipe texts and images. In addition, we use self-supervised learning to exploit the rich information in the recipe texts having no corresponding images. Since contrastive learning could benefit from a larger batch size according to the recent literature on self-supervised learning, we adopt a large batch size during training and have validated its effectiveness. In the experiments, the proposed framework significantly outperformed the current state-of-the-art frameworks in both cross-modal recipe retrieval and image generation tasks on the benchmark Recipe1M. This is the first work which confirmed the effectiveness of large batch training on cross-modal recipe embeddings.
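
One way to see why batch size matters for contrastive learning is an in-batch contrastive (InfoNCE-style) loss, where every other item in the batch serves as a negative, so a batch of B pairs gives each recipe text B-1 negative images. The sketch below is a generic illustration under that assumption and is not necessarily the loss TNLBT uses; the function name, the temperature value, and the toy embeddings are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def in_batch_contrastive_loss(text_embs, image_embs, temperature=0.1):
    """Text-to-image InfoNCE-style loss over one batch of paired embeddings.

    Pair i is (text_embs[i], image_embs[i]); all other images in the batch
    act as negatives for text i. Illustrative only (assumed formulation).
    """
    texts = [normalize(v) for v in text_embs]
    images = [normalize(v) for v in image_embs]
    total = 0.0
    for i, text in enumerate(texts):
        logits = [dot(text, image) / temperature for image in images]
        # Numerically stable log-sum-exp over the whole batch.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[i]
    return total / len(texts)


# Toy 2-D embeddings: correctly paired versus deliberately swapped images.
toy_texts = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = in_batch_contrastive_loss(toy_texts, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = in_batch_contrastive_loss(toy_texts, [[0.0, 1.0], [1.0, 0.0]])
print(aligned_loss < swapped_loss)  # True
```

The loss is small when each text is closest to its own image and grows when a negative in the batch scores higher, which is why more (and harder) in-batch negatives can give a stronger training signal.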
