Jun Hu

NVIDIA, Duke University

Quality assurance of organs-at-risk delineation in radiotherapy

May 20, 2024

Abstract:The delineation of tumor targets and organs-at-risk (OARs) is critical in radiotherapy treatment planning. Automatic segmentation can reduce physician workload and improve consistency. However, quality assurance of automatic segmentation remains an unmet need in clinical practice. The patient data used in our study came from a standardized dataset from the AAPM Thoracic Auto-Segmentation Challenge. The OARs included were the left and right lungs, heart, esophagus, and spinal cord. Two groups of OARs were generated: the benchmark dataset, manually contoured by experienced physicians, and the test dataset, automatically created using the software AccuContour. A ResNet-152 network was used as the feature extractor, and a one-class support vector classifier was used to classify contours as high or low quality. We evaluated model performance with balanced accuracy, F-score, sensitivity, specificity, and the area under the receiver operating characteristic curve (AUC). We randomly generated contour errors to assess the generalization of our method, explored the detection limit, and evaluated the correlations between the detection limit and various metrics such as volume, Dice similarity coefficient, Hausdorff distance, and mean surface distance. The proposed one-class classifier achieved better performance in metrics such as balanced accuracy and AUC, among others. The proposed method showed significant improvement over binary classifiers in handling various types of errors. Our proposed model, which introduces a residual network and an attention mechanism into the one-class classification framework, was able to detect the various types of OAR contour errors with high accuracy. The proposed method can significantly reduce the burden of physician review for contour delineation.
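
Two of the metrics named in the abstract above, the Dice similarity coefficient and balanced accuracy, have standard definitions that can be sketched in a few lines. The snippet below is only an illustration of those textbook formulas, not the authors' evaluation code; the function names, the flat 0/1 mask representation, and the convention for two empty masks are assumptions made for this example.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient for two binary masks given as flat 0/1 sequences."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2.0
```

Balanced accuracy is a natural choice when high-quality and low-quality contours are unevenly represented, because it weights the two classes equally regardless of their counts.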

Square-Root Inverse Filter-based GNSS-Visual-Inertial Navigation

May 17, 2024

Abstract:While Global Navigation Satellite System (GNSS) is often used to provide global positioning if available, its intermittency and/or inaccuracy call for fusion with other sensors. In this paper, we develop a novel GNSS-Visual-Inertial Navigation System (GVINS) that fuses visual, inertial, and raw GNSS measurements within the square-root inverse sliding window filtering (SRI-SWF) framework in a tightly coupled fashion, which we term SRI-GVINS. In particular, for the first time, we deeply fuse the GNSS pseudorange, Doppler shift, single-differenced pseudorange, and double-differenced carrier phase measurements, along with the visual-inertial measurements. Inherited from the SRI-SWF, the proposed SRI-GVINS gains significant numerical stability and computational efficiency over the state-of-the-art methods. Additionally, we propose to use a filter to sequentially initialize the reference frame transformation until it converges, rather than collecting measurements for batch optimization. We also perform online calibration of GNSS-IMU extrinsic parameters to mitigate possible extrinsic parameter degradation. The proposed SRI-GVINS is extensively evaluated on our own collected UAV datasets, and the results demonstrate that the proposed method is able to suppress VIO drift in real time and also show the effectiveness of online GNSS-IMU extrinsic calibration. The experimental validation on public datasets further reveals that the proposed SRI-GVINS outperforms the state-of-the-art methods in terms of both accuracy and efficiency.

A hybrid iterative method based on MIONet for PDEs: Theory and numerical examples

Feb 11, 2024

Abstract:We propose a hybrid iterative method based on MIONet for PDEs, which combines a traditional numerical iterative solver with the recent powerful machine learning method of neural operators, and we systematically analyze its theoretical properties, including the convergence condition, the spectral behavior, and the convergence rate, in terms of the errors of the discretization and the model inference. We show the theoretical results for the frequently used smoothers, i.e. Richardson (damped Jacobi) and Gauss-Seidel. We give an upper bound on the convergence rate of the hybrid method w.r.t. the model correction period, which indicates a minimum point at which the hybrid iteration converges fastest. Several numerical examples, including the hybrid Richardson (Gauss-Seidel) iteration for the 1-d (2-d) Poisson equation, are presented to verify our theoretical results and also show an excellent acceleration effect. As a meshless acceleration method, it has enormous potential for practical applications.
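
To make the Richardson (damped Jacobi) smoother mentioned above concrete, here is a minimal sketch for the 1-d Poisson problem -u'' = f on (0, 1) with zero boundary values, discretized by second-order finite differences. It shows only the classical smoother; the periodic MIONet correction that makes the method hybrid is not reproduced here. The function name, the damping factor `omega = 2/3`, and the uniform grid are assumptions chosen for illustration.

```python
def richardson_poisson_1d(f, n_sweeps, omega=2.0 / 3.0):
    """Damped-Jacobi (Richardson) sweeps for -u'' = f on (0, 1), u(0) = u(1) = 0.

    f holds the right-hand side at the n interior points of a uniform grid.
    """
    n = len(f)
    h = 1.0 / (n + 1)
    u = [0.0] * n  # zero initial guess
    for _ in range(n_sweeps):
        new_u = []
        for i in range(n):
            left = u[i - 1] if i > 0 else 0.0       # boundary value u(0) = 0
            right = u[i + 1] if i < n - 1 else 0.0  # boundary value u(1) = 0
            # Residual of the finite-difference equation at node i.
            residual = f[i] - (2.0 * u[i] - left - right) / (h * h)
            # The matrix diagonal is 2 / h^2, so D^{-1} r = r * h^2 / 2.
            new_u.append(u[i] + omega * residual * h * h / 2.0)
        u = new_u
    return u
```

Because the diagonal of the discrete Laplacian is constant, damped Jacobi coincides here with a Richardson iteration of step size `omega * h * h / 2`. Such smoothers remove high-frequency error quickly but low-frequency error slowly, which is the gap a learned correction step is meant to fill.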

The NeurIPS 2022 Neural MMO Challenge: A Massively Multiagent Competition with Specialization and Trade

Nov 07, 2023

Abstract:In this paper, we present the results of the NeurIPS-2022 Neural MMO Challenge, which attracted 500 participants and received over 1,600 submissions. Like the previous IJCAI-2022 Neural MMO Challenge, it involved agents from 16 populations surviving in procedurally generated worlds by collecting resources and defeating opponents. This year's competition runs on the latest v1.6 Neural MMO, which introduces new equipment, combat, trading, and a better scoring system. These elements combine to pose additional robustness and generalization challenges not present in previous competitions. This paper summarizes the design and results of the challenge, explores the potential of this environment as a benchmark for learning methods, and presents some practical reinforcement learning training approaches for complex tasks with sparse rewards. Additionally, we have open-sourced our baselines, including environment wrappers, benchmarks, and visualization tools for future research.

Efficient Heterogeneous Graph Learning via Random Projection

Oct 23, 2023

Abstract:Heterogeneous Graph Neural Networks (HGNNs) are powerful tools for deep learning on heterogeneous graphs. Typical HGNNs require repetitive message passing during training, limiting efficiency for large-scale real-world graphs. Recent pre-computation-based HGNNs use one-time message passing to transform a heterogeneous graph into regular-shaped tensors, enabling efficient mini-batch training. Existing pre-computation-based HGNNs can be mainly categorized into two styles, which differ in efficiency and in how much information loss they allow. We propose a hybrid pre-computation-based HGNN, named Random Projection Heterogeneous Graph Neural Network (RpHGNN), which combines the benefits of one style's efficiency with the low information loss of the other style. To achieve efficiency, the main framework of RpHGNN consists of propagate-then-update iterations, where we introduce a Random Projection Squashing step to ensure that complexity increases only linearly. To achieve low information loss, we introduce a Relation-wise Neighbor Collection component with an Even-odd Propagation Scheme, which aims to collect information from neighbors in a finer-grained way. Experimental results indicate that our approach achieves state-of-the-art results on seven small and large benchmark datasets while also being 230% faster compared to the most effective baseline. Surprisingly, our approach not only surpasses pre-processing-based baselines but also outperforms end-to-end methods.

One Model for All: Large Language Models are Domain-Agnostic Recommendation Systems

Oct 22, 2023

Abstract:The purpose of sequential recommendation is to utilize the interaction history of a user and predict the next item that the user is most likely to interact with. While data sparsity and cold start are two challenges that most recommender systems are still facing, many efforts are devoted to utilizing data from other domains, called cross-domain methods. However, general cross-domain methods explore the relationship between two domains by designing complex model architectures, making it difficult to scale to multiple domains and utilize more data. Moreover, existing recommendation systems use IDs to represent items, which carry less transferable signals in cross-domain scenarios, and user cross-domain behaviors are also sparse, making it challenging to learn item relationships from different domains. These problems hinder the application of multi-domain methods to sequential recommendation. Recently, large language models (LLMs) have exhibited outstanding performance in world knowledge learning from text corpora and general-purpose question answering. Inspired by these successes, we propose a simple but effective framework for domain-agnostic recommendation by exploiting pre-trained LLMs (namely LLM-Rec). We mix the user's behaviors across different domains, and then concatenate the title information of these items into a sentence and model the user's behaviors with a pre-trained language model. We expect that by mixing the user's behaviors across different domains, we can exploit the common knowledge encoded in the pre-trained language model to alleviate the data sparsity and cold start problems. Furthermore, we are curious about whether the latest technical advances in natural language processing (NLP) can transfer to recommendation scenarios.
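
The input construction described above, mixing a user's behaviors across domains and concatenating the item titles into one sentence, can be sketched as follows. This is a hypothetical illustration rather than the LLM-Rec implementation: the `(timestamp, domain, title)` tuple layout, the chronological ordering, the separator, and the function name are all assumptions, and the language model that would consume the resulting text is not shown.

```python
def build_user_sentence(interactions, separator=" ; "):
    """Join item titles from all domains into a single text sequence.

    interactions: iterable of (timestamp, domain, title) tuples (assumed layout).
    Items are ordered by timestamp, so domains are interleaved (assumed mixing rule).
    """
    ordered = sorted(interactions, key=lambda item: item[0])
    return separator.join(title for _, _, title in ordered)


# Example with made-up interaction records from two domains.
history = [
    (3, "movies", "Space Documentary"),
    (1, "books", "Intro to Astronomy"),
    (2, "books", "Telescope Handbook"),
]
sentence = build_user_sentence(history)
```

Representing items by their titles rather than by IDs is what lets a pre-trained language model relate items from domains that share no users or item identifiers.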

Efficient Model Adaptation for Continual Learning at the Edge

Aug 03, 2023

Abstract:Most machine learning (ML) systems assume stationary and matching data distributions during training and deployment. This is often a false assumption. When ML models are deployed on real devices, data distributions often shift over time due to changes in environmental factors, sensor characteristics, and task-of-interest. While it is possible to have a human-in-the-loop to monitor for distribution shifts and engineer new architectures in response to these shifts, such a setup is not cost-effective. Instead, non-stationary automated ML (AutoML) models are needed. This paper presents the Encoder-Adaptor-Reconfigurator (EAR) framework for efficient continual learning under domain shifts. The EAR framework uses a fixed deep neural network (DNN) feature encoder and trains shallow networks on top of the encoder to handle novel data. The EAR framework is capable of 1) detecting when new data is out-of-distribution (OOD) by combining DNNs with hyperdimensional computing (HDC), 2) identifying low-parameter neural adaptors to adapt the model to the OOD data using zero-shot neural architecture search (ZS-NAS), and 3) minimizing catastrophic forgetting on previous tasks by progressively growing the neural architecture as needed and dynamically routing data through the appropriate adaptors and reconfigurators for handling domain-incremental and class-incremental continual learning. We systematically evaluate our approach on several benchmark datasets for domain adaptation and demonstrate strong performance compared to state-of-the-art algorithms for OOD detection and few-/zero-shot NAS.

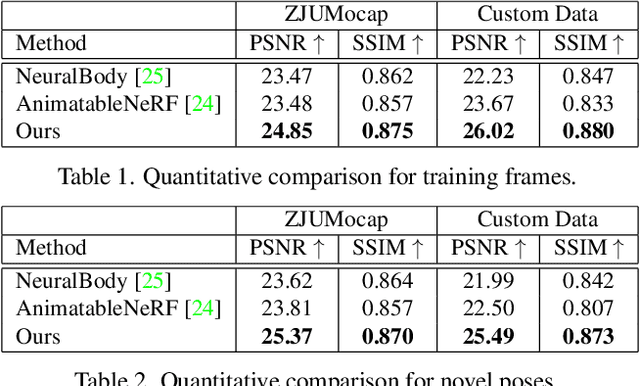

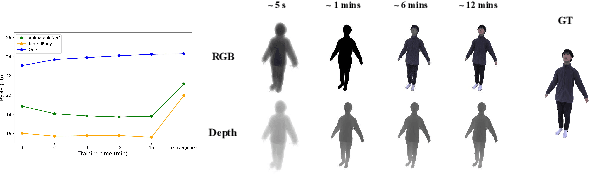

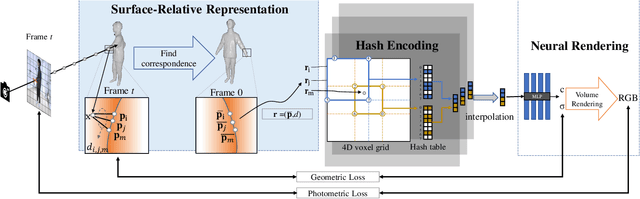

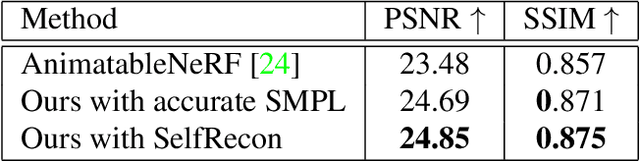

IntrinsicNGP: Intrinsic Coordinate based Hash Encoding for Human NeRF

Mar 09, 2023

Abstract:Recently, many works have been proposed to utilize the neural radiance field for novel view synthesis of human performers. However, most of these methods require hours of training, making them difficult to use in practice. To address this challenging problem, we propose IntrinsicNGP, which can train from scratch and achieve high-fidelity results in a few minutes with videos of a human performer. To achieve this target, we introduce a continuous and optimizable intrinsic coordinate rather than the original explicit Euclidean coordinate in the hash encoding module of instant-NGP. With this novel intrinsic coordinate, IntrinsicNGP can aggregate inter-frame information for dynamic objects with the help of proxy geometry shapes. Moreover, the results trained with the given rough geometry shapes can be further refined with an optimizable offset field based on the intrinsic coordinate. Extensive experimental results on several datasets demonstrate the effectiveness and efficiency of IntrinsicNGP. We also illustrate our approach's ability to edit the shape of reconstructed subjects.

Experimental observation on a low-rank tensor model for eigenvalue problems

Feb 01, 2023

Abstract:Here we utilize a low-rank tensor model (LTM) as a function approximator, combined with the gradient descent method, to solve eigenvalue problems including the Laplacian operator and the harmonic oscillator. Experimental results show the superiority of the polynomial-based low-rank tensor model (PLTM) compared to the tensor neural network (TNN). We also test such low-rank architectures for the classification problem on the MNIST dataset.

SelfNeRF: Fast Training NeRF for Human from Monocular Self-rotating Video

Oct 04, 2022

Abstract:In this paper, we propose SelfNeRF, an efficient neural radiance field based novel view synthesis method for human performance. Given monocular self-rotating videos of human performers, SelfNeRF can train from scratch and achieve high-fidelity results in about twenty minutes. Some recent works have utilized the neural radiance field for dynamic human reconstruction. However, most of these methods need multi-view inputs and require hours of training, making them still difficult to use in practice. To address this challenging problem, we introduce a surface-relative representation based on multi-resolution hash encoding that can greatly improve the training speed and aggregate inter-frame information. Extensive experimental results on several different datasets demonstrate the effectiveness and efficiency of SelfNeRF on challenging monocular videos.
