Jianxi Huang

A Comprehensive Review of Agricultural Parcel and Boundary Delineation from Remote Sensing Images: Recent Progress and Future Perspectives

Aug 20, 2025

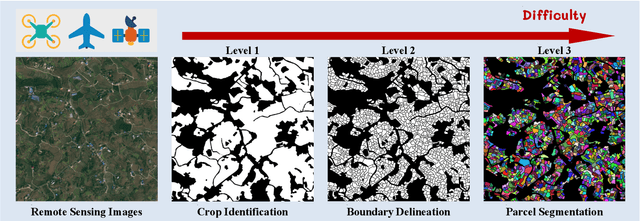

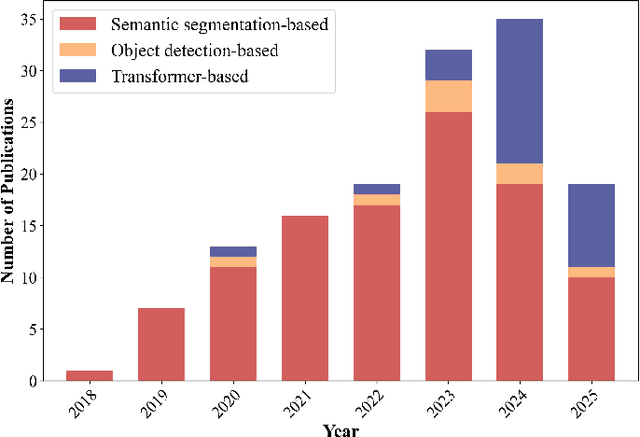

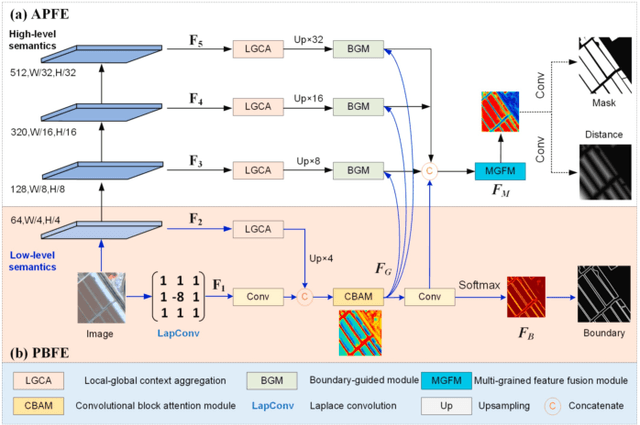

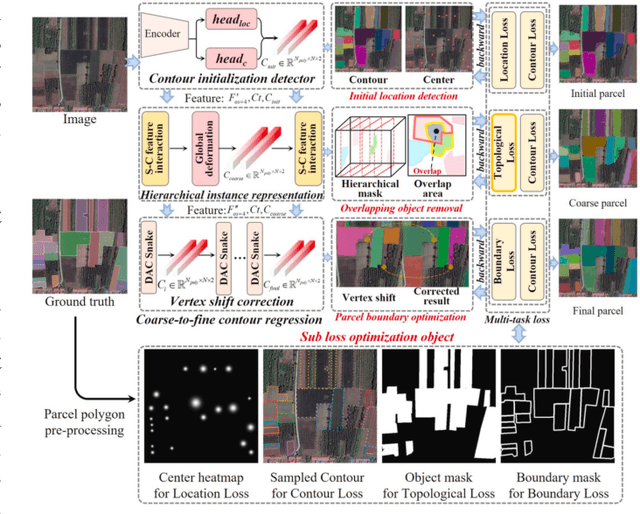

Abstract: Advances in remote sensing sensors have made high-spatial-resolution imagery widely available, offering great potential for cost-efficient, accurate, and automated agricultural inventory and analysis. Many studies aiming to build inventories at the level of individual agricultural parcels have produced numerous methods for Agricultural Parcel and Boundary Delineation (APBD). This review covers APBD methods for detecting and delineating agricultural parcels and systematically reviews past and present APBD-related research applied to remote sensing images. To provide a clear knowledge map of existing APBD efforts, we conduct a comprehensive review of recent APBD papers and build a meta-data analysis covering the algorithm, the study site, the crop type, the sensor type, the evaluation method, etc. We categorize the methods into three classes: (1) traditional image processing methods (pixel-based, edge-based, and region-based); (2) traditional machine learning methods (such as random forest and decision tree); and (3) deep learning-based methods. Because deep learning-oriented approaches account for the majority of the reviewed work, we further divide them into semantic segmentation-based, object detection-based, and Transformer-based methods. In addition, we discuss five APBD-related issues to deepen understanding of the APBD domain using remote sensing data, such as multi-sensor data in the APBD task, comparisons between single-task learning and multi-task learning, and comparisons among different algorithms and different APBD tasks. Finally, this review proposes some APBD-related applications and outlines a few promising prospects and potential hot topics for future APBD research. We hope this review helps researchers involved in the APBD domain keep track of its development and trends.

Can Large Multimodal Models Understand Agricultural Scenes? Benchmarking with AgroMind

May 18, 2025

Abstract: Large Multimodal Models (LMMs) have demonstrated capabilities across various domains, but comprehensive benchmarks for agricultural remote sensing (RS) remain scarce. Existing benchmarks designed for agricultural RS scenarios exhibit notable limitations, primarily insufficient scene diversity in the dataset and oversimplified task design. To bridge this gap, we introduce AgroMind, a comprehensive agricultural remote sensing benchmark covering four task dimensions: spatial perception, object understanding, scene understanding, and scene reasoning, with a total of 13 task types, ranging from crop identification and health monitoring to environmental analysis. We curate a high-quality evaluation set by integrating eight public datasets and one private farmland plot dataset, containing 25,026 QA pairs and 15,556 images. The pipeline begins with multi-source data preprocessing, including collection, format standardization, and annotation refinement. We then generate a diverse set of agriculturally relevant questions through the systematic definition of tasks. Finally, we employ LMMs for inference, generating responses and performing detailed examinations. We evaluated 18 open-source LMMs and 3 closed-source models on AgroMind. Experiments reveal significant performance gaps, particularly in spatial reasoning and fine-grained recognition. Notably, human performance lags behind several leading LMMs. By establishing a standardized evaluation framework for agricultural RS, AgroMind reveals the limitations of LMMs in domain knowledge and highlights critical challenges for future work. Data and code can be accessed at https://rssysu.github.io/AgroMind/.

Low Saturation Confidence Distribution-based Test-Time Adaptation for Cross-Domain Remote Sensing Image Classification

Aug 29, 2024

Abstract: Although Unsupervised Domain Adaptation (UDA) methods have improved remote sensing image classification, most of them are still limited by their need for access to source domain (SD) data. Designs such as Source-free Domain Adaptation (SFDA) address the lack of SD data, but they still rely on a large amount of target domain data and thus cannot adapt quickly, which seriously hinders their application in broader scenarios. Real-world applications of cross-domain remote sensing image classification require both speed and accuracy. Therefore, we propose a novel and comprehensive test-time adaptation (TTA) method, Low Saturation Confidence Distribution Test Time Adaptation (LSCD-TTA), which is the first attempt to address such scenarios through the idea of TTA. LSCD-TTA specifically considers the distribution characteristics of remote sensing images and includes three main parts that concentrate on different optimization directions. First, low saturation distribution (LSD) considers the dominance of low-confidence samples during the later TTA stage. Second, weak-category cross-entropy (WCCE) increases the weight of categories that are more difficult to classify with less prior knowledge. Finally, diverse categories confidence (DIV) comprehensively considers category diversity to alleviate the deviation of the sample distribution. By weighting these three modules, the model can adapt to the target domain widely, quickly, and accurately without much prior knowledge of the target distribution, repeated data access, or manual annotation. We evaluate LSCD-TTA on three remote sensing image datasets. The experimental results show that LSCD-TTA achieves a significant gain of 4.96%-10.51% with ResNet-50 and 5.33%-12.49% with ResNet-101 in average accuracy compared to other state-of-the-art DA and TTA methods.
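The abstract describes LSCD-TTA as a weighted combination of three modules but does not give their formulas. The sketch below only illustrates the general shape of such a weighted test-time objective. Its three terms are generic stand-ins common in the TTA literature (prediction entropy, class-weighted cross-entropy on pseudo-labels, and a diversity term on the batch-mean prediction), not the paper's LSD, WCCE, or DIV definitions. The function name `combined_tta_loss`, the `class_weights` argument, and the `w_*` coefficients are all hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p):
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(q * math.log(q) for q in p if q > 0)


def combined_tta_loss(batch_logits, class_weights,
                      w_ent=1.0, w_ce=1.0, w_div=1.0):
    """Weighted sum of three generic test-time loss terms.

    ASSUMPTION: these terms are illustrative stand-ins, not the
    LSD / WCCE / DIV modules of LSCD-TTA.
    """
    probs = [softmax(logits) for logits in batch_logits]
    n, k = len(probs), len(probs[0])

    # Term 1: mean prediction entropy (confidence-oriented term).
    ent = sum(entropy(p) for p in probs) / n

    # Term 2: cross-entropy against argmax pseudo-labels, scaled by a
    # per-class weight so harder classes can count more.
    ce = 0.0
    for p in probs:
        y = max(range(k), key=lambda c: p[c])
        ce += -class_weights[y] * math.log(p[y])
    ce /= n

    # Term 3: negative entropy of the batch-mean prediction; minimizing
    # it pushes predictions to spread across classes.
    mean_p = [sum(p[c] for p in probs) / n for c in range(k)]
    div = -entropy(mean_p)

    return w_ent * ent + w_ce * ce + w_div * div


# Two samples, two classes, uniform logits and unit class weights.
loss = combined_tta_loss([[0.0, 0.0], [0.0, 0.0]], [1.0, 1.0])
```

In a real adaptation loop the scalar would be computed with an autodiff framework and minimized over a subset of model parameters on each incoming test batch. The plain-Python version here only shows how the three terms are combined.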

Layered Image Vectorization via Semantic Simplification

Jun 08, 2024

Abstract: This work presents a novel progressive image vectorization technique aimed at generating layered vectors that represent the original image from coarse to fine detail levels. Our approach introduces semantic simplification, which combines Score Distillation Sampling and semantic segmentation to iteratively simplify the input image. Subsequently, our method optimizes the vector layers for each of the progressively simplified images. Our method provides robust optimization, which avoids local minima and enables adjustable detail levels in the final output. The layered, compact vector representation enhances usability for further editing and modification. Comparative analysis with conventional vectorization methods demonstrates our technique's superiority in producing vectors with high visual fidelity, and more importantly, maintaining vector compactness and manageability. The project homepage is https://szuviz.github.io/layered_vectorization/.
