Jianbo Lu

D4PM: A Dual-branch Driven Denoising Diffusion Probabilistic Model with Joint Posterior Diffusion Sampling for EEG Artifacts Removal

Sep 17, 2025

Abstract:Artifact removal is critical for accurate analysis and interpretation of Electroencephalogram (EEG) signals. Traditional methods perform poorly with strong artifact-EEG correlations or single-channel data. Recent advances in diffusion-based generative models have demonstrated strong potential for EEG denoising, notably improving fine-grained noise suppression and reducing over-smoothing. However, existing methods face two main limitations: the lack of temporal modeling limits interpretability, and the use of single-artifact training paradigms ignores inter-artifact differences. To address these issues, we propose D4PM, a dual-branch driven denoising diffusion probabilistic model that unifies multi-type artifact removal. We introduce a dual-branch conditional diffusion architecture to implicitly model the data distributions of clean EEG and artifacts. A joint posterior sampling strategy is further designed to collaboratively integrate complementary priors for high-fidelity EEG reconstruction. Extensive experiments on two public datasets show that D4PM delivers superior denoising. It achieves new state-of-the-art performance in EOG artifact removal, outperforming all publicly available baselines. The code is available at https://github.com/flysnow1024/D4PM.

Unity is Power: Semi-Asynchronous Collaborative Training of Large-Scale Models with Structured Pruning in Resource-Limited Clients

Oct 11, 2024

Abstract:In this work, we study how to unlock the potential of massive, heterogeneous, weak computing power to collaboratively train large-scale models on dispersed datasets. To improve both efficiency and accuracy in resource-adaptive collaborative learning, we take the first step to consider the \textit{unstructured pruning}, \textit{varying submodel architectures}, \textit{knowledge loss}, and \textit{straggler} challenges simultaneously. We propose a novel semi-asynchronous collaborative training framework, namely $Co\text{-}S^2P$, with data distribution-aware structured pruning and a cross-block knowledge transfer mechanism to address the above concerns. Furthermore, we provide a theoretical proof that $Co\text{-}S^2P$ achieves an asymptotically optimal convergence rate of $O(1/\sqrt{N^*EQ})$. Finally, we conduct extensive experiments on a real-world hardware testbed, in which 16 heterogeneous Jetson devices are united to train large-scale models with up to 0.11 billion parameters. The experimental results demonstrate that $Co\text{-}S^2P$ improves accuracy by up to 8.8\% and resource utilization by up to 1.2$\times$ compared to state-of-the-art methods, while reducing memory consumption by approximately 22\% and training time by about 24\% on all resource-limited devices.

Salient Bundle Adjustment for Visual SLAM

Dec 22, 2020

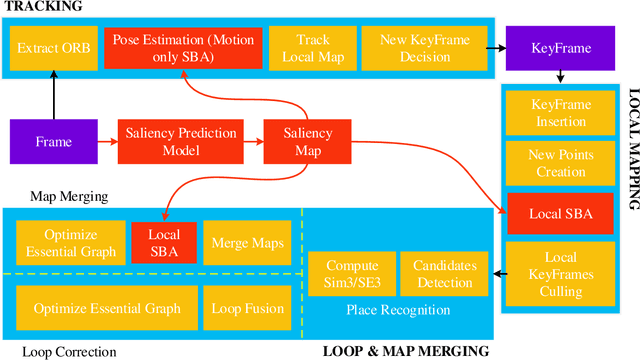

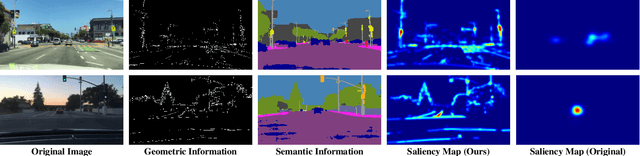

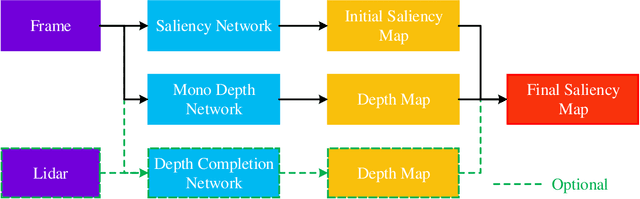

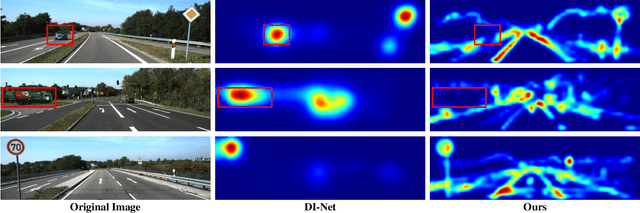

Abstract:Recently, the philosophy of visual saliency and attention has started to gain popularity in the robotics community. This paper therefore aims to mimic this mechanism in a SLAM framework by using a saliency prediction model. Compared with traditional SLAM, which treats all feature points as equally important in the optimization process, we argue that salient feature points should play a more important role. To this end, we propose a saliency model that predicts a saliency map capturing both scene semantic and geometric information. We then propose Salient Bundle Adjustment, which uses the value of the saliency map as the weight of each feature point in the traditional Bundle Adjustment approach. Exhaustive experiments against the state-of-the-art algorithm on the KITTI and EuRoC datasets show that our proposed algorithm outperforms existing algorithms in both indoor and outdoor environments. Finally, we will make our saliency dataset and relevant source code open-source to enable future research.
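The weighting idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a pinhole camera, 3D points already expressed in the camera frame, and one saliency weight per feature point read from the saliency map at that feature's pixel. The names `project` and `salient_reprojection_cost` and the example numbers are hypothetical.

```python
def project(point, fx, fy, cx, cy):
    """Pinhole projection of a 3D point given in the camera frame."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def salient_reprojection_cost(points_cam, observations, saliency, intrinsics):
    """Sum of squared reprojection errors, each scaled by a saliency weight.

    Assumption: saliency[i] is the saliency-map value for feature i.
    With all weights equal to 1 this reduces to the usual unweighted cost.
    """
    fx, fy, cx, cy = intrinsics
    total = 0.0
    for point, (u_obs, v_obs), w in zip(points_cam, observations, saliency):
        u, v = project(point, fx, fy, cx, cy)
        total += w * ((u - u_obs) ** 2 + (v - v_obs) ** 2)
    return total


# Hypothetical example: two features, the first far more salient than the second.
intrinsics = (100.0, 100.0, 50.0, 50.0)
points_cam = [(0.0, 0.0, 1.0), (1.0, 0.0, 2.0)]
observations = [(51.0, 50.0), (100.0, 52.0)]

weighted = salient_reprojection_cost(points_cam, observations, [0.9, 0.1], intrinsics)
uniform = salient_reprojection_cost(points_cam, observations, [1.0, 1.0], intrinsics)
```

In a full bundle adjustment this cost would be minimized over camera poses and point positions; the sketch only evaluates it, to show how a low-saliency feature contributes less to the objective than it would under uniform weights.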
