Is BERT a Cross-Disciplinary Knowledge Learner? A Surprising Finding of Pre-trained Models' Transferability

Mar 12, 2021 · Wei-Tsung Kao, Hung-Yi Lee

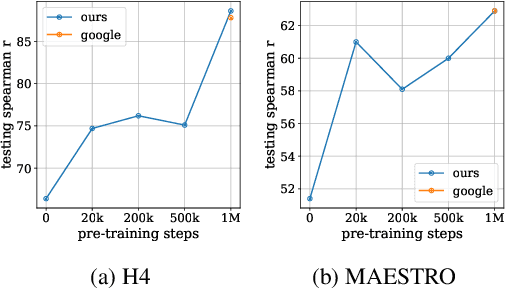

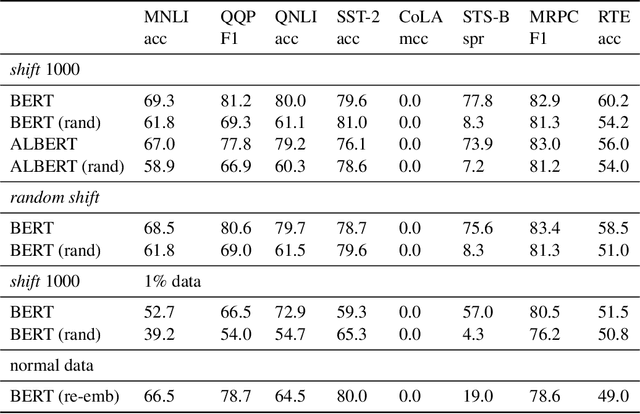

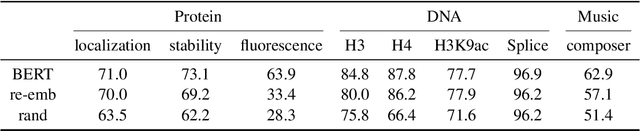

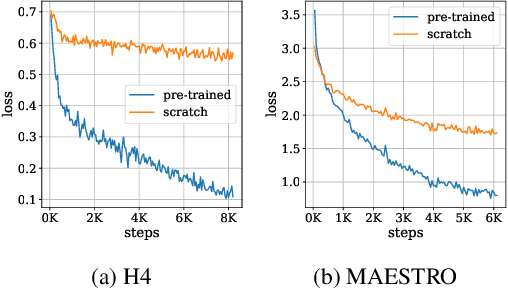

In this paper, we investigate whether the power of models pre-trained on text data, such as BERT, can be transferred to general token sequence classification applications. To verify pre-trained models' transferability, we test the pre-trained models on (1) text classification tasks in which the meanings of tokens are mismatched, and (2) real-world non-text token sequence classification data, including amino acid sequences, DNA sequences, and music. We find that even on non-text data, the models pre-trained on text converge faster than randomly initialized models, and the testing performance of the pre-trained models is only slightly worse than that of models designed for the specific tasks.

TaylorGAN: Neighbor-Augmented Policy Update for Sample-Efficient Natural Language Generation

Nov 27, 2020 · Chun-Hsing Lin, Siang-Ruei Wu, Hung-Yi Lee, Yun-Nung Chen

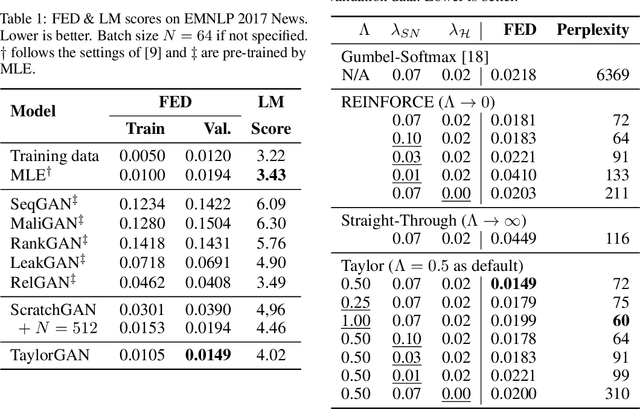

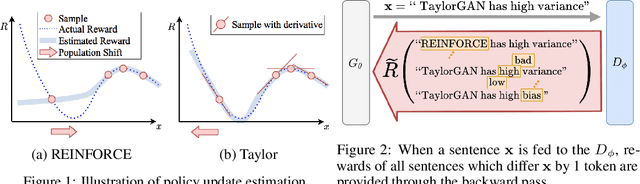

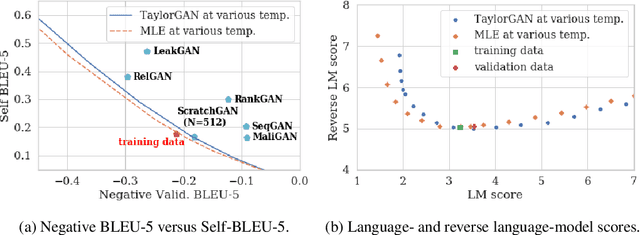

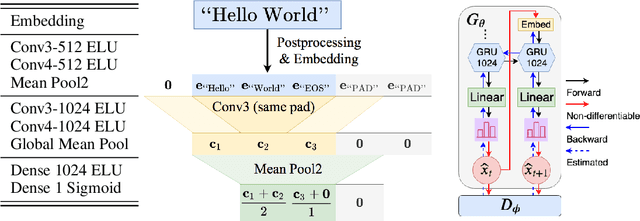

Score function-based natural language generation (NLG) approaches such as REINFORCE generally suffer from low sample efficiency and training instability. This is mainly due to the non-differentiable nature of discrete space sampling: these methods have to treat the discriminator as a black box and ignore its gradient information. To improve the sample efficiency and reduce the variance of REINFORCE, we propose a novel approach, TaylorGAN, which augments the gradient estimation with off-policy updates and a first-order Taylor expansion. This approach enables us to train NLG models from scratch with a smaller batch size and without maximum likelihood pre-training, and it outperforms existing GAN-based methods on multiple metrics of quality and diversity. The source code and data are available at https://github.com/MiuLab/TaylorGAN
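To make the "first-order Taylor expansion" mentioned above concrete, here is a minimal sketch of the generic idea only: the reward at a neighboring point is approximated from the reward and its gradient at the sampled point, r(e') ≈ r(e) + ∇r(e)·(e' − e). The toy `reward` function, its gradient, and the two vectors are hypothetical stand-ins for a differentiable discriminator and token embeddings. The abstract does not spell out the TaylorGAN estimator, so this is not the authors' implementation.

```python
from typing import List, Sequence


def reward(e: Sequence[float]) -> float:
    """Toy differentiable reward (hypothetical stand-in for a discriminator score)."""
    return -sum((x - 1.0) ** 2 for x in e)


def reward_grad(e: Sequence[float]) -> List[float]:
    """Analytic gradient of the toy reward with respect to e."""
    return [-2.0 * (x - 1.0) for x in e]


def taylor_estimate(e: Sequence[float], e_neighbor: Sequence[float]) -> float:
    """First-order estimate of reward(e_neighbor) using only information at e."""
    grad = reward_grad(e)
    correction = sum(g * (n - x) for g, n, x in zip(grad, e_neighbor, e))
    return reward(e) + correction


# A sampled point and a nearby point that was never evaluated directly.
sampled = [0.5, 0.0]
neighbor = [0.6, 0.1]

estimate = taylor_estimate(sampled, neighbor)
exact = reward(neighbor)
print(f"estimate={estimate:.4f} exact={exact:.4f}")
```

The estimate uses one reward evaluation and one gradient at the sampled point, which is the kind of gradient information a black-box treatment of the discriminator discards. The approximation error grows with the distance between the two points.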

Semi-Supervised Spoken Language Understanding via Self-Supervised Speech and Language Model Pretraining

Oct 26, 2020 · Cheng-I Lai, Yung-Sung Chuang, Hung-Yi Lee, Shang-Wen Li, James Glass

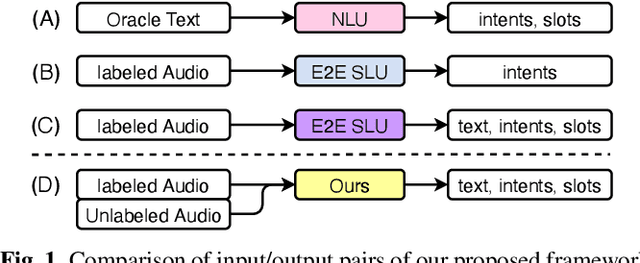

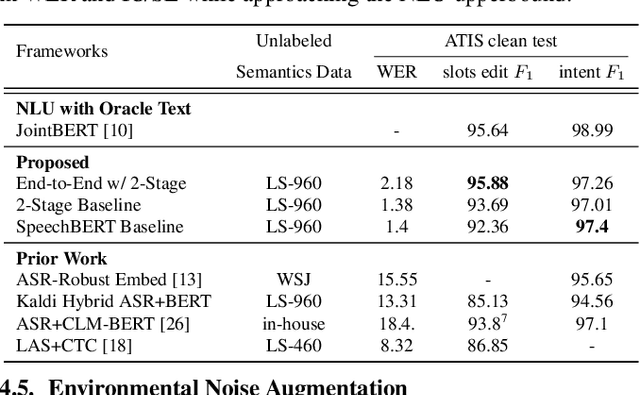

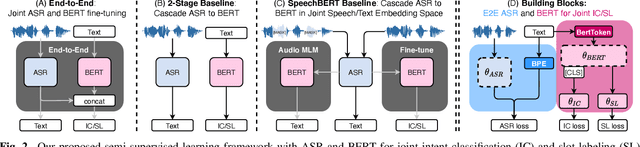

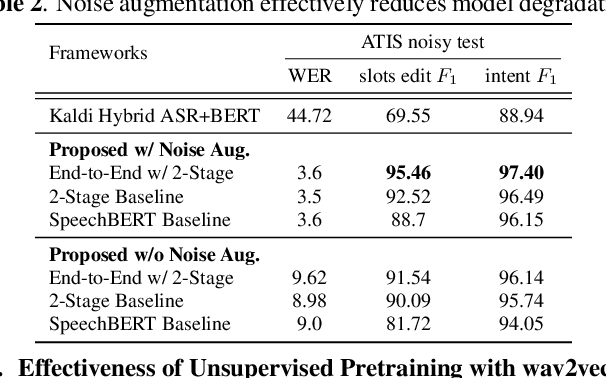

Much recent work on Spoken Language Understanding (SLU) is limited in at least one of three ways: models were trained on oracle text input and neglected ASR errors, models were trained to predict only intents without the slot values, or models were trained on a large amount of in-house data. In this paper, we propose a clean and general framework to learn semantics directly from speech with semi-supervision from transcribed or untranscribed speech to address these issues. Our framework is built upon pretrained end-to-end (E2E) ASR and self-supervised language models, such as BERT, and fine-tuned on a limited amount of target SLU data. We study two semi-supervised settings for the ASR component: supervised pretraining on transcribed speech, and unsupervised pretraining by replacing the ASR encoder with self-supervised speech representations, such as wav2vec. In parallel, we identify two essential criteria for evaluating SLU models: environmental noise-robustness and E2E semantics evaluation. Experiments on ATIS show that our SLU framework with speech as input can perform on par with those using oracle text as input in semantics understanding, even though environmental noise is present and a limited amount of labeled semantics data is available for training.

VQVC+: One-Shot Voice Conversion by Vector Quantization and U-Net architecture

Jun 07, 2020 · Da-Yi Wu, Yen-Hao Chen, Hung-Yi Lee

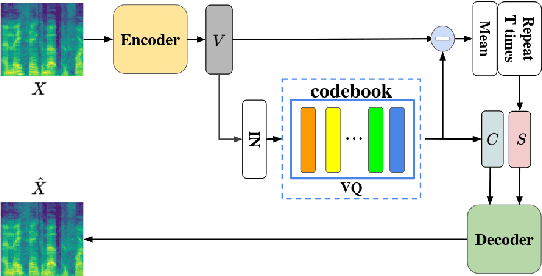

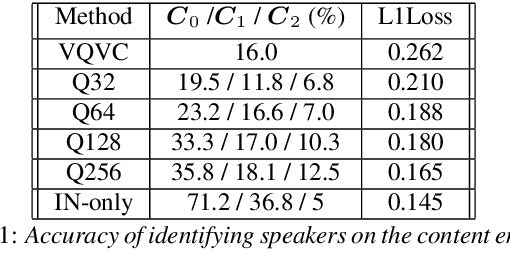

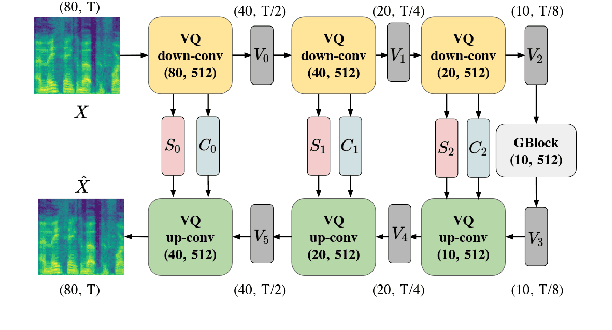

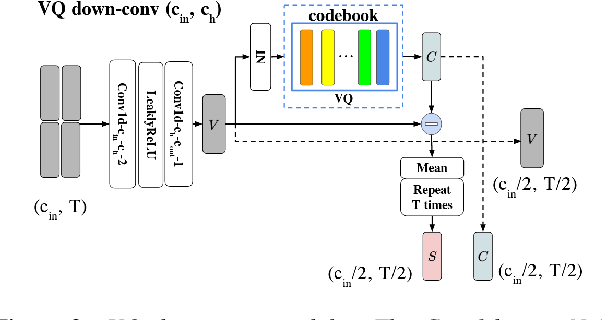

Voice conversion (VC) is a task that transforms the source speaker's timbre, accent, and tones in audio into those of another speaker while preserving the linguistic content. It remains a challenging task, especially in a one-shot setting. Auto-encoder-based VC methods disentangle the speaker and the content in input speech without being given the speaker's identity, so these methods can further generalize to unseen speakers. The disentanglement capability is achieved by vector quantization (VQ), adversarial training, or instance normalization (IN). However, imperfect disentanglement may harm the quality of the output speech. In this work, to further improve audio quality, we use the U-Net architecture within an auto-encoder-based VC system. We find that to leverage the U-Net architecture, a strong information bottleneck is necessary. The VQ-based method, which quantizes the latent vectors, can serve this purpose. The objective and subjective evaluations show that the proposed method performs well in both audio naturalness and speaker similarity.
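As a rough illustration of how quantizing latent vectors creates an information bottleneck, the sketch below shows generic vector quantization: each latent vector is replaced by its nearest codebook entry, so only a codebook index survives per vector. The codebook, the latent values, and the function names are hypothetical, and this is not the VQVC+ implementation.

```python
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    latents: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> Tuple[List[int], List[List[float]]]:
    """Map each latent vector to the index and value of its nearest code."""
    indices: List[int] = []
    quantized: List[List[float]] = []
    for z in latents:
        nearest = min(
            range(len(codebook)),
            key=lambda k: squared_distance(z, codebook[k]),
        )
        indices.append(nearest)
        quantized.append(list(codebook[nearest]))
    return indices, quantized


# Hypothetical 2-D codebook with three entries and three latent frames.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
latents = [[0.9, 1.2], [0.1, -0.2], [-0.8, 0.7]]

indices, quantized = quantize(latents, codebook)
print(indices)    # one code index per latent frame
print(quantized)  # the codebook vectors that replace the latents
```

With a codebook of K entries, each frame carries at most log2(K) bits after quantization, which is why the codebook size controls how strong the bottleneck is.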

Learning Interpretable and Discrete Representations with Adversarial Training for Unsupervised Text Classification

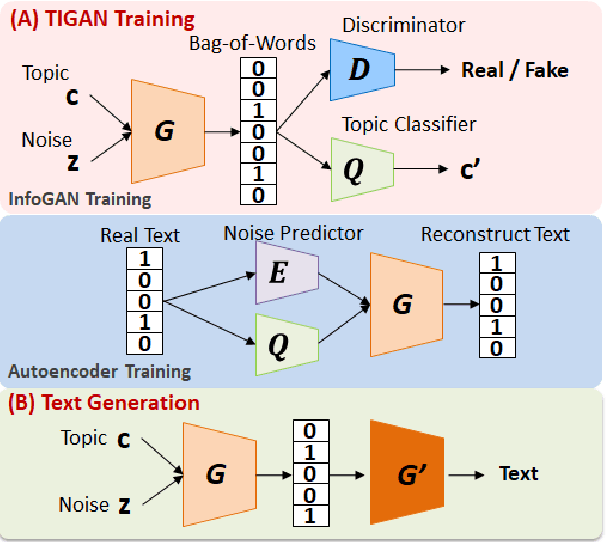

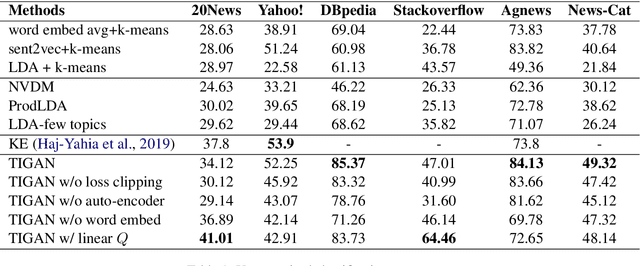

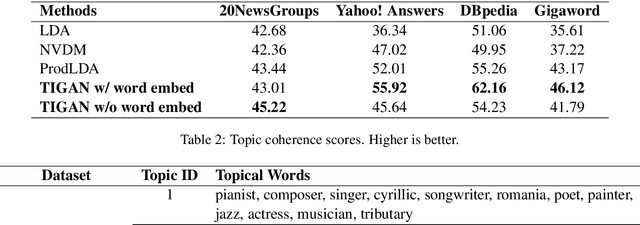

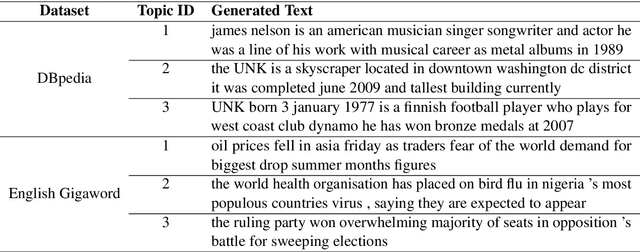

Apr 28, 2020 · Yau-Shian Wang, Hung-Yi Lee, Yun-Nung Chen

Learning continuous representations from unlabeled textual data has been increasingly studied for benefiting semi-supervised learning. Although discrete representations are relatively easier to interpret, learning them for unlabeled textual data has not been widely explored because of the difficulty of training. This work proposes TIGAN, which learns to encode texts into two disentangled representations: a discrete code and a continuous noise, where the discrete code represents interpretable topics and the noise controls the variance within the topics. The discrete code learned by TIGAN can be used for unsupervised text classification. Compared to other unsupervised baselines, the proposed TIGAN achieves superior performance on six different corpora. Also, the performance is on par with a recently proposed weakly-supervised text classification method. The extracted topical words for representing latent topics show that TIGAN learns coherent and highly interpretable topics.

A Study of Cross-Lingual Ability and Language-specific Information in Multilingual BERT

Apr 20, 2020 · Chi-Liang Liu, Tsung-Yuan Hsu, Yung-Sung Chuang, Hung-Yi Lee

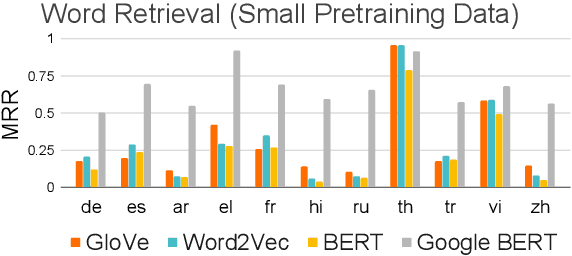

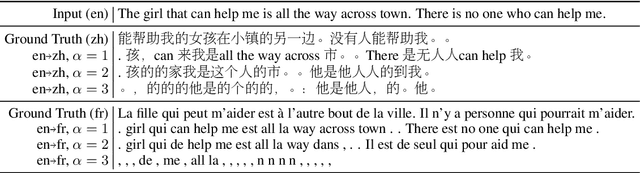

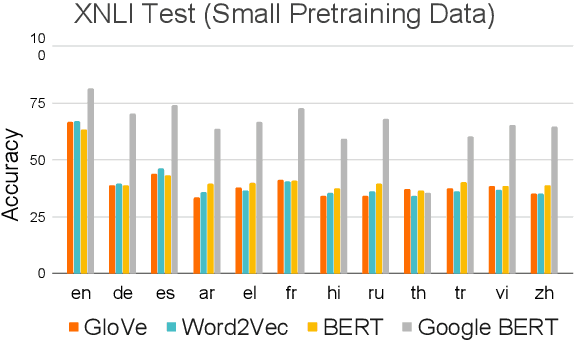

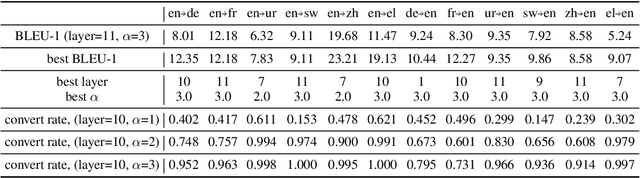

Multilingual BERT has recently been shown to work remarkably well on cross-lingual transfer tasks, outperforming static non-contextualized word embeddings. In this work, we provide an in-depth experimental study to supplement the existing literature on cross-lingual ability. We compare the cross-lingual ability of non-contextualized and contextualized representation models trained on the same data. We find that data size and context window size are crucial factors for transferability. We also observe language-specific information in multilingual BERT. By manipulating the latent representations, we can control the output languages of multilingual BERT and achieve unsupervised token translation. We further show that, based on this observation, there is a computationally cheap but effective approach to improve the cross-lingual ability of multilingual BERT.

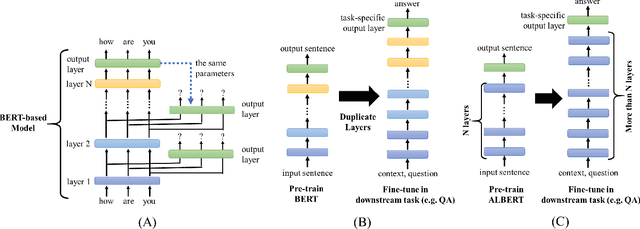

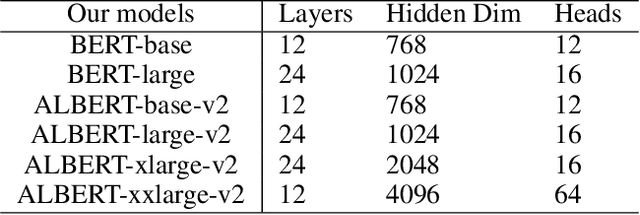

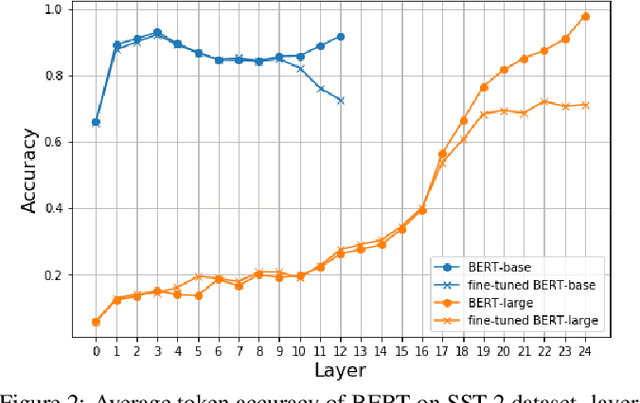

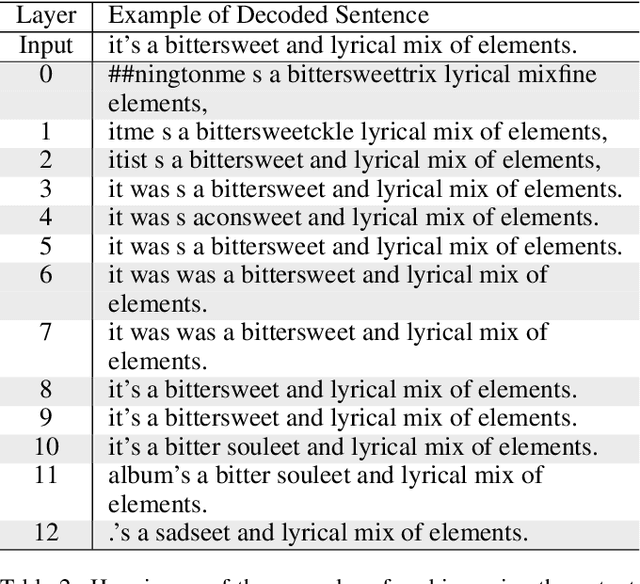

Further Boosting BERT-based Models by Duplicating Existing Layers: Some Intriguing Phenomena inside BERT

Jan 25, 2020 · Wei-Tsung Kao, Tsung-Han Wu, Po-Han Chi, Chun-Cheng Hsieh, Hung-Yi Lee

Although Bidirectional Encoder Representations from Transformers (BERT) has achieved tremendous success in many natural language processing (NLP) tasks, it remains a black box, so much previous work has tried to lift the veil of BERT and understand the functionality of each layer. In this paper, we found that removing or duplicating most layers in BERT would not change their outputs. This fact remains true across a wide variety of BERT-based models. Based on this observation, we propose a quite simple method to boost the performance of BERT. By duplicating some layers in BERT-based models to make them deeper (no extra training is required in this step), the models obtain better performance on downstream tasks after fine-tuning.
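The layer-duplication step described above can be sketched on a generic layer stack. The example below is a minimal illustration under stated assumptions: `ToyLayer` is a hypothetical stand-in for a Transformer layer, and placing each copy directly after its original is an assumed ordering, since the abstract does not say where the duplicated layers go or which layers are chosen.

```python
import copy
from typing import Iterable, List


class ToyLayer:
    """Hypothetical stand-in for one encoder layer with its own weights."""

    def __init__(self, name: str, scale: float) -> None:
        self.name = name
        self.scale = scale

    def __call__(self, x: float) -> float:
        return x * self.scale


def duplicate_layers(layers: List[ToyLayer], indices: Iterable[int]) -> List[ToyLayer]:
    """Return a deeper stack where each selected layer is followed by a copy.

    The copies start with identical weights but are independent objects,
    so later fine-tuning could update them separately.
    """
    chosen = set(indices)
    deeper: List[ToyLayer] = []
    for i, layer in enumerate(layers):
        deeper.append(layer)
        if i in chosen:
            deeper.append(copy.deepcopy(layer))
    return deeper


# A four-layer toy stack; duplicate the two middle layers (no training involved).
stack = [ToyLayer(f"layer{i}", 1.0 + 0.1 * i) for i in range(4)]
deeper_stack = duplicate_layers(stack, indices=[1, 2])
print([layer.name for layer in deeper_stack])
```

Using `copy.deepcopy` rather than appending the same object twice matters here: shared objects would tie the weights of the original and the copy together during fine-tuning.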

J-Net: Randomly weighted U-Net for audio source separation

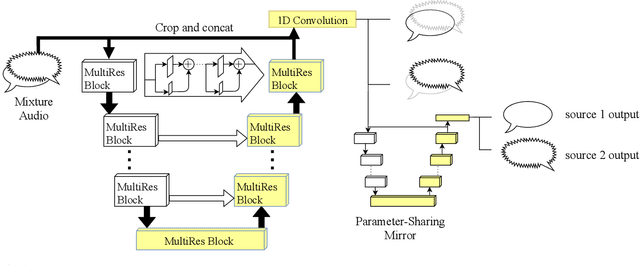

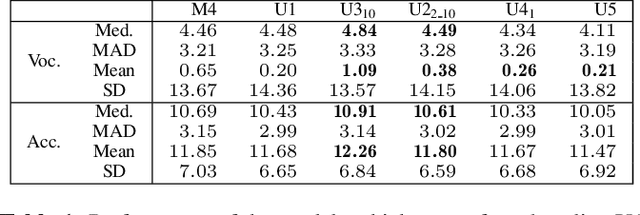

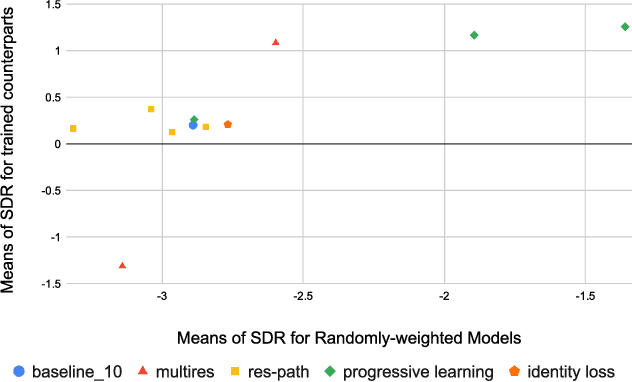

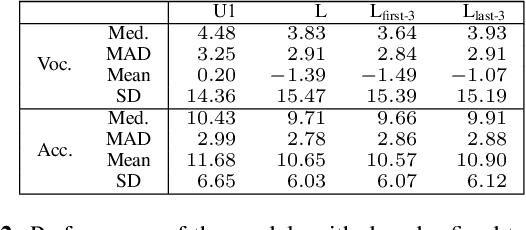

Nov 29, 2019 · Bo-Wen Chen, Yen-Min Hsu, Hung-Yi Lee

Several results in the computer vision literature have shown the potential of randomly weighted neural networks. While they perform fairly well as feature extractors for discriminative tasks, a positive correlation exists between their performance and that of their fully trained counterparts. Given these discoveries, we pose two questions: what is the value of randomly weighted networks in difficult generative audio tasks such as audio source separation, and does such a positive correlation still exist between large random networks and their trained counterparts? In this paper, we demonstrate that the positive correlation still exists. Based on this discovery, we can try out different architecture designs or tricks without training the whole model. Meanwhile, we find a surprising result: compared with leaving the encoder (down-sample path) of Wave-U-Net untrained, fixing the decoder (up-sample path) to random weights results in better performance, almost comparable to the fully trained model.

Training a code-switching language model with monolingual data

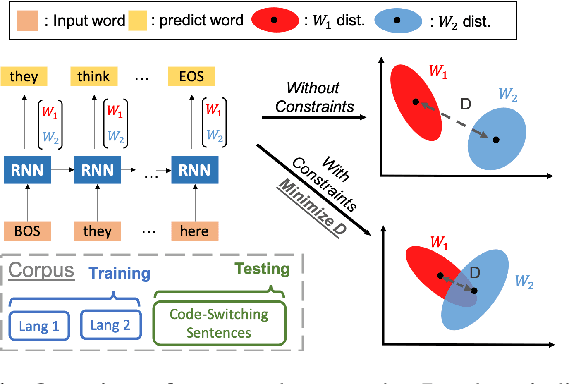

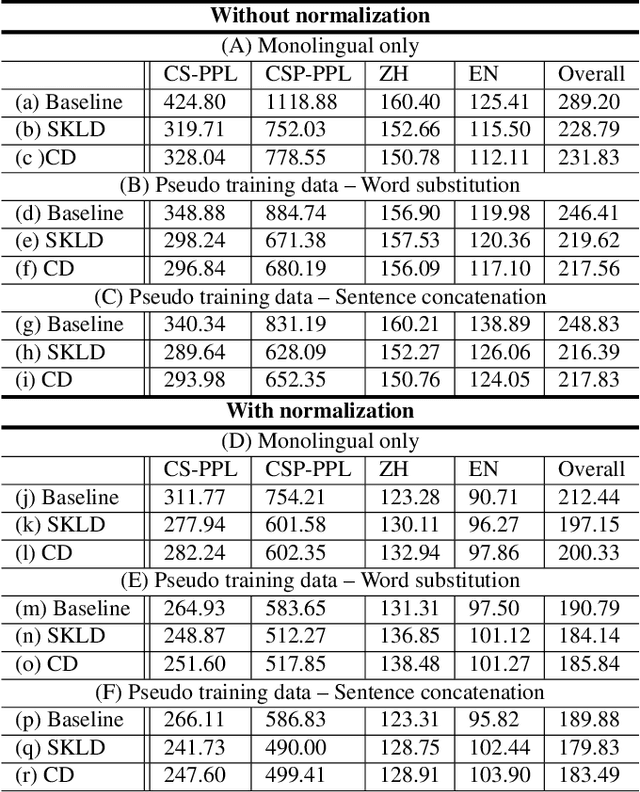

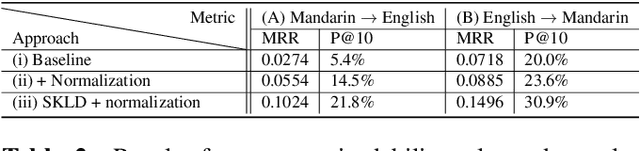

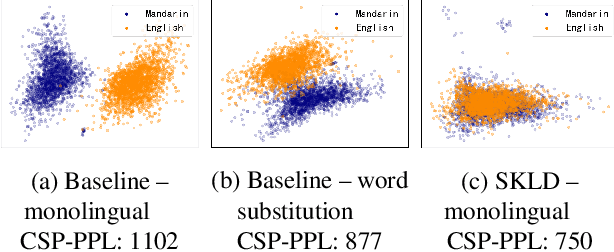

Nov 14, 2019 · Shun-Po Chuang, Tzu-Wei Sung, Hung-Yi Lee

A lack of code-switching data complicates the training of code-switching (CS) language models. We propose an approach to train such CS language models on monolingual data only. By constraining and normalizing the output projection matrix in RNN-based language models, we bring embeddings of different languages closer to each other. Numerical and visualization results show that the proposed approaches remarkably improve the performance of CS language models trained on monolingual data. The proposed approaches are comparable to or even better than training CS language models with artificially generated CS data. We additionally use unsupervised bilingual word translation to analyze whether semantically equivalent words in different languages are mapped together.
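One simple way to picture "normalizing the output projection matrix" is to rescale every row to unit length, so that word vectors from different languages lie on the same sphere and differ only in direction. The sketch below assumes that each row is one word's output vector and that L2 normalization is used. Both are illustrative assumptions, since the abstract does not specify the exact constraint or normalization, and the matrix values are made up.

```python
import math
from typing import List, Sequence


def normalize_rows(matrix: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scale each row to unit L2 norm; all-zero rows are returned unchanged."""
    normalized: List[List[float]] = []
    for row in matrix:
        norm = math.sqrt(sum(v * v for v in row))
        if norm == 0.0:
            normalized.append(list(row))
        else:
            normalized.append([v / norm for v in row])
    return normalized


# Hypothetical output projection: rows are word vectors with very different norms.
projection = [
    [3.0, 4.0],   # e.g. a word from language A
    [0.5, 0.0],   # e.g. a word from language B
    [0.0, 0.0],   # degenerate row, kept as-is
]

unit_projection = normalize_rows(projection)
for row in unit_projection:
    print(row, math.sqrt(sum(v * v for v in row)))
```

After this step, the logit for a word depends on the angle between the hidden state and that word's vector rather than on the vector's magnitude, which removes one way the two vocabularies could drift apart.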

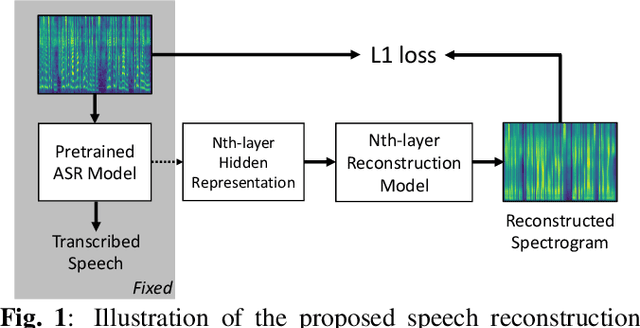

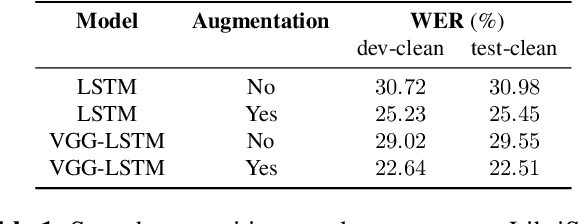

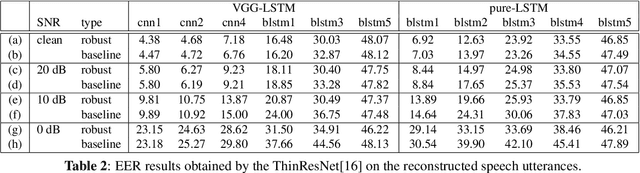

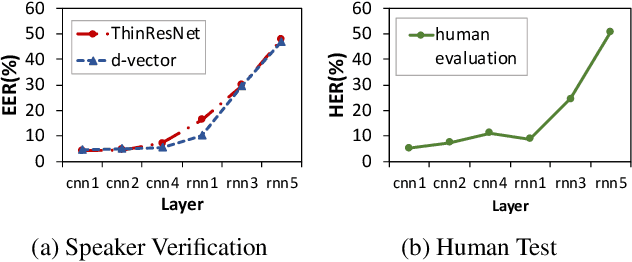

What does a network layer hear? Analyzing hidden representations of end-to-end ASR through speech synthesis

Nov 04, 2019 · Chung-Yi Li, Pei-Chieh Yuan, Hung-Yi Lee

End-to-end speech recognition systems have achieved competitive results compared to traditional systems. However, the complex transformations between layers, applied to highly variable acoustic signals, are hard to analyze. In this paper, we present our ASR probing model, which synthesizes speech from hidden representations of end-to-end ASR to examine the information maintained after each layer's computation. Listening to the synthesized speech, we observe gradual removal of speaker variability and noise as the layers go deeper, which aligns with previous studies on how deep networks function in speech recognition. This paper is the first study analyzing the end-to-end speech recognition model by demonstrating what each layer hears. Speaker verification and speech enhancement measurements on synthesized speech are also conducted to further confirm our observation.
