Huifang Li

Order-Level Attention Similarity Across Language Models: A Latent Commonality

Nov 07, 2025

Abstract: In this paper, we explore an important yet previously neglected question: do context aggregation patterns across Language Models (LMs) share commonalities? While some works have investigated context aggregation or attention weights in LMs, they typically focus on individual models or attention heads and lack a systematic analysis across multiple LMs. In contrast, we focus on the commonalities among LMs, which can deepen our understanding of LMs and even facilitate cross-model knowledge transfer. In this work, we introduce Order-Level Attention (OLA), derived from the order-wise decomposition of Attention Rollout, and reveal that OLA at the same order exhibits significant similarities across LMs. Furthermore, we discover an implicit mapping between OLA and syntactic knowledge. Based on these two findings, we propose the Transferable OLA Adapter (TOA), a training-free cross-LM adapter transfer method. Specifically, we treat OLA as a unified syntactic feature representation and train an adapter that takes OLA as input. Due to the similarities in OLA across LMs, the adapter generalizes to unseen LMs without requiring any parameter updates. Extensive experiments demonstrate that TOA's cross-LM generalization effectively enhances the performance of unseen LMs. Code is available at https://github.com/jinglin-liang/OLAS.
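The abstract does not spell out the order-wise decomposition, so the following is only a sketch of one plausible reading. It assumes the standard Attention Rollout form, in which each layer's head-averaged attention matrix A_l is mixed with the identity as (A_l + I)/2 and the layers are multiplied together. Expanding that product and grouping the terms by how many attention matrices they contain yields one term per order k. The function names (`order_terms`, `order_level_attention`), the normalization by the binomial count, and the toy matrices are assumptions made for illustration, not the authors' implementation; the linked repository is the authoritative source.

```python
from math import comb


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def zeros(n):
    return [[0.0] * n for _ in range(n)]


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(a, s):
    return [[x * s for x in row] for row in a]


def attention_rollout(attns):
    """Rollout as the product of (A_l + I) / 2, with layer 1 applied first."""
    n = len(attns[0])
    out = identity(n)
    for a in attns:
        out = matmul(scale(add(a, identity(n)), 0.5), out)
    return out


def order_terms(attns):
    """terms[k] = sum of all ordered products that use exactly k attention matrices.

    This is the coefficient of t^k in prod_l (I + t * A_l), built layer by layer.
    """
    n, depth = len(attns[0]), len(attns)
    terms = [identity(n)] + [zeros(n) for _ in range(depth)]
    for a in attns:
        # Update the highest order first so terms[k - 1] still holds the
        # value from before this layer was applied.
        for k in range(depth, 0, -1):
            terms[k] = add(terms[k], matmul(a, terms[k - 1]))
    return terms


def order_level_attention(attns):
    """Assumed normalization: divide order k by C(depth, k) so rows sum to 1."""
    depth = len(attns)
    return [scale(t, 1.0 / comb(depth, k))
            for k, t in enumerate(order_terms(attns))]


# Toy example: two layers of row-stochastic attention over three tokens.
toy_attns = [
    [[0.5, 0.5, 0.0], [0.2, 0.3, 0.5], [0.1, 0.1, 0.8]],
    [[1.0, 0.0, 0.0], [0.4, 0.6, 0.0], [0.3, 0.3, 0.4]],
]
for order, matrix in enumerate(order_level_attention(toy_attns)):
    print(order, matrix)
```

Under these assumptions, summing the unnormalized order terms and dividing by 2^L recovers the rollout matrix, so the per-order matrices are a regrouping of the same information rather than a new quantity.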

High-Resolution Global Land Surface Temperature Retrieval via a Coupled Mechanism-Machine Learning Framework

Sep 05, 2025

Abstract: Land surface temperature (LST) is vital for land-atmosphere interactions and climate processes. Accurate LST retrieval remains challenging under heterogeneous land cover and extreme atmospheric conditions. Traditional split window (SW) algorithms show biases in humid environments, while pure machine learning (ML) methods lack interpretability and generalize poorly with limited data. We propose a coupled mechanism model-ML (MM-ML) framework that integrates physical constraints with data-driven learning for robust LST retrieval. Our approach fuses radiative transfer modeling with data-driven components, uses MODTRAN simulations with global atmospheric profiles, and employs physics-constrained optimization. Validation against 4,450 observations from 29 global sites shows that MM-ML achieves MAE = 1.84 K, RMSE = 2.55 K, and R-squared = 0.966, outperforming conventional methods. Under extreme conditions, MM-ML reduces errors by over 50%. Sensitivity analysis indicates that LST estimates are most sensitive to sensor radiance, then to water vapor, and least to emissivity, with MM-ML showing superior stability. These results demonstrate the effectiveness of our coupled modeling strategy for retrieving geophysical parameters. The MM-ML framework combines physical interpretability with nonlinear modeling capacity, enabling reliable LST retrieval in complex environments and supporting climate monitoring and ecosystem studies.

A Mechanism-Learning Deeply Coupled Model for Remote Sensing Retrieval of Global Land Surface Temperature

Apr 10, 2025

Abstract: Land surface temperature (LST) retrieval from remote sensing data is pivotal for analyzing climate processes and surface energy budgets. However, LST retrieval is an ill-posed inverse problem, which becomes particularly severe when only a single band is available. In this paper, we propose a deeply coupled framework integrating mechanistic modeling and machine learning to enhance the accuracy and generalizability of single-channel LST retrieval. Training samples are generated using a physically-based radiative transfer model and a global collection of 5810 atmospheric profiles. A physics-informed machine learning framework is proposed to systematically incorporate the first principles from classical physical inversion models into the learning workflow, with optimization constrained by radiative transfer equations. Global validation demonstrated a 30% reduction in root-mean-square error versus standalone methods. Under extreme humidity, the mean absolute error decreased from 4.87 K to 2.29 K (53% improvement). Continental-scale tests across five continents confirmed the superior generalizability of this model.
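As a quick arithmetic check of the extreme-humidity figure quoted in this abstract, the relative reduction in mean absolute error can be recomputed from the two values it reports. The helper name `relative_reduction` is hypothetical and used only for this illustration.

```python
def relative_reduction(before, after):
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before


mae_before_k = 4.87  # MAE under extreme humidity, standalone methods (K)
mae_after_k = 2.29   # MAE under extreme humidity, coupled model (K)

reduction = relative_reduction(mae_before_k, mae_after_k)
print(f"{reduction:.1%}")  # prints 53.0%
```

The result agrees with the 53% improvement stated in the abstract.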

PGCS: Physical Law embedded Generative Cloud Synthesis in Remote Sensing Images

Oct 22, 2024

Abstract: Data quantity and quality are both critical for information extraction and analysis in remote sensing. However, current remote sensing datasets often fail to meet these two requirements, and cloud cover is a primary factor degrading both. This limitation affects the precision of results in remote sensing applications, particularly those derived from data-driven techniques. In this paper, a physical law embedded generative cloud synthesis method (PGCS) is proposed to generate diverse, realistic cloud images that enhance real data and promote the development of algorithms for subsequent tasks, such as cloud correction, cloud detection, and data augmentation for classification, recognition, and segmentation. The PGCS method involves two key phases: spatial synthesis and spectral synthesis. In the spatial synthesis phase, a style-based generative adversarial network is utilized to simulate the spatial characteristics, generating an infinite number of single-channel clouds. In the spectral synthesis phase, the atmospheric scattering law is embedded through a local statistics and global fitting method, converting the single-channel clouds into multi-spectral clouds. The experimental results demonstrate that PGCS achieves high accuracy in both phases and performs better than three other existing cloud synthesis methods. Two cloud correction methods are developed from PGCS and exhibit superior performance compared to state-of-the-art methods in the cloud correction task. Furthermore, the application of PGCS to data from various sensors was investigated, and the method was successfully extended to them. Code will be provided at https://github.com/Liying-Xu/PGCS.

A physics-constrained machine learning method for mapping gapless land surface temperature

Jul 03, 2023

Abstract: Land surface temperature (LST) estimation that is more accurate and both spatio-temporally and physically consistent has been a main interest in Earth system research. Physics-driven mechanism models and data-driven machine learning (ML) models are the two major paradigms for gapless LST estimation, each with its own advantages and disadvantages. In this paper, a physics-constrained ML model, which combines the strengths of the mechanism model and the ML model, is proposed to generate gapless LST with physical meaning and high accuracy. The hybrid model employs ML as the primary architecture, into which physical constraints are incorporated through the input variables to enhance the interpretability and extrapolation ability of the model. Specifically, the light gradient-boosting machine (LGBM) model, which uses only remote sensing data as input, serves as the pure ML model. Physical constraints (PCs) are coupled by further incorporating key Community Land Model (CLM) forcing data (cause) and CLM simulation data (effect) as inputs into the LGBM model. This integration forms the PC-LGBM model, which incorporates surface energy balance (SEB) constraints underlying the data in CLM-LST modeling within a biophysical framework. Compared with a pure physical method and pure ML methods, the PC-LGBM model improves the prediction accuracy and physical interpretability of LST. It also demonstrates good extrapolation ability in its responses to extreme weather cases, suggesting that the PC-LGBM model not only learns empirically from data but is also rationally grounded in theory. The proposed method represents an innovative way to map accurate and physically interpretable gapless LST, and could provide insights to accelerate knowledge discovery in land surface processes and data mining in geographical parameter estimation.

Collaborative Perception in Autonomous Driving: Methods, Datasets and Challenges

Jan 16, 2023

Abstract: Collaborative perception is essential to address occlusion and sensor failure issues in autonomous driving. In recent years, deep learning for collaborative perception has thrived, with numerous methods proposed. Although some works have reviewed and analyzed the basic architecture and key components in this field, there is still a lack of systematic reviews of collaboration modules in perception networks and of large-scale collaborative perception datasets. The primary goal of this work is to address these gaps and provide a comprehensive review of recent achievements in this field. First, we introduce fundamental technologies and collaboration schemes. Following that, we provide an overview of practical collaborative perception methods and systematically summarize the collaboration modules in networks that improve collaboration efficiency and performance while also ensuring collaboration robustness and safety. Then, we present large-scale public datasets and summarize quantitative results on these benchmarks. Finally, we discuss the remaining challenges and promising future research directions.

CATNet: Context AggregaTion Network for Instance Segmentation in Remote Sensing Images

Nov 22, 2021

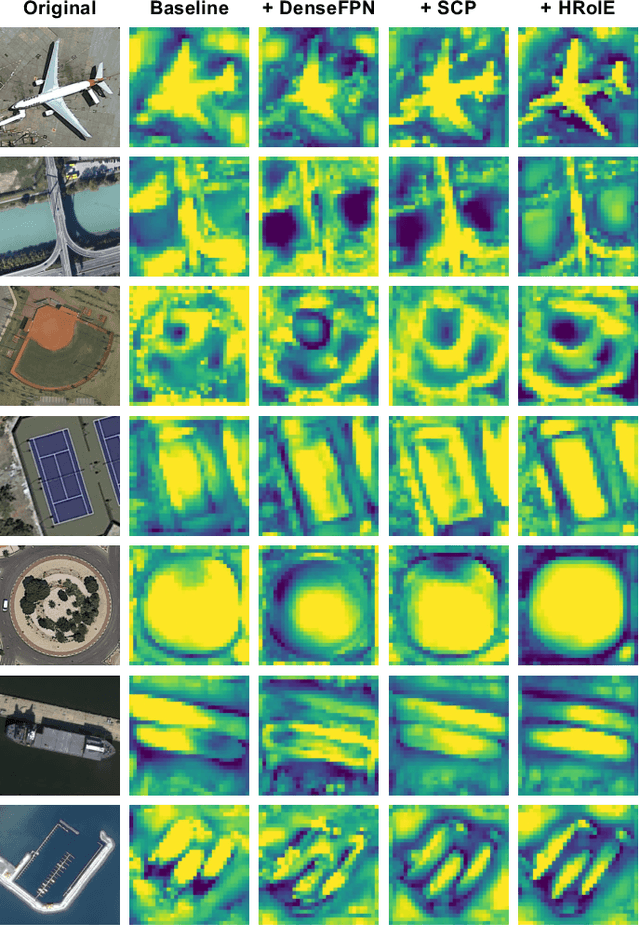

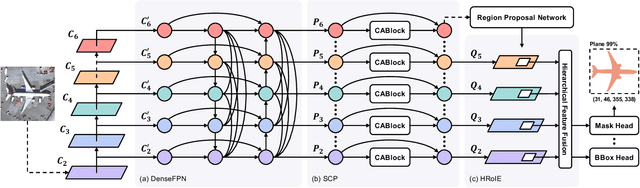

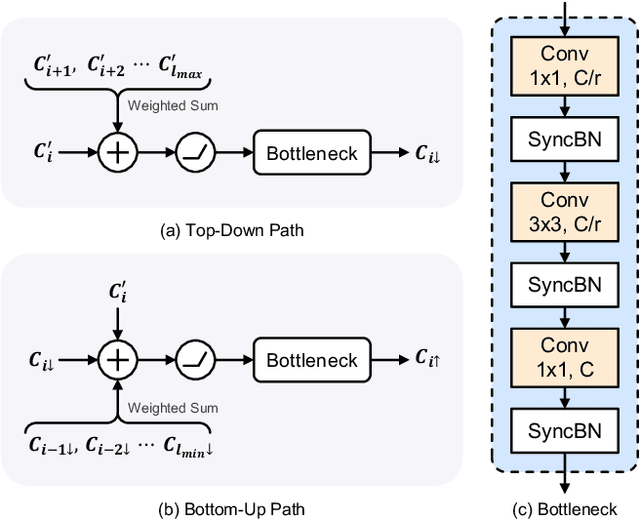

Abstract: The task of instance segmentation in remote sensing images, which aims at per-pixel labeling of objects at the instance level, is of great importance for various civil applications. Despite previous successes, most existing instance segmentation methods designed for natural images suffer sharp performance degradation when directly applied to top-view remote sensing images. Through careful analysis, we observe that the challenges mainly come from a lack of discriminative object features due to severe scale variations, low contrast, and clustered distributions. To address these problems, a novel context aggregation network (CATNet) is proposed to improve the feature extraction process. The proposed model exploits three lightweight plug-and-play modules, namely dense feature pyramid network (DenseFPN), spatial context pyramid (SCP), and hierarchical region of interest extractor (HRoIE), to aggregate global visual context in the feature, spatial, and instance domains, respectively. DenseFPN is a multi-scale feature propagation module that establishes more flexible information flows by adopting inter-level residual connections, cross-level dense connections, and a feature re-weighting strategy. Leveraging the attention mechanism, SCP further augments the features by aggregating global spatial context into local regions. For each instance, HRoIE adaptively generates RoI features for different downstream tasks. We carry out an extensive evaluation of the proposed scheme on the challenging iSAID, DIOR, NWPU VHR-10, and HRSID datasets. The evaluation results demonstrate that the proposed approach outperforms state-of-the-art methods with similar computational costs. Code is available at https://github.com/yeliudev/CATNet.

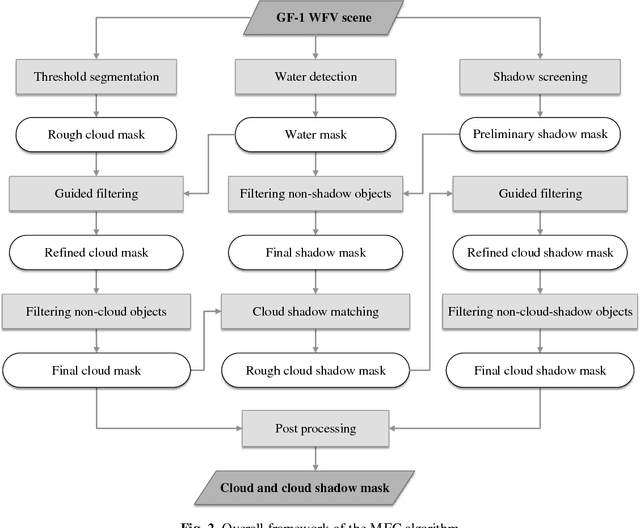

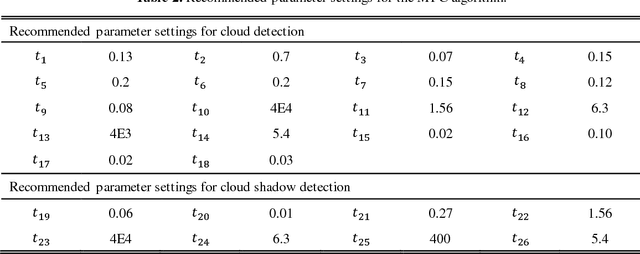

Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery

Feb 05, 2017

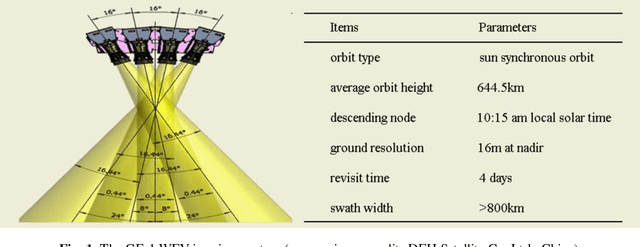

Abstract: The wide field of view (WFV) imaging system onboard the Chinese GaoFen-1 (GF-1) optical satellite has a 16-m resolution and a four-day revisit cycle for large-scale Earth observation. The advantages of the high temporal-spatial resolution and the wide field of view make the GF-1 WFV imagery very popular. However, cloud cover is an inevitable problem in GF-1 WFV imagery, which hinders its precise application. Accurate cloud and cloud shadow detection in GF-1 WFV imagery is quite difficult because there are only three visible bands and one near-infrared band. In this paper, an automatic multi-feature combined (MFC) method is proposed for cloud and cloud shadow detection in GF-1 WFV imagery. The MFC algorithm first implements threshold segmentation based on the spectral features and mask refinement based on guided filtering to generate a preliminary cloud mask. The geometric features are then used in combination with the texture features to improve the cloud detection results and produce the final cloud mask. Finally, the cloud shadow mask is obtained through cloud and shadow matching and a follow-up correction process. The method was validated using 108 globally distributed scenes. The results indicate that MFC performs well under most conditions, and the average overall accuracy of MFC cloud detection is as high as 96.8%. In a comparative analysis with the officially provided cloud fractions, MFC shows a significant improvement in cloud fraction estimation, and achieves high accuracy for cloud and cloud shadow detection in GF-1 WFV imagery despite its small number of spectral bands. The proposed method could be used as a preprocessing step in the future to monitor land-cover change, and it could also be easily extended to other optical satellite imagery that has a similar spectral setting.

* This manuscript has been accepted for publication in Remote Sensing of Environment, vol. 191, pp. 342-358, 2017. (http://www.sciencedirect.com/science/article/pii/S003442571730038X)
