Hao Ma

State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing, Wuhan University

Entailment as Few-Shot Learner

Apr 29, 2021

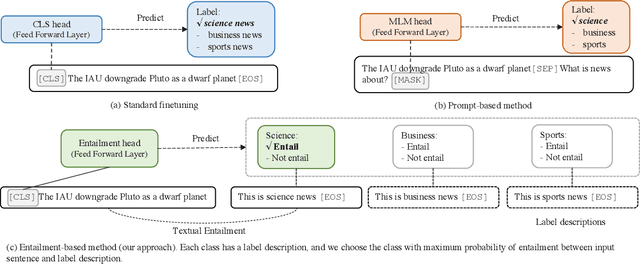

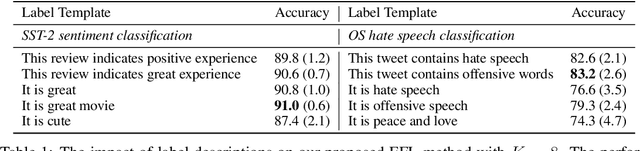

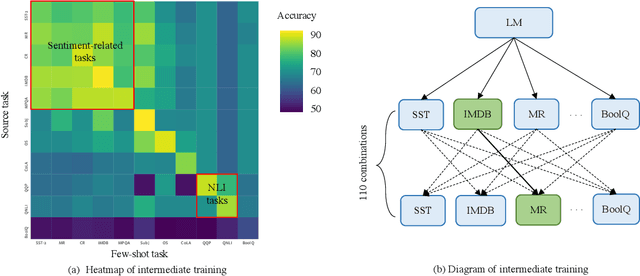

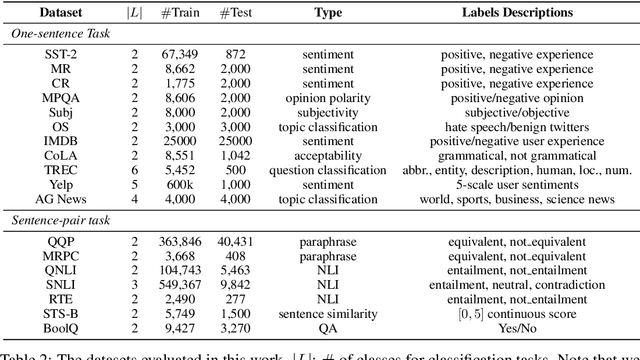

Abstract: Large pre-trained language models (LMs) have demonstrated remarkable ability as few-shot learners. However, their success hinges largely on scaling model parameters to a degree that makes them challenging to train and serve. In this paper, we propose a new approach, named EFL, that can turn small LMs into better few-shot learners. The key idea of this approach is to reformulate a potential NLP task as an entailment task, and then fine-tune the model with as few as 8 examples. We further demonstrate that our proposed method can be: (i) naturally combined with an unsupervised contrastive learning-based data augmentation method; (ii) easily extended to multilingual few-shot learning. A systematic evaluation on 18 standard NLP tasks demonstrates that this approach improves on various existing SOTA few-shot learning methods by 12%, and yields few-shot performance competitive with 500 times larger models, such as GPT-3.
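A minimal sketch of the reformulation step described in the abstract above: a single-sentence classification example is turned into (premise, hypothesis) pairs, one per candidate label, so that an entailment model can be fine-tuned on them. The label descriptions, function names, and the `entail_score` callable are illustrative assumptions, not the paper's actual templates or code.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical label descriptions (assumed for illustration only).
LABEL_DESCRIPTIONS: Dict[str, str] = {
    "positive": "It was great.",
    "negative": "It was terrible.",
}


def to_entailment_pairs(text: str, gold_label: str) -> List[Tuple[str, str, int]]:
    """Return (premise, hypothesis, is_entailed) triples, one per candidate label."""
    return [
        (text, description, int(label == gold_label))
        for label, description in LABEL_DESCRIPTIONS.items()
    ]


def predict_label(text: str, entail_score: Callable[[str, str], float]) -> str:
    """Pick the label whose description receives the highest entailment score.

    `entail_score` stands in for a fine-tuned entailment model (an assumption).
    """
    return max(
        LABEL_DESCRIPTIONS,
        key=lambda label: entail_score(text, LABEL_DESCRIPTIONS[label]),
    )
```

At inference time, any function that scores how strongly a premise entails a hypothesis can be passed as `entail_score`; the predicted class is simply the label with the best-scoring description.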

On the Influence of Masking Policies in Intermediate Pre-training

Apr 18, 2021

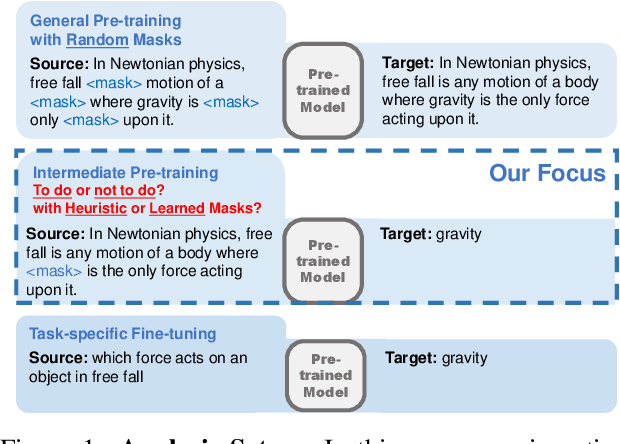

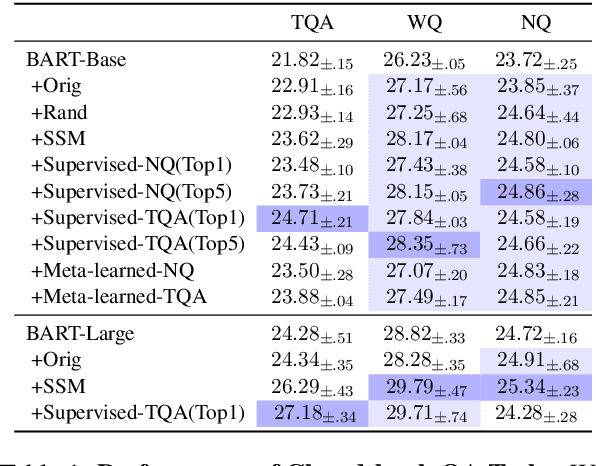

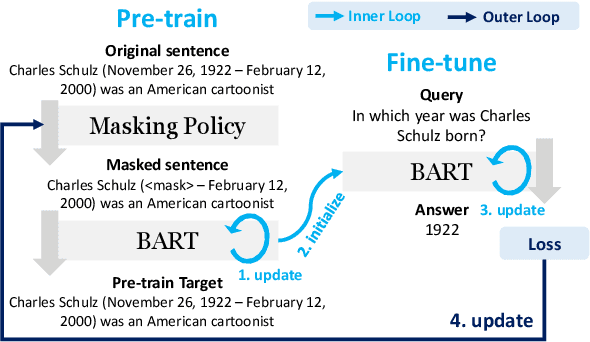

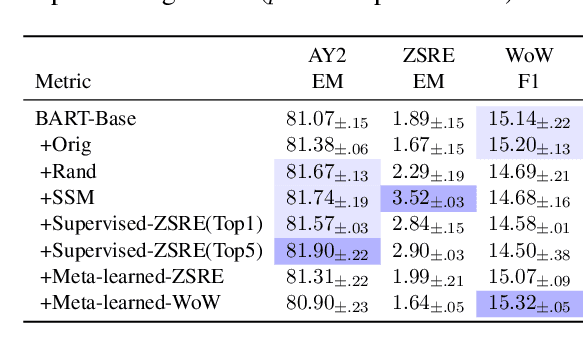

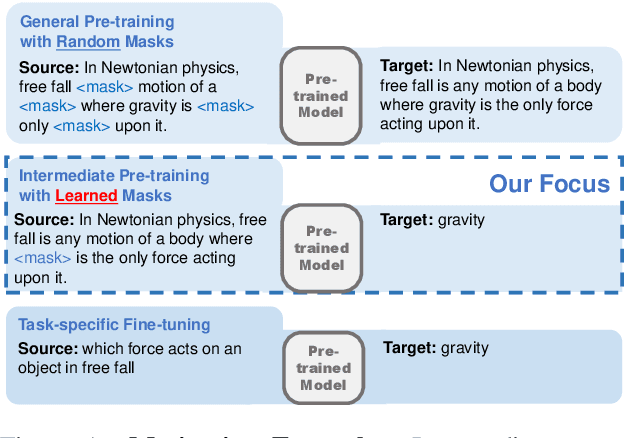

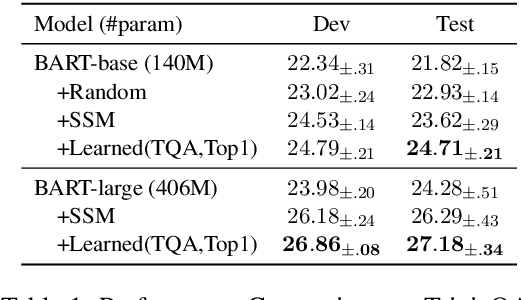

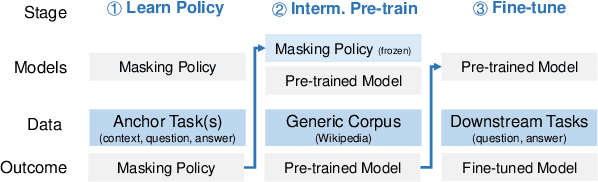

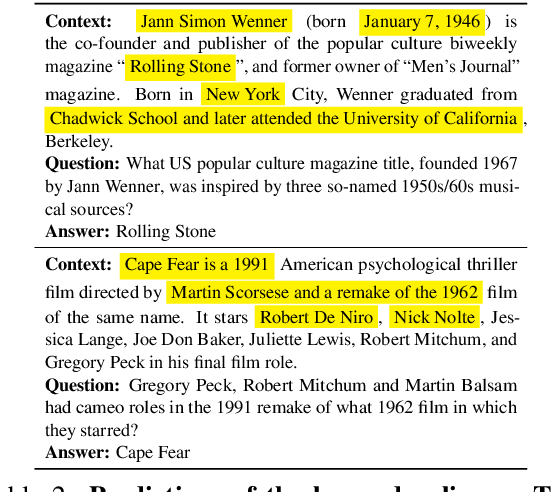

Abstract: Current NLP models are predominantly trained through a pretrain-then-finetune pipeline, where models are first pretrained on a large text corpus with a masked-language-modelling (MLM) objective, then finetuned on the downstream task. Prior work has shown that inserting an intermediate pre-training phase, with heuristic MLM objectives that resemble downstream tasks, can significantly improve final performance. However, it is still unclear (1) in what cases such intermediate pre-training is helpful, (2) whether hand-crafted heuristic objectives are optimal for a given task, and (3) whether an MLM policy designed for one task is generalizable beyond that task. In this paper, we perform a large-scale empirical study to investigate the effect of various MLM policies in intermediate pre-training. Crucially, we introduce methods to automate discovery of optimal MLM policies, by learning a masking model through either direct supervision or meta-learning on the downstream task. We investigate the effects of using heuristic, directly supervised, and meta-learned MLM policies for intermediate pretraining, on eight selected tasks across three categories (closed-book QA, knowledge-intensive language tasks, and abstractive summarization). Most notably, we show that learned masking policies outperform the heuristic of masking named entities on TriviaQA, and masking policies learned on one task can positively transfer to other tasks in certain cases.

On Unifying Misinformation Detection

Apr 12, 2021

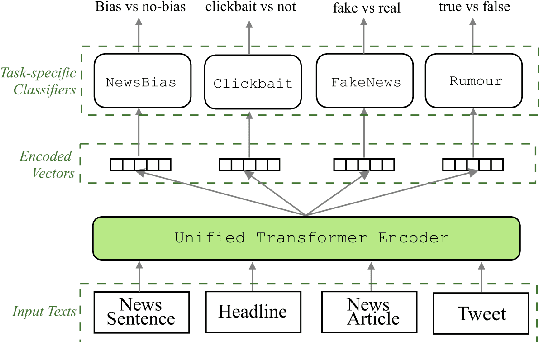

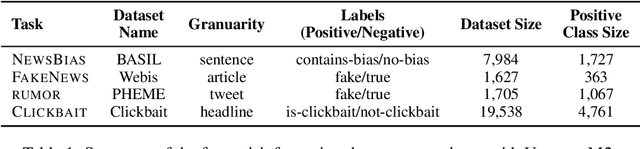

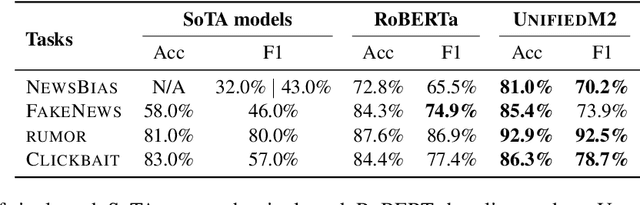

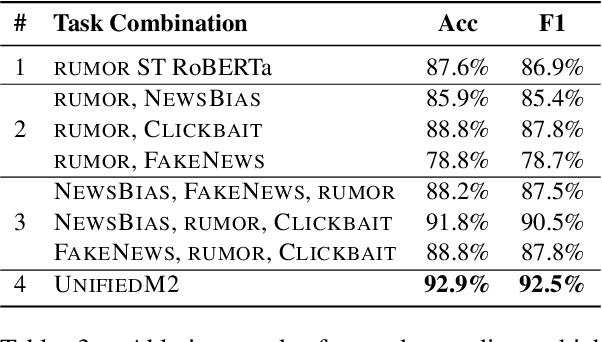

Abstract: In this paper, we introduce UnifiedM2, a general-purpose misinformation model that jointly models multiple domains of misinformation with a single, unified setup. The model is trained to handle four tasks: detecting news bias, clickbait, fake news, and verifying rumors. By grouping these tasks together, UnifiedM2 learns a richer representation of misinformation, which leads to state-of-the-art or comparable performance across all tasks. Furthermore, we demonstrate that UnifiedM2's learned representation is helpful for few-shot learning of unseen misinformation tasks/datasets and for the model's generalizability to unseen events.

Studying Strategically: Learning to Mask for Closed-book QA

Jan 01, 2021

Abstract: Closed-book question-answering (QA) is a challenging task that requires a model to directly answer questions without access to external knowledge. It has been shown that directly fine-tuning pre-trained language models with (question, answer) examples yields surprisingly competitive performance, which is further improved upon through adding an intermediate pre-training stage between general pre-training and fine-tuning. Prior work used a heuristic during this intermediate stage, whereby named entities and dates are masked, and the model is trained to recover these tokens. In this paper, we aim to learn the optimal masking strategy for the intermediate pre-training stage. We first train our masking policy to extract spans that are likely to be tested, using supervision from the downstream task itself, then deploy the learned policy during intermediate pre-training. Thus, our policy packs task-relevant knowledge into the parameters of a language model. Our approach is particularly effective on TriviaQA, outperforming strong heuristics when used to pre-train BART.
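The masking step described in the abstract above can be sketched roughly as follows: a policy proposes token spans, each span is replaced by a mask token, and the model is trained to recover the original text. The toy policy below is a crude stand-in for named-entity and date detection, and the single-mask-per-span convention and all names are assumptions for illustration; a learned policy would simply be another function returning spans.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # half-open token span [start, end)


def toy_heuristic_policy(tokens: List[str]) -> List[Span]:
    """Toy stand-in for entity/date masking (assumption, not a real NER system).

    Selects capitalized tokens that are not sentence-initial, plus four-digit numbers.
    """
    spans = []
    for i, tok in enumerate(tokens):
        is_year = tok.isdigit() and len(tok) == 4
        is_name = i > 0 and tok[:1].isupper()
        if is_year or is_name:
            spans.append((i, i + 1))
    return spans


def apply_mask(
    tokens: List[str], spans: List[Span], mask_token: str = "<mask>"
) -> Tuple[List[str], List[str]]:
    """Replace each span with one mask token; return (masked input, recovery target)."""
    masked = list(tokens)
    # Process spans right to left so earlier indices stay valid after replacement.
    for start, end in sorted(spans, reverse=True):
        masked[start:end] = [mask_token]
    return masked, list(tokens)
```

For example, `apply_mask(tokens, toy_heuristic_policy(tokens))` yields a (source, target) pair that could feed a sequence-to-sequence denoising objective.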

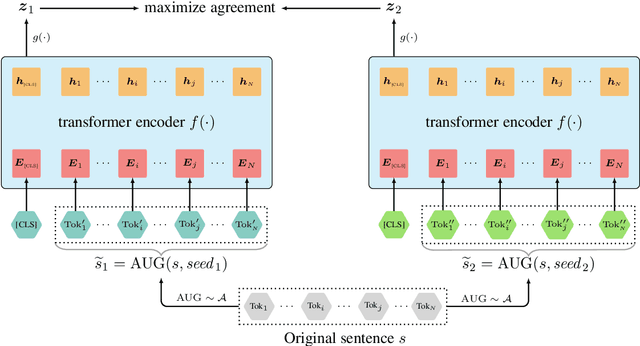

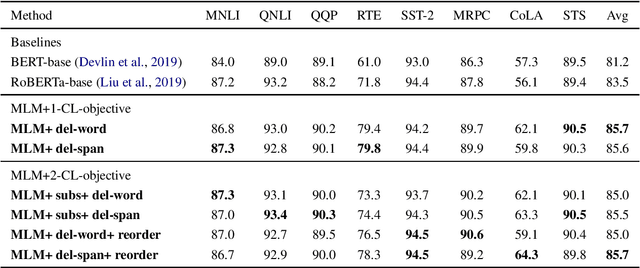

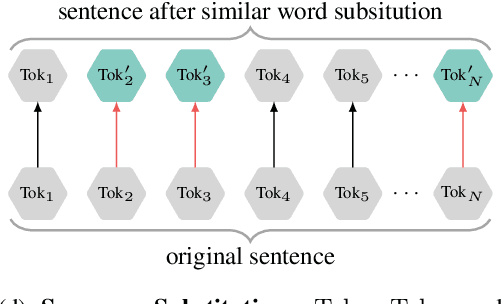

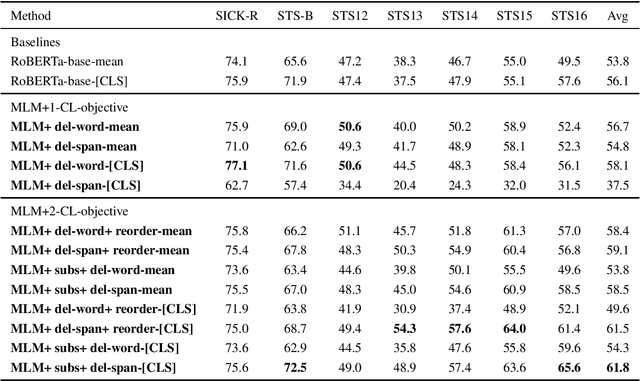

CLEAR: Contrastive Learning for Sentence Representation

Dec 31, 2020

Abstract: Pre-trained language models have proven their unique powers in capturing implicit language features. However, most pre-training approaches focus on the word-level training objective, while sentence-level objectives are rarely studied. In this paper, we propose Contrastive LEArning for sentence Representation (CLEAR), which employs multiple sentence-level augmentation strategies in order to learn a noise-invariant sentence representation. These augmentations include word and span deletion, reordering, and substitution. Furthermore, we investigate the key reasons that make contrastive learning effective through numerous experiments. We observe that different sentence augmentations during pre-training lead to different performance improvements on various downstream tasks. Our approach is shown to outperform multiple existing methods on both SentEval and GLUE benchmarks.
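The four augmentation families named in the abstract above (word deletion, span deletion, reordering, substitution) can be illustrated with a small token-level sketch. The rates, span lengths, and synonym table are hypothetical parameters chosen for illustration, and the paper's exact procedure may differ; two differently augmented views of the same sentence would then serve as a positive pair for a contrastive loss.

```python
import random
from typing import Dict, List


def delete_words(tokens: List[str], rate: float, rng: random.Random) -> List[str]:
    """Drop each token independently with probability `rate` (keep at least one)."""
    kept = [t for t in tokens if rng.random() >= rate]
    return kept or tokens[:1]


def delete_span(tokens: List[str], span_len: int, rng: random.Random) -> List[str]:
    """Remove one contiguous span of `span_len` tokens at a random position."""
    if len(tokens) <= span_len:
        return list(tokens)
    start = rng.randrange(len(tokens) - span_len + 1)
    return tokens[:start] + tokens[start + span_len:]


def reorder_spans(tokens: List[str], span_len: int, rng: random.Random) -> List[str]:
    """Swap two non-overlapping spans of `span_len` tokens."""
    n = len(tokens)
    if n < 2 * span_len:
        return list(tokens)
    a = rng.randrange(n - 2 * span_len + 1)
    b = rng.randrange(a + span_len, n - span_len + 1)
    return (
        tokens[:a]
        + tokens[b:b + span_len]
        + tokens[a + span_len:b]
        + tokens[a:a + span_len]
        + tokens[b + span_len:]
    )


def substitute(
    tokens: List[str], synonyms: Dict[str, List[str]], rate: float, rng: random.Random
) -> List[str]:
    """Replace tokens found in the (hypothetical) synonym table with probability `rate`."""
    return [
        rng.choice(synonyms[t]) if t in synonyms and rng.random() < rate else t
        for t in tokens
    ]
```

Passing an explicit `random.Random` instance keeps the augmentations reproducible, which makes it easier to compare how each augmentation affects downstream tasks.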

A Method of Generating Measurable Panoramic Image for Indoor Mobile Measurement System

Oct 27, 2020

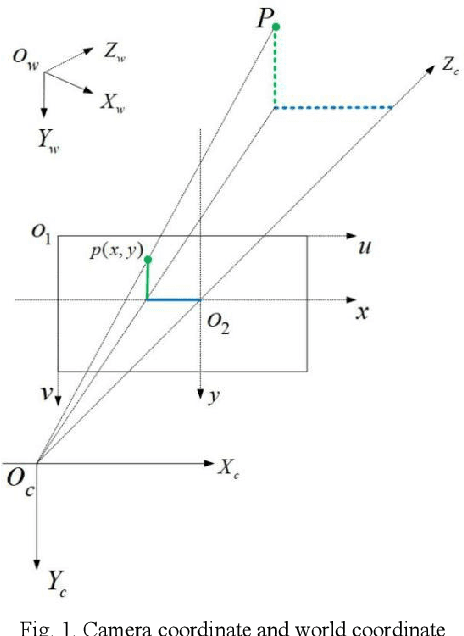

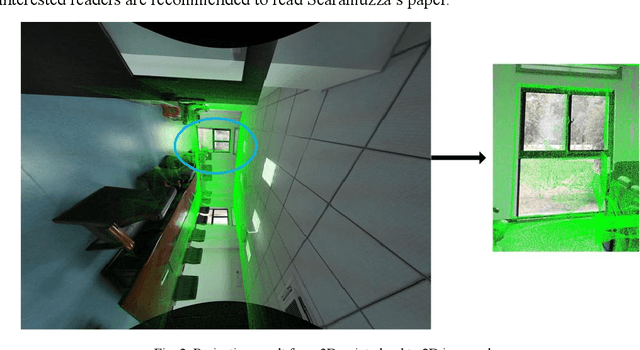

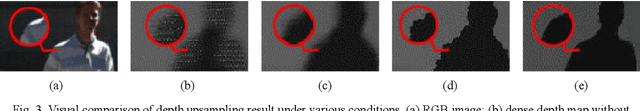

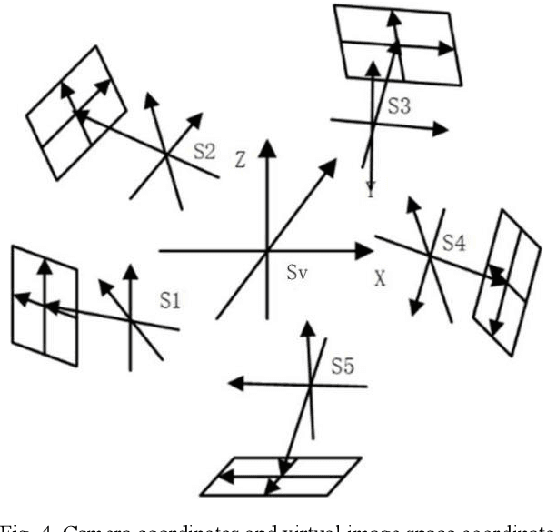

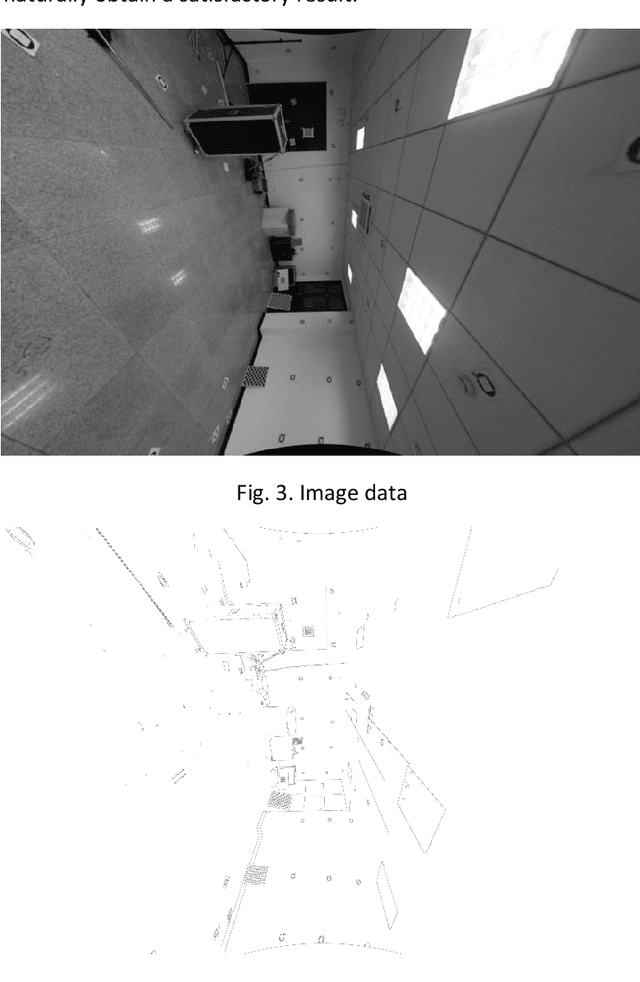

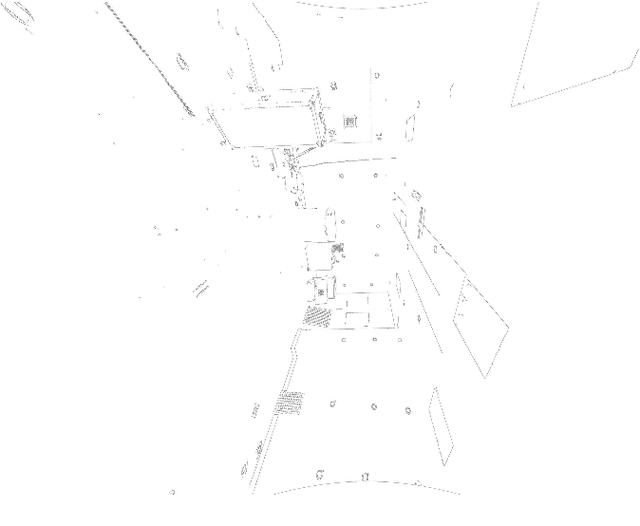

Abstract: This paper designs a technical route for generating a high-quality panoramic image with depth information, which involves two critical research hotspots: fusion of LiDAR and image data, and image stitching. For the fusion of 3D points and image data, since a sparse depth map can first be generated by projecting LiDAR points onto the RGB image plane based on our reliably calibrated and synchronized sensors, we adopt a parameter self-adaptive framework to produce a 2D dense depth map. For image stitching, the optimal seamline for the overlapping area is searched using a graph-cuts-based method to alleviate the geometric influence, and image blending based on the pyramid multi-band is utilized to eliminate the photometric effects near the stitching line. Since each pixel is associated with a depth value, we use this depth value as a radius in the spherical projection, which can further project the panoramic image to the world coordinate system and consequently produces a high-quality measurable panoramic image. The proposed method is tested on data from our data collection platform and shows satisfactory application prospects.
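The idea of using each pixel's depth as the radius of a spherical projection can be sketched as below. The sketch assumes an equirectangular panorama (longitude across the width, latitude down the height) and a particular axis convention, neither of which is stated in the abstract; the resulting points are in the sensor frame, and moving them to world coordinates would additionally require the platform pose.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]


def pixel_to_point(u: float, v: float, depth: float, width: int, height: int) -> Point:
    """Map an equirectangular pixel (u, v) with a depth value to a 3D point.

    Assumed convention: longitude in [-pi, pi) grows with u, latitude runs from
    +pi/2 at the top row to -pi/2 at the bottom; depth is used as the radius.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    x = depth * math.cos(lat) * math.cos(lon)
    y = depth * math.cos(lat) * math.sin(lon)
    z = depth * math.sin(lat)
    return (x, y, z)


def measure(p: Point, q: Point) -> float:
    """Euclidean distance between two reconstructed points."""
    return math.dist(p, q)
```

With such a mapping, picking two pixels in the panorama and calling `measure` on their reconstructed points gives a metric distance, which is what makes the image "measurable".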

A Simple and Efficient Registration of 3D Point Cloud and Image Data for Indoor Mobile Mapping System

Oct 27, 2020

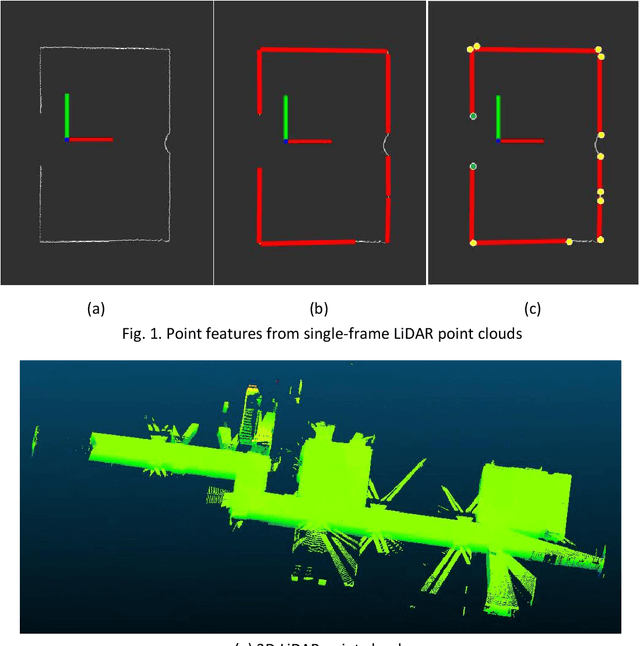

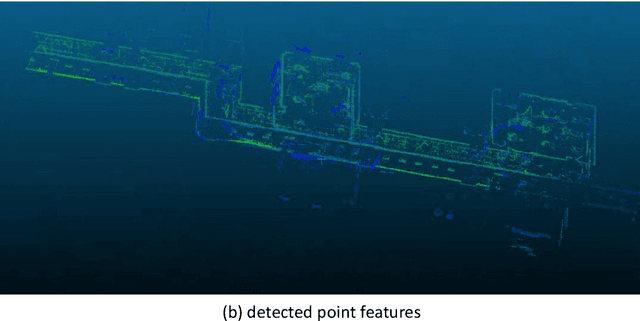

Abstract: Registration of 3D LiDAR point clouds with optical images is critical in the combination of multi-source data. Geometric misalignment originally exists in the pose data between LiDAR point clouds and optical images. To improve the accuracy of the initial pose and the applicability of the integration of 3D points and image data, we develop a simple but efficient registration method. We first extract point features from LiDAR point clouds and images: point features are extracted from single-frame LiDAR data, and from images using the classical Canny method. A cost map is subsequently built based on Canny image edge detection. The optimization direction is guided by the cost map, where low cost represents the desired direction, and a loss function is also considered to improve the robustness of the proposed method. Experiments show satisfactory results.

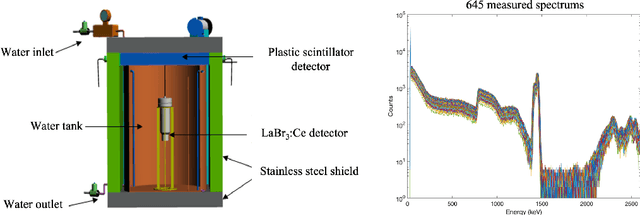

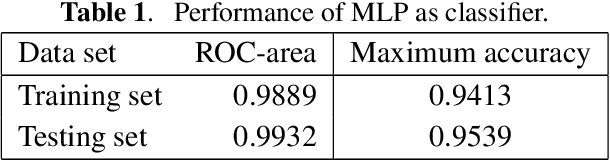

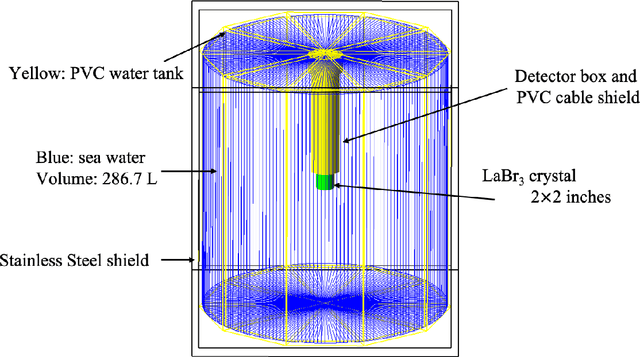

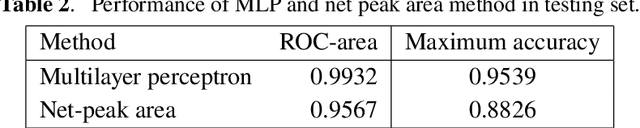

A marine radioisotope gamma-ray spectrum analysis method based on Monte Carlo simulation and MLP neural network

Oct 24, 2020

Abstract: A multilayer perceptron (MLP) neural network is built to analyze the Cs-137 concentration in seawater via gamma-ray spectra measured by a LaBr3 detector. The MLP is trained and tested on a large data set generated by combining measured and Monte Carlo simulated spectra under the assumption that all the measured spectra have 0 Cs-137 concentration. The performance of the MLP is evaluated and compared with the traditional net-peak area method. The results show an improvement of 7% in accuracy and 0.036 in the ROC-curve area compared to those of the net-peak area method. The influence of the assumption of Cs-137 concentration in the training data set on the classifying performance of the MLP is also evaluated.

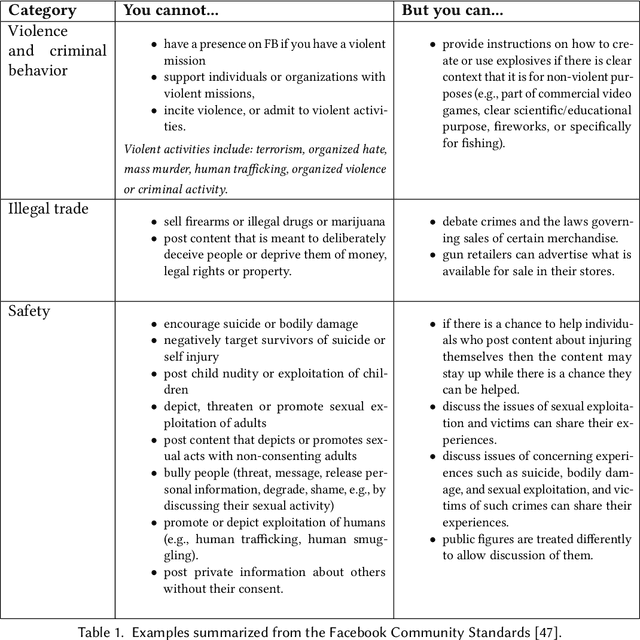

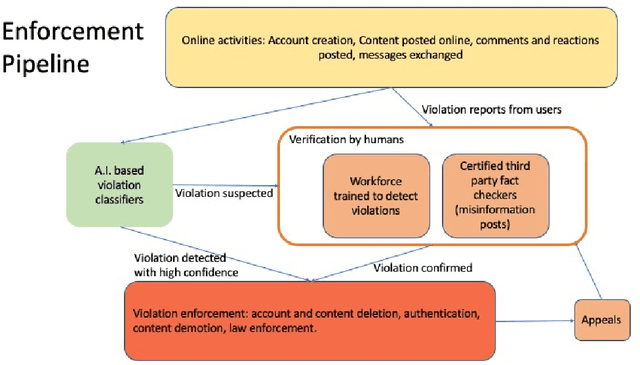

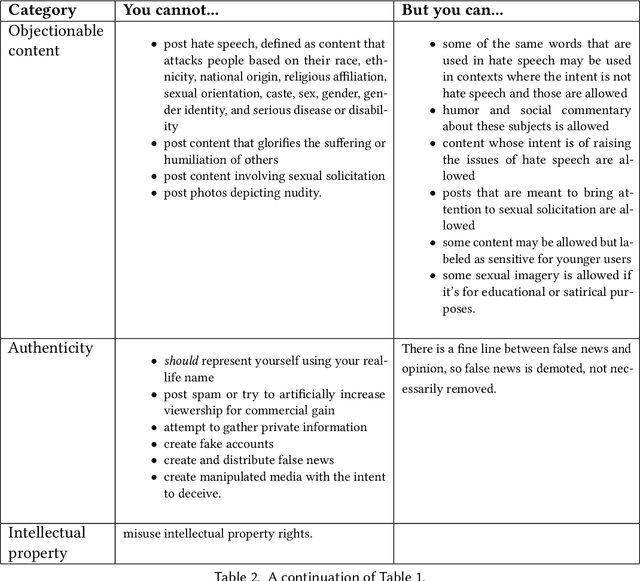

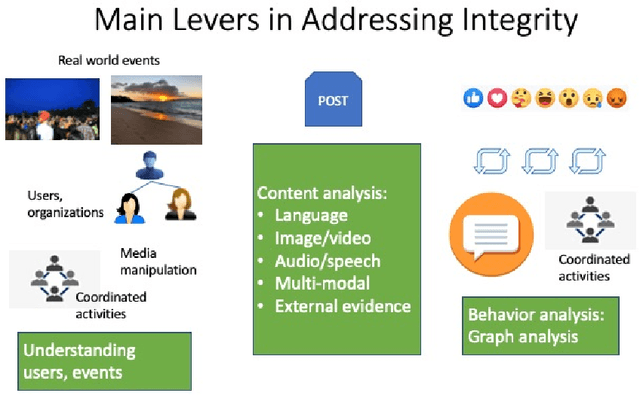

Preserving Integrity in Online Social Networks

Sep 25, 2020

Abstract: Online social networks provide a platform for sharing information and free expression. However, these networks are also used for malicious purposes, such as distributing misinformation and hate speech, selling illegal drugs, and coordinating sex trafficking or child exploitation. This paper surveys the state of the art in keeping online platforms and their users safe from such harm, also known as the problem of preserving integrity. This survey comes from the perspective of having to combat a broad spectrum of integrity violations at Facebook. We highlight the techniques that have been proven useful in practice and that deserve additional attention from the academic community. Instead of discussing the many individual violation types, we identify key aspects of the social-media ecosystem, each of which is common to a wide variety of violation types. Furthermore, each of these components represents an area for research and development, and the innovations that are found can be applied widely.

To Pretrain or Not to Pretrain: Examining the Benefits of Pretraining on Resource Rich Tasks

Jun 15, 2020

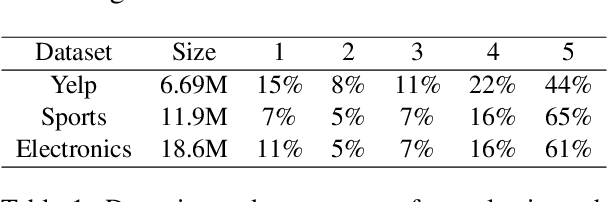

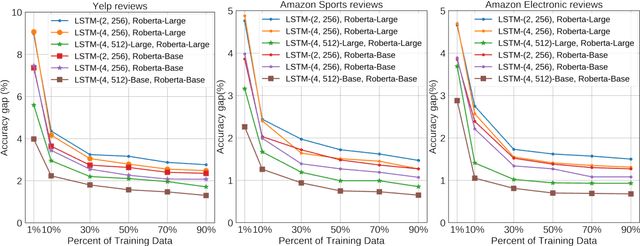

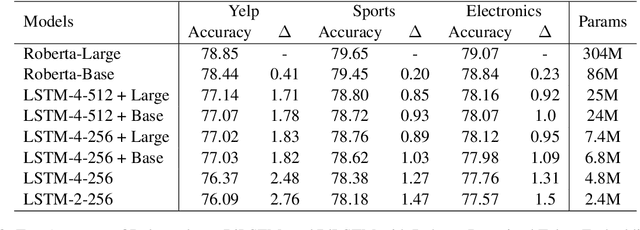

Abstract: Pretraining NLP models with variants of Masked Language Model (MLM) objectives has recently led to significant improvements on many tasks. This paper examines the benefits of pretrained models as a function of the number of training samples used in the downstream task. On several text classification tasks, we show that as the number of training examples grows into the millions, the accuracy gap between finetuning a BERT-based model and training a vanilla LSTM from scratch narrows to within 1%. Our findings indicate that MLM-based models might reach a diminishing return point as the supervised data size increases significantly.
