Guilherme A. S. Pereira

A Framework for Controlling Multi-Robot Systems Using Bayesian Optimization and Linear Combination of Vectors

Mar 23, 2022

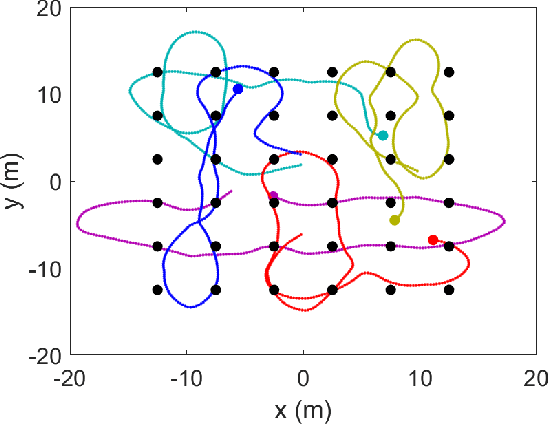

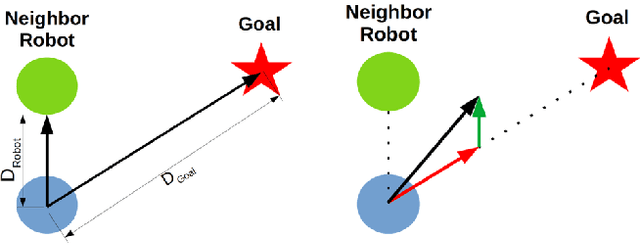

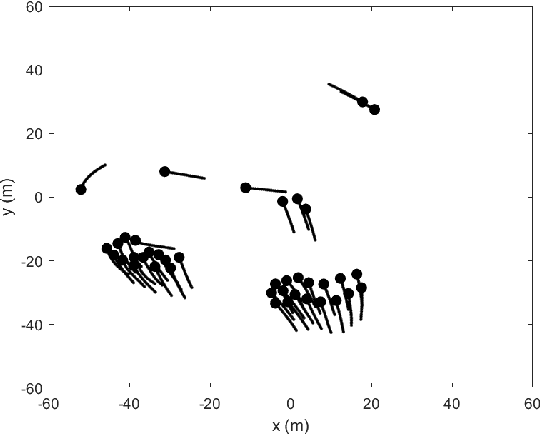

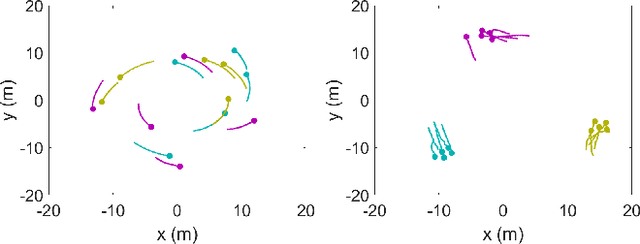

Abstract: We propose a general framework for creating parameterized control schemes for decentralized multi-robot systems. The decentralized multi-robot literature covers a variety of tasks, each with many possible control schemes. For several of them, the agents choose control velocities using algorithms that extract information from the environment and combine that information in meaningful ways. From this basic formation, a framework is proposed that classifies each robot's measurement information as sets of relevant scalars and vectors and creates a linear combination of the measured vector sets. Along with an optimizable parameter set, the scalar measurements are used to generate the coefficients for the linear combination. With this framework and Bayesian optimization, we can create effective control systems for several multi-robot tasks, including cohesion and segregation, pattern formation, and searching/foraging.
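As a rough illustration of the idea described in this abstract, the sketch below forms a control velocity as a linear combination of measured vectors, with each coefficient computed from a scalar measurement and a tunable parameter pair. The affine coefficient model and the names `coefficient` and `control_velocity` are assumptions made for illustration, not taken from the paper; in the framework described above, the parameters would be the quantities tuned by Bayesian optimization.

```python
from typing import Sequence, Tuple

Vector = Tuple[float, float]


def coefficient(scalar: float, params: Tuple[float, float]) -> float:
    """Hypothetical coefficient model: affine in one scalar measurement."""
    gain, offset = params
    return gain * scalar + offset


def control_velocity(
    vectors: Sequence[Vector],
    scalars: Sequence[float],
    params: Sequence[Tuple[float, float]],
) -> Vector:
    """Sum the measured vectors, each weighted by its computed coefficient."""
    vx = vy = 0.0
    for (x, y), s, p in zip(vectors, scalars, params):
        c = coefficient(s, p)
        vx += c * x
        vy += c * y
    return (vx, vy)


# Two measured vectors (e.g. toward a neighbor and toward a goal), each
# paired with one scalar measurement and one (gain, offset) parameter pair.
velocity = control_velocity(
    vectors=[(1.0, 0.0), (0.0, 1.0)],
    scalars=[2.0, 4.0],
    params=[(0.5, 0.0), (-0.25, 1.0)],
)
```

Here the first coefficient is 0.5 * 2.0 = 1.0 and the second is -0.25 * 4.0 + 1.0 = 0.0, so only the first vector contributes to the resulting velocity.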

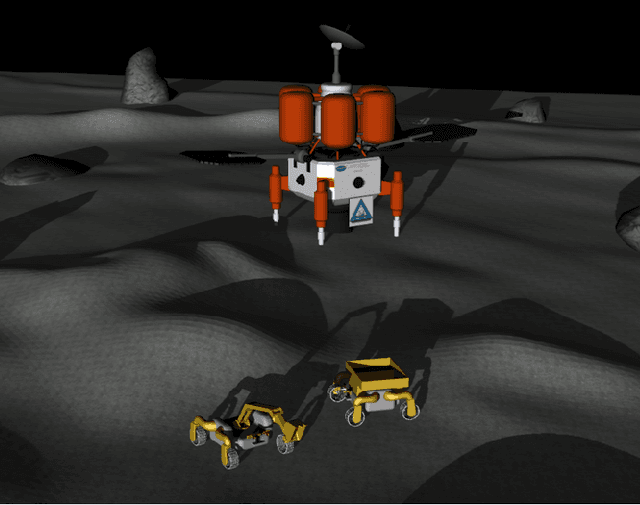

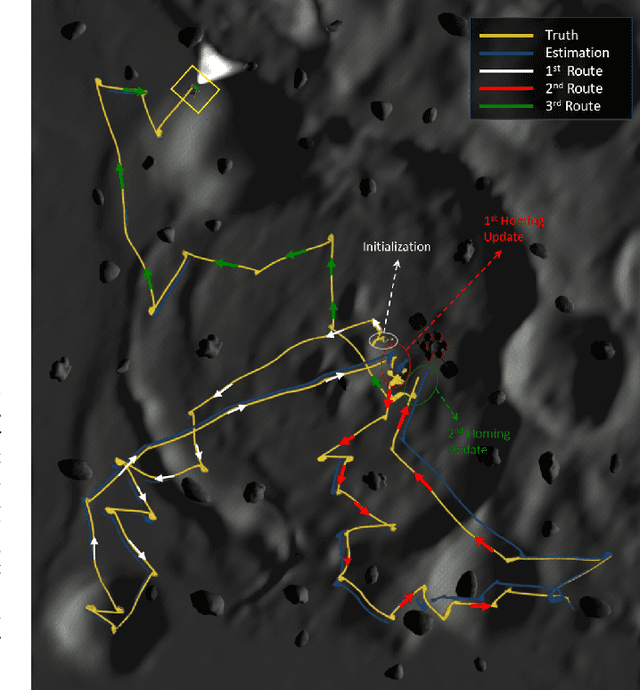

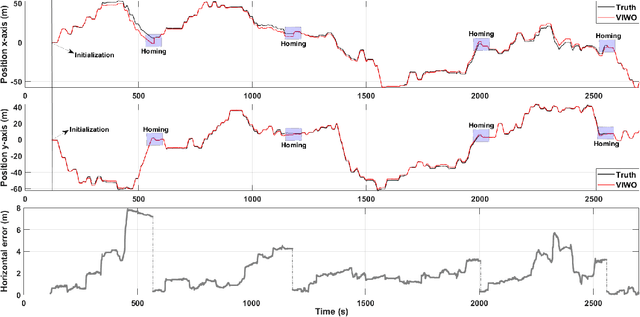

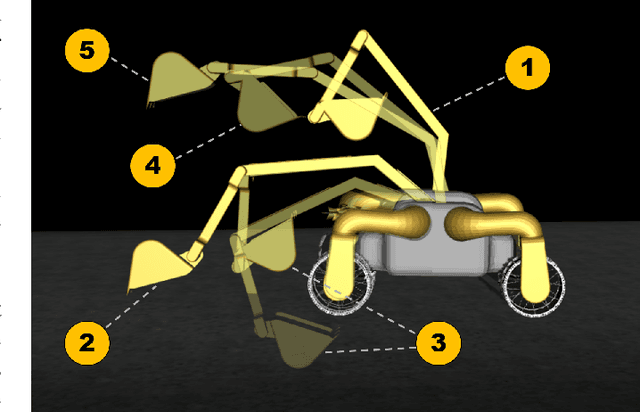

NASA Space Robotics Challenge 2 Qualification Round: An Approach to Autonomous Lunar Rover Operations

Sep 20, 2021

Abstract: Plans for establishing a long-term human presence on the Moon will require substantial increases in robot autonomy and multi-robot coordination to support establishing a lunar outpost. To achieve these objectives, algorithm design choices for the software developments need to be tested and validated for expected scenarios such as autonomous in-situ resource utilization (ISRU), localization in challenging environments, and multi-robot coordination. However, real-world experiments are extremely challenging and limited for extraterrestrial environments. Also, realistic simulation demonstrations in these environments are still rare, although they are in demand for initial algorithm testing. To help address some of these needs, the NASA Centennial Challenges program established the Space Robotics Challenge Phase 2 (SRC2), which consists of virtual robotic systems in a realistic lunar simulation environment, where a group of mobile robots was tasked with reporting volatile locations within a global map, excavating and transporting these resources, and detecting and localizing a target of interest. The main goal of this article is to share our team's experiences with the design trade-offs involved in performing autonomous robotic operations in a virtual lunar environment and to share strategies for completing the mission requirements posed by the NASA SRC2 competition during the qualification round. Of the 114 teams that registered for participation in the NASA SRC2, team Mountaineers finished as one of only six teams to receive the top qualification round prize.

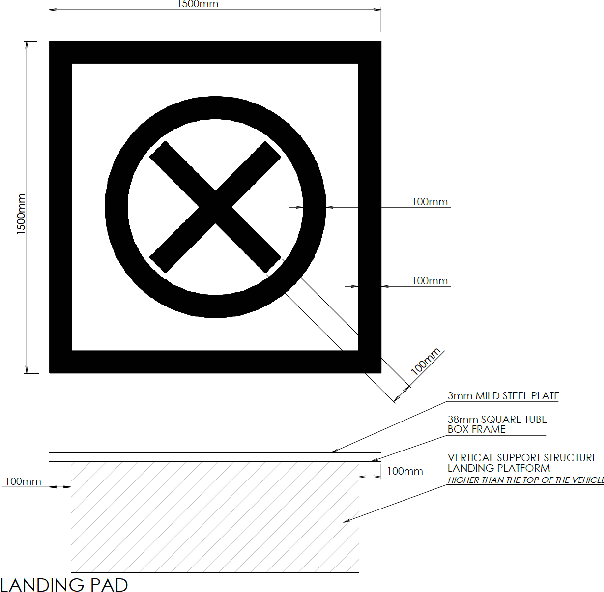

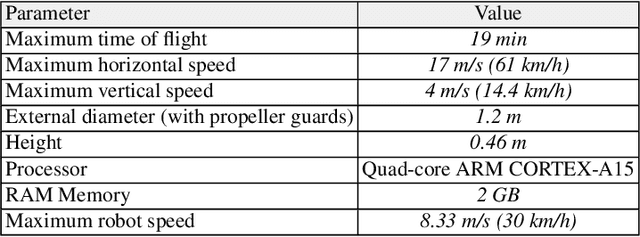

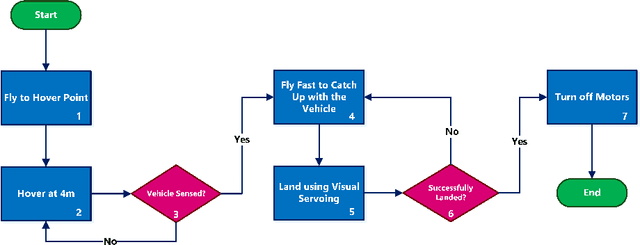

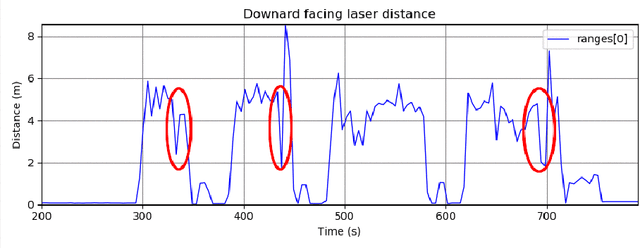

Visual Servoing Approach for Autonomous UAV Landing on a Moving Vehicle

Apr 02, 2021

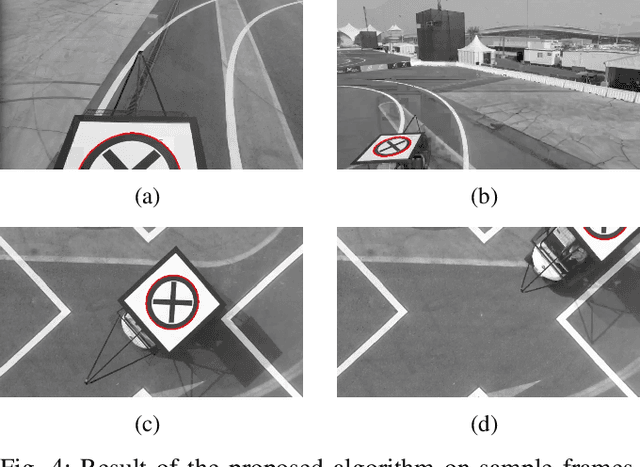

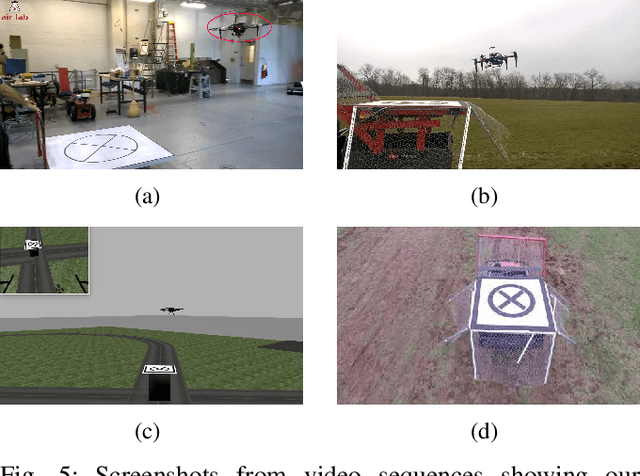

Abstract: We present a method to autonomously land an Unmanned Aerial Vehicle on a moving vehicle with a circular (or elliptical) pattern on the top. A visual servoing controller approaches the ground vehicle using velocity commands calculated directly in image space. The control laws generate velocity commands in all three dimensions, eliminating the need for a separate height controller. The method has shown the ability to approach and land on the moving deck in simulation and in indoor and outdoor environments, and, compared to the other available methods, it has provided the fastest landing approach. It does not rely on additional external setup, such as RTK, a motion capture system, a ground station, offboard processing, or communication with the vehicle, and it requires only a minimal set of hardware and localization sensors. The videos and source code can be accessed from http://theairlab.org/landing-on-vehicle.
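To make the notion of velocity commands "calculated directly in image space" more concrete, here is a minimal proportional sketch. It is not the paper's control law: the function name, the normalization, the sign conventions, and the use of the pattern's apparent size to drive the approach axis are all assumptions chosen for illustration.

```python
from typing import Tuple


def image_space_velocity(
    center_px: Tuple[float, float],
    size_px: float,
    image_size: Tuple[int, int],
    target_size_px: float,
    gain: float = 1.0,
) -> Tuple[float, float, float]:
    """Hypothetical proportional law on image-space errors.

    The first two components push the detected pattern toward the image
    center; the third grows while the pattern appears smaller than the
    desired size, so no separate height controller is involved.
    """
    width, height = image_size
    # Normalized offset of the pattern center from the image center.
    ex = (center_px[0] - width / 2) / (width / 2)
    ey = (center_px[1] - height / 2) / (height / 2)
    # Relative difference between desired and observed apparent size.
    ez = (target_size_px - size_px) / target_size_px
    return (gain * ex, gain * ey, gain * ez)


# Pattern seen right of center and at half the desired apparent size.
command = image_space_velocity(
    center_px=(480.0, 240.0),
    size_px=50.0,
    image_size=(640, 480),
    target_size_px=100.0,
    gain=2.0,
)
```

All three components come from quantities measured in the image, so this sketch needs no estimate of the vehicle's position in world coordinates.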

Real-Time Ellipse Detection for Robotics Applications

Feb 25, 2021

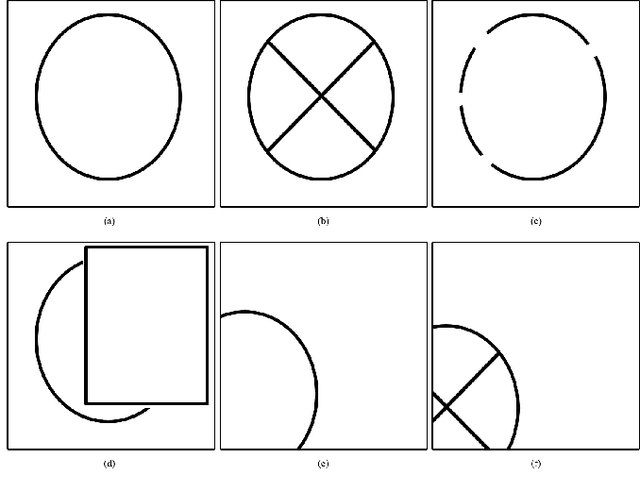

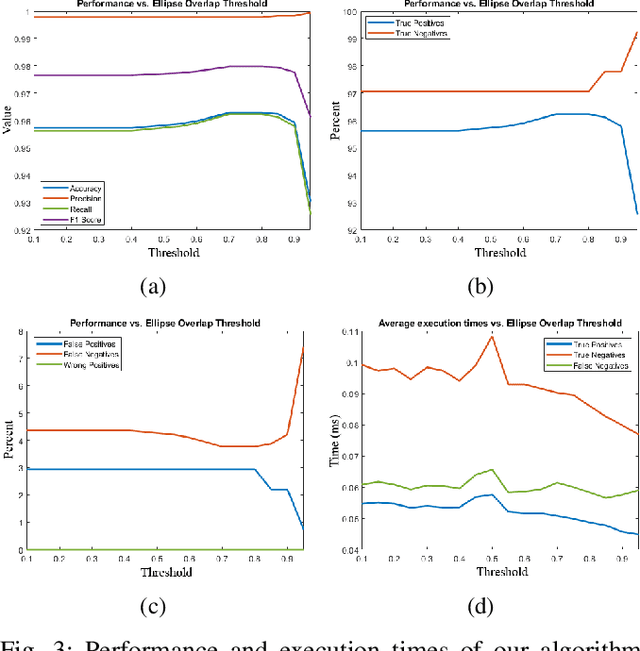

Abstract: We propose a new algorithm for real-time detection and tracking of elliptic patterns suitable for real-world robotics applications. The method fits ellipses to each contour in the image frame and rejects ellipses that do not yield a good fit. It can detect complete, partial, and imperfect ellipses in extreme weather and lighting conditions and is lightweight enough to be used on robots' resource-limited onboard computers. The method is used on an example application of autonomous UAV landing on a fast-moving vehicle to show its performance indoors, outdoors, and in simulation on a real-world robotics task. The comparison with other well-known ellipse detection methods shows that our proposed algorithm outperforms them, with an F1 score of 0.981 on a dataset with over 1500 frames. The videos of experiments, the source code, and the collected dataset are provided with the paper.
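The "fit, then reject poor fits" step can be sketched as follows. The abstract does not state the rejection criterion, so the mean algebraic residual and the `max_residual` threshold used here are assumptions for illustration, and the ellipse parameters are taken as already produced by some fitting routine.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def ellipse_residual(
    points: Sequence[Point],
    center: Point,
    axes: Tuple[float, float],
    angle: float,
) -> float:
    """Mean of |(u/a)^2 + (v/b)^2 - 1| over the contour points.

    (u, v) are the point coordinates in the ellipse's own frame; the
    value is 0 when every point lies exactly on the ellipse.
    """
    cx, cy = center
    a, b = axes
    cos_t, sin_t = math.cos(angle), math.sin(angle)
    total = 0.0
    for x, y in points:
        dx, dy = x - cx, y - cy
        u = dx * cos_t + dy * sin_t
        v = -dx * sin_t + dy * cos_t
        total += abs((u / a) ** 2 + (v / b) ** 2 - 1.0)
    return total / len(points)


def is_good_fit(points, center, axes, angle, max_residual=0.1) -> bool:
    """Hypothetical acceptance test: keep the ellipse if the residual is small."""
    return ellipse_residual(points, center, axes, angle) <= max_residual


# Points sampled from an ellipse with semi-axes 2 and 1 are accepted...
on_ellipse = [
    (2.0 * math.cos(k * math.pi / 6), 1.0 * math.sin(k * math.pi / 6))
    for k in range(12)
]
accepted = is_good_fit(on_ellipse, (0.0, 0.0), (2.0, 1.0), 0.0)

# ...while the corners of a square are rejected for the same ellipse.
square = [(2.0, 2.0), (-2.0, 2.0), (-2.0, -2.0), (2.0, -2.0)]
rejected = not is_good_fit(square, (0.0, 0.0), (2.0, 1.0), 0.0)
```

Because the residual is averaged over whichever points are supplied, a partial contour that lies on the ellipse would also pass this particular check.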
