Finale Doshi-Velez

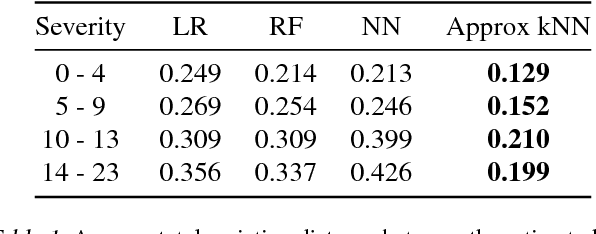

Improving Sepsis Treatment Strategies by Combining Deep and Kernel-Based Reinforcement Learning

Jan 15, 2019

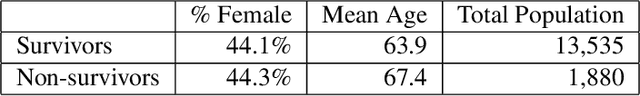

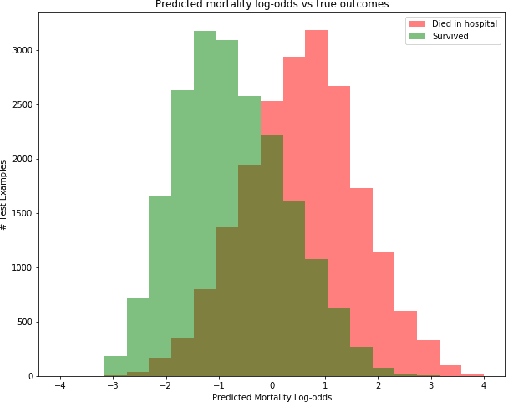

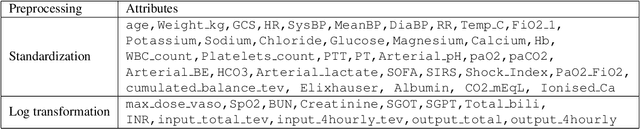

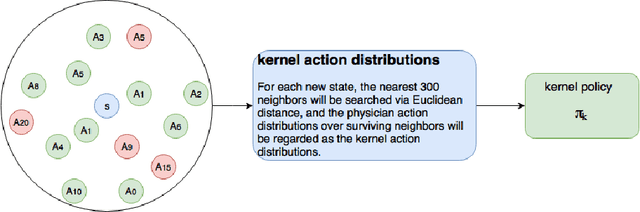

Abstract: Sepsis is the leading cause of mortality in the ICU. It is challenging to manage because individual patients respond differently to treatment. Thus, tailoring treatment to the individual patient is essential for the best outcomes. In this paper, we take steps toward this goal by applying a mixture-of-experts framework to personalize sepsis treatment. The mixture model selectively alternates between neighbor-based (kernel) and deep reinforcement learning (DRL) experts depending on the patient's current history. On a large retrospective cohort, this mixture-based approach outperforms physician, kernel-only, and DRL-only experts.

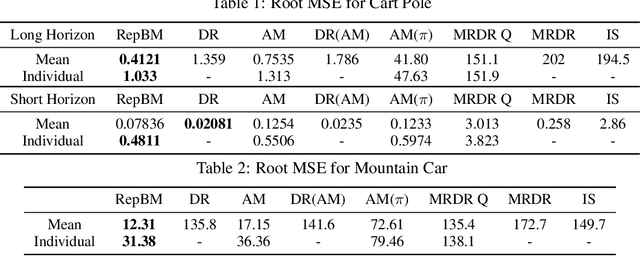

Representation Balancing MDPs for Off-Policy Policy Evaluation

Oct 31, 2018

Abstract: We study the problem of off-policy policy evaluation (OPPE) in RL. In contrast to prior work, we consider how to estimate both the individual policy value and average policy value accurately. We draw inspiration from recent work in causal reasoning, and propose a new finite sample generalization error bound for value estimates from MDP models. Using this upper bound as an objective, we develop a learning algorithm for an MDP model with a balanced representation, and show that our approach can yield substantially lower MSE in common synthetic benchmarks and an HIV treatment simulation domain.

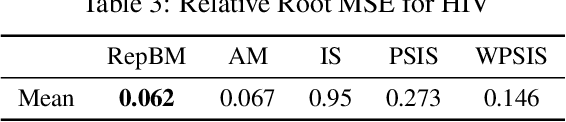

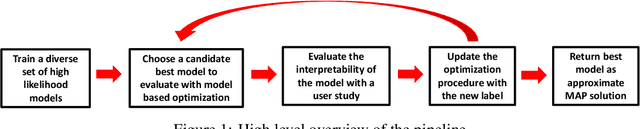

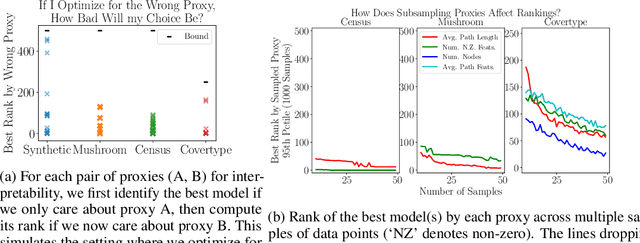

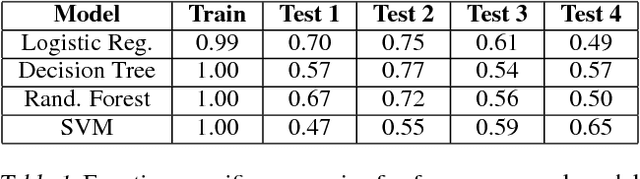

Human-in-the-Loop Interpretability Prior

Oct 30, 2018

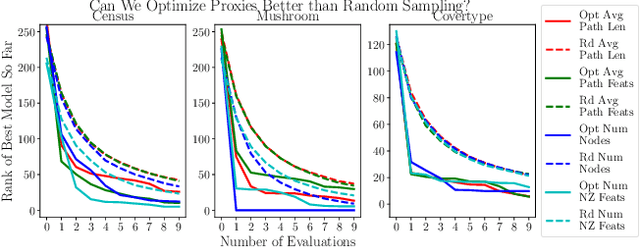

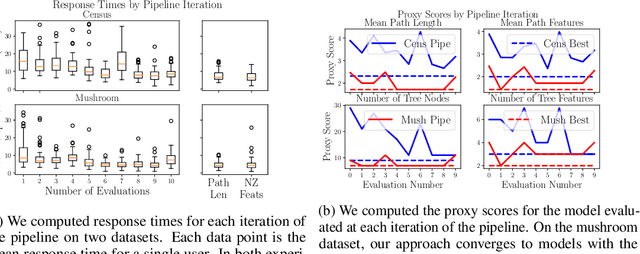

Abstract: We often desire our models to be interpretable as well as accurate. Prior work on optimizing models for interpretability has relied on easy-to-quantify proxies for interpretability, such as sparsity or the number of operations required. In this work, we optimize for interpretability by directly including humans in the optimization loop. We develop an algorithm that minimizes the number of user studies needed to find models that are both predictive and interpretable, and demonstrate our approach on several datasets. Our human-subject results show trends towards different proxy notions of interpretability on different datasets, which suggests that different proxies are preferred on different tasks.

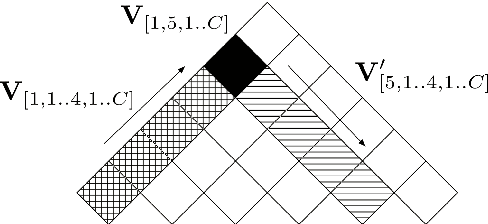

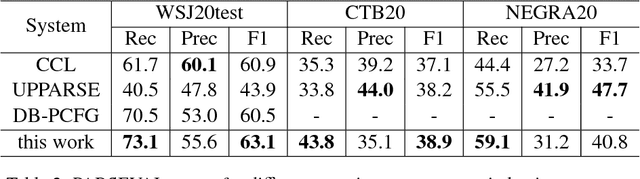

Depth-bounding is effective: Improvements and evaluation of unsupervised PCFG induction

Sep 10, 2018

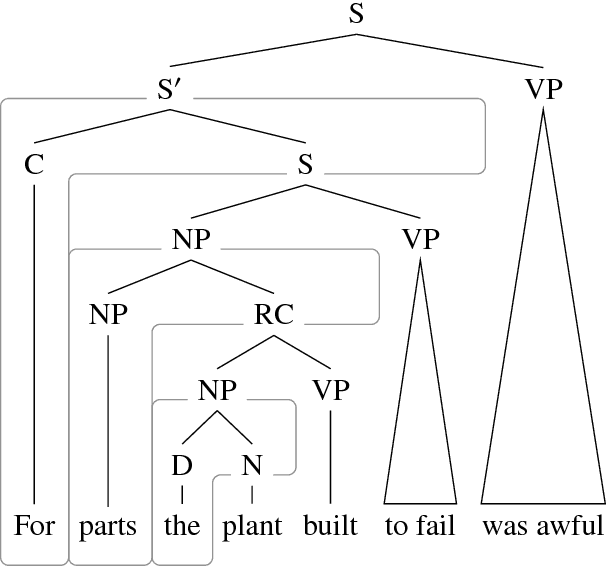

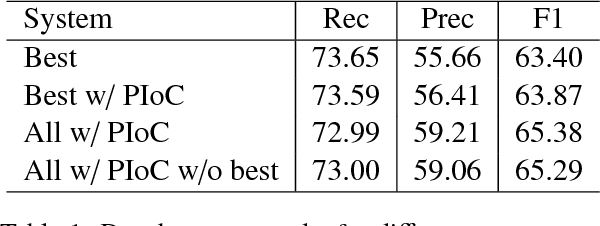

Abstract: There have been several recent attempts to improve the accuracy of grammar induction systems by bounding the recursive complexity of the induction model (Ponvert et al., 2011; Noji and Johnson, 2016; Shain et al., 2016; Jin et al., 2018). Modern depth-bounded grammar inducers have been shown to be more accurate than early unbounded PCFG inducers, but this technique has never been compared against unbounded induction within the same system, in part because most previous depth-bounding models are built around sequence models, the complexity of which grows exponentially with the maximum allowed depth. The present work instead applies depth bounds within a chart-based Bayesian PCFG inducer (Johnson et al., 2007b), where bounding can be switched on and off, and then samples trees with and without bounding. Results show that depth-bounding is indeed significantly effective in limiting the search space of the inducer and thereby increasing the accuracy of the resulting parsing model. Moreover, parsing results on English, Chinese and German show that this bounded model with a new inference technique is able to produce parse trees more accurately than or competitively with state-of-the-art constituency-based grammar induction models.

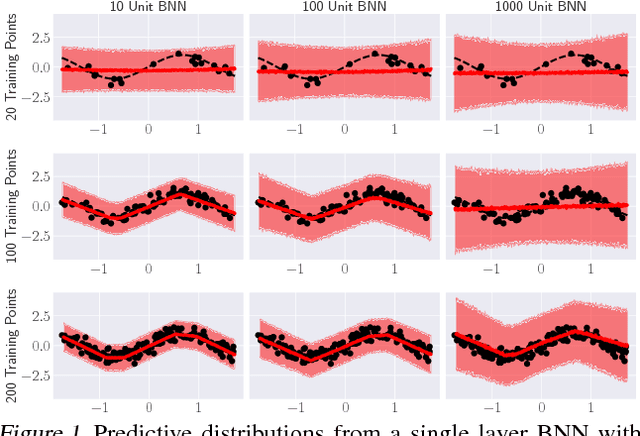

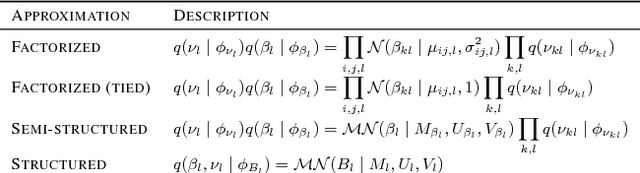

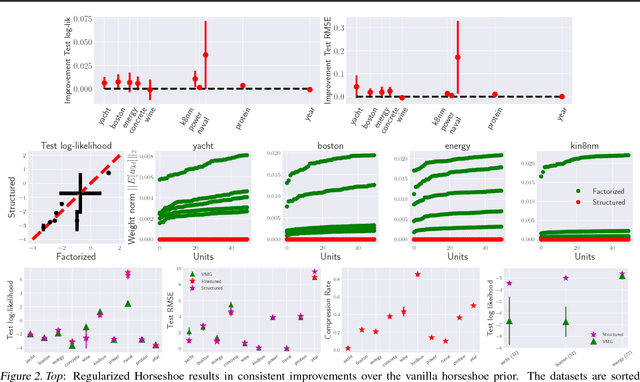

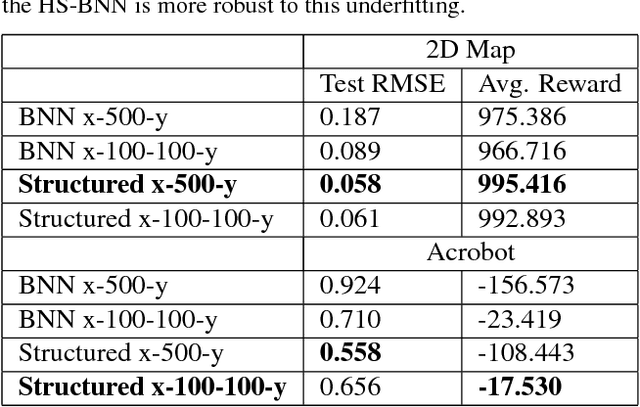

Structured Variational Learning of Bayesian Neural Networks with Horseshoe Priors

Jul 31, 2018

Abstract: Bayesian Neural Networks (BNNs) have recently received increasing attention for their ability to provide well-calibrated posterior uncertainties. However, model selection, even choosing the number of nodes, remains an open question. Recent work has proposed the use of a horseshoe prior over node pre-activations of a Bayesian neural network, which effectively turns off nodes that do not help explain the data. In this work, we propose several modeling and inference advances that consistently improve the compactness of the model learned while maintaining predictive performance, especially in smaller-sample settings including reinforcement learning.

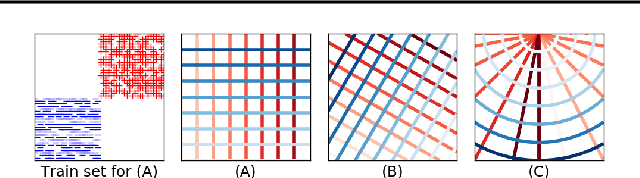

Learning Qualitatively Diverse and Interpretable Rules for Classification

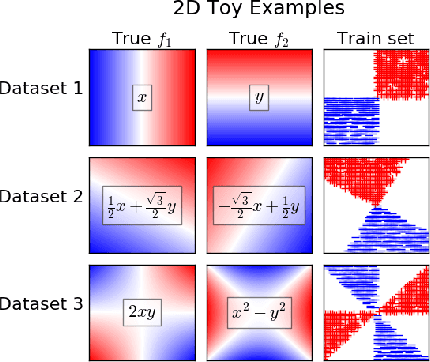

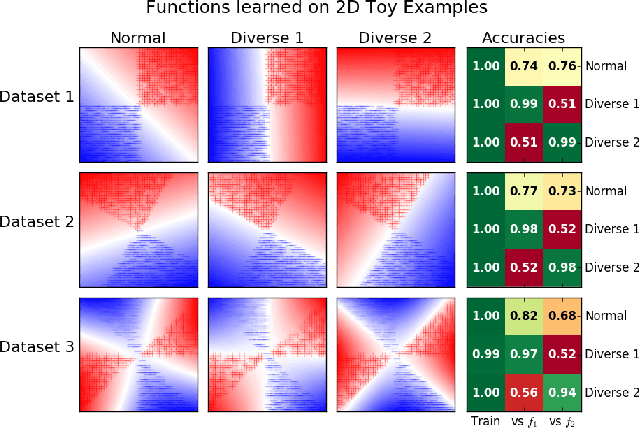

Jul 19, 2018

Abstract: There has been growing interest in developing accurate models that can also be explained to humans. Unfortunately, if there exist multiple distinct but accurate models for some dataset, current machine learning methods are unlikely to find them: standard techniques will likely recover a complex model that combines them. In this work, we introduce a way to identify a maximal set of distinct but accurate models for a dataset. We demonstrate empirically that, in situations where the data supports multiple accurate classifiers, we tend to recover simpler, more interpretable classifiers rather than more complex ones.

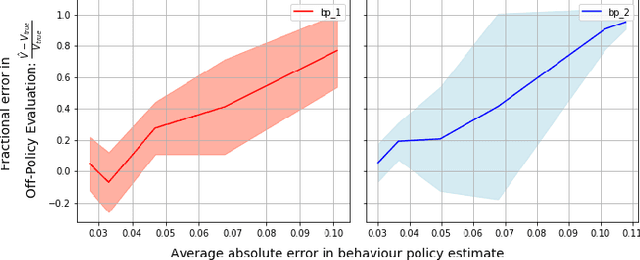

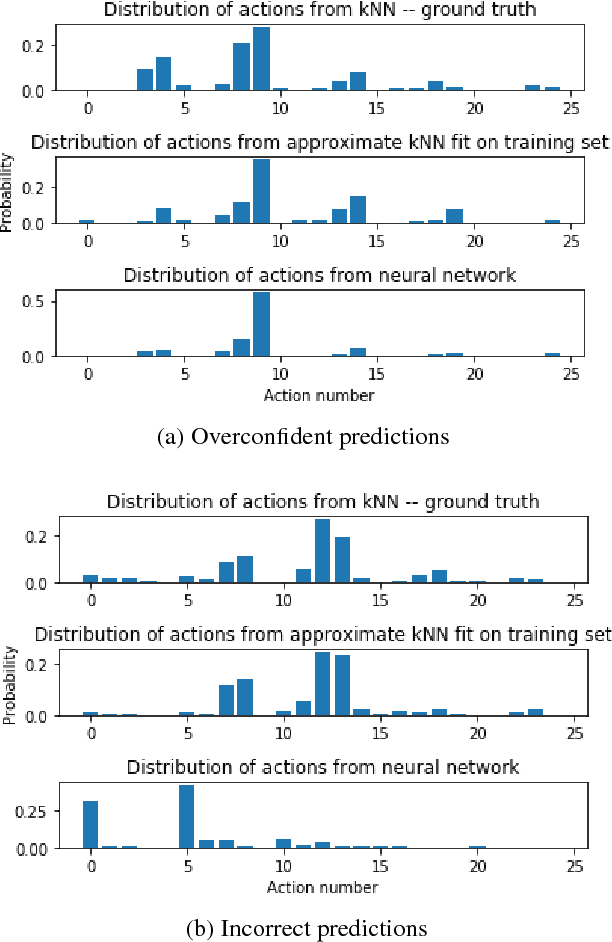

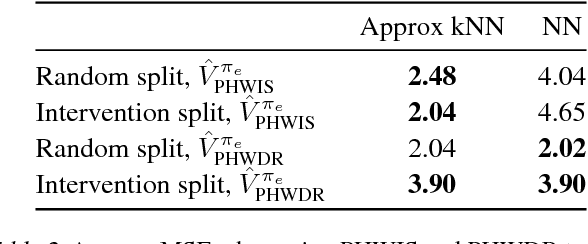

Behaviour Policy Estimation in Off-Policy Policy Evaluation: Calibration Matters

Jul 10, 2018

Abstract: In this work, we consider the problem of estimating a behaviour policy for use in Off-Policy Policy Evaluation (OPE) when the true behaviour policy is unknown. Via a series of empirical studies, we demonstrate how accurate OPE is strongly dependent on the calibration of estimated behaviour policy models: how precisely the behaviour policy is estimated from data. We show how powerful parametric models such as neural networks can result in highly uncalibrated behaviour policy models on a real-world medical dataset, and illustrate how a simple, non-parametric, k-nearest neighbours model produces better-calibrated behaviour policy estimates and can be used to obtain superior importance sampling-based OPE estimates.
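The two ingredients named in this abstract, a k-nearest-neighbours behaviour policy estimate and an importance sampling OPE estimate, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the Euclidean distance, the choice of `k`, the flat array layout (one row per logged step, ordered by time within each trajectory), and the `eps` clipping are all assumptions made for illustration.

```python
import numpy as np

def knn_behaviour_policy(states, actions, n_actions, k=5):
    """Hypothetical helper: estimate pi_b(a | s) at each logged state from the
    action frequencies among its k nearest logged states (Euclidean distance;
    a state counts as its own neighbour)."""
    states = np.asarray(states, dtype=float)
    actions = np.asarray(actions, dtype=int)
    # Pairwise squared distances between all logged states.
    diffs = states[:, None, :] - states[None, :, :]
    dist2 = (diffs ** 2).sum(axis=-1)
    neighbours = np.argsort(dist2, axis=1, kind="stable")[:, :k]
    probs = np.zeros((len(states), n_actions))
    for i, idx in enumerate(neighbours):
        counts = np.bincount(actions[idx], minlength=n_actions)
        probs[i] = counts / counts.sum()
    return probs

def importance_sampling_estimate(traj_ids, rewards, pi_e, pi_b, gamma=1.0, eps=1e-8):
    """Ordinary (per-trajectory) importance sampling estimate of the evaluation
    policy's value. pi_e and pi_b hold the probability each policy assigns to
    the logged action at each step."""
    traj_ids = np.asarray(traj_ids)
    rewards = np.asarray(rewards, dtype=float)
    pi_e = np.asarray(pi_e, dtype=float)
    pi_b = np.asarray(pi_b, dtype=float)
    values = []
    for tid in np.unique(traj_ids):
        mask = traj_ids == tid
        # Product of per-step probability ratios; eps guards against a zero estimate.
        ratio = np.prod(pi_e[mask] / np.maximum(pi_b[mask], eps))
        discounts = gamma ** np.arange(mask.sum())
        values.append(ratio * np.sum(discounts * rewards[mask]))
    return float(np.mean(values))
```

In this sketch, the probability of each logged action would be read off as `probs[np.arange(len(actions)), actions]` and passed in as `pi_b`. Because the ratios are multiplied along a trajectory, small errors in the estimated behaviour probabilities compound, which is one way to see why calibration matters for the final estimate.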

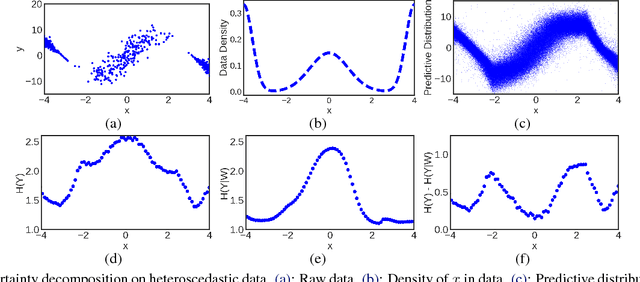

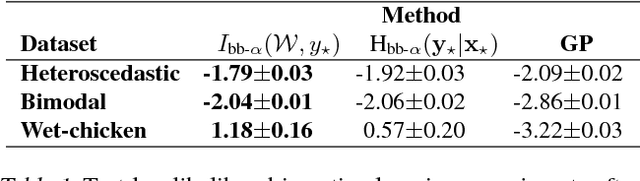

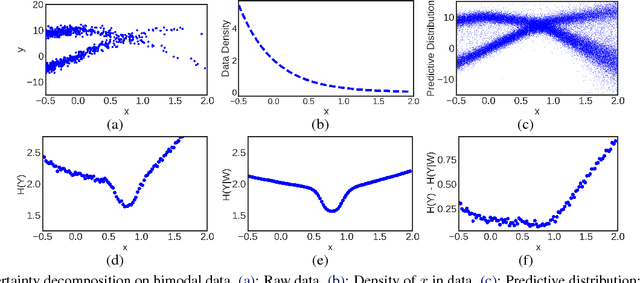

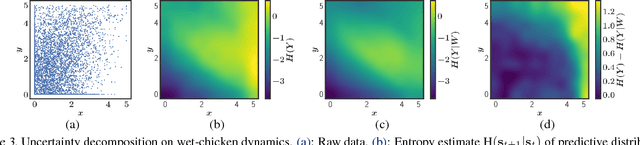

Decomposition of Uncertainty in Bayesian Deep Learning for Efficient and Risk-sensitive Learning

Jun 15, 2018

Abstract: Bayesian neural networks with latent variables are scalable and flexible probabilistic models: they account for uncertainty in the estimation of the network weights and, by making use of latent variables, can capture complex noise patterns in the data. We show how to extract and decompose uncertainty into epistemic and aleatoric components for decision-making purposes. This allows us to successfully identify informative points for active learning of functions with heteroscedastic and bimodal noise. Using the decomposition, we further define a novel risk-sensitive criterion for reinforcement learning to identify policies that balance expected cost, model bias and noise aversion.
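One standard way to split predictive uncertainty into epistemic and aleatoric parts is the law of total variance: the total variance equals the variance of the conditional mean across sampled weights (epistemic) plus the average conditional noise variance (aleatoric). The sketch below illustrates only that identity. The abstract does not give the paper's estimator, so the function name and the inputs (per-weight-sample predictive means and noise variances at a single input) are assumptions.

```python
import numpy as np

def decompose_variance(means, variances):
    """means[i] and variances[i] are the predictive mean and noise variance at
    one input under the i-th sampled set of network weights (hypothetical inputs)."""
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    epistemic = means.var()        # spread of the mean across weight samples
    aleatoric = variances.mean()   # average noise that remains once weights are fixed
    return epistemic, aleatoric, epistemic + aleatoric

# Two weight samples that disagree on the mean (0 vs 2) and on the noise level (1 vs 3).
epistemic, aleatoric, total = decompose_variance([0.0, 2.0], [1.0, 3.0])
```

Under this decomposition, collecting more data can shrink the epistemic term but not the aleatoric one, which is why the former is the natural signal for active learning.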

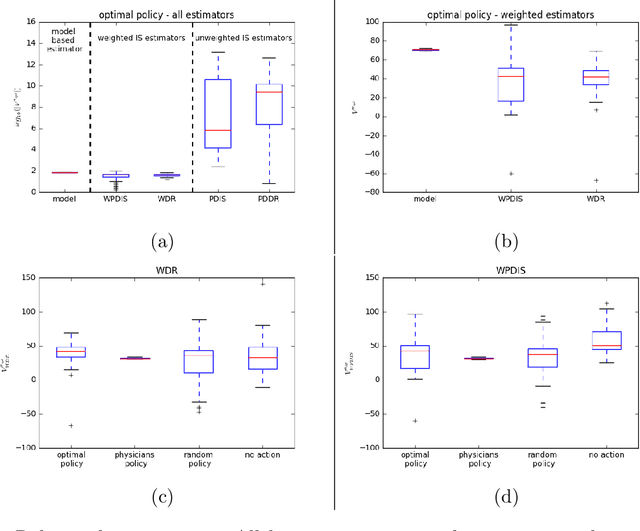

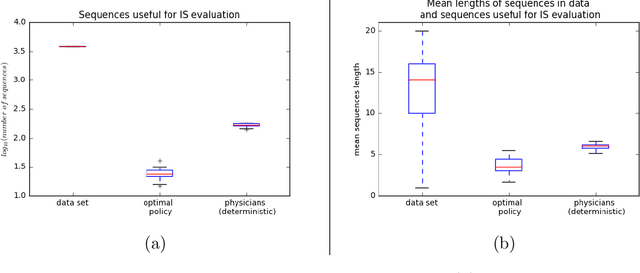

Evaluating Reinforcement Learning Algorithms in Observational Health Settings

May 31, 2018

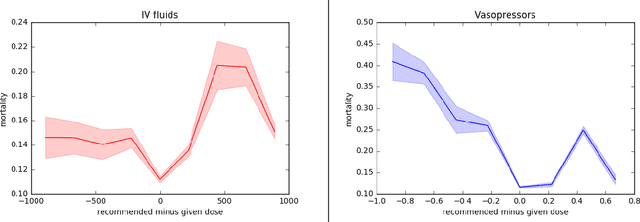

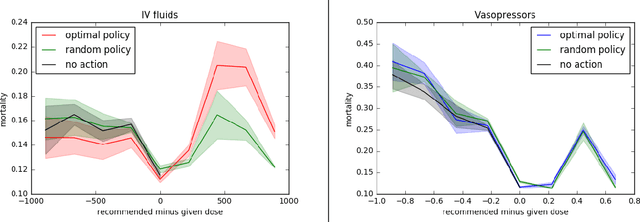

Abstract: Much attention has been devoted recently to the development of machine learning algorithms with the goal of improving treatment policies in healthcare. Reinforcement learning (RL) is a sub-field within machine learning that is concerned with learning how to make sequences of decisions so as to optimize long-term effects. Already, RL algorithms have been proposed to identify decision-making strategies for mechanical ventilation, sepsis management and treatment of schizophrenia. However, before implementing treatment policies learned by black-box algorithms in high-stakes clinical decision problems, special care must be taken in the evaluation of these policies. In this document, our goal is to expose some of the subtleties associated with evaluating RL algorithms in healthcare. We aim to provide a conceptual starting point for clinical and computational researchers to ask the right questions when designing and evaluating algorithms for new ways of treating patients. In the following, we describe how choices about how to summarize a history, variance of statistical estimators, and confounders in more ad-hoc measures can result in unreliable, even misleading estimates of the quality of a treatment policy. We also provide suggestions for mitigating these effects: while there is much promise for mining observational health data to uncover better treatment policies, evaluation must be performed thoughtfully.

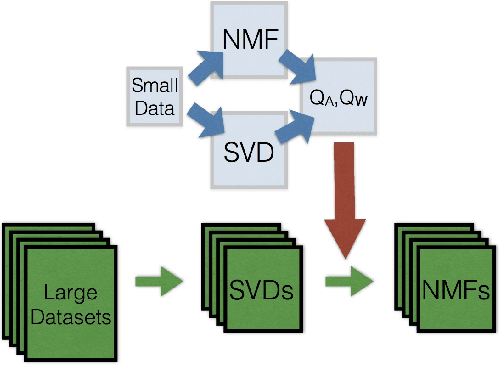

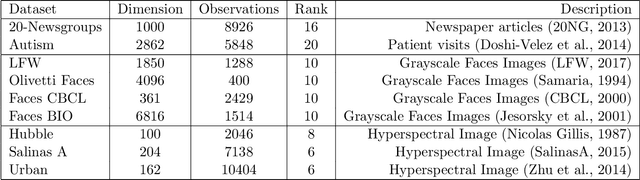

A particle-based variational approach to Bayesian Non-negative Matrix Factorization

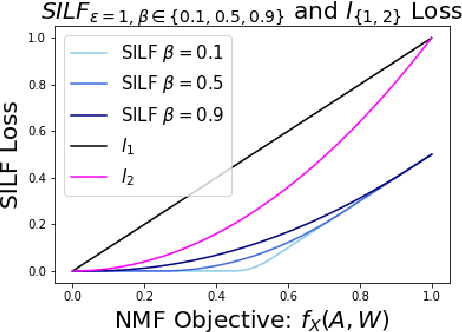

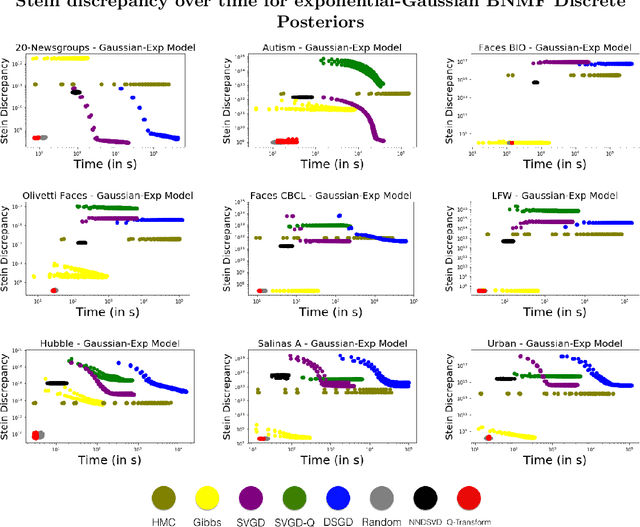

Mar 16, 2018

Abstract: Bayesian Non-negative Matrix Factorization (NMF) is a promising approach for understanding uncertainty and structure in matrix data. However, a large volume of applied work optimizes traditional non-Bayesian NMF objectives that fail to provide a principled understanding of the non-identifiability inherent in NMF, an issue ideally addressed by a Bayesian approach. Despite their suitability, current Bayesian NMF approaches have failed to gain popularity in an applied setting; they sacrifice flexibility in modeling for tractable computation, tend to get stuck in local modes, and require many thousands of samples for meaningful uncertainty estimates. We address these issues through a particle-based variational approach to Bayesian NMF that only requires the joint likelihood to be differentiable for tractability, uses a novel initialization technique to identify multiple modes in the posterior, and allows domain experts to inspect a 'small' set of factorizations that faithfully represent the posterior. We introduce and employ a class of likelihood and prior distributions for NMF that formulate a Bayesian model using popular non-Bayesian NMF objectives. On several real datasets, we obtain better particle approximations to the Bayesian NMF posterior in less time than baselines and demonstrate the significant role that multimodality plays in NMF-related tasks.
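The non-identifiability mentioned in this abstract can be seen in its simplest form with a few lines of NumPy: rescaling or permuting the latent factors yields a different non-negative factorization of the same matrix. This is only an illustration of that general fact, not the paper's method, and the helper names and the toy matrix sizes are assumptions; the multimodality the paper studies may go beyond these trivial symmetries.

```python
import numpy as np

def rescale_factors(W, H, scales):
    """Return (W D, D^{-1} H) for a positive diagonal D built from `scales`.
    The product is unchanged and both factors stay non-negative."""
    D = np.diag(scales)
    return W @ D, np.linalg.inv(D) @ H

def permute_factors(W, H, order):
    """Reorder the latent factors: columns of W and rows of H together."""
    return W[:, order], H[order, :]

rng = np.random.default_rng(0)
W = rng.random((4, 2))   # non-negative basis (toy size)
H = rng.random((2, 5))   # non-negative weights (toy size)
X = W @ H

W_scaled, H_scaled = rescale_factors(W, H, [2.0, 0.5])
W_perm, H_perm = permute_factors(W, H, [1, 0])
```

All three pairs reproduce `X` exactly, so an objective that scores only the reconstruction cannot prefer one over another.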
