José Miguel Hernández-Lobato

Stochastic Interpolants in Hilbert Spaces

Feb 02, 2026

Abstract: Although diffusion models have been successfully extended to function-valued data, stochastic interpolants -- which offer a flexible way to bridge arbitrary distributions -- remain limited to finite-dimensional settings. This work closes this gap by establishing a rigorous framework for stochastic interpolants in infinite-dimensional Hilbert spaces. We provide comprehensive theoretical foundations, including proofs of well-posedness and explicit error bounds. We demonstrate the effectiveness of the proposed framework for conditional generation, focusing particularly on complex PDE-based benchmarks. By enabling generative bridges between arbitrary functional distributions, our approach achieves state-of-the-art results, offering a powerful, general-purpose tool for scientific discovery.

A Diffusive Classification Loss for Learning Energy-based Generative Models

Jan 28, 2026

Abstract: Score-based generative models have recently achieved remarkable success. While they are usually parameterized by the score, an alternative is to use a series of time-dependent energy-based models (EBMs), where the score is obtained from the negative input-gradient of the energy. Crucially, EBMs can be leveraged not only for generation, but also for tasks such as compositional sampling or building Boltzmann Generators via Monte Carlo methods. However, training EBMs remains challenging. Direct maximum likelihood is computationally prohibitive due to the need for nested sampling, while score matching, though efficient, suffers from mode blindness. To address these issues, we introduce the Diffusive Classification (DiffCLF) objective, a simple method that avoids mode blindness while remaining computationally efficient. DiffCLF reframes EBM learning as a supervised classification problem across noise levels, and can be seamlessly combined with standard score-based objectives. We validate the effectiveness of DiffCLF by comparing the estimated energies against ground truth in analytical Gaussian mixture cases, and by applying the trained models to tasks such as model composition and Boltzmann Generator sampling. Our results show that DiffCLF enables EBMs with higher fidelity and broader applicability than existing approaches.
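As a brief aside on notation, the relation between a time-dependent energy and its score mentioned in this abstract can be written out as follows. The symbols $E_\theta$, $p_\theta$, $s_\theta$, and $Z_\theta$ are assumed here for illustration and are not taken from the abstract.

$$
p_\theta(x, t) = \frac{\exp\bigl(-E_\theta(x, t)\bigr)}{Z_\theta(t)},
\qquad
s_\theta(x, t) = \nabla_x \log p_\theta(x, t) = -\nabla_x E_\theta(x, t).
$$

The normalizer $Z_\theta(t)$ does not depend on $x$, so it drops out of the score.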

Expanding the Chaos: Neural Operator for Stochastic (Partial) Differential Equations

Jan 03, 2026

Abstract: Stochastic differential equations (SDEs) and stochastic partial differential equations (SPDEs) are fundamental tools for modeling stochastic dynamics across the natural sciences and modern machine learning. Developing deep learning models for approximating their solution operators promises not only fast, practical solvers, but may also inspire models that resolve classical learning tasks from a new perspective. In this work, we build on classical Wiener chaos expansions (WCE) to design neural operator (NO) architectures for SPDEs and SDEs: we project the driving noise paths onto orthonormal Wick Hermite features and parameterize the resulting deterministic chaos coefficients with neural operators, so that full solution trajectories can be reconstructed from noise in a single forward pass. On the theoretical side, we investigate the classical WCE results for the class of multi-dimensional SDEs and semilinear SPDEs considered here by explicitly writing down the associated coupled ODE/PDE systems for their chaos coefficients, which makes the separation between stochastic forcing and deterministic dynamics fully explicit and directly motivates our model designs. On the empirical side, we validate our models on a diverse suite of problems: classical SPDE benchmarks, diffusion one-step sampling on images, topological interpolation on graphs, financial extrapolation, parameter estimation, and manifold SDEs for flood prediction, demonstrating competitive accuracy and broad applicability. Overall, our results indicate that WCE-based neural operators provide a practical and scalable way to learn SDE/SPDE solution operators across diverse domains.

Scalable Gaussian Processes with Latent Kronecker Structure

Jun 07, 2025

Abstract: Applying Gaussian processes (GPs) to very large datasets remains a challenge due to limited computational scalability. Matrix structures, such as the Kronecker product, can accelerate operations significantly, but their application commonly entails approximations or unrealistic assumptions. In particular, the most common path to creating a Kronecker-structured kernel matrix is by evaluating a product kernel on gridded inputs that can be expressed as a Cartesian product. However, this structure is lost if any observation is missing, breaking the Cartesian product structure, which frequently occurs in real-world data such as time series. To address this limitation, we propose leveraging latent Kronecker structure, by expressing the kernel matrix of observed values as the projection of a latent Kronecker product. In combination with iterative linear system solvers and pathwise conditioning, our method facilitates inference of exact GPs while requiring substantially fewer computational resources than standard iterative methods. We demonstrate that our method outperforms state-of-the-art sparse and variational GPs on real-world datasets with up to five million examples, including robotics, automated machine learning, and climate applications.
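To make the idea of "the projection of a latent Kronecker product" concrete, here is a minimal NumPy sketch of a matrix-vector product with the observed kernel matrix that never forms the full Kronecker product. It is an illustration under stated assumptions, not the paper's implementation: the function names (`latent_kronecker_matvec`, `rbf`), the RBF kernel, the grid sizes, and the boolean `observed` mask are all hypothetical.

```python
import numpy as np

def latent_kronecker_matvec(K1, K2, observed, v_obs):
    """Compute (P (K1 ⊗ K2) P^T) v_obs without building K1 ⊗ K2.

    `observed` is a boolean mask of length n1 * n2 (row-major over the grid)
    marking which grid points were actually observed.
    """
    n1, n2 = K1.shape[0], K2.shape[0]
    full = np.zeros(n1 * n2)
    full[observed] = v_obs            # P^T v: zero-pad to the full grid
    V = full.reshape(n1, n2)
    out = K1 @ V @ K2.T               # equals (K1 ⊗ K2) vec(V) for row-major vec
    return out.ravel()[observed]      # P: keep only the observed entries

def rbf(x, lengthscale=0.5):
    # Hypothetical squared-exponential kernel, used only for the demo.
    d = x[:, None] - x[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

rng = np.random.default_rng(0)
K1 = rbf(np.linspace(0.0, 1.0, 6))    # e.g. a kernel over 6 time steps
K2 = rbf(np.linspace(0.0, 1.0, 4))    # e.g. a kernel over 4 series
observed = rng.random(6 * 4) > 0.3    # some grid points are missing
v_obs = rng.standard_normal(observed.sum())

fast = latent_kronecker_matvec(K1, K2, observed, v_obs)
# Dense reference, feasible only for tiny grids.
dense = np.kron(K1, K2)[observed][:, observed] @ v_obs
```

A product of this kind is the basic operation an iterative linear solver such as conjugate gradients needs, which is why the structure remains useful even when observations are missing.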

RNE: a plug-and-play framework for diffusion density estimation and inference-time control

Jun 06, 2025

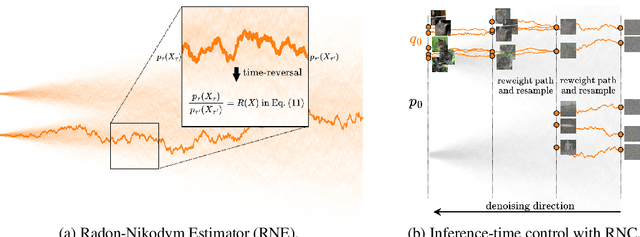

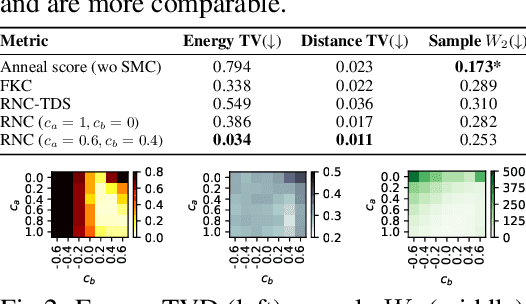

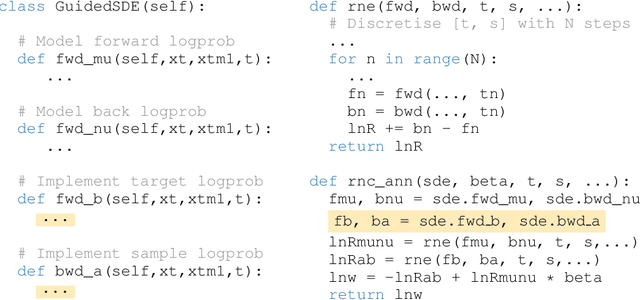

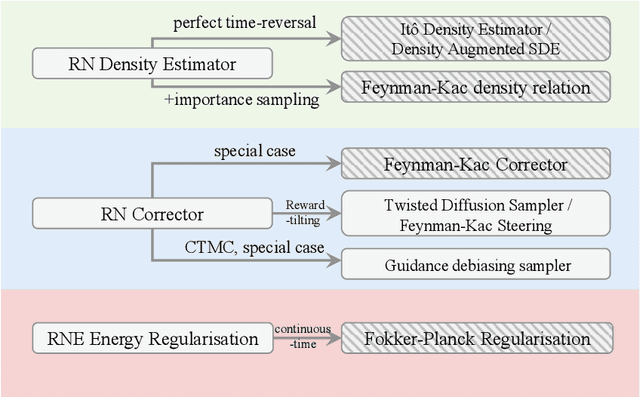

Abstract: In this paper, we introduce the Radon-Nikodym Estimator (RNE), a flexible, plug-and-play framework for diffusion inference-time density estimation and control, based on the concept of the density ratio between path distributions. RNE connects and unifies a variety of existing density estimation and inference-time control methods under a single, intuitive perspective that stems from basic variational inference and probabilistic principles, offering both theoretical clarity and practical versatility. Experiments demonstrate that RNE achieves promising performance in diffusion density estimation and inference-time control tasks, including annealing, composition of diffusion models, and reward-tilting.

Aligning Multimodal Representations through an Information Bottleneck

Jun 05, 2025

Abstract: Contrastive losses have been extensively used as a tool for multimodal representation learning. However, it has been empirically observed that they are not effective for learning an aligned representation space. In this paper, we argue that this phenomenon is caused by the presence of modality-specific information in the representation space. Although some of the most widely used contrastive losses maximize the mutual information between representations of both modalities, they are not designed to remove the modality-specific information. We give a theoretical description of this problem through the lens of the Information Bottleneck Principle. We also empirically analyze how different hyperparameters affect the emergence of this phenomenon in a controlled experimental setup. Finally, we propose a regularization term in the loss function that is derived by means of a variational approximation and aims to increase the representational alignment. We analyze the advantages of including this regularization term in a set of controlled experiments and real-world applications.

Progressive Tempering Sampler with Diffusion

Jun 05, 2025

Abstract: Recent research has focused on designing neural samplers that amortize the process of sampling from unnormalized densities. However, despite significant advancements, they still fall short of the state-of-the-art MCMC approach, Parallel Tempering (PT), when it comes to the efficiency of target evaluations. On the other hand, unlike a well-trained neural sampler, PT yields only dependent samples and needs to be rerun -- at considerable computational cost -- whenever new samples are required. To address these weaknesses, we propose the Progressive Tempering Sampler with Diffusion (PTSD), which trains diffusion models sequentially across temperatures, leveraging the advantages of PT to improve the training of neural samplers. We also introduce a novel method to combine high-temperature diffusion models to generate approximate lower-temperature samples, which are minimally refined using MCMC and used to train the next diffusion model. PTSD enables efficient reuse of sample information across temperature levels while generating well-mixed, uncorrelated samples. Our method significantly improves target evaluation efficiency, outperforming diffusion-based neural samplers.

There Was Never a Bottleneck in Concept Bottleneck Models

Jun 05, 2025

Abstract: Deep learning representations are often difficult to interpret, which can hinder their deployment in sensitive applications. Concept Bottleneck Models (CBMs) have emerged as a promising approach to mitigate this issue by learning representations that support target task performance while ensuring that each component predicts a concrete concept from a predefined set. In this work, we argue that CBMs do not impose a true bottleneck: the fact that a component can predict a concept does not guarantee that it encodes only information about that concept. This shortcoming raises concerns regarding interpretability and the validity of intervention procedures. To overcome this limitation, we propose Minimal Concept Bottleneck Models (MCBMs), which incorporate an Information Bottleneck (IB) objective to constrain each representation component to retain only the information relevant to its corresponding concept. This IB is implemented via a variational regularization term added to the training loss. As a result, MCBMs support concept-level interventions with theoretical guarantees, remain consistent with Bayesian principles, and offer greater flexibility in key design choices.

Efficient and Unbiased Sampling from Boltzmann Distributions via Variance-Tuned Diffusion Models

May 27, 2025

Abstract: Score-based diffusion models (SBDMs) are powerful amortized samplers for Boltzmann distributions; however, imperfect score estimates bias downstream Monte Carlo estimates. Classical importance sampling (IS) can correct this bias, but computing exact likelihoods requires solving the probability-flow ordinary differential equation (PF-ODE), a procedure that is prohibitively costly and scales poorly with dimensionality. We introduce Variance-Tuned Diffusion Importance Sampling (VT-DIS), a lightweight post-training method that adapts the per-step noise covariance of a pretrained SBDM by minimizing the $\alpha$-divergence ($\alpha=2$) between its forward diffusion and reverse denoising trajectories. VT-DIS assigns a single trajectory-wise importance weight to the joint forward-reverse process, yielding unbiased expectation estimates at test time with negligible overhead compared to standard sampling. On the DW-4, LJ-13, and alanine-dipeptide benchmarks, VT-DIS achieves effective sample sizes of approximately 80%, 35%, and 3.5%, respectively, while using only a fraction of the computational budget required by vanilla diffusion + IS or PF-ODE-based IS.
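For readers unfamiliar with the effective sample size (ESS) percentages quoted above, the following sketch shows the standard ESS diagnostic computed from log importance weights, together with a self-normalized importance-sampling estimate. This is a generic illustration and not the VT-DIS procedure; the function names and the toy weights are assumptions introduced here.

```python
import numpy as np

def normalized_weights(log_w):
    """Turn log importance weights into weights that sum to one (stably)."""
    log_w = np.asarray(log_w, dtype=float)
    w = np.exp(log_w - log_w.max())   # subtract the max to avoid overflow
    return w / w.sum()

def ess_fraction(log_w):
    """ESS / N, where ESS = (sum w)^2 / sum w^2 for unnormalized weights w."""
    w = normalized_weights(log_w)
    return 1.0 / (len(w) * np.sum(w ** 2))

def snis_estimate(values, log_w):
    """Self-normalized importance-sampling estimate of an expectation."""
    return float(np.sum(normalized_weights(log_w) * np.asarray(values, dtype=float)))

# Equal weights: every sample counts fully.
uniform = ess_fraction(np.zeros(1000))
# One dominant weight: effectively a single sample out of 1000.
degenerate = ess_fraction(np.array([0.0] + [-1e3] * 999))
```

An ESS fraction near 1 means the proposal closely matches the target, while a value near 1/N means a single sample dominates the estimate.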

FEAT: Free energy Estimators with Adaptive Transport

Apr 15, 2025

Abstract: We present Free energy Estimators with Adaptive Transport (FEAT), a novel framework for free energy estimation -- a critical challenge across scientific domains. FEAT leverages learned transports implemented via stochastic interpolants and provides consistent, minimum-variance estimators based on the escorted Jarzynski equality and the controlled Crooks theorem, alongside variational upper and lower bounds on free energy differences. Unifying equilibrium and non-equilibrium methods under a single theoretical framework, FEAT establishes a principled foundation for neural free energy calculations. Experimental validation on toy examples, molecular simulations, and quantum field theory demonstrates improvements over existing learning-based methods.
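As background for the estimators named above, here is a minimal sketch of the plain (non-escorted) Jarzynski estimator, $\Delta F = -\beta^{-1} \log \mathbb{E}[e^{-\beta W}]$, computed from sampled work values. It is not the escorted variant or FEAT itself; the function name `jarzynski_free_energy`, the inverse temperature `beta`, and the synthetic work samples are assumptions made for illustration.

```python
import numpy as np

def jarzynski_free_energy(work, beta=1.0):
    """Estimate Delta F = -(1/beta) * log mean(exp(-beta * W)) stably."""
    a = -beta * np.asarray(work, dtype=float)
    m = a.max()                                   # log-sum-exp shift
    log_mean_exp = m + np.log(np.mean(np.exp(a - m)))
    return -log_mean_exp / beta

# If every trajectory does the same work, the estimate equals that work.
const = jarzynski_free_energy(np.full(100, 2.5))

# Synthetic (hypothetical) work samples; by Jensen's inequality the
# estimate never exceeds the mean work.
rng = np.random.default_rng(0)
work = rng.normal(loc=3.0, scale=1.0, size=10_000)
estimate = jarzynski_free_energy(work)
mean_work = work.mean()
```

The gap between `mean_work` and `estimate` reflects dissipated work. Because the exponential average is dominated by rare low-work trajectories, the plain estimator can have high variance, which is the kind of issue variance-reduced estimators aim to address.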
